Marco Carraro

Real-time Tracking-by-Detection of Human Motion in RGB-D Camera Networks

Jul 28, 2019

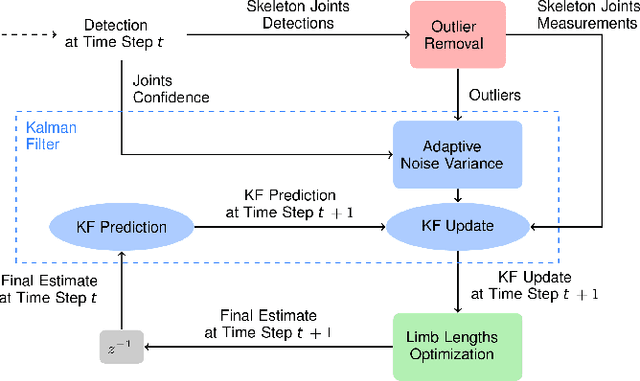

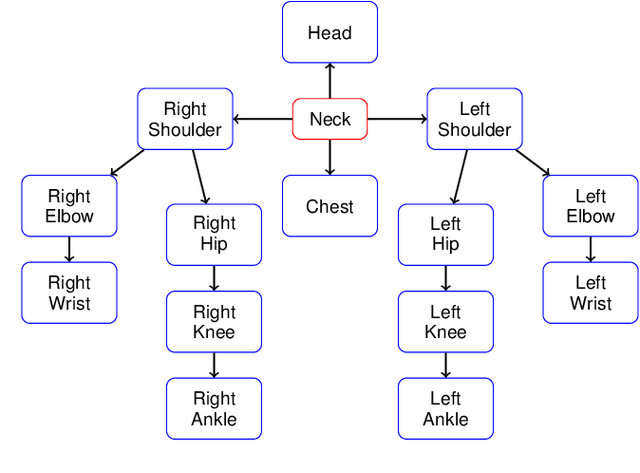

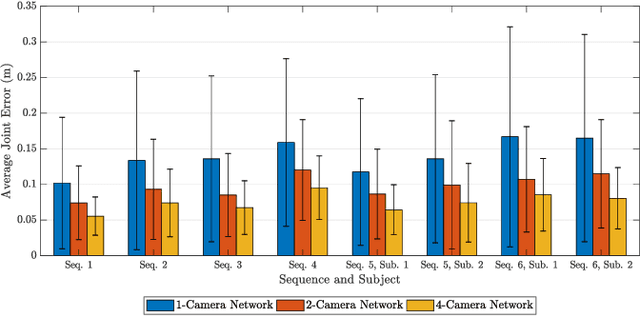

Abstract: This paper presents a novel real-time tracking system capable of improving body pose estimation algorithms in distributed camera networks. The first stage of our approach introduces a linear Kalman filter operating at the body-joint level, used to fuse single-view body poses coming from different detection nodes of the network and to ensure temporal consistency between them. The second stage refines the Kalman filter estimates by fitting a hierarchical model of the human body with constrained link sizes, in order to ensure the physical consistency of the tracking. The effectiveness of the proposed approach is demonstrated through a broad experimental validation, performed on a set of sequences whose ground truth references are generated by a commercial marker-based motion capture system. The results show that the proposed system outperforms the considered state-of-the-art approaches, providing accurate and reliable estimates. Moreover, the developed methodology constrains neither the number of persons to track, nor the number, position, synchronization, frame rate, and manufacturer of the RGB-D cameras used. Finally, the real-time performance of the system is of paramount importance for a large number of real-world applications.
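The first stage described in the abstract can be illustrated with a small sketch of a linear Kalman filter tracking a single body joint. The abstract does not specify the state or noise models, so the constant-velocity state, the white-noise acceleration process model, the class name `JointKalmanFilter`, and the parameters `process_var` and `measurement_var` are all assumptions made for illustration, not the authors' implementation.

```python
import numpy as np


class JointKalmanFilter:
    """Linear Kalman filter for one 3D joint (hypothetical sketch).

    Assumed state: [x, y, z, vx, vy, vz] with a constant-velocity model.
    """

    def __init__(self, initial_position, process_var=1.0, measurement_var=0.01):
        self.x = np.zeros(6)
        self.x[:3] = initial_position
        self.P = np.eye(6)                      # state covariance
        self.q = process_var                    # assumed acceleration noise
        self.R = measurement_var * np.eye(3)    # assumed measurement noise
        # Only the position part of the state is observed.
        self.H = np.hstack([np.eye(3), np.zeros((3, 3))])

    def predict(self, dt):
        """Propagate the state by dt seconds (dt may vary between calls)."""
        I3 = np.eye(3)
        F = np.eye(6)
        F[:3, 3:] = dt * I3
        # Discrete white-noise acceleration process covariance.
        Q = self.q * np.block([
            [dt**4 / 4 * I3, dt**3 / 2 * I3],
            [dt**3 / 2 * I3, dt**2 * I3],
        ])
        self.x = F @ self.x
        self.P = F @ self.P @ F.T + Q

    def update(self, z):
        """Fuse one 3D joint measurement from any camera of the network."""
        y = np.asarray(z, dtype=float) - self.H @ self.x   # innovation
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)            # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(6) - K @ self.H) @ self.P


# Usage: detections of the same joint arrive from different cameras;
# each one triggers a predict step (elapsed time) followed by an update.
kf = JointKalmanFilter(initial_position=[0.0, 0.0, 0.0])
for _ in range(50):
    kf.predict(dt=0.03)
    kf.update([1.0, 2.0, 3.0])
```

Because the prediction step takes the elapsed time as an argument, measurements from unsynchronized cameras with different frame rates can be fused in arrival order, which matches the abstract's claim that synchronization and frame rate are not constrained.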

RUR53: an Unmanned Ground Vehicle for Navigation, Recognition and Manipulation

Nov 23, 2017

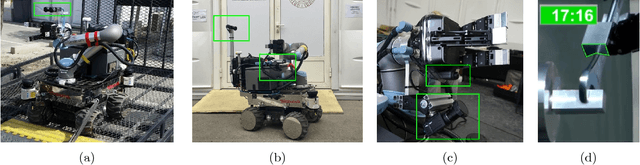

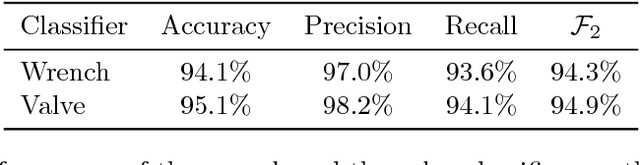

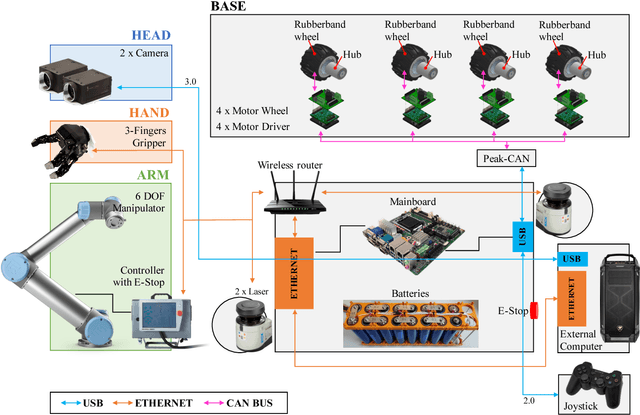

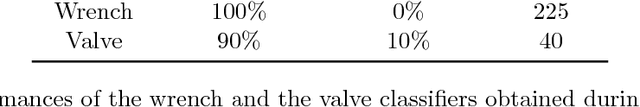

Abstract: This paper describes RUR53, the unmanned mobile manipulator robot developed by the Desert Lion team of the University of Padova (Italy), and its experience in Challenge 2 and the Grand Challenge of the first Mohamed Bin Zayed International Robotics Challenge (Abu Dhabi, March 2017). According to the competition requirements, the robot is able to freely navigate inside an outdoor arena, locate and reach a panel, recognize and manipulate a wrench, and use this wrench to physically operate a valve stem on the panel itself. RUR53 is able to perform these tasks both autonomously and in teleoperation mode. The paper details the adopted hardware and software architectures, focusing on their key aspects: modularity, generality, and the ability to exploit sensor feedback. These features let the team rank third in the Grand Challenge in collaboration with the Czech Technical University in Prague, Czech Republic, the University of Pennsylvania, USA, and the University of Lincoln, UK. Tests performed both in the Challenge arena and in the lab are presented and discussed, focusing on the strengths and limitations of the proposed wrench and valve classification and recognition algorithms. Lessons learned are also detailed.

Real-time marker-less multi-person 3D pose estimation in RGB-Depth camera networks

Oct 17, 2017

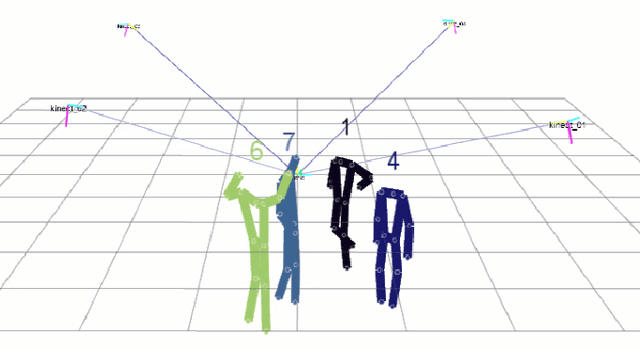

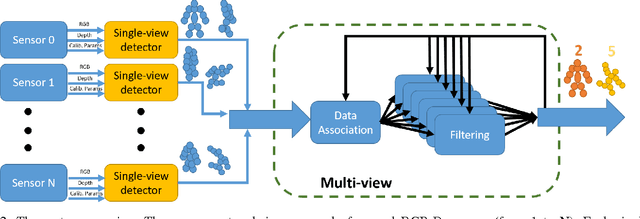

Abstract: This paper proposes a novel system to estimate and track the 3D poses of multiple persons in calibrated RGB-Depth camera networks. The multi-view 3D pose of each person is computed by a central node which receives the single-view outcomes from each camera of the network. Each single-view outcome is computed by using a CNN for 2D pose estimation and extending the resulting skeletons to 3D by means of the sensor depth. The proposed system is marker-less, multi-person, independent of the background, and does not make any assumption about people's appearance or initial pose. The system provides real-time outcomes, making it well suited for applications requiring user interaction. Experimental results show the effectiveness of this work with respect to a baseline multi-view approach in different scenarios. To foster research and applications based on this work, we released the source code in OpenPTrack, an open source project for RGB-D people tracking.
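The step of extending 2D skeletons to 3D by means of the sensor depth can be sketched as a back-projection of each keypoint through a pinhole camera model. The abstract does not describe how depth is sampled, so the function name `backproject_keypoints`, the single-pixel depth lookup, and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions for illustration; the released OpenPTrack code may handle this differently.

```python
import numpy as np


def backproject_keypoints(keypoints_px, depth_m, fx, fy, cx, cy):
    """Lift 2D keypoints (u, v) to 3D camera coordinates using a depth image.

    keypoints_px: (N, 2) array of pixel coordinates (u = column, v = row).
    depth_m:      (H, W) depth image in meters, registered to the RGB image.
    Returns an (N, 3) array; joints without valid depth are left as NaN.
    """
    keypoints_px = np.asarray(keypoints_px, dtype=float)
    points = np.full((len(keypoints_px), 3), np.nan)
    height, width = depth_m.shape
    for i, (u, v) in enumerate(keypoints_px):
        col, row = int(round(u)), int(round(v))
        if not (0 <= row < height and 0 <= col < width):
            continue  # keypoint falls outside the image
        z = depth_m[row, col]
        if not np.isfinite(z) or z <= 0:
            continue  # missing or invalid depth reading
        # Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy
        points[i] = [(u - cx) * z / fx, (v - cy) * z / fy, z]
    return points


# Usage with a synthetic flat depth image 2 m from the camera.
depth = np.full((480, 640), 2.0)
depth[0, 0] = 0.0  # simulate a pixel with no depth reading
keypoints = np.array([[320.0, 240.0], [420.0, 240.0], [0.0, 0.0]])
joints_3d = backproject_keypoints(keypoints, depth, fx=500.0, fy=500.0,
                                  cx=320.0, cy=240.0)
```

Leaving invalid joints as NaN keeps the output aligned with the 2D skeleton, so a downstream fusion node can simply skip the joints a given camera could not measure.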

Fast and Robust Detection of Fallen People from a Mobile Robot

Mar 09, 2017

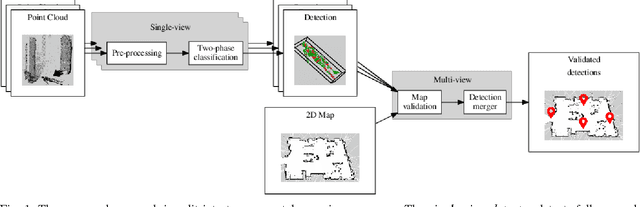

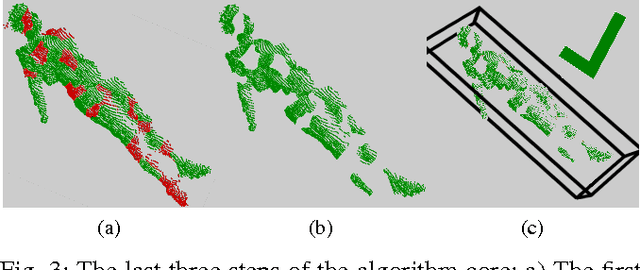

Abstract: This paper deals with the problem of detecting fallen people lying on the floor by means of a mobile robot equipped with a 3D depth sensor. In the proposed algorithm, inspired by semantic segmentation techniques, the 3D scene is over-segmented into small patches. Fallen people are then detected by means of two SVM classifiers: the first one labels each patch, while the second one captures the spatial relations between them. This novel approach proved to be robust and fast. Indeed, thanks to the use of small patches, fallen people in real cluttered scenes with objects side by side are correctly detected. Moreover, the algorithm can be executed on a mobile robot fitted with a standard laptop, making it possible to exploit the 2D environmental map built by the robot and the multiple points of view obtained during the robot navigation. Additionally, this algorithm is robust to illumination changes since it relies on depth data rather than RGB data. All the methods have been thoroughly validated on the IASLAB-RGBD Fallen Person Dataset, which is published online as a further contribution. It consists of several static and dynamic sequences with 15 different people and 2 different environments.
