Marc Antonini

Implicit Neural Multiple Description for DNA-based data storage

Sep 13, 2023

Abstract: DNA exhibits remarkable potential as a data storage solution due to its impressive storage density and long-term stability, stemming from its inherent biomolecular structure. However, developing this novel medium comes with its own set of challenges, particularly in addressing errors arising from storage and biological manipulations. These challenges are further conditioned by the structural constraints of DNA sequences and cost considerations. In response to these limitations, we have pioneered a novel compression scheme and a cutting-edge Multiple Description Coding (MDC) technique utilizing neural networks for DNA data storage. Our MDC method introduces an innovative approach to encoding data into DNA, specifically designed to withstand errors effectively. Notably, our new compression scheme outperforms classic image compression methods for DNA data storage. Furthermore, our approach exhibits superiority over conventional MDC methods reliant on auto-encoders. Its distinctive strengths lie in its ability to bypass the need for extensive model training and its enhanced adaptability for fine-tuning redundancy levels. Experimental results demonstrate that our solution competes favorably with the latest DNA data storage methods in the field, offering superior compression rates and robust noise resilience.

INR-MDSQC: Implicit Neural Representation Multiple Description Scalar Quantization for robust image Coding

Jun 24, 2023

Abstract: Multiple Description Coding (MDC) is an error-resilient source coding method designed for transmission over noisy channels. We present a novel MDC scheme employing a neural network based on implicit neural representation. This involves overfitting the neural representation for images. Each description is transmitted along with model parameters and its respective latent spaces. Our method has advantages over traditional MDC that utilizes auto-encoders, such as eliminating the need for model training and offering high flexibility in redundancy adjustment. Experiments demonstrate that our solution is competitive with autoencoder-based MDC and classic MDC based on HEVC, delivering superior visual quality.

MQ-Coder inspired arithmetic coder for synthetic DNA data storage

Jun 22, 2023

Abstract: Over the past years, the ever-growing demand for data storage, more specifically for "cold" data (i.e. rarely accessed), has motivated research into alternative data storage systems. Because of their biochemical characteristics, synthetic DNA molecules are now considered serious candidates for this new kind of storage. This paper introduces a novel arithmetic coder for DNA data storage, and presents some results on a lossy JPEG 2000 based image compression method adapted for DNA data storage that uses this novel coder. The DNA coding algorithms presented here have been designed to efficiently compress images, encode them into a quaternary code, and finally store them into synthetic DNA molecules. This work also aims at making the compression models better fit the problems that we encounter when storing data into DNA, namely the fact that the DNA writing, storing and reading methods are error-prone processes. The main takeaway of this work is our arithmetic coder and its integration into a performant image codec.

Image storage on synthetic DNA using compressive autoencoders and DNA-adapted entropy coders

Jun 22, 2023

Abstract: Over the past years, the ever-growing demand for data storage, more specifically for "cold" data (rarely accessed data), has motivated research into alternative data storage systems. Because of their biochemical characteristics, synthetic DNA molecules are now considered serious candidates for this new kind of storage. This paper presents some results on lossy image compression methods based on convolutional autoencoders adapted to DNA data storage, with synthetic DNA-adapted entropy and fixed-length codes. The model architectures presented here have been designed to efficiently compress images, encode them into a quaternary code, and finally store them into synthetic DNA molecules. This work also aims at making the compression models better fit the problems that we encounter when storing data into DNA, namely the fact that the DNA writing, storing and reading methods are error-prone processes. The main takeaways of this kind of compressive autoencoder are our latent space quantization and the different DNA-adapted entropy coders used to encode the quantized latent space, which are an improvement over the fixed-length DNA-adapted coders that were previously used.

* arXiv admin note: substantial text overlap with arXiv:2203.09981

Rotating labeling of entropy coders for synthetic DNA data storage

Apr 02, 2023

Abstract: Over the past years, the ever-growing demand for data storage, more specifically for "cold" data (i.e. rarely accessed), has motivated research into alternative data storage systems. Because of their biochemical characteristics, synthetic DNA molecules are considered potential candidates for a new storage paradigm. Because of this trend, several coding solutions have been proposed over the past years for the storage of digital information into DNA. Despite being a promising solution, DNA storage faces two major obstacles: the large cost of synthesis and the noise introduced during sequencing. Additionally, this noise increases when biochemically defined coding constraints are not respected: avoiding homopolymers and repeated patterns, as well as balancing the GC content. This paper describes a novel entropy coder which can be embedded in any block-based image-coding scheme and aims to make the decoded results more robust. Our proposed solution introduces variability in the generated quaternary streams and reduces the number of homopolymers and repeated patterns, lowering the probability of errors occurring. In this paper, we integrate the proposed entropy coder into four existing JPEG-inspired DNA coders. We then evaluate the quality, in terms of biochemical constraints, of the encoded data for all the different methods.
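The biochemical constraints named in the abstract above (homopolymer runs and GC balance) can be checked on a candidate strand with a few lines of Python. This is only an illustrative sketch, not the paper's evaluation code: the function names and the thresholds (`max_run=3`, GC content between 40% and 60%) are assumptions chosen for the example, since the abstract does not state specific values.

```python
from itertools import groupby


def max_homopolymer_run(seq: str) -> int:
    """Length of the longest run of identical consecutive nucleotides."""
    return max((sum(1 for _ in group) for _, group in groupby(seq)), default=0)


def gc_content(seq: str) -> float:
    """Fraction of nucleotides in the strand that are G or C."""
    if not seq:
        return 0.0
    return sum(1 for base in seq if base in "GC") / len(seq)


def respects_constraints(seq: str, max_run: int = 3,
                         gc_min: float = 0.4, gc_max: float = 0.6) -> bool:
    """Check a strand against assumed (hypothetical) constraint thresholds."""
    return (max_homopolymer_run(seq) <= max_run
            and gc_min <= gc_content(seq) <= gc_max)


if __name__ == "__main__":
    for strand in ("ACGTACGT", "AAAAACGT"):
        print(strand, max_homopolymer_run(strand),
              gc_content(strand), respects_constraints(strand))
```

A check of this kind only measures the constraints; it does not by itself say how an entropy coder should avoid violating them.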

Multiple description video coding for real-time applications using HEVC

Mar 10, 2023

Abstract: Remote control vehicles require the transmission of large amounts of data, and video is one of the most important sources for the driver. To ensure reliable video transmission, the encoded video stream is transmitted simultaneously over multiple channels. However, this solution incurs a high transmission cost due to the wireless channel's unreliable and random bit loss characteristics. To address this issue, it is necessary to use more efficient video encoding methods that can make the video stream robust to noise. In this paper, we propose a low-complexity, low-latency 2-channel Multiple Description Coding (MDC) solution with an adaptive Instantaneous Decoder Refresh (IDR) frame period, which is compatible with the HEVC standard. This method shows better resistance to high packet loss rates with lower complexity.

Quantization for decentralized learning under subspace constraints

Sep 16, 2022

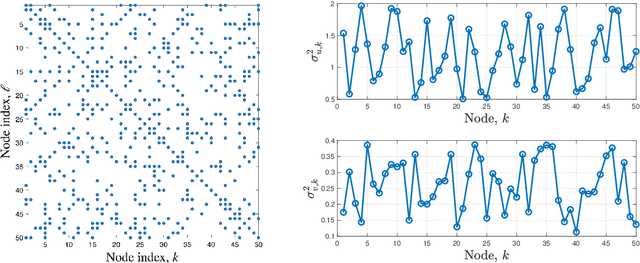

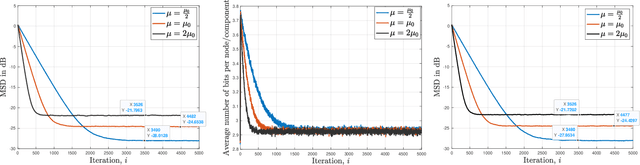

Abstract: In this paper, we consider decentralized optimization problems where agents have individual cost functions to minimize subject to subspace constraints that require the minimizers across the network to lie in low-dimensional subspaces. This constrained formulation includes consensus or single-task optimization as special cases, and allows for more general task relatedness models such as multitask smoothness and coupled optimization. In order to cope with communication constraints, we propose and study an adaptive decentralized strategy where the agents employ differential randomized quantizers to compress their estimates before communicating with their neighbors. The analysis shows that, under some general conditions on the quantization noise, and for sufficiently small step-sizes $\mu$, the strategy is stable both in terms of mean-square error and average bit rate: by reducing $\mu$, it is possible to keep the estimation errors small (on the order of $\mu$) without the bit rate increasing indefinitely as $\mu\rightarrow 0$. Simulations illustrate the theoretical findings and the effectiveness of the proposed approach, revealing that decentralized learning is achievable at the expense of only a few bits.
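To give a feel for what a randomized quantizer does, the sketch below implements stochastic rounding to a uniform grid, which is unbiased on average. It is an assumption made for illustration: the abstract does not specify which randomized quantizer the paper uses, and the names `randomized_quantize`, `differential_update`, and `step` are hypothetical. The differential part is sketched as quantizing only the change relative to the last reconstructed value.

```python
import math
import random


def randomized_quantize(x: float, step: float, rng: random.Random) -> float:
    """Stochastically round x to a multiple of `step` (unbiased in expectation)."""
    scaled = x / step
    low = math.floor(scaled)
    p_up = scaled - low  # probability of rounding up to the next grid point
    return step * (low + (1 if rng.random() < p_up else 0))


def differential_update(x_new: float, x_prev_rec: float,
                        step: float, rng: random.Random) -> float:
    """Quantize the difference to the previous reconstruction and add it back."""
    return x_prev_rec + randomized_quantize(x_new - x_prev_rec, step, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    samples = [randomized_quantize(0.3, 1.0, rng) for _ in range(20000)]
    # The empirical mean should be close to 0.3 even though each sample is 0 or 1.
    print(sum(samples) / len(samples))
```

Because each output is a grid point, it can be sent with few bits, while the averaging effect of the randomization keeps the expected value equal to the input.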

Learning sparse auto-encoders for green AI image coding

Sep 09, 2022

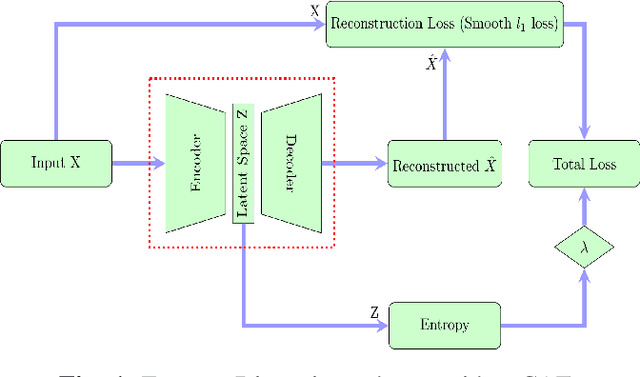

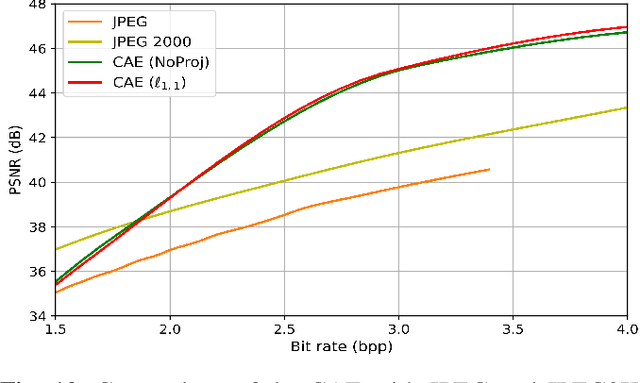

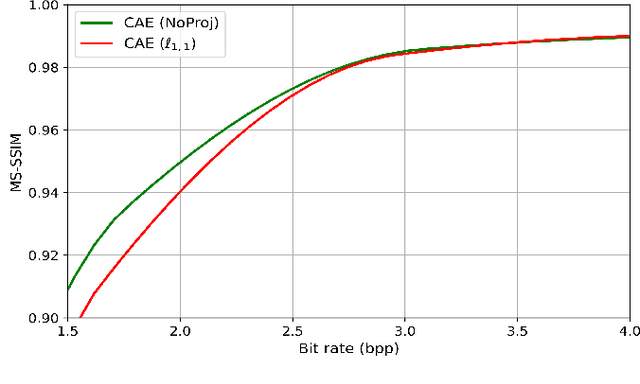

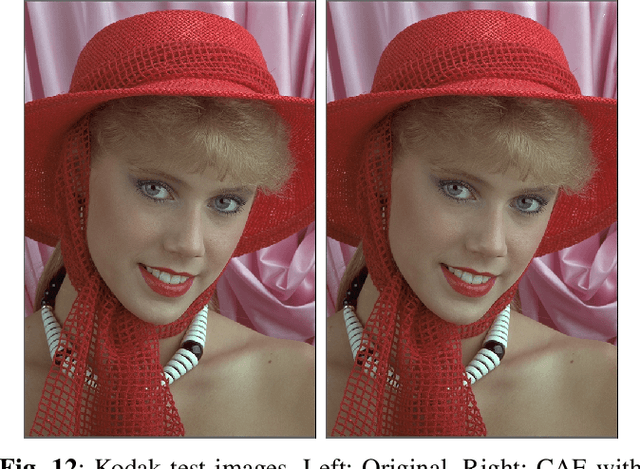

Abstract: Recently, convolutional auto-encoders (CAE) were introduced for image coding. They achieved performance improvements over the state-of-the-art JPEG2000 method. However, these results were obtained using massive CAEs featuring a large number of parameters and whose training required heavy computational power. In this paper, we address the problem of lossy image compression using a CAE with a small memory footprint and low computational power usage. In order to overcome the computational cost issue, the majority of the literature uses Lagrangian proximal regularization methods, which are time consuming themselves. In this work, we propose a constrained approach and a new structured sparse learning method. We design an algorithm and test it on three constraints: the classical $\ell_1$ constraint, the $\ell_{1,\infty}$ constraint and the new $\ell_{1,1}$ constraint. Experimental results show that the $\ell_{1,1}$ constraint provides the best structured sparsity, resulting in a high reduction of memory and computational cost, with rate-distortion performance similar to that of dense networks.

A constrained Shannon-Fano entropy coder for image storage in synthetic DNA

Mar 18, 2022

Abstract: The exponentially increasing demand for data storage has been facing more and more challenges during the past years. The energy costs that it represents are also increasing, and the availability of the storage hardware is not able to follow the storage demand's trend. The short lifespan of conventional storage media (10 to 20 years) forces the duplication of the hardware and worsens the situation. The majority of this storage demand concerns "cold" data, data very rarely accessed but that has to be kept for long periods of time. The coding abilities of synthetic DNA, and its long durability (several hundred years), make it a serious candidate as an alternative storage medium for "cold" data. In this paper, we propose a variable-length coding algorithm adapted to DNA data storage with improved performance. The proposed algorithm is based on a modified Shannon-Fano code that respects some biochemical constraints imposed by the synthesis chemistry. We have inserted this code in a JPEG compression algorithm adapted to DNA image storage and we highlighted an improvement of the compression ratio ranging from 0.5 up to 2 bits per nucleotide compared to the state-of-the-art solution, without affecting the reconstruction quality.

Image Storage on Synthetic DNA Using Autoencoders

Mar 18, 2022

Abstract: Over the past years, the ever-growing demand for data storage, more specifically for "cold" data (rarely accessed data), has motivated research into alternative data storage systems. Because of their biochemical characteristics, synthetic DNA molecules are now considered serious candidates for this new kind of storage. This paper presents some results on lossy image compression methods based on convolutional autoencoders adapted to DNA data storage. The model architectures presented here have been designed to efficiently compress images, encode them into a quaternary code, and finally store them into synthetic DNA molecules. This work also aims at making the compression models better fit the problems that we encounter when storing data into DNA, namely the fact that the DNA writing, storing and reading methods are error-prone processes. The main takeaways of this kind of compressive autoencoder are our quantization and the robustness to substitution errors thanks to the noise model that we use during training.
