Marco Carpentiero

Doubly Adaptive Social Learning

Apr 24, 2025

Abstract:In social learning, a network of agents assigns probability scores (beliefs) to some hypotheses of interest, which rule the generation of local streaming data observed by each agent. Belief formation takes place by means of an iterative two-step procedure where: i) the agents locally update their beliefs by using some likelihood model; and ii) the updated beliefs are combined with the beliefs of the neighboring agents, using a pooling rule. This procedure can fail to perform well in the presence of dynamic drifts, leading the agents to incorrect decision making. Here, we focus on the fully online setting where both the true hypothesis and the likelihood models can change over time. We propose the doubly adaptive social learning ($\text{A}^2\text{SL}$) strategy, which infuses social learning with the necessary adaptation capabilities. This goal is achieved by exploiting two adaptation stages: i) a stochastic gradient descent update to learn and track the drifts in the decision model; and ii) an adaptive belief update to track the true hypothesis changing over time. These stages are controlled by two adaptation parameters that govern the evolution of the error probability for each agent. We show that all agents learn consistently for sufficiently small adaptation parameters, in the sense that they ultimately place all their belief mass on the true hypothesis. In particular, the probability of choosing the wrong hypothesis converges to values on the order of the adaptation parameters. The theoretical analysis is illustrated both on synthetic data and by applying the $\text{A}^2\text{SL}$ strategy to a social learning problem in the online setting using real data.
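The two-step belief formation described in this abstract (local update, then pooling) can be illustrated with a small sketch. This is not the paper's $\text{A}^2\text{SL}$ algorithm: the likelihoods are assumed known (so the stochastic-gradient stage is omitted), and the discounted update with parameter `delta`, the geometric pooling rule, the Gaussian data model, and all function names are assumptions chosen for illustration.

```python
import math
import random

def normalize(log_scores):
    """Turn unnormalized log-scores into a probability vector."""
    m = max(log_scores)
    weights = [math.exp(s - m) for s in log_scores]
    total = sum(weights)
    return [w / total for w in weights]

def local_update(belief, log_lik, delta):
    """Assumed adaptive update: belief^(1 - delta) * likelihood^delta, renormalized."""
    return normalize([(1.0 - delta) * math.log(max(b, 1e-300)) + delta * ll
                      for b, ll in zip(belief, log_lik)])

def pool(neighbor_beliefs, weights):
    """Assumed geometric pooling: weighted average of neighbors' log-beliefs."""
    num_h = len(neighbor_beliefs[0])
    return normalize([
        sum(w * math.log(max(psi[h], 1e-300))
            for w, psi in zip(weights, neighbor_beliefs))
        for h in range(num_h)
    ])

def simulate(num_steps=300, delta=0.1, seed=0):
    """Three fully connected agents, two hypotheses (Gaussian means 0 and 2)."""
    rng = random.Random(seed)
    means = [0.0, 2.0]
    true_h = 1  # index of the hypothesis that generates the data
    num_agents = 3
    beliefs = [[0.5, 0.5] for _ in range(num_agents)]
    weights = [1.0 / num_agents] * num_agents  # uniform pooling weights
    for _ in range(num_steps):
        psi = []
        for k in range(num_agents):
            x = rng.gauss(means[true_h], 1.0)
            log_lik = [-(x - m) ** 2 / 2.0 for m in means]
            psi.append(local_update(beliefs[k], log_lik, delta))
        pooled = pool(psi, weights)
        beliefs = [list(pooled) for _ in range(num_agents)]
    return beliefs
```

Calling `simulate()` returns one belief vector per agent. In this toy setup the mass on the data-generating hypothesis stays above one half but does not reach one, because the discount `delta` keeps the beliefs responsive to future changes.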

Differential error feedback for communication-efficient decentralized learning

Jun 26, 2024

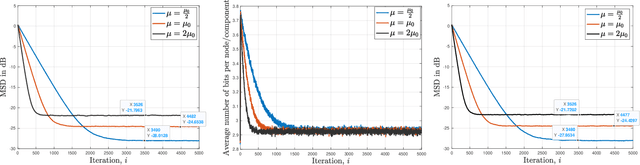

Abstract:Communication-constrained algorithms for decentralized learning and optimization rely on local updates coupled with the exchange of compressed signals. In this context, differential quantization is an effective technique to mitigate the negative impact of compression by leveraging correlations between successive iterates. In addition, the use of error feedback, which consists of incorporating the compression error into subsequent steps, is a powerful mechanism to compensate for the bias caused by the compression. Under error feedback, performance guarantees in the literature have so far focused on algorithms employing a fusion center or a special class of contractive compressors that cannot be implemented with a finite number of bits. In this work, we propose a new decentralized communication-efficient learning approach that blends differential quantization with error feedback. The approach is specifically tailored for decentralized learning problems where agents have individual risk functions to minimize subject to subspace constraints that require the minimizers across the network to lie in low-dimensional subspaces. This constrained formulation includes consensus or single-task optimization as special cases, and allows for more general task relatedness models such as multitask smoothness and coupled optimization. We show that, under some general conditions on the compression noise, and for sufficiently small step-sizes $\mu$, the resulting communication-efficient strategy is stable both in terms of mean-square error and average bit rate: by reducing $\mu$, it is possible to keep the estimation errors small (on the order of $\mu$) without increasing indefinitely the bit rate as $\mu\rightarrow 0$. The results establish that, in the small step-size regime and with a finite number of bits, it is possible to attain the performance achievable in the absence of compression.
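The error-feedback mechanism mentioned in this abstract, where the compression error is carried into the next step, can be sketched in a few lines. This is a generic illustration, not the scheme proposed in the paper: the deterministic rounding compressor `coarse_round`, the scalar setting, and the function names are assumptions.

```python
def coarse_round(x, step):
    """Assumed compressor: deterministic rounding to a multiple of `step`."""
    return step * round(x / step)

def compress_with_error_feedback(values, step):
    """Compress a stream while feeding each compression error into the next sample."""
    err = 0.0   # error left over from the previous compression
    sent = []
    for v in values:
        corrected = v + err              # add back what was lost last time
        q = coarse_round(corrected, step)
        err = corrected - q              # remember what is lost this time
        sent.append(q)
    return sent, err
```

For a constant stream of 0.3 with `step=1.0`, plain rounding transmits only zeros, so the loss accumulates. With error feedback, the sum of the transmitted values differs from the sum of the inputs only by the final residual `err`, which is at most half a step in magnitude.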

Compressed Regression over Adaptive Networks

Apr 07, 2023

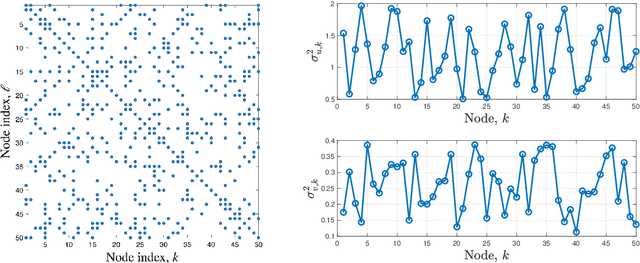

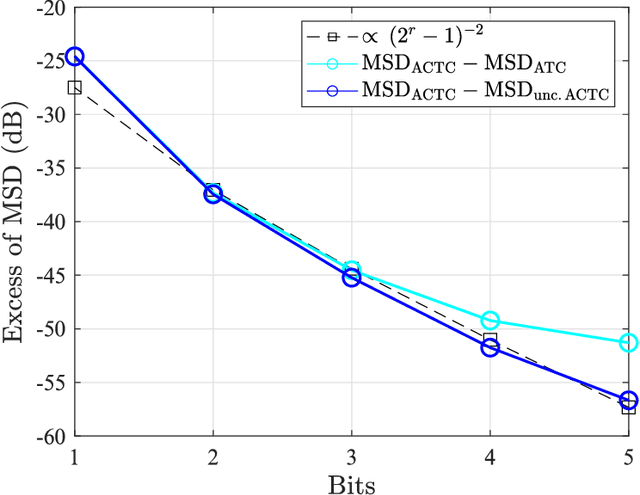

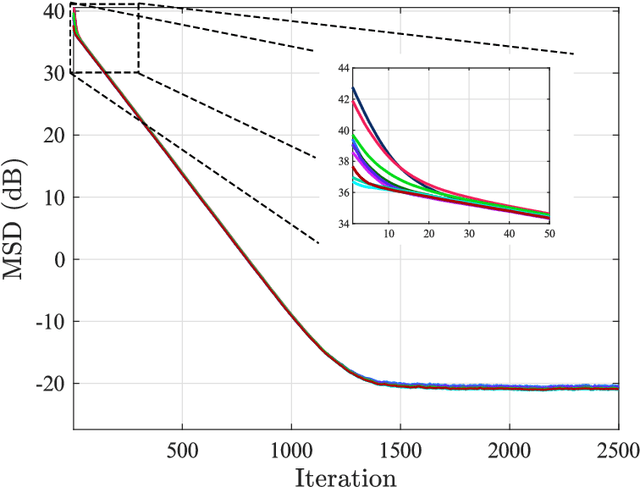

Abstract:In this work we derive the performance achievable by a network of distributed agents that solve, adaptively and in the presence of communication constraints, a regression problem. Agents employ the recently proposed ACTC (adapt-compress-then-combine) diffusion strategy, where the signals exchanged locally by neighboring agents are encoded with randomized differential compression operators. We provide a detailed characterization of the mean-square estimation error, which is shown to comprise a term related to the error that agents would achieve without communication constraints, plus a term arising from compression. The analysis reveals quantitative relationships between the compression loss and fundamental attributes of the distributed regression problem, in particular, the stochastic approximation error caused by the gradient noise and the network topology (through the Perron eigenvector). We show that knowledge of such relationships is critical to allocate optimally the communication resources across the agents, taking into account their individual attributes, such as the quality of their data or their degree of centrality in the network topology. We devise an optimized allocation strategy where the parameters necessary for the optimization can be learned online by the agents. Illustrative examples show that a significant performance improvement, as compared to a blind (i.e., uniform) resource allocation, can be achieved by optimizing the allocation by means of the provided mean-square-error formulas.

Quantization for decentralized learning under subspace constraints

Sep 16, 2022

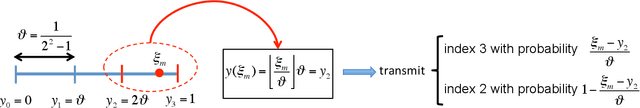

Abstract:In this paper, we consider decentralized optimization problems where agents have individual cost functions to minimize subject to subspace constraints that require the minimizers across the network to lie in low-dimensional subspaces. This constrained formulation includes consensus or single-task optimization as special cases, and allows for more general task relatedness models such as multitask smoothness and coupled optimization. In order to cope with communication constraints, we propose and study an adaptive decentralized strategy where the agents employ differential randomized quantizers to compress their estimates before communicating with their neighbors. The analysis shows that, under some general conditions on the quantization noise, and for sufficiently small step-sizes $\mu$, the strategy is stable both in terms of mean-square error and average bit rate: by reducing $\mu$, it is possible to keep the estimation errors small (on the order of $\mu$) without increasing indefinitely the bit rate as $\mu\rightarrow 0$. Simulations illustrate the theoretical findings and the effectiveness of the proposed approach, revealing that decentralized learning is achievable at the expense of only a few bits.
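A differential randomized quantizer of the kind this abstract refers to can be sketched as follows. The specific choices here are assumptions for illustration, not necessarily the quantizer analyzed in the paper: a uniform grid with spacing `step`, unbiased random rounding, and a scalar signal.

```python
import math
import random

def random_round(x, step, rng):
    """Round x to a neighboring multiple of `step`, unbiased on average."""
    low = math.floor(x / step)
    p_up = x / step - low  # probability of rounding up
    return step * (low + (1 if rng.random() < p_up else 0))

def differential_encode(signal, step, rng):
    """Quantize the innovation (sample minus previous reconstruction) at each step."""
    recon = 0.0  # reconstruction shared by sender and receiver
    out = []
    for x in signal:
        recon += random_round(x - recon, step, rng)
        out.append(recon)
    return out
```

Because only the difference from the previous reconstruction is quantized, a slowly varying signal produces small innovations that need few quantization levels, which is the intuition behind keeping the bit rate bounded as the step-size shrinks.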

Distributed Adaptive Learning Under Communication Constraints

Dec 03, 2021

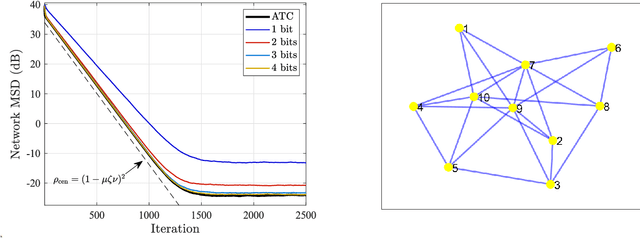

Abstract:This work examines adaptive distributed learning strategies designed to operate under communication constraints. We consider a network of agents that must solve an online optimization problem from continual observation of streaming data. The agents implement a distributed cooperative strategy where each agent is allowed to perform local exchange of information with its neighbors. In order to cope with communication constraints, the exchanged information must be unavoidably compressed. We propose a diffusion strategy nicknamed as ACTC (Adapt-Compress-Then-Combine), which relies on the following steps: i) an adaptation step where each agent performs an individual stochastic-gradient update with constant step-size; ii) a compression step that leverages a recently introduced class of stochastic compression operators; and iii) a combination step where each agent combines the compressed updates received from its neighbors. The distinguishing elements of this work are as follows. First, we focus on adaptive strategies, where constant (as opposed to diminishing) step-sizes are critical to respond in real time to nonstationary variations. Second, we consider the general class of directed graphs and left-stochastic combination policies, which allow us to enhance the interplay between topology and learning. Third, in contrast with related works that assume strong convexity for all individual agents' cost functions, we require strong convexity only at a network level, a condition satisfied even if a single agent has a strongly-convex cost and the remaining agents have non-convex costs. Fourth, we focus on a diffusion (as opposed to consensus) strategy. Under the demanding setting of compressed information, we establish that the ACTC iterates fluctuate around the desired optimizer, achieving remarkable savings in terms of bits exchanged between neighboring agents.
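The three ACTC steps listed in this abstract (adapt, compress, combine) can be sketched for a scalar least-mean-squares problem. This is a minimal illustration under stated assumptions, not the authors' implementation: the three-agent topology, the left-stochastic matrix `A`, the random-rounding compressor applied to the difference from the previous quantized state, and all names and parameter values are hypothetical.

```python
import math
import random

def random_round(x, step, rng):
    """Assumed compressor: unbiased random rounding to a multiple of `step`."""
    low = math.floor(x / step)
    return step * (low + (1 if rng.random() < x / step - low else 0))

def actc_scalar(num_iter=2000, mu=0.05, step=0.05, w_true=2.0, seed=0):
    rng = random.Random(seed)
    # A[l][k] is the weight agent k assigns to agent l; every column sums to 1.
    A = [[0.5, 0.25, 0.0],
         [0.5, 0.50, 0.5],
         [0.0, 0.25, 0.5]]
    n = len(A)
    w = [0.0] * n  # current estimates
    q = [0.0] * n  # quantized states known to the neighbors
    for _ in range(num_iter):
        for k in range(n):
            # Streaming data: d = u * w_true + noise
            u = rng.gauss(0.0, 1.0)
            d = u * w_true + 0.1 * rng.gauss(0.0, 1.0)
            # i) adapt: stochastic-gradient step with constant step-size mu
            psi = w[k] + mu * u * (d - u * w[k])
            # ii) compress: quantize the difference from the last quantized state
            q[k] += random_round(psi - q[k], step, rng)
        # iii) combine: weighted average of the neighbors' quantized states
        w = [sum(A[l][k] * q[l] for l in range(n)) for k in range(n)]
    return w
```

Running `actc_scalar()` returns one estimate per agent. With the constant step-size the estimates keep fluctuating around `w_true` rather than converging exactly, which matches the abstract's description of iterates that fluctuate around the optimizer.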
