Mara Chinea-Rios

Zero and Few-shot Learning for Author Profiling

Apr 22, 2022

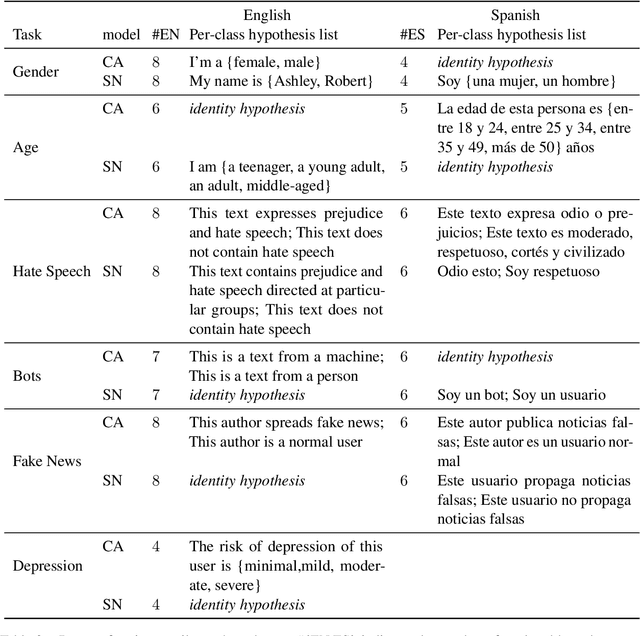

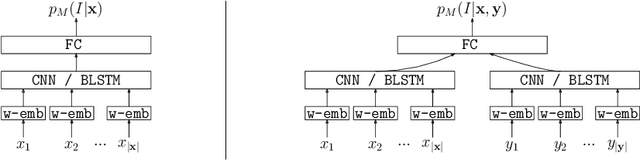

Abstract: Author profiling classifies author characteristics by analyzing how language is shared among people. In this work, we study that task from a low-resource viewpoint: using little or no training data. We explore different zero- and few-shot models based on entailment and evaluate our systems on several profiling tasks in Spanish and English. In addition, we study the effect of both the entailment hypothesis and the size of the few-shot training sample. We find that entailment-based models outperform supervised text classifiers based on XLM-RoBERTa and that we can reach 80% of the accuracy of previous approaches using less than 50% of the training data on average.
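To make the entailment framing concrete, the following is a minimal sketch of how a classification task can be recast as entailment: each candidate label is turned into a hypothesis sentence, and the label whose hypothesis is scored as most entailed by the text is returned. This is not the authors' implementation. The function names, the hypothesis template, and the word-overlap scorer are all assumptions made for illustration; a real system would plug in a trained natural language inference model as the scorer.

```python
import re
from typing import Callable, Dict, Sequence


def build_hypotheses(labels: Sequence[str], template: str) -> Dict[str, str]:
    """Turn each candidate label into a natural-language hypothesis."""
    return {label: template.format(label=label) for label in labels}


def zero_shot_classify(
    text: str,
    labels: Sequence[str],
    template: str,
    entail_score: Callable[[str, str], float],
) -> str:
    """Return the label whose hypothesis the scorer rates as most entailed."""
    hypotheses = build_hypotheses(labels, template)
    return max(labels, key=lambda label: entail_score(text, hypotheses[label]))


def toy_entail_score(premise: str, hypothesis: str) -> float:
    """Hypothetical stand-in scorer based on word overlap.

    It only exists so the sketch runs without a model; it is not a
    real entailment model.
    """
    premise_words = set(re.findall(r"\w+", premise.lower()))
    hypothesis_words = set(re.findall(r"\w+", hypothesis.lower()))
    return len(premise_words & hypothesis_words) / max(len(hypothesis_words), 1)


if __name__ == "__main__":
    predicted = zero_shot_classify(
        "my favourite sport is football",
        ["politics", "sport"],
        "This text is about {label}.",  # assumed template, not from the paper
        toy_entail_score,
    )
    print(predicted)
```

Changing the template string is the simplest way to see why the wording of the entailment hypothesis matters: the scorer only ever sees the text paired with the generated hypothesis, never the bare label.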

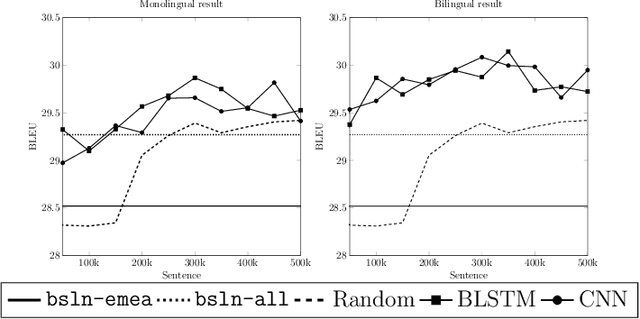

Neural Networks Classifier for Data Selection in Statistical Machine Translation

Dec 21, 2016

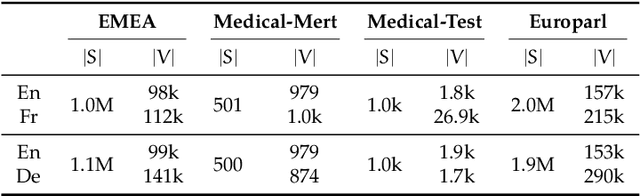

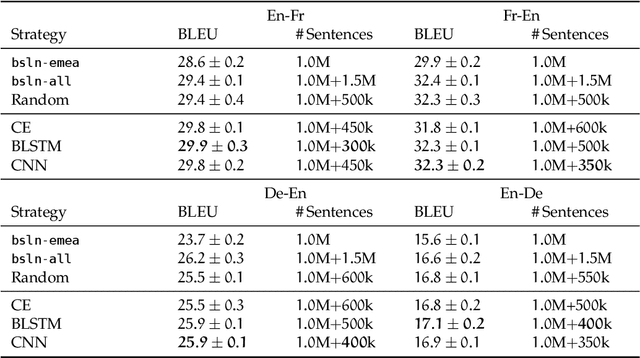

Abstract: We address the data selection problem in statistical machine translation (SMT) as a classification task. The new data selection method is based on a neural network classifier. We describe the method and present empirical results showing that it provides better translation quality than a state-of-the-art method (i.e., cross-entropy). Moreover, the empirical results are consistent across different language pairs.
