Manfred Paulini

on behalf of the CMS Collaboration

Autoencoder-based Online Data Quality Monitoring for the CMS Electromagnetic Calorimeter

Aug 31, 2023

Abstract: The online Data Quality Monitoring system (DQM) of the CMS electromagnetic calorimeter (ECAL) is a crucial operational tool that allows ECAL experts to quickly identify, localize, and diagnose a broad range of detector issues that would otherwise hinder physics-quality data taking. Although the existing ECAL DQM system has been continuously updated to respond to new problems, it remains one step behind newer and unforeseen issues. Using unsupervised deep learning, a real-time autoencoder-based anomaly detection system is developed that is able to detect ECAL anomalies unseen in past data. After accounting for spatial variations in the response of the ECAL and the temporal evolution of anomalies, the new system is able to efficiently detect anomalies while maintaining an estimated false discovery rate between $10^{-2}$ and $10^{-4}$, beating existing benchmarks by about two orders of magnitude. The real-world performance of the system is validated using anomalies found in 2018 and 2022 LHC collision data. Additionally, first results from deploying the autoencoder-based system in the CMS online DQM workflow for the ECAL barrel during Run 3 of the LHC are presented, showing its promising performance in detecting obscure issues that could have been missed in the existing DQM system.
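As a rough illustration of the quantity quoted above, the sketch below thresholds per-tower reconstruction errors and computes a false discovery rate as the fraction of flagged towers that are not true anomalies. The function names, the threshold, and the toy values are hypothetical; the abstract does not describe how the CMS system estimates its rate, so this shows only the generic definition.

```python
# Hypothetical sketch: flag towers by reconstruction error and compute the
# false discovery rate (FDR). Names and values are illustrative assumptions,
# not taken from the CMS ECAL DQM system.

def flag_anomalies(errors, threshold):
    """Return indices of towers whose reconstruction error exceeds the threshold."""
    return [i for i, e in enumerate(errors) if e > threshold]


def false_discovery_rate(flagged, true_anomalies):
    """FDR = false positives / all flagged; taken as 0.0 when nothing is flagged."""
    if not flagged:
        return 0.0
    truth = set(true_anomalies)
    false_pos = sum(1 for i in flagged if i not in truth)
    return false_pos / len(flagged)


# Toy reconstruction errors for eight towers (made-up values).
errors = [0.02, 0.03, 0.90, 0.01, 0.75, 0.04, 0.60, 0.02]
true_anomalies = [2, 4]  # assumed ground truth for this toy example

flagged = flag_anomalies(errors, threshold=0.5)
fdr = false_discovery_rate(flagged, true_anomalies)
print(flagged, fdr)
```

In this toy case three towers are flagged and one of them is a false alarm, so the rate is one third; a rate in the range quoted in the abstract would correspond to roughly one false alarm per hundred to ten thousand flagged towers.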

DeepSNR: A deep learning foundation for offline gravitational wave detection

Jul 11, 2022

Abstract: All scientific claims of gravitational wave discovery to date rely on the offline statistical analysis of candidate observations in order to quantify significance relative to background processes. The current foundation in such offline detection pipelines in experiments at LIGO is the matched-filter algorithm, which produces a signal-to-noise-ratio-based statistic for ranking candidate observations. Existing deep-learning-based attempts to detect gravitational waves, which have shown promise in both signal sensitivity and computational efficiency, output probability scores. However, probability scores are not easily integrated into discovery workflows, limiting the use of deep learning thus far to non-discovery-oriented applications. In this paper, the Deep Learning Signal-to-Noise Ratio (DeepSNR) detection pipeline, which uses a novel method for generating a signal-to-noise ratio ranking statistic from deep learning classifiers, is introduced, providing the first foundation for the use of deep learning algorithms in discovery-oriented pipelines. The performance of DeepSNR is demonstrated by identifying binary black hole merger candidates versus noise sources in open LIGO data from the first observation run. High-fidelity simulations of the LIGO detector responses are used to present the first sensitivity estimates of deep learning models in terms of physical observables. The robustness of DeepSNR under various experimental considerations is also investigated. The results pave the way for DeepSNR to be used in the scientific discovery of gravitational waves and rare signals in broader contexts, potentially enabling the detection of fainter signals and never-before-observed phenomena.

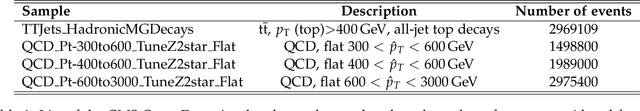

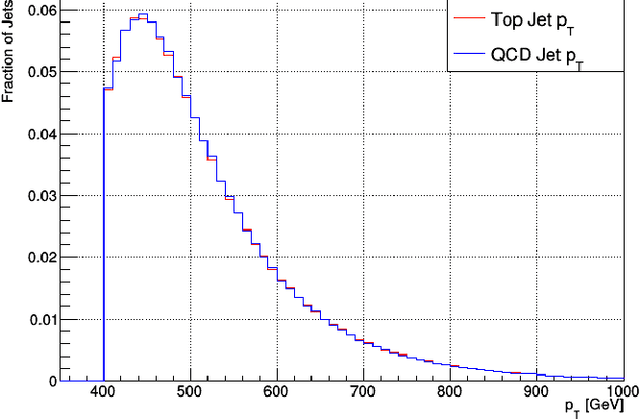

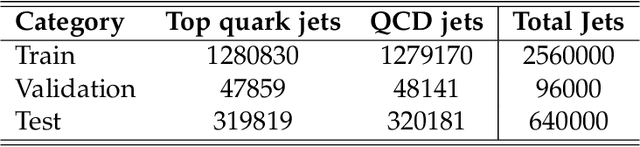

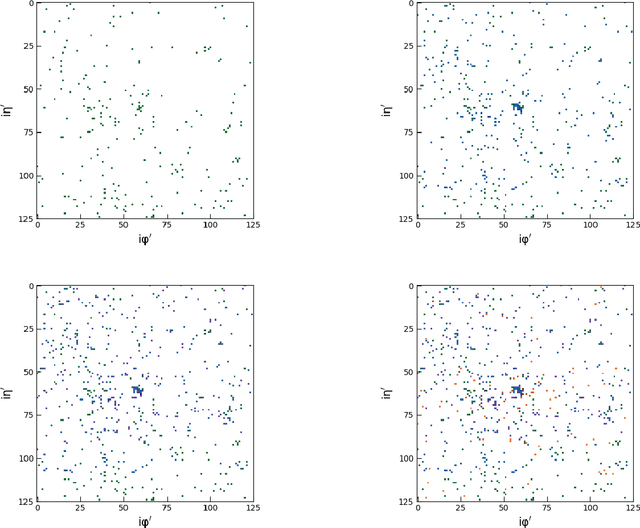

End-to-End Jet Classification of Boosted Top Quarks with the CMS Open Data

Apr 19, 2021

Abstract: We describe a novel application of the end-to-end deep learning technique to the task of discriminating top quark-initiated jets from those originating from the hadronization of a light quark or a gluon. The end-to-end deep learning technique combines deep learning algorithms and low-level detector representation of the high-energy collision event. In this study, we use low-level detector information from the simulated CMS Open Data samples to construct the top jet classifiers. To optimize classifier performance, we progressively add low-level information from the CMS tracking detector, including pixel detector reconstructed hits and impact parameters, and demonstrate the value of additional tracking information even when no new spatial structures are added. Relying only on calorimeter energy deposits and reconstructed pixel detector hits, the end-to-end classifier achieves an AUC score of $0.975 \pm 0.002$ for the task of classifying boosted top quark jets. After adding derived track quantities, the classifier AUC score increases to $0.9824 \pm 0.0013$, serving as the first performance benchmark for these CMS Open Data samples. We additionally provide a timing performance comparison of different processor unit architectures for training the network.
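For readers unfamiliar with the AUC figure of merit quoted above, the following minimal sketch computes it as the probability that a randomly chosen signal jet receives a higher classifier score than a randomly chosen background jet, with ties counted as one half. The function name and the scores are hypothetical toy values, not outputs of the classifiers described in the abstract.

```python
# Hypothetical sketch: ROC AUC from raw classifier scores using the
# pairwise (Mann-Whitney) definition. Scores below are made-up toy values.

def roc_auc(scores_signal, scores_background):
    """Fraction of signal/background pairs ranked correctly; ties count half."""
    wins = 0.0
    for s in scores_signal:
        for b in scores_background:
            if s > b:
                wins += 1.0
            elif s == b:
                wins += 0.5
    return wins / (len(scores_signal) * len(scores_background))


top_scores = [0.9, 0.8, 0.7, 0.4]  # assumed scores for top-quark jets
qcd_scores = [0.6, 0.3, 0.2, 0.1]  # assumed scores for light-quark/gluon jets

auc = roc_auc(top_scores, qcd_scores)
print(auc)
```

The pairwise loop is quadratic in the sample size, which is fine for illustration; on realistic sample sizes a rank-based computation would be the usual choice.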

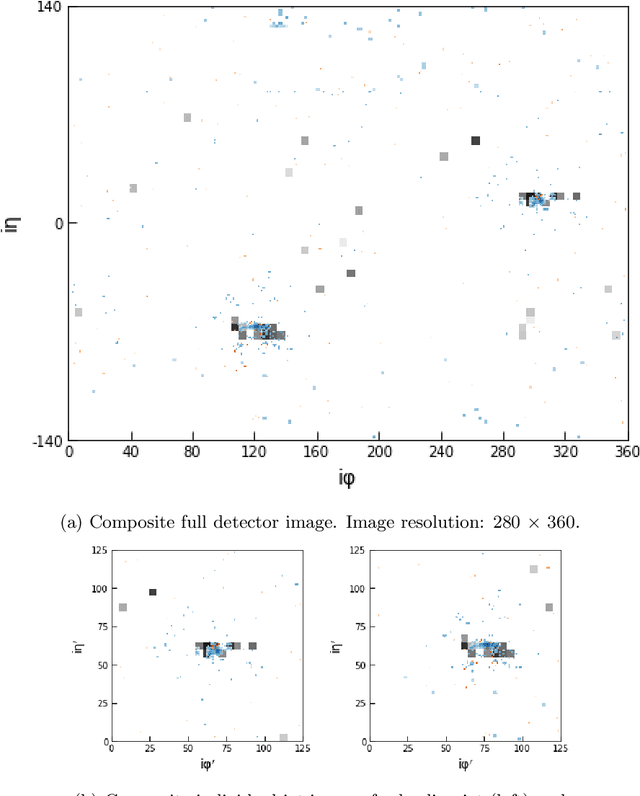

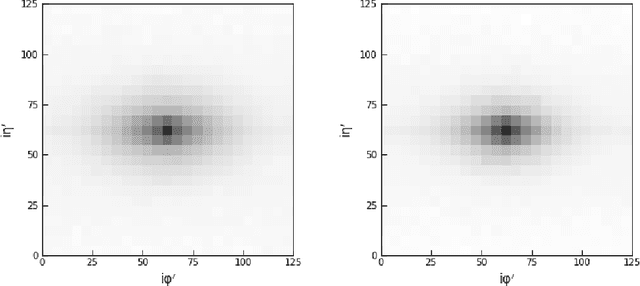

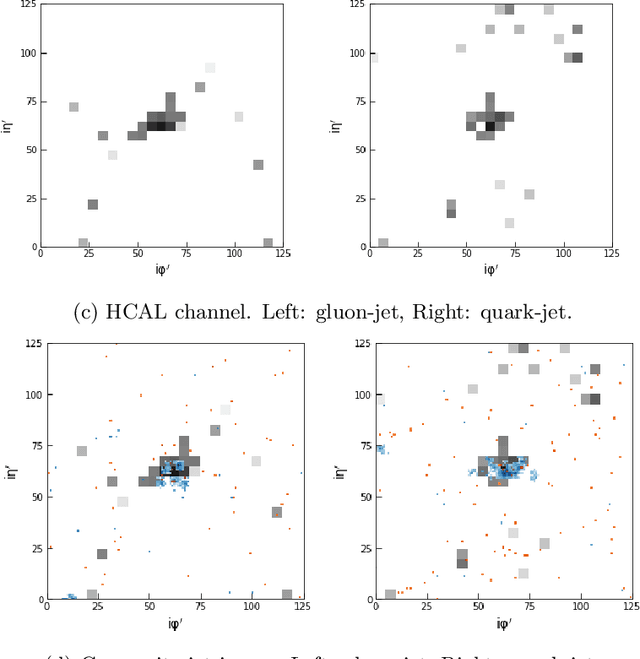

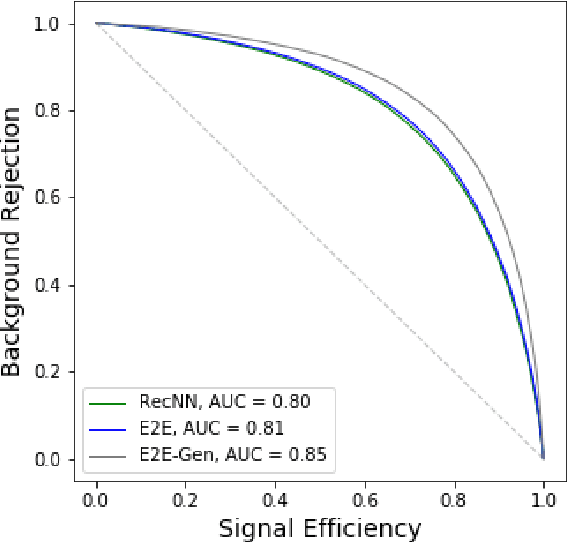

End-to-End Jet Classification of Quarks and Gluons with the CMS Open Data

Feb 21, 2019

Abstract: We describe the construction of end-to-end jet image classifiers based on simulated low-level detector data to discriminate quark- vs. gluon-initiated jets with high-fidelity simulated CMS Open Data. We highlight the importance of precise spatial information and demonstrate performance competitive with existing state-of-the-art jet classifiers. We further generalize the end-to-end approach to event-level classification of quark vs. gluon di-jet QCD events. We compare the fully end-to-end approach to using hand-engineered features and demonstrate that the end-to-end algorithm is robust against the effects of underlying event and pile-up.

End-to-End Physics Event Classification with the CMS Open Data: Applying Image-based Deep Learning on Detector Data to Directly Classify Collision Events at the LHC

Jul 31, 2018

Abstract: We describe the construction of a class of general, end-to-end, image-based physics event classifiers that directly use simulated raw detector data to discriminate signal and background processes in collision events at the LHC. To better understand what such classifiers are able to learn and to address some of the challenges associated with their use, we attempt to distinguish the Standard Model Higgs boson decaying to two photons from its leading backgrounds using high-fidelity simulated detector data from the 2012 CMS Open Data. We demonstrate the ability of end-to-end classifiers to learn from the angular distribution of the electromagnetic showers, their shape, and the energy scale of their constituent hits, even when the underlying particles are not fully resolved.

Machine Learning in High Energy Physics Community White Paper

Jul 08, 2018

Abstract: Machine learning is an important research area in particle physics, beginning with applications to high-level physics analysis in the 1990s and 2000s, followed by an explosion of applications in particle and event identification and reconstruction in the 2010s. In this document, we discuss promising future research and development areas in machine learning in particle physics with a roadmap for their implementation, software and hardware resource requirements, collaborative initiatives with the data science community, academia and industry, and training the particle physics community in data science. The main objective of the document is to connect and motivate these areas of research and development with the physics drivers of the High-Luminosity Large Hadron Collider and future neutrino experiments and identify the resource needs for their implementation. Additionally, we identify areas where collaboration with external communities will be of great benefit.
