Malte Probst

Responsibility and Engagement -- Evaluating Interactions in Social Robot Navigation

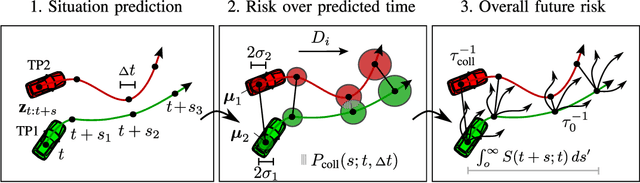

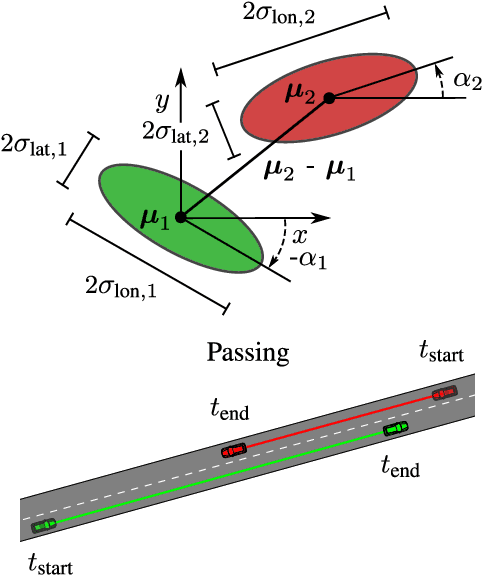

Sep 16, 2025

Abstract: In Social Robot Navigation (SRN), the availability of meaningful metrics is crucial for evaluating trajectories from human-robot interactions. In the SRN context, such interactions often relate to resolving conflicts between two or more agents. Correspondingly, the share that each agent contributes to resolving such conflicts is important. This paper builds on recent work, which proposed a Responsibility metric capturing such shares. We extend this framework in two directions: First, we model the conflict buildup phase by introducing a time normalization. Second, we propose the related Engagement metric, which captures how the agents' actions intensify a conflict. In a comprehensive series of simulated scenarios with dyadic, group and crowd interactions, we show that the metrics carry meaningful information about the cooperative resolution of conflicts in interactions. They can be used to assess behavior quality and foresightedness. We extensively discuss applicability, design choices and limitations of the proposed metrics.

Spotting the Unfriendly Robot -- Towards better Metrics for Interactions

Sep 16, 2025

Abstract: Establishing standardized metrics for Social Robot Navigation (SRN) algorithms for assessing the quality and social compliance of robot behavior around humans is essential for SRN research. Currently, commonly used evaluation metrics lack the ability to quantify how cooperatively an agent behaves in interaction with humans. Concretely, in a simple frontal approach scenario, no metric specifically captures whether both agents cooperate or whether one agent stays on collision course and the other agent is forced to evade. To address this limitation, we propose two new metrics, a conflict intensity metric and the responsibility metric. Together, these metrics are capable of evaluating the quality of human-robot interactions by showing how much a given algorithm has contributed to reducing a conflict and which agent actually took responsibility for the resolution. This work aims to contribute to the development of a comprehensive and standardized evaluation methodology for SRN, ultimately enhancing the safety, efficiency, and social acceptance of robots in human-centric environments.

Considering Human Factors in Risk Maps for Robust and Foresighted Driver Warning

Jun 06, 2023

Abstract: Driver support systems that include human states in the support process are an active research field. Many recent approaches make it possible, for example, to sense the driver's drowsiness or awareness of the driving situation. However, so far, this rich information has not been utilized much for improving the effectiveness of support systems. In this paper, we therefore propose a warning system that uses human states in the form of driver errors and can, in some cases, warn users of upcoming risks several seconds earlier than state-of-the-art systems that do not consider human factors. The system consists of a behavior planner, Risk Maps, which directly changes its prediction of the surrounding driving situation based on the sensed driver errors. By checking if this driver's behavior plan is objectively safe, a more robust and foresighted driver warning is achieved. In different simulations of dynamic lane change and intersection scenarios, we show how the driver's behavior plan can become unsafe, given the estimate of driver errors, and experimentally validate the advantages of considering human factors.

Online and Predictive Warning System for Forced Lane Changes using Risk Maps

Mar 15, 2023

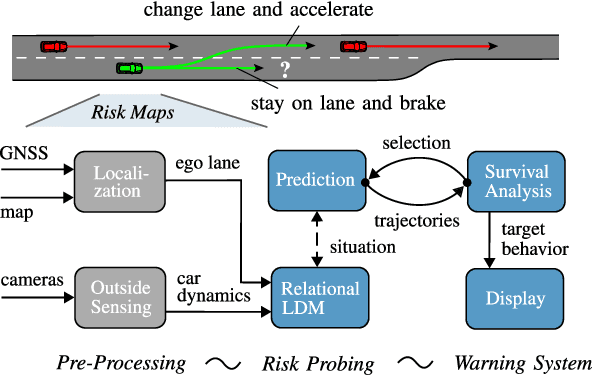

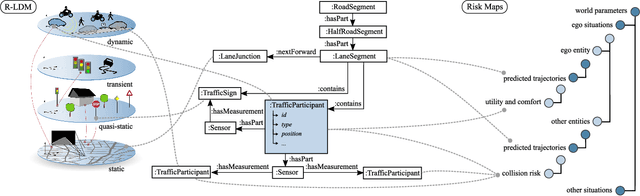

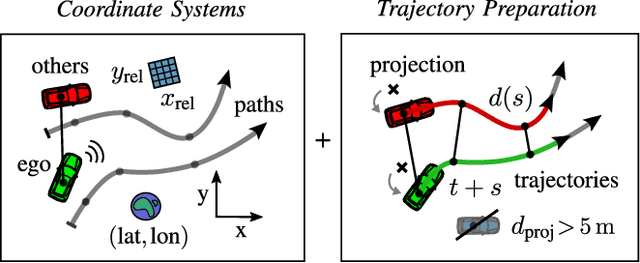

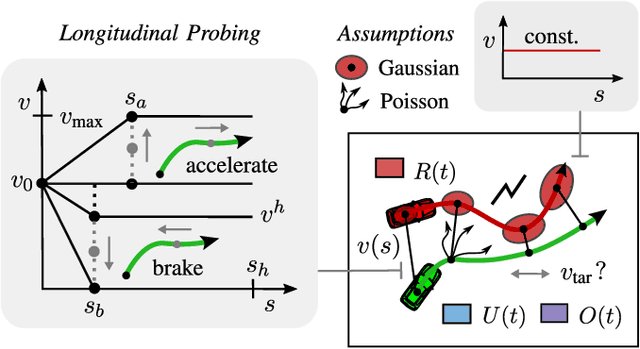

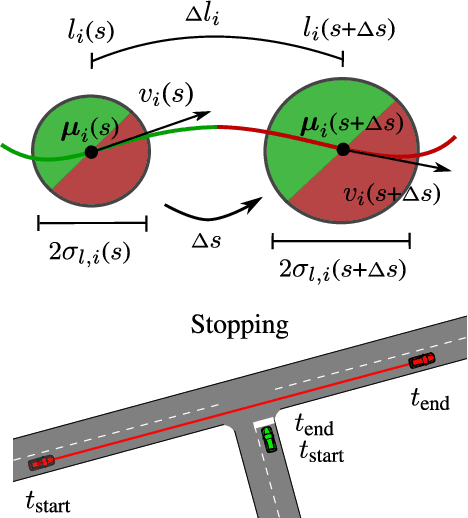

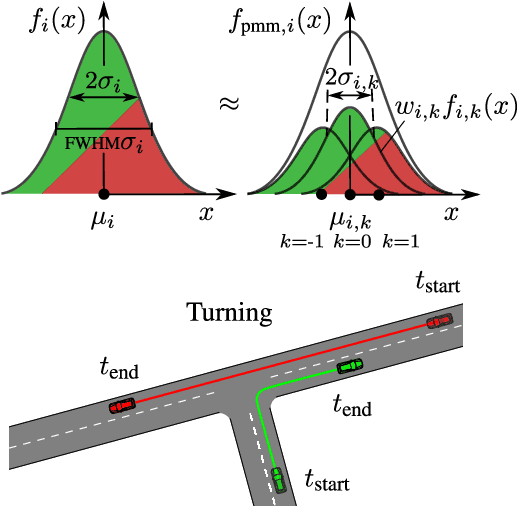

Abstract: The survival analysis of driving trajectories allows for holistic evaluations of car-related risks caused by collisions or curvy roads. This analysis has advantages over common Time-To-X indicators, such as its predictive and probabilistic nature. However, so far, the theoretical risks have not been demonstrated in real-world environments. In this paper, we therefore present Risk Maps (RM) for online warning support in situations with forced lane changes due to the end of roads. For this purpose, we first unify sensor data in a Relational Local Dynamic Map (R-LDM). RM can then run in real time and efficiently probes a range of situations in order to determine risk-minimizing behaviors. In doing so, we focus on improving the uncertainty-awareness and transparency of the system. Risk, utility and comfort costs are included in a single formula and are intuitively visualized to the driver. In the conducted experiments, a low-cost sensor setup with a GNSS receiver for localization and multiple cameras for object detection is used. The final system is successfully applied on two-lane roads and gives lane change advice, separated into gap and no-gap indications. These results are promising and present an important step towards interpretable safety.

Comfortable Priority Handling with Predictive Velocity Optimization for Intersection Crossings

Mar 14, 2023

Abstract: We address the problem of motion planning for four-way intersection crossings with right-of-ways. Road safety typically assigns liability to the follower in rear-end collisions and to the approaching vehicle required to yield in side crashes. As an alternative to previous models based on heuristic state machines, we propose a planning framework which changes the prediction model of other cars (e.g. their prototypical accelerations and decelerations) depending on the given longitudinal or lateral priority rules. Combined with a state-of-the-art trajectory optimization approach, ROPT (Risk Optimization Method), this makes it possible to find ego velocity profiles that minimize risks from curves and all involved vehicles while maximizing utility (needed time to arrive at a goal) and comfort (change and duration of acceleration) in the presence of regulatory conditions. Analytical and statistical evaluations show that our method is able to follow right-of-ways for a wide range of other vehicle behaviors and path geometries. Even when the other cars drive in a non-priority-compliant way, ROPT achieves good risk-comfort tradeoffs.

Probabilistic Uncertainty-Aware Risk Spot Detector for Naturalistic Driving

Mar 13, 2023

Abstract: Risk assessment is a central element for the development and validation of Autonomous Vehicles (AV). It comprises a combination of occurrence probability and severity of future critical events. Time Headway (TH) as well as Time-To-Contact (TTC) are commonly used risk metrics and have qualitative relations to occurrence probability. However, they lack theoretical derivations and additionally they are designed to only cover special types of traffic scenarios (e.g. following between single car pairs). In this paper, we present a probabilistic situation risk model based on survival analysis considerations and extend it to naturally incorporate sensory, temporal and behavioral uncertainties as they arise in real-world scenarios. The resulting Risk Spot Detector (RSD) is applied and tested on naturalistic driving data of a multi-lane boulevard with several intersections, enabling the visualization of road criticality maps. Compared to TH and TTC, our approach is more selective and specific in predicting risk. RSD concentrates on driving sections of high vehicle density where large accelerations and decelerations or approaches with high velocity occur.
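For readers unfamiliar with the two baseline indicators named in this abstract, the following is a minimal sketch of their common textbook definitions for a single follower-leader car pair, assuming constant speeds along one lane. The function and parameter names are illustrative and do not come from the paper, and this is not the Risk Spot Detector itself.

```python
import math


def time_headway(gap_m: float, follower_speed_mps: float) -> float:
    """Time Headway (TH): time the follower needs to cover the current gap
    at its current speed. Infinite if the follower is not moving forward."""
    if follower_speed_mps <= 0:
        return math.inf
    return gap_m / follower_speed_mps


def time_to_contact(gap_m: float, follower_speed_mps: float,
                    leader_speed_mps: float) -> float:
    """Time-To-Contact (TTC): time until the gap closes, assuming both cars
    keep their current speeds. Infinite if the follower is not closing in."""
    closing_speed = follower_speed_mps - leader_speed_mps
    if closing_speed <= 0:
        return math.inf
    return gap_m / closing_speed


if __name__ == "__main__":
    # Follower at 15 m/s, leader at 10 m/s, 30 m apart.
    print(time_headway(30.0, 15.0))           # 2.0
    print(time_to_contact(30.0, 15.0, 10.0))  # 6.0
```

Both indicators are defined only for one car following another, which illustrates the limitation to special scenario types that the abstract points out.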

Optimization of Velocity Ramps with Survival Analysis for Intersection Merge-Ins

Mar 13, 2023

Abstract: We consider the problem of correct motion planning for T-intersection merge-ins of arbitrary geometry and vehicle density. A merge-in support system has to estimate the chances that a gap between two consecutive vehicles can be taken successfully. In contrast to previous models based on heuristic gap size rules, we present an approach which optimizes the integral risk of the situation using parametrized velocity ramps. It accounts for the risks from curves and all involved vehicles (front and rear on all paths) with a so-called survival analysis. For comparison, we also introduce a specially designed extension of the Intelligent Driver Model (IDM) for entering intersections. We show in a quantitative statistical evaluation that the survival method provides advantages in terms of lower absolute risk (i.e., no crash happens) and better risk-utility tradeoff (i.e., making better use of appearing gaps). Furthermore, our approach generalizes to more complex situations with additional risk sources.

Importance Filtering with Risk Models for Complex Driving Situations

Mar 13, 2023

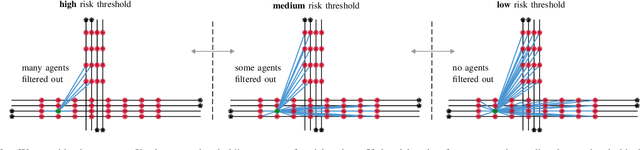

Abstract: Self-driving cars face complex driving situations with a large number of agents when moving in crowded cities. However, some of the agents do not actually influence the behavior of the self-driving car. Filtering out unimportant agents would inherently simplify the behavior or motion planning task for the system. The planning system can then focus on fewer agents to find optimal behavior solutions for the ego agent. This is helpful especially in terms of computational efficiency. In this paper, therefore, the research topic of importance filtering with driving risk models is introduced. We give an overview of state-of-the-art risk models and present newly adapted risk models for filtering. Their capability to filter out surrounding unimportant agents is compared in a large-scale experiment. As it turns out, the novel trajectory distance balances performance, robustness and efficiency well. Based on the results, we can further derive a novel filter architecture with multiple filter steps, for which risk models are recommended for each step, to further improve the robustness. We are confident that this will enable current behavior planning systems to better solve complex situations in everyday driving.

Relate to Predict: Towards Task-Independent Knowledge Representations for Reinforcement Learning

Dec 10, 2022

Abstract: Reinforcement Learning (RL) can enable agents to learn complex tasks. However, it is difficult to interpret the knowledge and reuse it across tasks. Inductive biases can address such issues by explicitly providing a generic yet useful decomposition that is otherwise difficult or expensive to learn implicitly. For example, object-centered approaches decompose a high dimensional observation into individual objects. Expanding on this, we utilize an inductive bias for explicit object-centered knowledge separation that provides further decomposition into semantic representations and dynamics knowledge. For this, we introduce a semantic module that predicts an object's semantic state based on its context. The resulting affordance-like object state can then be used to enrich perceptual object representations. With a minimal setup and an environment that enables puzzle-like tasks, we demonstrate the feasibility and benefits of this approach. Specifically, we compare three different methods of integrating semantic representations into a model-based RL architecture. Our experiments show that the degree of explicitness in knowledge separation correlates with faster learning, better accuracy, better generalization, and better interpretability.

Deep Boltzmann Machines in Estimation of Distribution Algorithms for Combinatorial Optimization

Aug 08, 2016

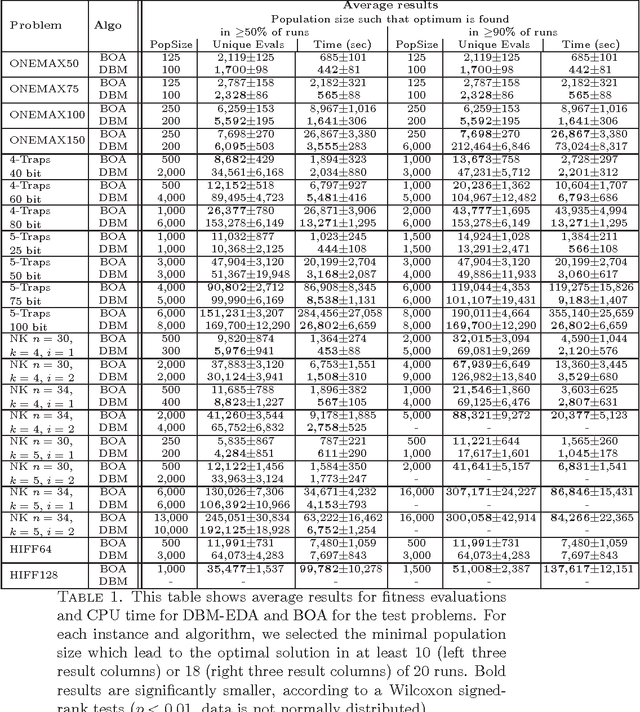

Abstract: Estimation of Distribution Algorithms (EDAs) require flexible probability models that can be efficiently learned and sampled. Deep Boltzmann Machines (DBMs) are generative neural networks with these desired properties. We integrate a DBM into an EDA and evaluate the performance of this system in solving combinatorial optimization problems with a single objective. We compare the results to the Bayesian Optimization Algorithm. The performance of DBM-EDA was superior to BOA for difficult additively decomposable functions, i.e., concatenated deceptive traps of higher order. For most other benchmark problems, DBM-EDA cannot clearly outperform BOA, or other neural network-based EDAs. In particular, it often yields optimal solutions for a subset of the runs (with fewer evaluations than BOA), but is unable to provide reliable convergence to the global optimum competitively. At the same time, the model building process is computationally more expensive than that of other EDAs using probabilistic models from the neural network family, such as DAE-EDA.
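As an illustration of what "concatenated deceptive traps" means, here is a minimal sketch of one common textbook formulation of the benchmark. The abstract does not give the paper's exact definition, so the function names, the block scoring rule and the order parameter `k` below are assumptions.

```python
from typing import Sequence


def deceptive_trap(block: Sequence[int]) -> int:
    """Score one block of k bits. All ones gives the optimum k; otherwise
    the score rises as ones are removed, which misleads local search
    towards the all-zeros block."""
    k = len(block)
    ones = sum(block)
    return k if ones == k else k - 1 - ones


def concatenated_traps(bits: Sequence[int], k: int) -> int:
    """Additively decomposable fitness: the sum of trap scores over
    consecutive, non-overlapping blocks of k bits."""
    if k <= 0 or len(bits) % k != 0:
        raise ValueError("bit string length must be a positive multiple of k")
    return sum(deceptive_trap(bits[i:i + k]) for i in range(0, len(bits), k))


if __name__ == "__main__":
    print(concatenated_traps([1, 1, 1, 1, 0, 0, 0, 0], k=4))  # 4 + 3 = 7
    print(concatenated_traps([1] * 8, k=4))                   # 4 + 4 = 8
```

The fitness is a plain sum over blocks, which is the sense in which the function is additively decomposable. A probability model has to capture the dependencies among the k bits inside each block to sample the all-ones optimum reliably.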
