Raphael Wenzel

Spotting the Unfriendly Robot -- Towards better Metrics for Interactions

Sep 16, 2025

Abstract: Establishing standardized metrics for Social Robot Navigation (SRN) algorithms that assess the quality and social compliance of robot behavior around humans is essential for SRN research. Currently, commonly used evaluation metrics cannot quantify how cooperatively an agent behaves in interactions with humans. Concretely, in a simple frontal approach scenario, no metric specifically captures whether both agents cooperate or whether one agent stays on a collision course while the other is forced to evade. To address this limitation, we propose two new metrics: a conflict intensity metric and a responsibility metric. Together, these metrics can evaluate the quality of human-robot interactions by showing how much a given algorithm has contributed to reducing a conflict and which agent actually took responsibility for its resolution. This work aims to contribute to the development of a comprehensive and standardized evaluation methodology for SRN, ultimately enhancing the safety, efficiency, and social acceptance of robots in human-centric environments.
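The abstract does not give a formula for either metric. To illustrate the idea of responsibility in the frontal approach scenario only, the sketch below assumes that each agent's contribution to resolving the conflict can be approximated by how far it deviates laterally from its straight-line path, and it normalizes these deviations into shares. The helper names `path_deviation` and `responsibility_shares` and the deviation-based proxy are hypothetical. This is not the authors' definition of the responsibility metric.

```python
import math


def path_deviation(traj, start, goal):
    """Maximum perpendicular distance of a 2D trajectory from the straight line start -> goal.

    Hypothetical proxy for how much an agent evaded; not the paper's metric.
    """
    (x0, y0), (x1, y1) = start, goal
    dx, dy = x1 - x0, y1 - y0
    length = math.hypot(dx, dy)
    # |cross(direction, point - start)| / |direction| is the distance to the line.
    return max(abs(dx * (y - y0) - dy * (x - x0)) / length for x, y in traj)


def responsibility_shares(deviations):
    """Normalize per-agent deviations into shares that sum to 1 (all zeros if nobody evaded)."""
    total = sum(deviations)
    if total == 0:
        return [0.0 for _ in deviations]
    return [d / total for d in deviations]


# Frontal approach: the robot keeps its straight course, the human evades by 1 m.
robot = [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)]
human = [(10.0, 0.0), (5.0, 1.0), (0.0, 0.0)]
deviations = [
    path_deviation(robot, (0.0, 0.0), (10.0, 0.0)),
    path_deviation(human, (10.0, 0.0), (0.0, 0.0)),
]
print(responsibility_shares(deviations))
```

Here the robot stays on its collision course, so the human receives the full share. A purely geometric proxy like this ignores speed changes and timing, which a more complete formulation would need to account for.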

Responsibility and Engagement -- Evaluating Interactions in Social Robot Navigation

Sep 16, 2025

Abstract: In Social Robot Navigation (SRN), the availability of meaningful metrics is crucial for evaluating trajectories from human-robot interactions. In the SRN context, such interactions often involve resolving conflicts between two or more agents. Correspondingly, the share each agent contributes to resolving such conflicts is important. This paper builds on recent work that proposed a Responsibility metric capturing such shares. We extend this framework in two directions: First, we model the conflict buildup phase by introducing a time normalization. Second, we propose the related Engagement metric, which captures how the agents' actions intensify a conflict. In a comprehensive series of simulated scenarios with dyadic, group and crowd interactions, we show that the metrics carry meaningful information about the cooperative resolution of conflicts in interactions. They can be used to assess behavior quality and foresightedness. We extensively discuss the applicability, design choices and limitations of the proposed metrics.

Considering Human Factors in Risk Maps for Robust and Foresighted Driver Warning

Jun 06, 2023

Abstract: Driver support systems that include human states in the support process are an active research field. Many recent approaches make it possible, for example, to sense the driver's drowsiness or awareness of the driving situation. However, so far, this rich information has rarely been used to improve the effectiveness of support systems. In this paper, we therefore propose a warning system that uses human states in the form of driver errors and, in some cases, can warn users of upcoming risks several seconds earlier than state-of-the-art systems that do not consider human factors. The system consists of the behavior planner Risk Maps, which directly changes its prediction of the surrounding driving situation based on the sensed driver errors. By checking whether the driver's behavior plan is objectively safe, a more robust and foresighted driver warning is achieved. In simulations of dynamic lane-change and intersection scenarios, we show how the driver's behavior plan can become unsafe given the estimate of driver errors, and we experimentally validate the advantages of considering human factors.

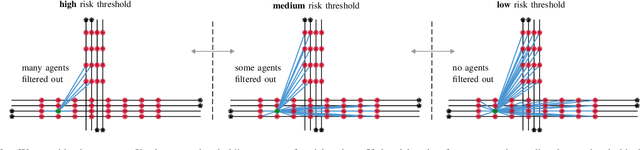

Importance Filtering with Risk Models for Complex Driving Situations

Mar 13, 2023

Abstract: Self-driving cars face complex driving situations with a large number of agents when moving through crowded cities. However, some of these agents do not actually influence the behavior of the self-driving car. Filtering out unimportant agents inherently simplifies the behavior or motion planning task for the system. The planning system can then focus on fewer agents to find optimal behavior solutions for the ego agent. This is especially helpful for computational efficiency. In this paper, we therefore introduce the research topic of importance filtering with driving risk models. We give an overview of state-of-the-art risk models and present newly adapted risk models for filtering. Their capability to filter out unimportant surrounding agents is compared in a large-scale experiment. The results show that the novel trajectory distance balances performance, robustness and efficiency well. Based on the results, we further derive a novel filter architecture with multiple filter steps and recommend risk models for each step to further improve robustness. We are confident that this will enable current behavior planning systems to better handle complex situations in everyday driving.
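The abstract does not specify the risk models being compared. To illustrate the general idea of importance filtering, the sketch below uses a simple stand-in. It predicts every agent with a constant-velocity model, computes the minimum distance to the ego agent over a fixed horizon, and keeps only the agents that come closer than a threshold. The `Agent` class, `min_predicted_distance`, `filter_important`, and the default horizon, time step and threshold values are all hypothetical. This is not the trajectory distance or the filter architecture proposed in the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    """Hypothetical 2D agent state: position (m) and velocity (m/s)."""
    name: str
    x: float
    y: float
    vx: float
    vy: float


def min_predicted_distance(ego, other, horizon=5.0, dt=0.1):
    """Minimum ego-agent distance over the horizon, assuming both move at constant velocity."""
    steps = int(round(horizon / dt))
    best = math.inf
    for k in range(steps + 1):
        t = k * dt
        dx = (other.x + other.vx * t) - (ego.x + ego.vx * t)
        dy = (other.y + other.vy * t) - (ego.y + ego.vy * t)
        best = min(best, math.hypot(dx, dy))
    return best


def filter_important(ego, agents, threshold=5.0, horizon=5.0, dt=0.1):
    """Keep only agents whose predicted minimum distance falls below the threshold (m)."""
    return [a for a in agents if min_predicted_distance(ego, a, horizon, dt) < threshold]


ego = Agent("ego", 0.0, 0.0, 10.0, 0.0)
agents = [
    Agent("A", 30.0, 0.0, 0.0, 0.0),    # stopped ahead in the ego lane
    Agent("B", 0.0, 50.0, 0.0, 0.0),    # far off to the side
    Agent("C", -40.0, 0.0, 10.0, 0.0),  # following at the same speed
]
print([a.name for a in filter_important(ego, agents)])
```

With these values, only agent A reaches the ego agent's predicted path within the horizon, so B and C are filtered out before planning. A real system would replace the constant-velocity distance with a proper risk model.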
