M. Hassan Najafi

AMS-HD: Hyperdimensional Computing for Real-Time and Energy-Efficient Acute Mountain Sickness Detection

Feb 09, 2026

Abstract:Altitude sickness is a potentially life-threatening condition that impacts many individuals traveling to elevated altitudes. Timely detection is critical as symptoms can escalate rapidly. Early recognition enables simple interventions such as descent, oxygen, or medication, and prompt treatment can save lives by significantly lowering the risk of severe complications. Although conventional machine learning (ML) techniques have been applied to identify altitude sickness using physiological signals, such as heart rate, oxygen saturation, respiration rate, blood pressure, and body temperature, they often struggle to balance predictive performance with low hardware demands. In contrast, hyperdimensional computing (HDC) remains under-explored for this task with limited biomedical features, where it may offer a compelling alternative to existing classification models. Its vector symbolic framework is inherently suited to hardware-efficient design, making it a strong candidate for low-power systems like wearables. Leveraging lightweight computation and efficient streamlined memory usage, HDC enables real-time detection of altitude sickness from physiological parameters collected by wearable devices, achieving accuracy comparable to that of traditional ML models. We present AMS-HD, a novel system that integrates tailored feature extraction and Hadamard HV encoding to enhance both the precision and efficiency of HDC-based detection. This framework is well-positioned for deployment in wearable health monitoring platforms, enabling continuous, on-the-go tracking of acute altitude sickness.

TranSC: Hardware-Aware Design of Transcendental Functions Using Stochastic Logic

Jan 12, 2026

Abstract:The hardware-friendly implementation of transcendental functions remains a longstanding challenge in design automation. These functions, which cannot be expressed as finite combinations of algebraic operations, pose significant complexity in digital circuit design. This study introduces a novel approach, TranSC, that utilizes stochastic computing (SC) for lightweight yet accurate implementation of transcendental functions. Building on established SC techniques, our method explores alternative random sources, specifically quasi-random Van der Corput low-discrepancy (LD) sequences, instead of conventional pseudo-randomness. This shift enhances both the accuracy and efficiency of SC-based computations. We validate our approach through extensive experiments on various function types, including trigonometric, hyperbolic, and activation functions. The proposed design approach reduces MSE by up to 98% compared to the state-of-the-art solutions while reducing hardware area, power consumption, and energy usage by 33%, 72%, and 64%, respectively.

Improved Data Encoding for Emerging Computing Paradigms: From Stochastic to Hyperdimensional Computing

Jan 06, 2025

Abstract:Data encoding is a fundamental step in emerging computing paradigms, particularly in stochastic computing (SC) and hyperdimensional computing (HDC), where it plays a crucial role in determining the overall system performance and hardware cost efficiency. This study presents an advanced encoding strategy that leverages a hardware-friendly class of low-discrepancy (LD) sequences, specifically powers-of-2 bases of Van der Corput (VDC) sequences (VDC-2^n), as sources for random number generation. Our approach significantly enhances the accuracy and efficiency of SC and HDC systems by addressing challenges associated with randomness. By employing LD sequences, we improve correlation properties and reduce hardware complexity. Experimental results demonstrate significant improvements in accuracy and energy savings for SC and HDC systems. Our solution provides a robust framework for integrating SC and HDC in resource-constrained environments, paving the way for efficient and scalable AI implementations.
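As a rough illustration of the idea in this abstract, the sketch below generates a Van der Corput sequence in a power-of-2 base and uses it as the comparison source when encoding a value as a unipolar stochastic bit-stream. It is a minimal sketch, not the authors' hardware design: the function names `van_der_corput` and `sc_bitstream` are hypothetical, and the comparator-style encoding (bit is 1 when the input exceeds the sequence sample) is an assumed, commonly used SC scheme.

```python
from fractions import Fraction


def van_der_corput(index: int, base: int = 2) -> Fraction:
    """Radical inverse of `index` in `base`: mirror the digits about the radix point."""
    value = Fraction(0)
    denom = 1
    while index > 0:
        index, digit = divmod(index, base)
        denom *= base
        value += Fraction(digit, denom)
    return value


def sc_bitstream(x, length: int, base: int = 2) -> list:
    """Encode x in [0, 1] as a unipolar bit-stream (assumed comparator scheme).

    Bit i is 1 when x is greater than the i-th Van der Corput sample.
    """
    return [1 if x > van_der_corput(i, base) else 0 for i in range(length)]


# Hypothetical usage: encode 0.25 with a base-4 (2^2) sequence over 16 bits.
stream = sc_bitstream(Fraction(1, 4), length=16, base=4)
estimate = Fraction(sum(stream), len(stream))  # fraction of 1s in the stream
```

Because the first 16 samples of a base-2 or base-4 sequence cover every multiple of 1/16 exactly once, a value that is itself a multiple of 1/16 is encoded without sampling error in this sketch, which is the kind of property that motivates low-discrepancy sources over pseudo-random ones.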

Regional Weather Variable Predictions by Machine Learning with Near-Surface Observational and Atmospheric Numerical Data

Dec 11, 2024

Abstract:Accurate and timely regional weather prediction is vital for sectors dependent on weather-related decisions. Traditional prediction methods, based on atmospheric equations, often struggle with coarse temporal resolutions and inaccuracies. This paper presents a novel machine learning (ML) model, called MiMa (short for Micro-Macro), that integrates both near-surface observational data from Kentucky Mesonet stations (collected every five minutes, known as Micro data) and hourly atmospheric numerical outputs (termed as Macro data) for fine-resolution weather forecasting. The MiMa model employs an encoder-decoder transformer structure, with two encoders for processing multivariate data from both datasets and a decoder for forecasting weather variables over short time horizons. Each instance of the MiMa model, called a modelet, predicts the values of a specific weather parameter at an individual Mesonet station. The approach is extended with Re-MiMa modelets, which are designed to predict weather variables at ungauged locations by training on multivariate data from a few representative stations in a region, tagged with their elevations. Re-MiMa (short for Regional-MiMa) can provide highly accurate predictions across an entire region, even in areas without observational stations. Experimental results show that MiMa significantly outperforms current models, with Re-MiMa offering precise short-term forecasts for ungauged locations, marking a significant advancement in weather forecasting accuracy and applicability.

Sobol Sequence Optimization for Hardware-Efficient Vector Symbolic Architectures

Nov 17, 2023

Abstract:Hyperdimensional computing (HDC) is an emerging computing paradigm with significant promise for efficient and robust learning. In HDC, objects are encoded with high-dimensional vector symbolic sequences called hypervectors. The quality of hypervectors, defined by their distribution and independence, directly impacts the performance of HDC systems. Despite a large body of work on the processing parts of HDC systems, little to no attention has been paid to data encoding and the quality of hypervectors. Most prior studies have generated hypervectors using inherent random functions, such as MATLAB's or Python's random function. This work introduces an optimization technique for generating hypervectors by employing quasi-random sequences. These sequences have recently demonstrated their effectiveness in achieving accurate and low-discrepancy data encoding in stochastic computing systems. The study outlines the optimization steps for utilizing Sobol sequences to produce high-quality hypervectors in HDC systems. An optimization algorithm is proposed to select the most suitable Sobol sequences for generating minimally correlated hypervectors, particularly in applications related to symbol-oriented architectures. The performance of the proposed technique is evaluated in comparison to two traditional approaches of generating hypervectors based on linear-feedback shift registers and MATLAB random function. The evaluation is conducted for two applications: (i) language and (ii) headline classification. Our experimental results demonstrate accuracy improvements of up to 10.79%, depending on the vector size. Additionally, the proposed encoding hardware exhibits reduced energy consumption and a superior area-delay product.

uHD: Unary Processing for Lightweight and Dynamic Hyperdimensional Computing

Nov 16, 2023

Abstract:Hyperdimensional computing (HDC) is a novel computational paradigm that operates on long-dimensional vectors known as hypervectors. The hypervectors are constructed as long bit-streams and form the basic building blocks of HDC systems. In HDC, hypervectors are generated from scalar values without taking their bit significance into consideration. HDC has been shown to be efficient and robust in various data processing applications, including computer vision tasks. To construct HDC models for vision applications, the current state-of-the-art practice utilizes two parameters for data encoding: pixel intensity and pixel position. However, the intensity and position information embedded in high-dimensional vectors are generally not generated dynamically in the HDC models. Consequently, the optimal design of hypervectors with high model accuracy requires powerful computing platforms for training. A more efficient approach to generating hypervectors is to create them dynamically during the training phase, which results in accurate, low-cost, and highly performable vectors. To this aim, we use low-discrepancy sequences to generate intensity hypervectors only, while avoiding position hypervectors. By doing so, the multiplication step in vector encoding is eliminated, resulting in a power-efficient HDC system. For the first time in the literature, our proposed approach employs lightweight vector generators utilizing unary bit-streams for efficient encoding of data instead of using conventional comparator-based generators.

Learning from Hypervectors: A Survey on Hypervector Encoding

Aug 01, 2023

Abstract:Hyperdimensional computing (HDC) is an emerging computing paradigm that imitates the brain's structure to offer a powerful and efficient processing and learning model. In HDC, the data are encoded with long vectors, called hypervectors, typically with a length of 1K to 10K. The literature provides several encoding techniques to generate orthogonal or correlated hypervectors, depending on the intended application. The existing surveys in the literature often focus on the overall aspects of HDC systems, including system inputs, primary computations, and final outputs. However, this study takes a more specific approach. It zeroes in on the HDC system input and the generation of hypervectors, directly influencing the hypervector encoding process. This survey brings together various methods for hypervector generation from different studies and explores the limitations, challenges, and potential benefits they entail. Through a comprehensive exploration of this survey, readers will acquire a profound understanding of various encoding types in HDC and gain insights into the intricate process of hypervector generation for diverse applications.
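To make the notions of orthogonal and correlated hypervectors concrete, here is a minimal sketch using bipolar (+1/-1) vectors drawn from Python's pseudo-random generator. It is an illustration under assumptions, not a method taken from the survey: the helper names (`random_hypervector`, `similarity`, `bind`, `bundle`), the bipolar representation, and the dimension of 10,000 are all choices made here for the example.

```python
import random


def random_hypervector(dim: int, rng: random.Random) -> list:
    """Draw a bipolar hypervector with independent +1/-1 elements."""
    return [rng.choice((-1, 1)) for _ in range(dim)]


def similarity(a: list, b: list) -> float:
    """Normalized dot product; equals cosine similarity for bipolar vectors."""
    return sum(x * y for x, y in zip(a, b)) / len(a)


def bind(a: list, b: list) -> list:
    """Element-wise multiplication; binding twice with the same vector undoes it."""
    return [x * y for x, y in zip(a, b)]


def bundle(vectors: list) -> list:
    """Element-wise majority vote; an odd number of inputs avoids ties."""
    return [1 if sum(column) > 0 else -1 for column in zip(*vectors)]


rng = random.Random(0)  # fixed seed so the run is repeatable
DIM = 10_000            # assumed dimension, within the 1K to 10K range cited above
a, b, c = (random_hypervector(DIM, rng) for _ in range(3))
bundled = bundle([a, b, c])
```

In this sketch, independently drawn vectors such as `a` and `b` have similarity close to zero (nearly orthogonal), while `bundled` stays noticeably similar to each of its three inputs, which is what allows a bundled vector to represent a set.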

TaxoNN: A Light-Weight Accelerator for Deep Neural Network Training

Oct 11, 2020

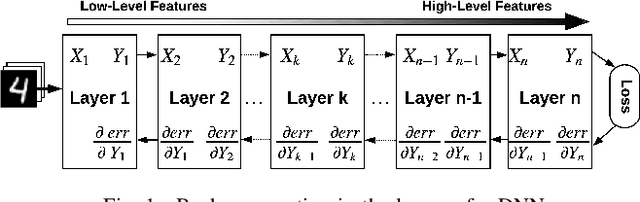

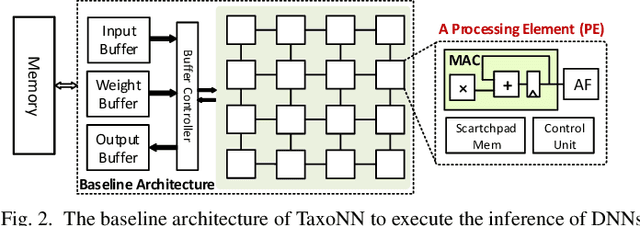

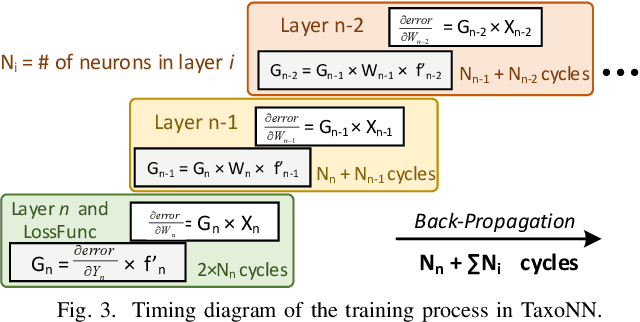

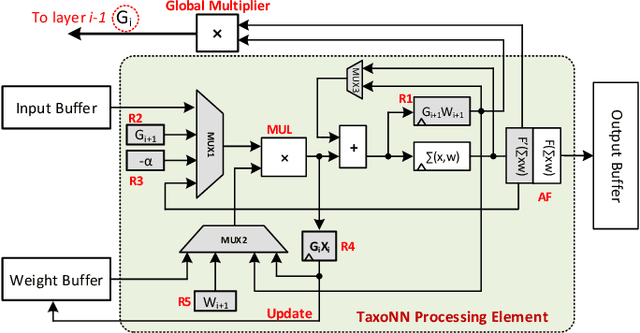

Abstract:Emerging intelligent embedded devices rely on Deep Neural Networks (DNNs) to be able to interact with the real-world environment. This interaction comes with the ability to retrain DNNs, since environmental conditions change continuously in time. Stochastic Gradient Descent (SGD) is a widely used algorithm to train DNNs by optimizing the parameters over the training data iteratively. In this work, first we present a novel approach to add the training ability to a baseline DNN accelerator (inference only) by splitting the SGD algorithm into simple computational elements. Then, based on this heuristic approach we propose TaxoNN, a light-weight accelerator for DNN training. TaxoNN can easily tune the DNN weights by reusing the hardware resources used in the inference process using a time-multiplexing approach and low-bitwidth units. Our experimental results show that TaxoNN delivers, on average, 0.97% higher misclassification rate compared to a full-precision implementation. Moreover, TaxoNN provides 2.1$\times$ power saving and 1.65$\times$ area reduction over the state-of-the-art DNN training accelerator.

* Accepted to ISCAS 2020. 5 pages, 5 figures
