Lukasz Golab

GASTON: Graph-Aware Social Transformer for Online Networks

Jan 26, 2026

Abstract:Online communities have become essential places for socialization and support, yet they also possess toxicity, echo chambers, and misinformation. Detecting this harmful content is difficult because the meaning of an online interaction stems from both what is written (textual content) and where it is posted (social norms). We propose GASTON (Graph-Aware Social Transformer for Online Networks), which learns text and user embeddings that are grounded in their local norms, providing the necessary context for downstream tasks. The heart of our solution is a contrastive initialization strategy that pretrains community embeddings based on user membership patterns, capturing a community's user base before processing any text. This allows GASTON to distinguish between communities (e.g., a support group vs. a hate group) based on who interacts there, even if they share similar vocabulary. Experiments on tasks such as stress detection, toxicity scoring, and norm violation demonstrate that the embeddings produced by GASTON outperform state-of-the-art baselines.

The Alignment Game: A Theory of Long-Horizon Alignment Through Recursive Curation

Nov 16, 2025

Abstract:In self-consuming generative models that train on their own outputs, alignment with user preferences becomes a recursive rather than one-time process. We provide the first formal foundation for analyzing the long-term effects of such recursive retraining on alignment. Under a two-stage curation mechanism based on the Bradley-Terry (BT) model, we model alignment as an interaction between two factions: the Model Owner, who filters which outputs should be learned by the model, and the Public User, who determines which outputs are ultimately shared and retained through interactions with the model. Our analysis reveals three structural convergence regimes depending on the degree of preference alignment: consensus collapse, compromise on shared optima, and asymmetric refinement. We prove a fundamental impossibility theorem: no recursive BT-based curation mechanism can simultaneously preserve diversity, ensure symmetric influence, and eliminate dependence on initialization. Framing the process as dynamic social choice, we show that alignment is not a static goal but an evolving equilibrium, shaped both by power asymmetries and path dependence.
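For readers unfamiliar with the Bradley-Terry model named in the abstract, the sketch below shows its standard pairwise preference probability and one possible pairwise selection step built on it. The function `bt_curate`, its sampling scheme, and the example reward values are illustrative assumptions, not the paper's two-stage mechanism.

```python
import math
import random


def bt_prob(r_i: float, r_j: float) -> float:
    """Bradley-Terry probability that item i is preferred over item j,
    given latent scores r_i and r_j."""
    return math.exp(r_i) / (math.exp(r_i) + math.exp(r_j))


def bt_curate(rewards, rng):
    """Hypothetical curation step (an assumption for illustration):
    draw two distinct candidates at random and keep one of them,
    chosen according to the Bradley-Terry probability."""
    i, j = rng.sample(range(len(rewards)), 2)
    return i if rng.random() < bt_prob(rewards[i], rewards[j]) else j


# Example rewards are made up; higher means more preferred.
example_rewards = [0.0, 1.0, 2.0]
kept = bt_curate(example_rewards, random.Random(0))
```

Repeating such a step over many rounds is what makes the process recursive: each round's retained outputs shape the pool from which the next round samples.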

Rule-Based Explanations for Retrieval-Augmented LLM Systems

Oct 26, 2025

Abstract:If-then rules are widely used to explain machine learning models; e.g., "if employed = no, then loan application = rejected." We present the first proposal to apply rules to explain the emerging class of large language models (LLMs) with retrieval-augmented generation (RAG). Since RAG enables LLM systems to incorporate retrieved information sources at inference time, rules linking the presence or absence of sources can explain output provenance; e.g., "if a Times Higher Education ranking article is retrieved, then the LLM ranks Oxford first." To generate such rules, a brute force approach would probe the LLM with all source combinations and check if the presence or absence of any sources leads to the same output. We propose optimizations to speed up rule generation, inspired by Apriori-like pruning from frequent itemset mining but redefined within the scope of our novel problem. We conclude with qualitative and quantitative experiments demonstrating our solutions' value and efficiency.
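The brute-force baseline described in the abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions: `toy_answer` is a hypothetical stand-in for a RAG-enabled LLM, the source labels `doc_b` and `doc_c` are invented, and the superset check is only a simple minimality filter in the spirit of Apriori, not the paper's optimizations.

```python
from itertools import combinations


def presence_rules(sources, answer, target):
    """Return minimal source sets S such that every retrieved combination
    containing S yields `target`. Brute force: probes all 2^n subsets."""
    sources = list(sources)
    # Enumerate subsets in order of increasing size.
    all_subsets = [frozenset(c)
                   for k in range(len(sources) + 1)
                   for c in combinations(sources, k)]
    outputs = {s: answer(s) for s in all_subsets}
    rules = []
    for cand in all_subsets:
        # Minimality filter: a superset of an existing rule adds nothing.
        if any(rule <= cand for rule in rules):
            continue
        if all(out == target for s, out in outputs.items() if cand <= s):
            rules.append(cand)
    return rules


def toy_answer(retrieved):
    """Hypothetical stand-in for the LLM: ranks Oxford first only when
    the Times Higher Education article ("THE") is among the sources."""
    return "Oxford" if "THE" in retrieved else "other"


rules = presence_rules(["THE", "doc_b", "doc_c"], toy_answer, "Oxford")
```

Here `rules` contains the single rule "if THE is retrieved, then the output is Oxford". The exponential number of probes is what motivates pruning.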

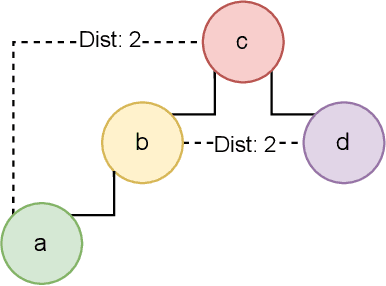

Explaining Expert Search and Team Formation Systems with ExES

May 21, 2024

Abstract:Expert search and team formation systems operate on collaboration networks, with nodes representing individuals, labeled with their skills, and edges denoting collaboration relationships. Given a keyword query corresponding to the desired skills, these systems identify experts that best match the query. However, state-of-the-art solutions to this problem lack transparency. To address this issue, we propose ExES, a tool designed to explain expert search and team formation systems using factual and counterfactual methods from the field of explainable artificial intelligence (XAI). ExES uses factual explanations to highlight important skills and collaborations, and counterfactual explanations to suggest new skills and collaborations to increase the likelihood of being identified as an expert. Towards a practical deployment as an interactive explanation tool, we present and experimentally evaluate a suite of pruning strategies to speed up the explanation search. In many cases, our pruning strategies make ExES an order of magnitude faster than exhaustive search, while still producing concise and actionable explanations.

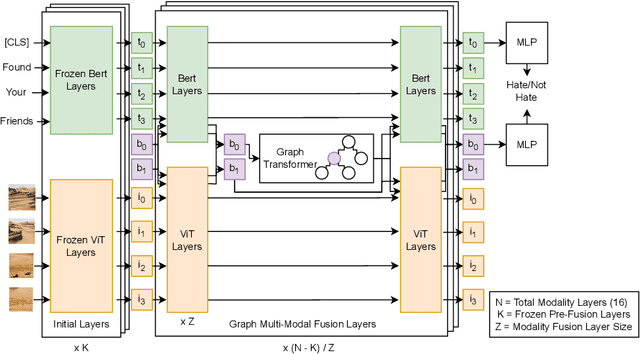

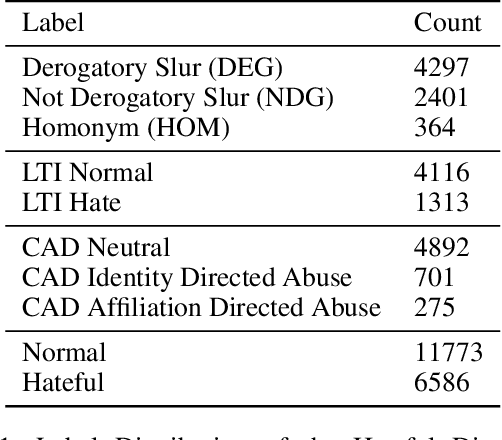

Multi-Modal Discussion Transformer: Integrating Text, Images and Graph Transformers to Detect Hate Speech on Social Media

Jul 18, 2023

Abstract:We present the Multi-Modal Discussion Transformer (mDT), a novel multi-modal graph-based transformer model for detecting hate speech in online social networks. In contrast to traditional text-only methods, our approach to labelling a comment as hate speech centers around the holistic analysis of text and images. This is done by leveraging graph transformers to capture the contextual relationships in the entire discussion that surrounds a comment, with interwoven fusion layers to combine text and image embeddings instead of processing different modalities separately. We compare the performance of our model to baselines that only process text; we also conduct extensive ablation studies. We conclude with future work for multimodal solutions to deliver social value in online contexts, arguing that capturing a holistic view of a conversation greatly advances the effort to detect anti-social behavior.

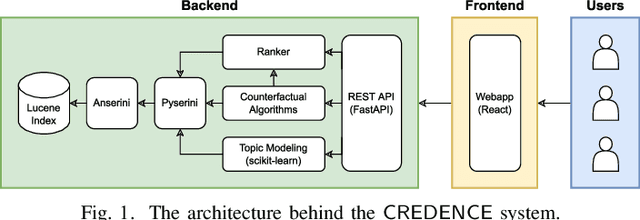

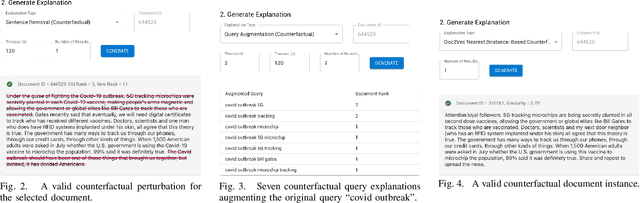

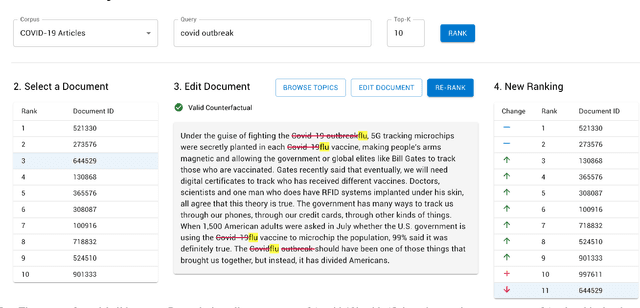

CREDENCE: Counterfactual Explanations for Document Ranking

Feb 10, 2023

Abstract:Towards better explainability in the field of information retrieval, we present CREDENCE, an interactive tool capable of generating counterfactual explanations for document rankers. Embracing the unique properties of the ranking problem, we present counterfactual explanations in terms of document perturbations, query perturbations, and even other documents. Additionally, users may build and test their own perturbations, and extract insights about their query, documents, and ranker.

Qualitative Analysis of a Graph Transformer Approach to Addressing Hate Speech: Adapting to Dynamically Changing Content

Jan 25, 2023

Abstract:Our work advances an approach for predicting hate speech in social media, drawing out the critical need to consider the discussions that follow a post to successfully detect when hateful discourse may arise. Using graph transformer networks, coupled with modelling attention and BERT-level natural language processing, our approach can capture context and anticipate upcoming anti-social behaviour. In this paper, we offer a detailed qualitative analysis of this solution for hate speech detection in social networks, leading to insights into where the method has the most impressive outcomes in comparison with competitors and identifying scenarios where there are challenges to achieving ideal performance. Included is an exploration of the kinds of posts that permeate social media today, including the use of hateful images. This suggests avenues for extending our model to be more comprehensive. A key insight is that the focus on reasoning about the concept of context positions us well to be able to support multi-modal analysis of online posts. We conclude with a reflection on how the problem we are addressing relates especially well to the theme of dynamic change, a critical concern for all AI solutions for social impact. We also comment briefly on how mental health well-being can be advanced with our work, through curated content attuned to the extent of hate in posts.

Predicting Hateful Discussions on Reddit using Graph Transformer Networks and Communal Context

Jan 10, 2023

Abstract:We propose a system to predict harmful discussions on social media platforms. Our solution uses contextual deep language models and proposes the novel idea of integrating state-of-the-art Graph Transformer Networks to analyze all conversations that follow an initial post. This framework also supports adapting to future comments as the conversation unfolds. In addition, we study whether a community-specific analysis of hate speech leads to more effective detection of hateful discussions. We evaluate our approach on 333,487 Reddit discussions from various communities. We find that community-specific modeling improves performance two-fold and that models which capture wider-discussion context improve accuracy by 28% (35% for the most hateful content) compared to limited context models.

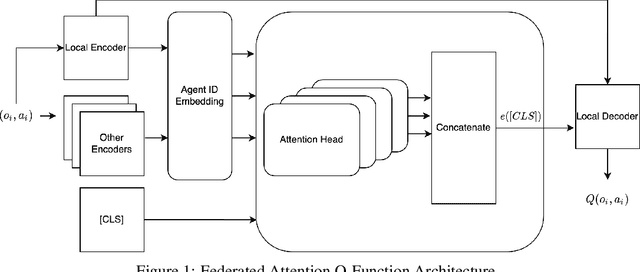

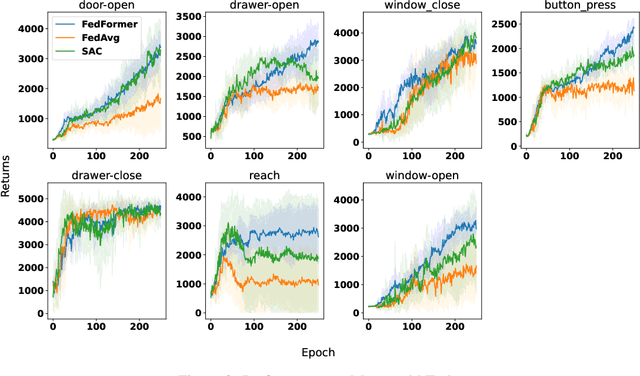

FedFormer: Contextual Federation with Attention in Reinforcement Learning

May 30, 2022

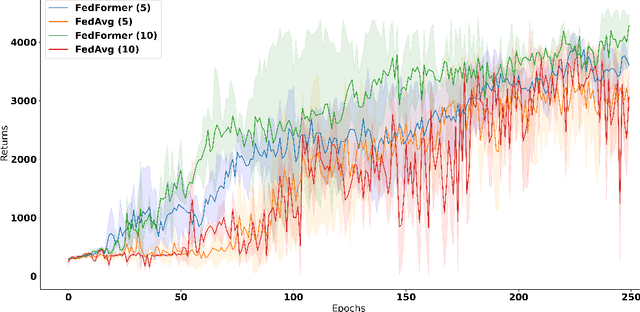

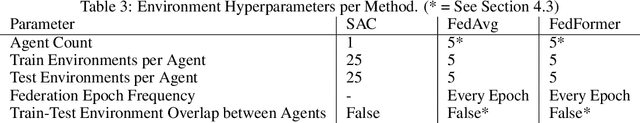

Abstract:A core issue in federated reinforcement learning is defining how to aggregate insights from multiple agents into one. This is commonly done by averaging each participating agent's model weights into one common model (FedAvg). We instead propose FedFormer, a novel federation strategy that utilizes Transformer Attention to contextually aggregate embeddings from models originating from different learner agents. In so doing, we attentively weigh contributions of other agents with respect to the current agent's environment and learned relationships, thus providing more effective and efficient federation. We evaluate our methods on the Meta-World environment and find that our approach yields significant improvements over FedAvg and non-federated Soft Actor-Critic single-agent methods. Our results compared to Soft Actor-Critic show that FedFormer performs better while still abiding by the privacy constraints of federated learning. In addition, we demonstrate nearly linear improvements in effectiveness with increased agent pools in certain tasks. This is contrasted by FedAvg, which fails to make noticeable improvements when scaled.
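To make the contrast in the abstract concrete, the sketch below places plain weight averaging (FedAvg) next to a generic attention-weighted aggregation of agent embeddings. The second function is scaled dot-product attention written out by hand as an illustration; it is an assumption about the general idea, not FedFormer's architecture, and all names and toy values are hypothetical.

```python
import math


def fed_avg(agent_weights):
    """FedAvg: element-wise mean of each named parameter across agents.
    `agent_weights` is a list of dicts mapping name -> list of floats."""
    n = len(agent_weights)
    return {
        name: [sum(vals) / n
               for vals in zip(*(w[name] for w in agent_weights))]
        for name in agent_weights[0]
    }


def attention_aggregate(query, agent_embeddings):
    """Illustrative scaled dot-product attention: weight each agent's
    embedding by its similarity to the current agent's query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * e for q, e in zip(query, emb)) / scale
              for emb in agent_embeddings]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [x / total for x in exps]
    mixed = [sum(w * emb[d] for w, emb in zip(weights, agent_embeddings))
             for d in range(len(query))]
    return mixed, weights


averaged = fed_avg([{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}])
mixed, weights = attention_aggregate([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
```

FedAvg treats every agent identically, whereas the attention weights depend on the query, so an agent whose embedding resembles the current agent's context contributes more.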

GRS: Combining Generation and Revision in Unsupervised Sentence Simplification

Mar 22, 2022

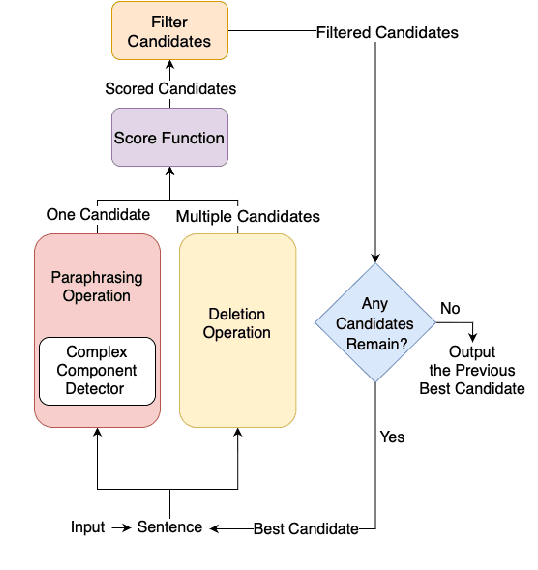

Abstract:We propose GRS: an unsupervised approach to sentence simplification that combines text generation and text revision. We start with an iterative framework in which an input sentence is revised using explicit edit operations, and add paraphrasing as a new edit operation. This allows us to combine the advantages of generative and revision-based approaches: paraphrasing captures complex edit operations, and the use of explicit edit operations in an iterative manner provides controllability and interpretability. We demonstrate these advantages of GRS compared to existing methods on the Newsela and ASSET datasets.
