Luca Canzian

Performance Limits for Signals of Opportunity-Based Navigation

Jul 23, 2024

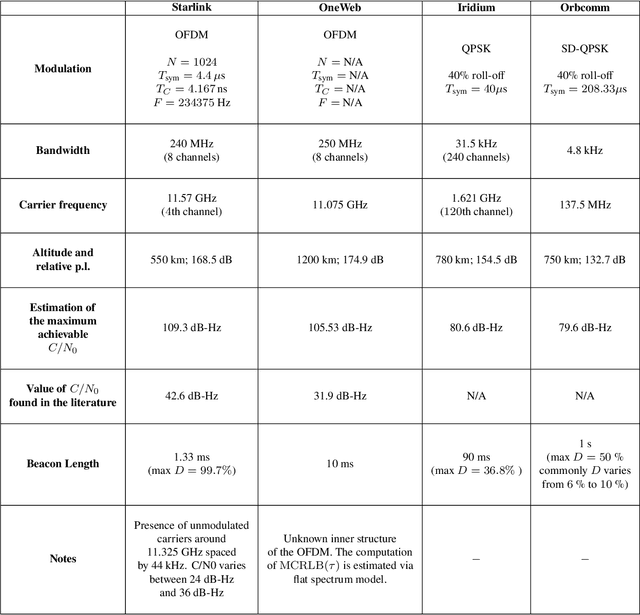

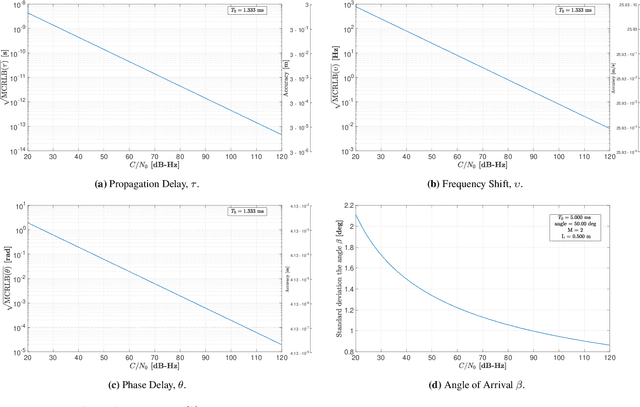

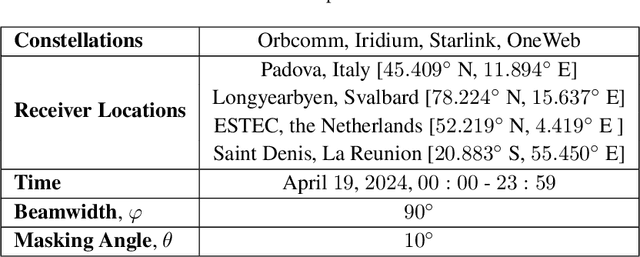

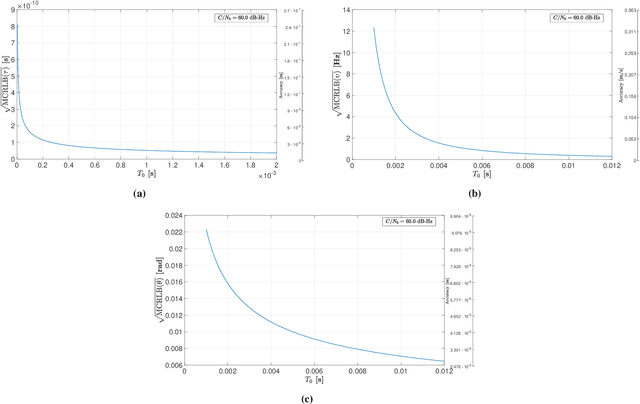

Abstract: This paper investigates the potential of non-terrestrial and terrestrial signals of opportunity (SOOP) for navigation applications. The non-terrestrial SOOP analysis employs the modified Cramér-Rao lower bound (MCRLB) to establish a relationship between SOOP characteristics and the accuracy of ranging information. This approach evaluates hybrid navigation module performance without direct signal simulation. The MCRLB is computed for ranging accuracy, considering factors such as propagation delay, frequency offset, phase offset, and angle-of-arrival (AOA), across diverse non-terrestrial SOOP candidates. Additionally, Geometric Dilution of Precision (GDOP) and low Earth orbit (LEO) SOOP availability are assessed. Validation involves comparing MCRLB predictions with ranging measurements obtained in a realistic simulated scenario. Furthermore, a qualitative evaluation examines terrestrial SOOP, considering signal availability, attainable accuracy, and infrastructure demands.
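To make the GDOP term in the abstract concrete, here is a minimal sketch of the textbook definition, GDOP = sqrt(trace((HᵀH)⁻¹)), where each row of H holds the unit line-of-sight vector to one transmitter plus a clock-bias column of ones. The function names `invert` and `gdop` and the example coordinates are assumptions made for illustration; this is not the paper's code or its scenario.

```python
import math

def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("geometry matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def gdop(receiver, transmitters):
    """GDOP for a 3D position plus clock-bias solution (needs >= 4 transmitters)."""
    rows = []
    for tx in transmitters:
        diff = [t - r for t, r in zip(tx, receiver)]
        dist = math.sqrt(sum(d * d for d in diff))
        rows.append([d / dist for d in diff] + [1.0])  # [unit LOS vector, clock term]
    normal = [[sum(row[i] * row[j] for row in rows) for j in range(4)]
              for i in range(4)]  # H^T H
    cov = invert(normal)
    return math.sqrt(sum(cov[i][i] for i in range(4)))

# Hypothetical geometries (arbitrary units): well spread vs. clustered near zenith.
spread = [(0, 0, 1), (1, 0, 0), (-0.5, 0.866, 0), (-0.5, -0.866, 0)]
clustered = [(0, 0, 1), (0.1, 0, 1), (0, 0.1, 1), (-0.1, -0.1, 1)]
gdop_spread = gdop((0, 0, 0), spread)
gdop_clustered = gdop((0, 0, 0), clustered)
```

A lower value means the same ranging error translates into a smaller position error, so the well-spread geometry should score lower than the clustered one.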

Ensemble of Distributed Learners for Online Classification of Dynamic Data Streams

Aug 24, 2013

Abstract: We present an efficient distributed online learning scheme to classify data captured from distributed, heterogeneous, and dynamic data sources. Our scheme consists of multiple distributed local learners that analyze different streams of data correlated to a common event that needs to be classified. Each learner uses a local classifier to make a local prediction. The local predictions are then collected by each learner and combined using a weighted majority rule to output the final prediction. We propose a novel online ensemble learning algorithm to update the aggregation rule in order to adapt to the underlying data dynamics. We rigorously determine a bound for the worst-case misclassification probability of our algorithm, which depends on the misclassification probabilities of the best static aggregation rule and of the best local classifier. Importantly, the worst-case misclassification probability of our algorithm tends asymptotically to 0 if the misclassification probability of the best static aggregation rule or the misclassification probability of the best local classifier tends to 0. We then extend our algorithm to address challenges specific to the distributed implementation, and we prove new bounds that apply to these settings. Finally, we test our scheme by performing an evaluation study on several data sets. When applied to data sets widely used in the literature dealing with dynamic data streams and concept drift, our scheme exhibits performance gains ranging from 34% to 71% with respect to state-of-the-art solutions.
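The weighted majority aggregation described in the abstract can be illustrated with a short sketch. The abstract does not specify the update rule, so the mistake-driven, perceptron-style update below is an assumption chosen for illustration, as are the names `aggregate` and `update` and the toy stream; labels and local predictions are taken to be in {-1, +1}.

```python
def aggregate(weights, local_predictions):
    """Weighted majority vote over local predictions in {-1, +1}."""
    score = sum(w * p for w, p in zip(weights, local_predictions))
    return 1 if score >= 0 else -1

def update(weights, local_predictions, true_label):
    """Perceptron-style update (assumed): change weights only after a mistake."""
    if aggregate(weights, local_predictions) == true_label:
        return list(weights)
    return [w + true_label * p for w, p in zip(weights, local_predictions)]

# Toy stream: learner 0 is always right, learners 1 and 2 are always wrong.
weights = [0, 0, 0]
for label in [-1, 1, -1, 1]:
    predictions = [label, -label, -label]
    weights = update(weights, predictions, label)
```

After the first mistake, the weights of the two consistently wrong learners turn negative, so their votes are effectively inverted and the remaining samples are classified correctly.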
