Louis Tay

Understanding Critical Thinking in Generative Artificial Intelligence Use: Development, Validation, and Correlates of the Critical Thinking in AI Use Scale

Dec 13, 2025

Abstract: Generative AI tools are increasingly embedded in everyday work and learning, yet their fluency, opacity, and propensity to hallucinate mean that users must critically evaluate AI outputs rather than accept them at face value. The present research conceptualises critical thinking in AI use as a dispositional tendency to verify the source and content of AI-generated information, to understand how models work and where they fail, and to reflect on the broader implications of relying on AI. Across six studies (N = 1365), we developed and validated the 13-item critical thinking in AI use scale and mapped its nomological network. Study 1 generated and content-validated scale items. Study 2 supported a three-factor structure (Verification, Motivation, and Reflection). Studies 3, 4, and 5 confirmed this higher-order model, demonstrated internal consistency and test-retest reliability, strong factor loadings, sex invariance, and convergent and discriminant validity. Studies 3 and 4 further revealed that critical thinking in AI use was positively associated with openness, extraversion, positive trait affect, and frequency of AI use. Lastly, Study 6 demonstrated criterion validity of the scale, with higher critical thinking in AI use scores predicting more frequent and diverse verification strategies, greater veracity-judgement accuracy in a novel and naturalistic ChatGPT-powered fact-checking task, and deeper reflection about responsible AI. Taken together, the current work clarifies why and how people exercise oversight over generative AI outputs and provides a validated scale and ecologically grounded task paradigm to support theory testing, cross-group, and longitudinal research on critical engagement with generative AI outputs.

Robust Bias Detection in MLMs and its Application to Human Trait Ratings

Feb 21, 2025

Abstract: There has been significant prior work using templates to study bias against demographic attributes in MLMs. However, these have limitations: they overlook random variability of templates and target concepts analyzed, assume equality amongst templates, and overlook bias quantification. Addressing these, we propose a systematic statistical approach to assess bias in MLMs, using mixed models to account for random effects, pseudo-perplexity weights for sentences derived from templates and quantify bias using statistical effect sizes. Replicating prior studies, we match on bias scores in magnitude and direction with small to medium effect sizes. Next, we explore the novel problem of gender bias in the context of *personality* and *character* traits, across seven MLMs (base and large). We find that MLMs vary; ALBERT is unbiased for binary gender but the most biased for non-binary *neo*, while RoBERTa-large is the most biased for binary gender but shows small to no bias for *neo*. There is some alignment of MLM bias and findings in psychology (human perspective) - in *agreeableness* with RoBERTa-large and *emotional stability* with BERT-large. There is general agreement for the remaining 3 personality dimensions: both sides observe at most small differences across gender. For character traits, human studies on gender bias are limited thus comparisons are not feasible.

Whither Bias Goes, I Will Go: An Integrative, Systematic Review of Algorithmic Bias Mitigation

Oct 21, 2024

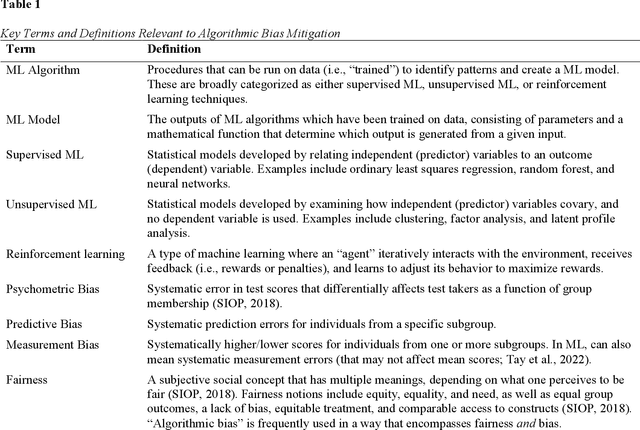

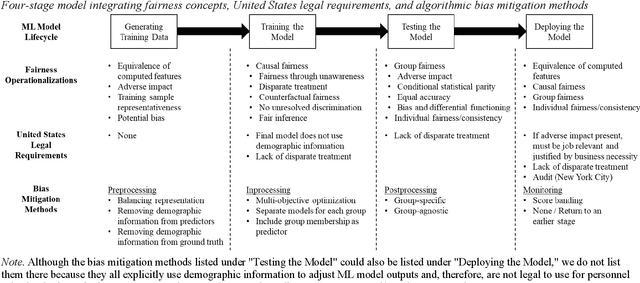

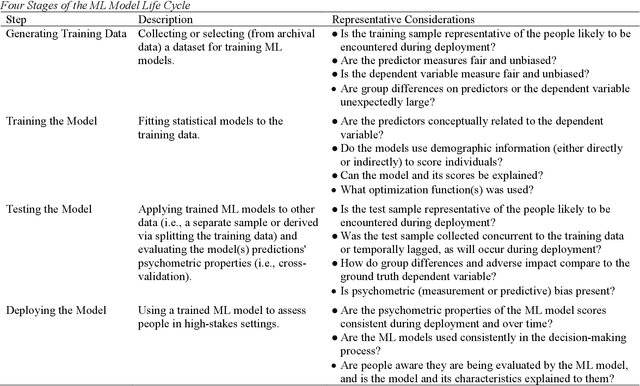

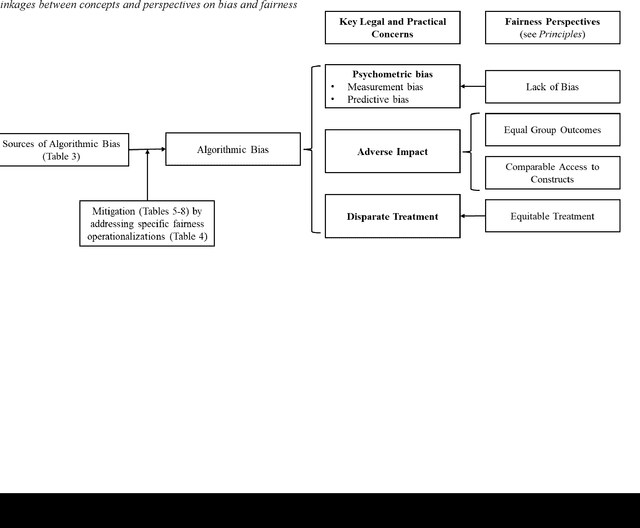

Abstract: Machine learning (ML) models are increasingly used for personnel assessment and selection (e.g., resume screeners, automatically scored interviews). However, concerns have been raised throughout society that ML assessments may be biased and perpetuate or exacerbate inequality. Although organizational researchers have begun investigating ML assessments from traditional psychometric and legal perspectives, there is a need to understand, clarify, and integrate fairness operationalizations and algorithmic bias mitigation methods from the computer science, data science, and organizational research literatures. We present a four-stage model of developing ML assessments and applying bias mitigation methods, including 1) generating the training data, 2) training the model, 3) testing the model, and 4) deploying the model. When introducing the four-stage model, we describe potential sources of bias and unfairness at each stage. Then, we systematically review definitions and operationalizations of algorithmic bias, legal requirements governing personnel selection from the United States and Europe, and research on algorithmic bias mitigation across multiple domains and integrate these findings into our framework. Our review provides insights for both research and practice by elucidating possible mechanisms of algorithmic bias while identifying which bias mitigation methods are legal and effective. This integrative framework also reveals gaps in the knowledge of algorithmic bias mitigation that should be addressed by future collaborative research between organizational researchers, computer scientists, and data scientists. We provide recommendations for developing and deploying ML assessments, as well as recommendations for future research into algorithmic bias and fairness.

Language-based Valence and Arousal Expressions between the United States and China: a Cross-Cultural Examination

Jan 11, 2024

Abstract: Although affective expressions of individuals have been extensively studied using social media, research has primarily focused on the Western context. There are substantial differences among cultures that contribute to their affective expressions. This paper examines the differences between Twitter (X) in the United States and Sina Weibo posts in China on two primary dimensions of affect - valence and arousal. We study the difference in the functional relationship between arousal and valence (so-called V-shaped) among individuals in the US and China and explore the associated content differences. Furthermore, we correlate word usage and topics in both platforms to interpret their differences. We observe that for Twitter users, the variation in emotional intensity is less distinct between negative and positive emotions compared to Weibo users, and there is a sharper escalation in arousal corresponding with heightened emotions. From language features, we discover that affective expressions are associated with personal life and feelings on Twitter, while on Weibo such discussions are about socio-political topics in the society. These results suggest a West-East difference in the V-shaped relationship between valence and arousal of affective expressions on social media influenced by content differences. Our findings have implications for applications and theories related to cultural differences in affective expressions.

Integrating Psychometrics and Computing Perspectives on Bias and Fairness in Affective Computing: A Case Study of Automated Video Interviews

May 04, 2023

Abstract: We provide a psychometric-grounded exposition of bias and fairness as applied to a typical machine learning pipeline for affective computing. We expand on an interpersonal communication framework to elucidate how to identify sources of bias that may arise in the process of inferring human emotions and other psychological constructs from observed behavior. Various methods and metrics for measuring fairness and bias are discussed along with pertinent implications within the United States legal context. We illustrate how to measure some types of bias and fairness in a case study involving automatic personality and hireability inference from multimodal data collected in video interviews for mock job applications. We encourage affective computing researchers and practitioners to encapsulate bias and fairness in their research processes and products and to consider their role, agency, and responsibility in promoting equitable and just systems.

* 21 pages, 4 figures

Studying Cultural Differences in Emoji Usage across the East and the West

Apr 04, 2019

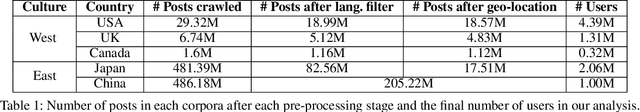

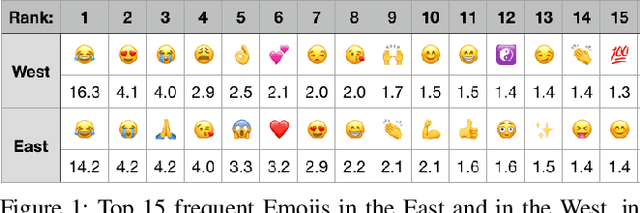

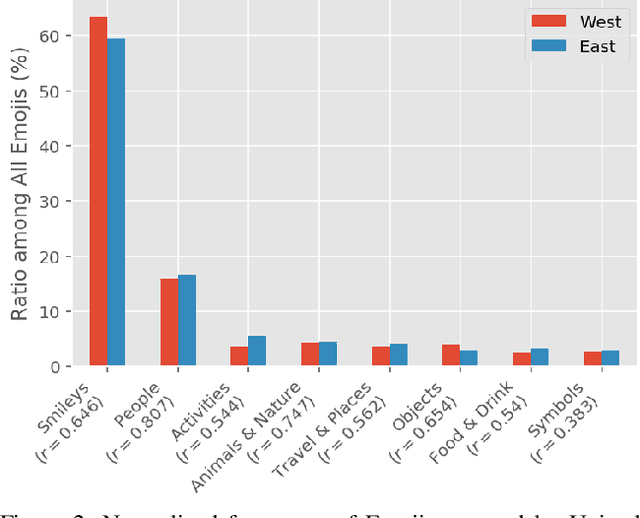

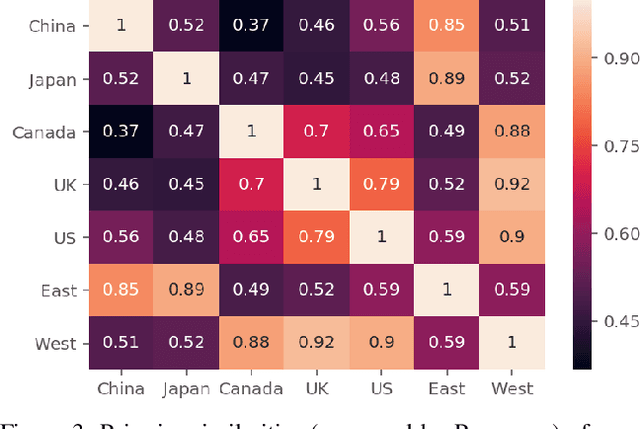

Abstract: Global acceptance of Emojis suggests a cross-cultural, normative use of Emojis. Meanwhile, nuances in Emoji use across cultures may also exist due to linguistic differences in expressing emotions and diversity in conceptualizing topics. Indeed, literature in cross-cultural psychology has found both normative and culture-specific ways in which emotions are expressed. In this paper, using social media, we compare the Emoji usage based on frequency, context, and topic associations across countries in the East (China and Japan) and the West (United States, United Kingdom, and Canada). Across the East and the West, our study examines a) similarities and differences on the usage of different categories of Emojis such as People, Food & Drink, Travel & Places, etc., b) potential mapping of Emoji use differences with previously identified cultural differences in users' expression about diverse concepts such as death, money emotions and family, and c) relative correspondence of validated psycho-linguistic categories with Ekman's emotions. The analysis of Emoji use in the East and the West reveals recognizable normative and culture-specific patterns. This research reveals the ways in which Emojis can be used for cross-cultural communication.
