Logan Cummins

AAD-LLM: Adaptive Anomaly Detection Using Large Language Models

Nov 01, 2024

Abstract: For data-constrained, complex, and dynamic industrial environments, there is a critical need for transferable and multimodal methodologies to enhance anomaly detection and, therefore, prevent costs associated with system failures. Typically, traditional predictive maintenance (PdM) approaches are not transferable or multimodal. This work examines the use of Large Language Models (LLMs) for anomaly detection in complex and dynamic manufacturing systems. The research aims to improve the transferability of anomaly detection models by leveraging LLMs and seeks to validate the enhanced effectiveness of the proposed approach in data-sparse industrial applications. The research also seeks to enable more collaborative decision-making between the model and plant operators by allowing input series data to be enriched with semantics. Additionally, the research aims to address the issue of concept drift in dynamic industrial settings by integrating an adaptability mechanism. The literature review examines the latest developments in LLM time series tasks alongside associated adaptive anomaly detection methods to establish a robust theoretical framework for the proposed architecture. This paper presents a novel model framework (AAD-LLM) that is multimodal and doesn't require any training or finetuning on the dataset it is applied to. Results suggest that anomaly detection can be converted into a "language" task to deliver effective, context-aware detection in data-constrained industrial applications. This work, therefore, contributes significantly to advancements in anomaly detection methodologies.

Explainable Anomaly Detection: Counterfactual driven What-If Analysis

Aug 21, 2024

Abstract: There exist three main areas of study within the field of predictive maintenance: anomaly detection, fault diagnosis, and remaining useful life prediction. Notably, anomaly detection alerts the stakeholder that an anomaly is occurring. This raises two fundamental questions: what is causing the fault and how can we fix it? Within the field of explainable artificial intelligence, counterfactual explanations can give that information in the form of what changes to make to put the data point into the opposing class, in this case "healthy". The suggestions are not always actionable, which may raise interest in asking "what if we do this instead?" In this work, we provide a proof of concept for utilizing counterfactual explanations as what-if analysis. We perform this on the PRONOSTIA dataset with a temporal convolutional network as the anomaly detector. Our method presents the counterfactuals in the form of a what-if analysis for this base problem to inspire future work for more complex systems and scenarios.

A Survey of Transformer Enabled Time Series Synthesis

Jun 04, 2024

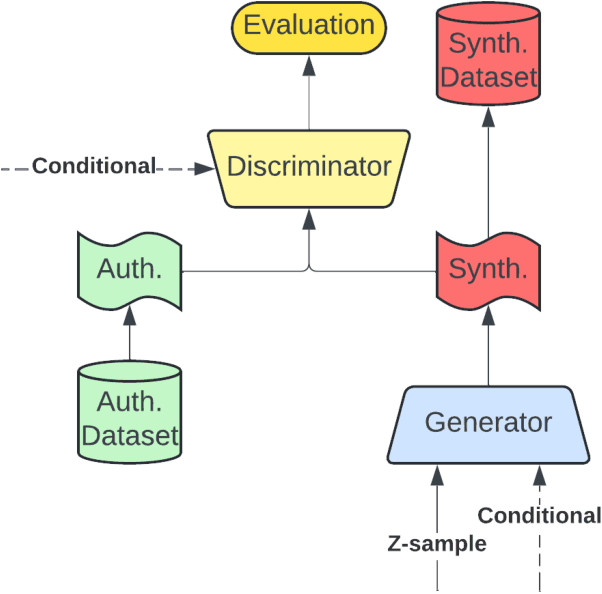

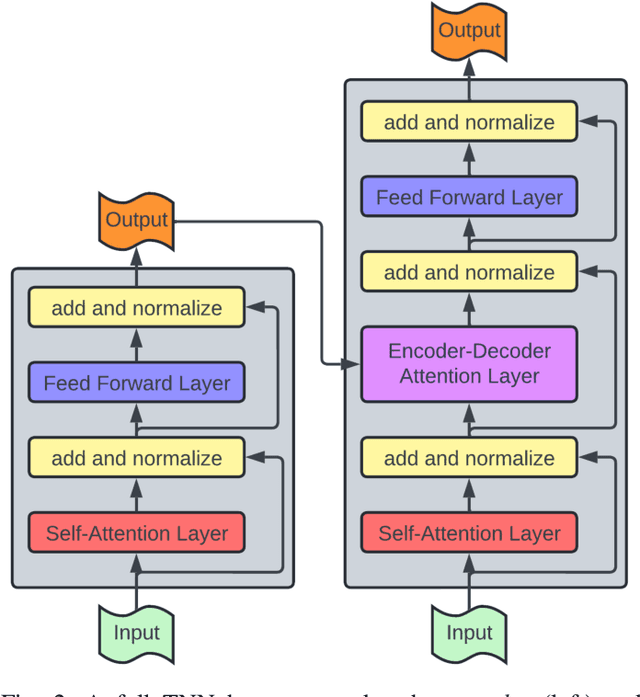

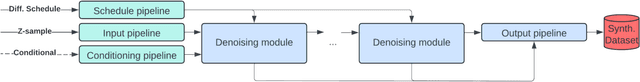

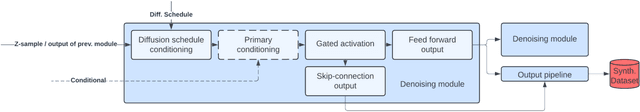

Abstract: Generative AI has received much attention in the image and language domains, with the transformer neural network continuing to dominate the state of the art. Application of these models to time series generation is less explored, however, and is of great utility to machine learning, privacy preservation, and explainability research. The present survey identifies this gap at the intersection of the transformer, generative AI, and time series data, and reviews works in this sparsely populated subdomain. The reviewed works show great variety in approach, and have not yet converged on a conclusive answer to the problems the domain poses. GANs, diffusion models, state space models, and autoencoders were all encountered alongside or surrounding the transformers which originally motivated the survey. While too open a domain to offer conclusive insights, the works surveyed are quite suggestive, and several recommendations for best practice, and suggestions of valuable future work, are provided.

Generating Synthetic Time Series Data for Cyber-Physical Systems

Apr 12, 2024

Abstract: Data augmentation is an important facilitator of deep learning applications in the time series domain. A gap is identified in the literature, demonstrating sparse exploration of the transformer, the dominant sequence model, for data augmentation in time series. An architecture hybridizing several successful priors is put forth and tested using a powerful time domain similarity metric. Results indicate how challenging this domain is and suggest several valuable directions for future work.

Explainable Predictive Maintenance: A Survey of Current Methods, Challenges and Opportunities

Jan 15, 2024

Abstract: Predictive maintenance is a well-studied collection of techniques that aims to prolong the life of a mechanical system by using artificial intelligence and machine learning to predict the optimal time to perform maintenance. The methods allow maintainers of systems and hardware to reduce financial and time costs of upkeep. As these methods are adopted for more serious and potentially life-threatening applications, the human operators need to trust the predictive system. This attracts the field of Explainable AI (XAI) to introduce explainability and interpretability into the predictive system. XAI brings methods to the field of predictive maintenance that can amplify users' trust while maintaining well-performing systems. This survey on explainable predictive maintenance (XPM) discusses and presents the current methods of XAI as applied to predictive maintenance while following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) 2020 guidelines. We categorize the different XPM methods into groups that follow the XAI literature. Additionally, we include current challenges and a discussion on future research directions in XPM.
