Lisa Brown

Moments in Time Dataset: one million videos for event understanding

Jan 09, 2018

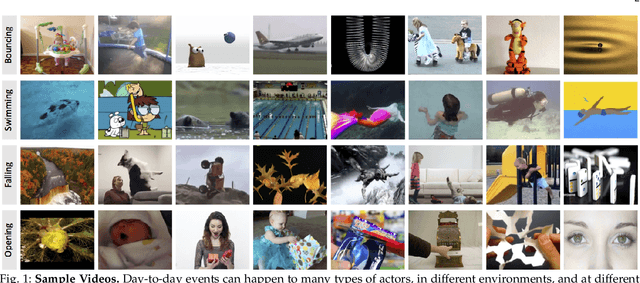

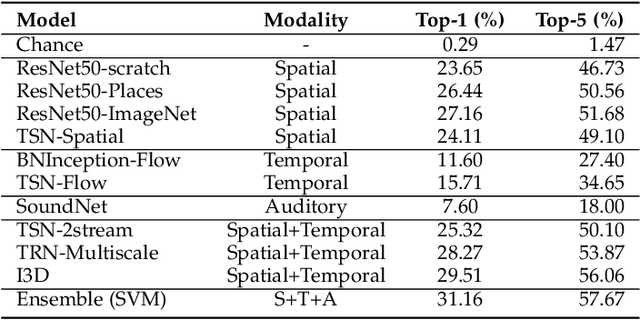

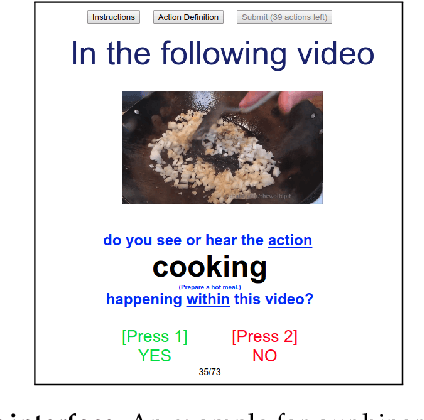

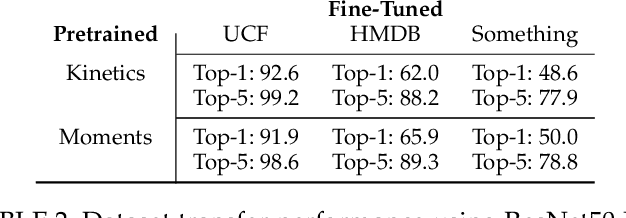

Abstract: We present the Moments in Time Dataset, a large-scale human-annotated collection of one million short videos corresponding to dynamic events unfolding within three seconds. Modeling the spatial-audio-temporal dynamics even for actions occurring in 3-second videos poses many challenges: meaningful events do not include only people, but also objects, animals, and natural phenomena; visual and auditory events can be symmetrical or not in time ("opening" means "closing" in reverse order), and transient or sustained. We describe the annotation process of our dataset (each video is tagged with one action or activity label among 339 different classes), analyze its scale and diversity in comparison to other large-scale video datasets for action recognition, and report results of several baseline models addressing separately and jointly three modalities: spatial, temporal and auditory. The Moments in Time dataset, designed to have a large coverage and diversity of events in both visual and auditory modalities, can serve as a new challenge to develop models that scale to the level of complexity and abstract reasoning that a human processes on a daily basis.

A Data-Driven Approach to Pre-Operative Evaluation of Lung Cancer Patients

Jul 21, 2017

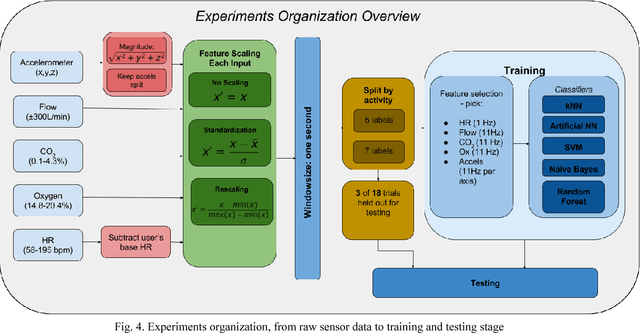

Abstract: Lung cancer is the number one cause of cancer deaths. Many early stage lung cancer patients have resectable tumors; however, their cardiopulmonary function needs to be properly evaluated before they are deemed operative candidates. Consequently, a subset of such patients is asked to undergo standard pulmonary function tests, such as cardiopulmonary exercise tests (CPET) or stair climbs, to have their pulmonary function evaluated. The standard tests are expensive, labor intensive, and sometimes ineffective due to co-morbidities, such as limited mobility. Recovering patients would benefit greatly from a device that can be worn at home, is simple to use, and is relatively inexpensive. Using advances in information technology, the goal is to design a continuous, inexpensive, mobile and patient-centric mechanism for evaluation of a patient's pulmonary function. A light mobile mask is designed, fitted with CO2, O2, flow volume, and accelerometer sensors, and tested on 18 subjects performing 15-minute exercises. The data collected from the device is stored in a cloud service, and machine learning algorithms are trained on it to predict a user's activity. Several classification techniques are compared: K Nearest Neighbor, Random Forest, Support Vector Machine, Artificial Neural Network, and Naive Bayes. One useful area of interest involves using the classification models to compare a patient's predicted activity levels, especially those predicted from breath data alone, to those of a normal person.
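
As a rough illustration of the activity-classification step, the sketch below implements one of the compared techniques, K Nearest Neighbor, in plain Python. Everything about the data is an assumption made for illustration: the two features (standing in for normalized breath-flow and accelerometer readings), the three activity labels, and the synthetic samples are hypothetical and are not taken from the study.

```python
import math
import random
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Return the majority label among the k training points nearest to x."""
    nearest = sorted(
        zip(train_X, train_y),
        key=lambda pair: math.dist(pair[0], x),  # Euclidean distance
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def make_synthetic(n_per_class, seed=0):
    """Generate hypothetical 2-D feature vectors clustered per activity.

    The features and cluster centers are invented for this sketch; they do
    not come from the mask sensors described in the abstract.
    """
    rng = random.Random(seed)
    centers = {"rest": (0.3, 0.2), "walk": (0.6, 0.5), "climb": (0.9, 0.8)}
    X, y = [], []
    for label, (cx, cy) in centers.items():
        for _ in range(n_per_class):
            X.append((rng.gauss(cx, 0.05), rng.gauss(cy, 0.05)))
            y.append(label)
    return X, y


# Train and evaluate on separately seeded synthetic samples.
train_X, train_y = make_synthetic(30, seed=0)
test_X, test_y = make_synthetic(10, seed=1)
predictions = [knn_predict(train_X, train_y, x) for x in test_X]
accuracy = sum(p == t for p, t in zip(predictions, test_y)) / len(test_y)
print(f"k-NN accuracy on synthetic data: {accuracy:.2f}")
```

The same train-and-evaluate loop could be repeated with other classifiers to produce the kind of comparison the abstract mentions; the accuracy printed here reflects only the artificial, well-separated clusters.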
