Soheil Ghiasi

Deep Harmonic Finesse: Signal Separation in Wearable Systems with Limited Data

Jul 01, 2024

Abstract: We present a method, referred to as Deep Harmonic Finesse (DHF), for separation of non-stationary quasi-periodic signals when limited data is available. The problem frequently arises in wearable systems in which a combination of quasi-periodic physiological phenomena gives rise to the sensed signal, and excessive data collection is prohibitive. Our approach utilizes prior knowledge of time-frequency patterns in the signals to mask and in-paint spectrograms. This is achieved through an application-inspired deep harmonic neural network coupled with an integrated pattern alignment component. The network's structure embeds the implicit harmonic priors within the time-frequency domain, while the pattern-alignment method transforms the sensed signal, ensuring a strong alignment with the network. The effectiveness of the algorithm is demonstrated in the context of non-invasive fetal monitoring using both synthesized and in vivo data. When applied to the synthesized data, our method exhibits significant improvements in signal-to-distortion ratio (26% on average) and mean squared error (80% on average), compared to the best competing method. When applied to in vivo data captured in pregnant animal studies, our method improves the correlation error between estimated fetal blood oxygen saturation and the ground truth by 80.5% compared to the state of the art.

* Presented at the 61st ACM/IEEE Design Automation Conference (DAC '24)

Distill-Net: Application-Specific Distillation of Deep Convolutional Neural Networks for Resource-Constrained IoT Platforms

Dec 16, 2018

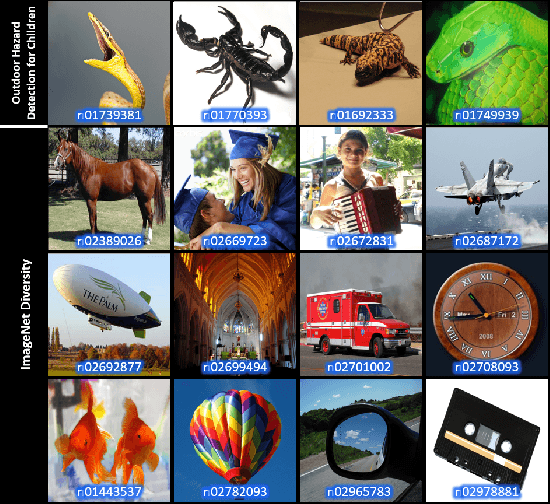

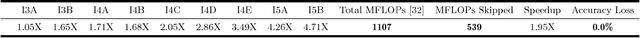

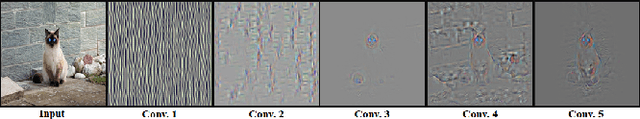

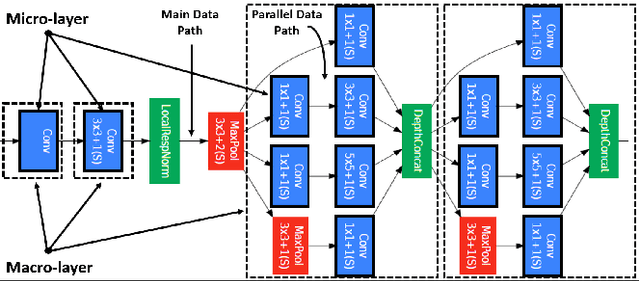

Abstract: Many Internet-of-Things (IoT) applications demand fast and accurate understanding of a few key events in their surrounding environment. Deep Convolutional Neural Networks (CNNs) have emerged as an effective approach to understanding speech, images, and similar high-dimensional data types. Algorithmic performance of modern CNNs, however, fundamentally relies on learning class-agnostic hierarchical features that only exist in comprehensive training datasets with many classes. As a result, fast inference using CNNs trained on such datasets is prohibitive for most resource-constrained IoT platforms. To bridge this gap, we present a principled and practical methodology for distilling a complex modern CNN, trained to effectively recognize many different classes of input data, into an application-dependent essential core that not only recognizes the few classes of interest to the application accurately, but also runs efficiently on platforms with limited resources. Experimental results confirm that our approach strikes a favorable balance between classification accuracy (application constraint), inference efficiency (platform constraint), and productive development of new applications (business constraint).

Resource-Scalable CNN Synthesis for IoT Applications

Dec 16, 2018

Abstract: State-of-the-art image recognition systems use sophisticated Convolutional Neural Networks (CNNs) that are designed and trained to identify numerous object classes. Such networks are fairly resource intensive to compute, prohibiting their deployment on resource-constrained embedded platforms. On one hand, the ability to classify an exhaustive list of categories is excessive for the demands of most IoT applications. On the other hand, designing a custom CNN for each new IoT application is impractical, due to the inherent difficulty in developing competitive models and time-to-market pressure. To address this problem, we investigate the question: "Can one utilize an existing optimized CNN model to automatically build a competitive CNN for an IoT application whose objects of interest are a fraction of the categories that the original CNN was designed to classify, such that the resource requirement is proportionally scaled down?" We use the term resource scalability to refer to this concept, and develop a methodology for automated synthesis of resource-scalable CNNs from an existing optimized baseline CNN. The synthesized CNN has sufficient learning capacity for handling the given IoT application requirements, and yields competitive accuracy. The proposed approach is fast, and unlike the presently common practice of CNN design, does not require iterative rounds of training trial and error.

A Data-Driven Approach to Pre-Operative Evaluation of Lung Cancer Patients

Jul 21, 2017

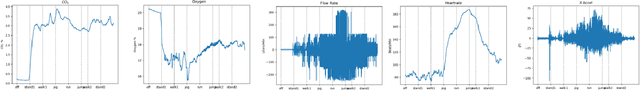

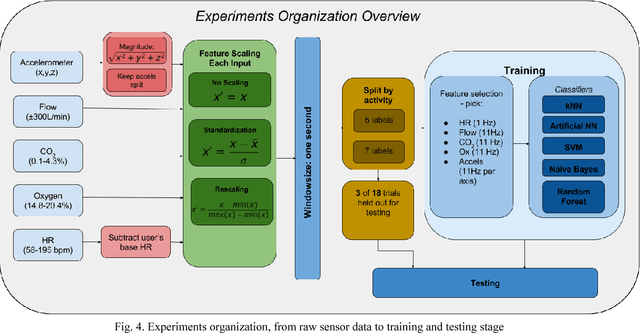

Abstract: Lung cancer is the number one cause of cancer deaths. Many early-stage lung cancer patients have resectable tumors; however, their cardiopulmonary function needs to be properly evaluated before they are deemed operative candidates. Consequently, a subset of such patients is asked to undergo standard pulmonary function tests, such as cardiopulmonary exercise tests (CPET) or stair climbs, to have their pulmonary function evaluated. The standard tests are expensive, labor intensive, and sometimes ineffective due to co-morbidities, such as limited mobility. Recovering patients would benefit greatly from a device that can be worn at home, is simple to use, and is relatively inexpensive. Using advances in information technology, the goal is to design a continuous, inexpensive, mobile and patient-centric mechanism for evaluation of a patient's pulmonary function. A light mobile mask is designed, fitted with CO2, O2, flow volume, and accelerometer sensors, and tested on 18 subjects performing 15-minute exercises. The data collected from the device is stored in a cloud service, and machine learning algorithms are used to train and predict a user's activity. Several classification techniques are compared: K-Nearest Neighbor, Random Forest, Support Vector Machine, Artificial Neural Network, and Naive Bayes. One useful area of interest involves using the classification models to compare a patient's predicted activity levels, especially those predicted from breath data alone, to those of a normal person.

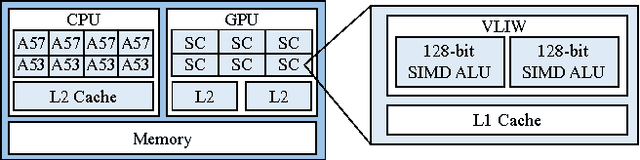

Fast and Energy-Efficient CNN Inference on IoT Devices

Nov 22, 2016

Abstract: Convolutional Neural Networks (CNNs) exhibit remarkable performance in various machine learning tasks. As sensor-equipped Internet of Things (IoT) devices permeate every aspect of modern life, it is increasingly important to run CNN inference, a computationally intensive application, on resource-constrained devices. We present a technique for fast and energy-efficient CNN inference on mobile SoC platforms, which are projected to be a major player in the IoT space. We propose techniques for efficient parallelization of CNN inference targeting mobile GPUs, and explore the underlying tradeoffs. Experiments with running SqueezeNet on three different mobile devices confirm the effectiveness of our approach. For further study, please refer to the project repository available on our GitHub page: https://github.com/mtmd/Mobile_ConvNet

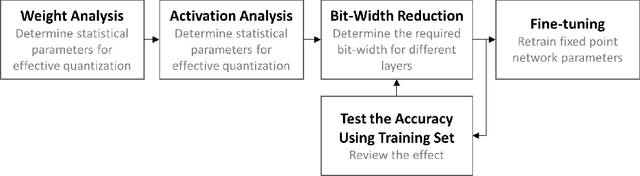

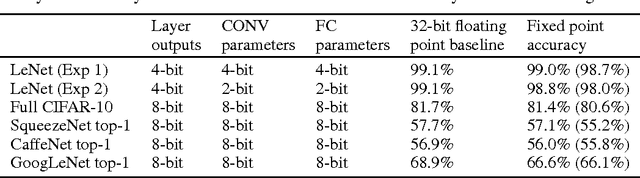

Hardware-oriented Approximation of Convolutional Neural Networks

Oct 20, 2016

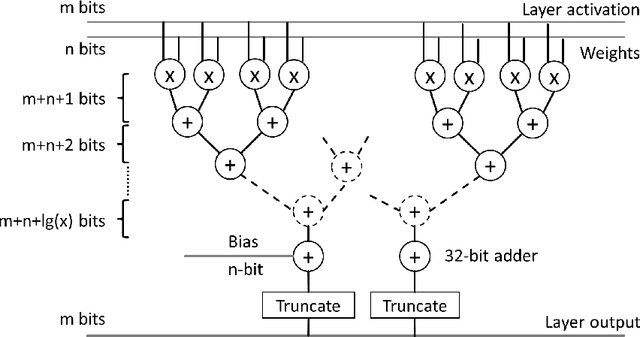

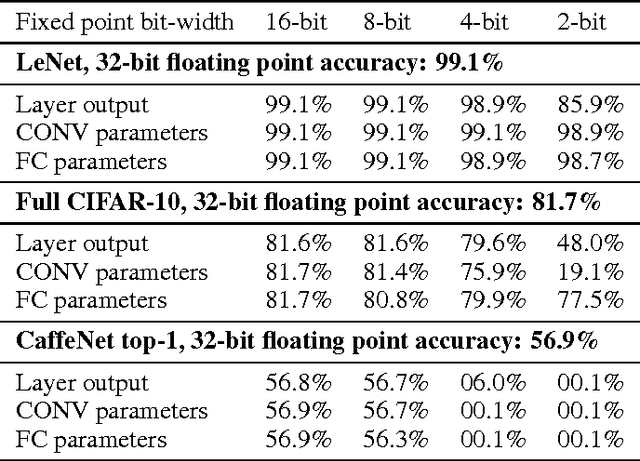

Abstract: High computational complexity hinders the widespread usage of Convolutional Neural Networks (CNNs), especially in mobile devices. Hardware accelerators are arguably the most promising approach for reducing both execution time and power consumption. One of the most important steps in accelerator development is hardware-oriented model approximation. In this paper we present Ristretto, a model approximation framework that analyzes a given CNN with respect to the numerical resolution used in representing weights and outputs of convolutional and fully connected layers. Ristretto can condense models by using fixed-point arithmetic and representation instead of floating point. Moreover, Ristretto fine-tunes the resulting fixed-point network. Given a maximum error tolerance of 1%, Ristretto can successfully condense CaffeNet and SqueezeNet to 8-bit. The code for Ristretto is available.
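To make the idea of replacing floating point with an 8-bit fixed-point representation concrete, here is a minimal Python sketch. It is not Ristretto's code or API: the function name `quantize_fixed_point`, the per-tensor choice of fractional bits from the largest magnitude, and the sample weights are all assumptions made for illustration, and the fine-tuning step mentioned in the abstract is not shown.

```python
import numpy as np

def quantize_fixed_point(values, total_bits=8):
    """Round values onto a signed fixed-point grid (hypothetical helper).

    One bit is reserved for the sign; the remaining bits are split between
    integer and fractional parts based on the largest magnitude present.
    Returns the de-quantized values and the number of fractional bits.
    """
    values = np.asarray(values, dtype=np.float64)
    max_abs = float(np.max(np.abs(values)))
    # Integer bits needed to cover the largest magnitude (may be negative
    # when all values are small, which frees extra fractional bits).
    int_bits = int(np.ceil(np.log2(max_abs))) if max_abs > 0 else 0
    frac_bits = total_bits - 1 - int_bits
    scale = 2.0 ** frac_bits
    q_min = -(2 ** (total_bits - 1))
    q_max = 2 ** (total_bits - 1) - 1
    # Round to the nearest representable code and saturate at the range ends.
    codes = np.clip(np.round(values * scale), q_min, q_max)
    return codes / scale, frac_bits

# Made-up example weights, not taken from any real network.
weights = np.array([0.5, -0.25, 0.9, -1.0, 0.013])
quantized, frac_bits = quantize_fixed_point(weights)
print("fractional bits:", frac_bits)
print("max abs error:", np.max(np.abs(quantized - weights)))
```

Under these assumptions the worst-case error per value is bounded by one quantization step, `2 ** -frac_bits`; whether that keeps accuracy within a given tolerance depends on the network and is exactly what a framework such as Ristretto is meant to evaluate.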

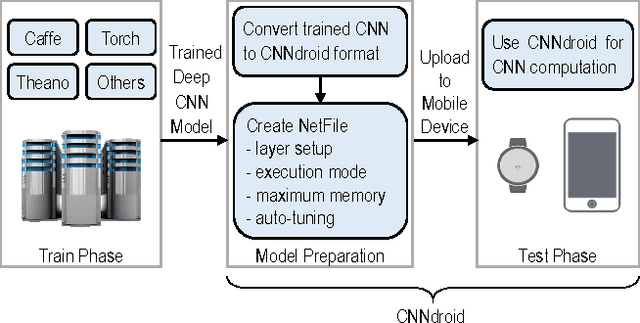

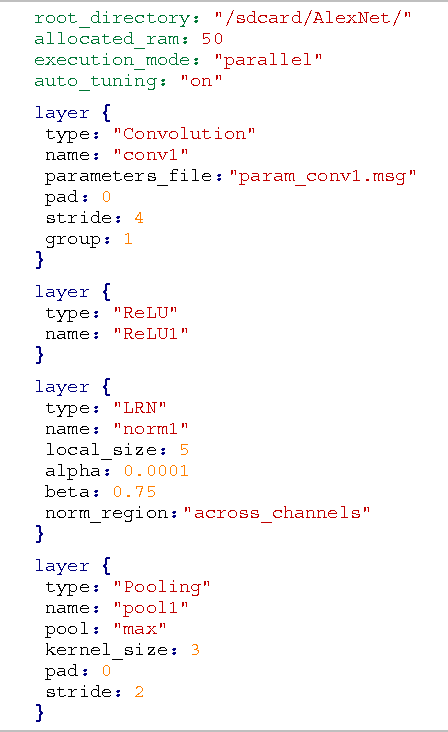

CNNdroid: GPU-Accelerated Execution of Trained Deep Convolutional Neural Networks on Android

Oct 15, 2016

Abstract: Many mobile applications running on smartphones and wearable devices would potentially benefit from the accuracy and scalability of deep CNN-based machine learning algorithms. However, performance and energy consumption limitations make the execution of such computationally intensive algorithms on mobile devices prohibitive. We present a GPU-accelerated library, dubbed CNNdroid, for execution of trained deep CNNs on Android-based mobile devices. Empirical evaluations show that CNNdroid achieves up to 60X speedup and 130X energy saving on current mobile devices. The CNNdroid open source library is available for download at https://github.com/ENCP/CNNdroid
