Libby Ferland

Under the Surface: Tracking the Artifactuality of LLM-Generated Data

Jan 30, 2024

Abstract: This work delves into the expanding role of large language models (LLMs) in generating artificial data. LLMs are increasingly employed to create a variety of outputs, including annotations, preferences, instruction prompts, simulated dialogues, and free text. As these forms of LLM-generated data often intersect in their application, they exert mutual influence on each other and raise significant concerns about the quality and diversity of the artificial data incorporated into training cycles, leading to an artificial data ecosystem. To the best of our knowledge, this is the first study to aggregate various types of LLM-generated text data, from more tightly constrained data like "task labels" to more lightly constrained "free-form text". We then stress test the quality and implications of LLM-generated artificial data, comparing it with human data across various existing benchmarks. Despite artificial data's capability to match human performance, this paper reveals significant hidden disparities, especially in complex tasks where LLMs often miss the nuanced understanding of intrinsic human-generated content. This study critically examines diverse LLM-generated data and emphasizes the need for ethical practices in data creation and when using LLMs. It highlights the LLMs' shortcomings in replicating human traits and behaviors, underscoring the importance of addressing biases and artifacts produced in LLM-generated content for future research and development. All data and code are available on our project page.

Evaluating Older Users' Experiences with Commercial Dialogue Systems: Implications for Future Design and Development

Jan 30, 2019

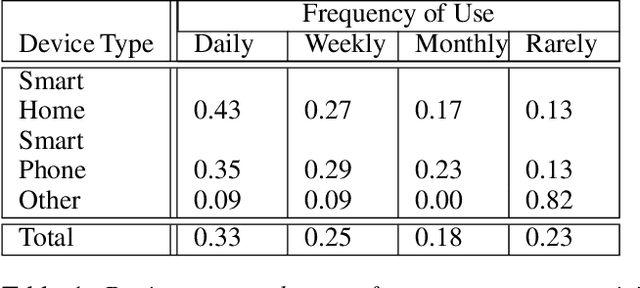

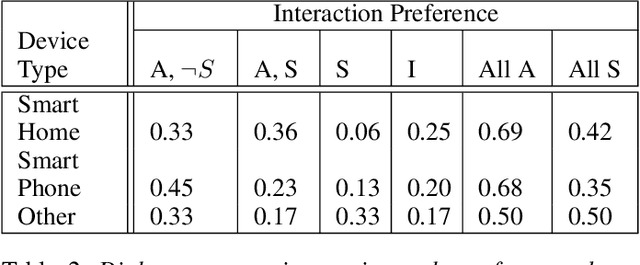

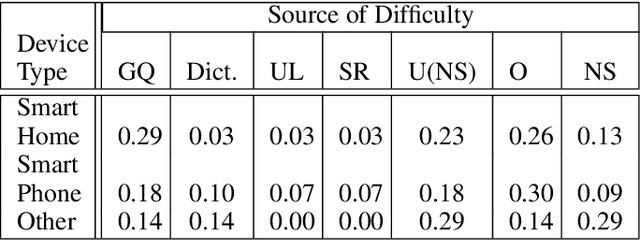

Abstract: Understanding the needs of a variety of distinct user groups is vital in designing effective, desirable dialogue systems that will be adopted by the largest possible segment of the population. Despite the increasing popularity of dialogue systems in both mobile and home formats, user studies remain relatively infrequent and often sample a segment of the user population that is not representative of the needs of the potential user population as a whole. This is especially the case for users who may be more reluctant adopters, such as older adults. In this paper we discuss the results of a recent user study performed over a large population of adults aged 50 and over in the Midwestern United States who have experience using a variety of commercial dialogue systems. We show the common preferences, use cases, and feature gaps identified by older adult users in interacting with these systems. Based on these results, we propose a new, robust user modeling framework that addresses common issues facing older adult users, which can then be generalized to the wider user population.
