Li Luo

Physical Transformer

Jan 05, 2026

Abstract:Digital AI systems spanning large language models, vision models, and generative architectures operate primarily in symbolic, linguistic, or pixel domains. They have achieved striking progress, but almost all of this progress lives in virtual spaces. These systems transform embeddings and tokens, yet do not themselves touch the world and rarely admit a physical interpretation. In this work we propose a physical transformer that couples modern transformer-style computation with geometric representation and physical dynamics. At the micro level, attention heads and feed-forward blocks are modeled as interacting spins governed by effective Hamiltonians plus non-Hamiltonian bath terms. At the meso level, their aggregated state evolves on a learned Neural Differential Manifold (NDM) under Hamiltonian flows and Hamilton-Jacobi-Bellman (HJB) optimal control, discretized by symplectic layers that approximately preserve geometric and energetic invariants. At the macro level, the model maintains a generative semantic workspace and a two-dimensional information-phase portrait that tracks uncertainty and information gain over a reasoning trajectory. Within this hierarchy, reasoning tasks are formulated as controlled information flows on the manifold, with solutions corresponding to low-cost trajectories that satisfy geometric, energetic, and workspace-consistency constraints. On simple toy problems involving numerical integration and dynamical systems, the physical transformer outperforms naive baselines in stability and long-horizon accuracy, highlighting the benefits of respecting underlying geometric and Hamiltonian structure. More broadly, the framework suggests a path toward physical AI that unifies digital reasoning with physically grounded manifolds, opening a route to more interpretable and potentially unified models of reasoning, control, and interaction with the real world.
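The abstract's claim that symplectic discretization preserves energetic invariants better than a naive scheme can be illustrated on a toy problem. The sketch below is not the paper's model: it assumes a unit harmonic oscillator with H(q, p) = (q² + p²)/2, and the function names (`explicit_euler`, `leapfrog`, `energy_drift`) are hypothetical. It compares the worst-case energy error of explicit Euler with that of the leapfrog (velocity Verlet) integrator, a standard symplectic method.

```python
def energy(q, p):
    """Hamiltonian of a unit harmonic oscillator (toy system, assumed here)."""
    return 0.5 * (q * q + p * p)


def explicit_euler(q, p, dt):
    """Naive non-symplectic step: both updates use the old state."""
    return q + dt * p, p - dt * q


def leapfrog(q, p, dt):
    """Symplectic leapfrog step: half kick, full drift, half kick."""
    p_half = p - 0.5 * dt * q
    q_new = q + dt * p_half
    p_new = p_half - 0.5 * dt * q_new
    return q_new, p_new


def energy_drift(step, steps=10_000, dt=0.05):
    """Largest absolute deviation from the initial energy along a trajectory."""
    q, p = 1.0, 0.0
    e0 = energy(q, p)
    worst = 0.0
    for _ in range(steps):
        q, p = step(q, p, dt)
        worst = max(worst, abs(energy(q, p) - e0))
    return worst


if __name__ == "__main__":
    print("explicit Euler drift:", energy_drift(explicit_euler))
    print("leapfrog drift:      ", energy_drift(leapfrog))
```

With these settings the explicit Euler energy grows without bound over the trajectory, while the leapfrog error stays small and bounded, which is the kind of long-horizon stability gap the abstract attributes to respecting Hamiltonian structure.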

S-Crescendo: A Nested Transformer Weaving Framework for Scalable Nonlinear System in S-Domain Representation

May 17, 2025

Abstract:Simulation of high-order nonlinear systems requires extensive computational resources, especially in modern VLSI backend design where bifurcation-induced instability and chaos-like transient behaviors pose challenges. We present S-Crescendo, a nested transformer weaving framework that combines the S-domain representation with neural operators for scalable time-domain prediction in high-order nonlinear networks, alleviating the computational bottlenecks of conventional solvers based on the Newton-Raphson method. By leveraging the partial-fraction decomposition of an n-th order transfer function into first-order modal terms with repeated poles and residues, our method bypasses the conventional Jacobian matrix-based iterations and efficiently reduces computational complexity from cubic $O(n^3)$ to linear $O(n)$. The proposed architecture seamlessly integrates an S-domain encoder with an attention-based correction operator to simultaneously isolate the dominant response and adaptively capture higher-order nonlinearities. Validated on order-1 to order-10 networks, our method achieves a test-set $R^2$ of up to 0.99 against HSPICE golden waveforms and accelerates simulation by up to 18×, providing a scalable, physics-aware framework for high-dimensional nonlinear modeling.
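The linear-cost idea behind the partial-fraction decomposition can be sketched as follows. This is only the textbook modal sum, not the S-Crescendo architecture: it assumes distinct (non-repeated) poles, omits the attention-based correction operator entirely, and the function names are hypothetical. For H(s) = Σ rᵢ / (s − pᵢ), the impulse response is h(t) = Σ rᵢ e^{pᵢ t}, so each time point costs O(n) with no matrix solve.

```python
import cmath


def impulse_response(poles, residues, t):
    """h(t) = sum_i r_i * exp(p_i * t), assuming distinct poles.

    Complex-conjugate pole pairs cancel their imaginary parts, so the
    real part is returned.
    """
    return sum(r * cmath.exp(p * t) for p, r in zip(poles, residues)).real


def step_response(poles, residues, t):
    """Response to a unit step: sum_i (r_i / p_i) * (exp(p_i * t) - 1).

    Assumes no pole at the origin.
    """
    return sum(
        r / p * (cmath.exp(p * t) - 1.0) for p, r in zip(poles, residues)
    ).real


if __name__ == "__main__":
    # Illustrative system: H(s) = 1 / ((s + 1)(s + 2)) = 1/(s + 1) - 1/(s + 2)
    poles, residues = [-1.0, -2.0], [1.0, -1.0]
    for t in (0.0, 0.5, 1.0, 5.0):
        print(t, impulse_response(poles, residues, t),
              step_response(poles, residues, t))
```

For the illustrative second-order system, the step response settles toward the DC gain H(0) = 0.5, which gives a quick sanity check on the poles and residues.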

Fusing Global and Local: Transformer-CNN Synergy for Next-Gen Current Estimation

Apr 08, 2025

Abstract:This paper presents a hybrid model combining Transformer and CNN for predicting the current waveform in signal lines. Unlike traditional approaches such as current source models, driver linear representations, waveform functional fitting, or equivalent load capacitance methods, our model does not rely on fixed simplified models of standard-cell drivers or RC loads. Instead, it replaces the complex Newton iteration process used in traditional SPICE simulations, leveraging the powerful sequence modeling capabilities of the Transformer framework to directly predict current responses without iterative solving steps. The hybrid architecture effectively integrates the global feature-capturing ability of Transformers with the local feature extraction advantages of CNNs, significantly improving the accuracy of current waveform predictions. Experimental results demonstrate that, compared to traditional SPICE simulations, the proposed algorithm achieves an error of only 0.0098. These results highlight the algorithm's superior capabilities in predicting signal line current waveforms, timing analysis, and power evaluation, making it suitable for a wide range of technology nodes, from 40nm to 3nm.

On the Performance Analysis of Momentum Method: A Frequency Domain Perspective

Nov 29, 2024

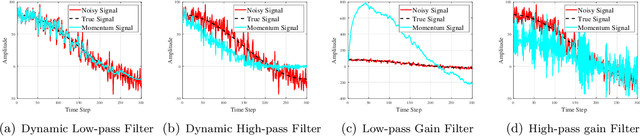

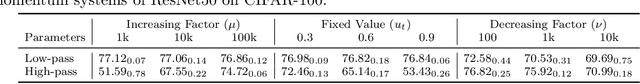

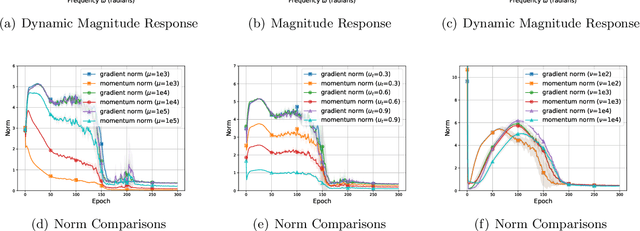

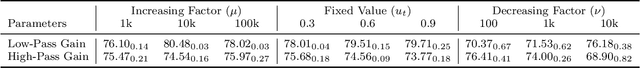

Abstract:Momentum-based optimizers are widely adopted for training neural networks. However, the optimal selection of momentum coefficients remains elusive. This uncertainty impedes a clear understanding of the role of momentum in stochastic gradient methods. In this paper, we present a frequency domain analysis framework that interprets the momentum method as a time-variant filter for gradients, where adjustments to momentum coefficients modify the filter characteristics. Our experiments support this perspective and provide a deeper understanding of the mechanism involved. Moreover, our analysis reveals the following significant findings: high-frequency gradient components are undesired in the late stages of training; preserving the original gradient in the early stages, and gradually amplifying low-frequency gradient components during training both enhance generalization performance. Based on these insights, we propose Frequency Stochastic Gradient Descent with Momentum (FSGDM), a heuristic optimizer that dynamically adjusts the momentum filtering characteristic with an empirically effective dynamic magnitude response. Experimental results demonstrate the superiority of FSGDM over conventional momentum optimizers.
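The filter interpretation of momentum can be made concrete with a small sketch. It is not FSGDM: it assumes the exponential-moving-average form m_t = β·m_{t−1} + (1 − β)·g_t with a fixed coefficient β, whereas the paper analyzes time-variant coefficients, and the function names are hypothetical. Under that assumption the update is a first-order low-pass filter with frequency response H(e^{jω}) = (1 − β) / (1 − β·e^{−jω}).

```python
import cmath
import math


def momentum_gain(beta, omega):
    """Magnitude response |H(e^{j*omega})| of EMA momentum with fixed beta."""
    return abs((1.0 - beta) / (1.0 - beta * cmath.exp(-1j * omega)))


def ema_momentum(grads, beta):
    """Apply m_t = beta * m_{t-1} + (1 - beta) * g_t to a gradient sequence."""
    m, out = 0.0, []
    for g in grads:
        m = beta * m + (1.0 - beta) * g
        out.append(m)
    return out


if __name__ == "__main__":
    beta = 0.9
    for omega in (0.0, math.pi / 4, math.pi / 2, math.pi):
        print(f"omega={omega:.3f}  gain={momentum_gain(beta, omega):.4f}")

    # A gradient that flips sign every step is the highest-frequency input.
    alternating = [(-1.0) ** t for t in range(200)]
    print("filtered amplitude:", abs(ema_momentum(alternating, beta)[-1]))
```

The gain is 1 at ω = 0 and falls to (1 − β)/(1 + β) at ω = π, so raising β suppresses high-frequency gradient components more strongly. This is one way to read the abstract's statement that adjusting momentum coefficients modifies the filter characteristics.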

Using a CNN Model to Assess Visual Artwork's Creativity

Aug 02, 2024

Abstract:Assessing artistic creativity has long challenged researchers, with traditional methods proving time-consuming. Recent studies have applied machine learning to evaluate creativity in drawings, but not paintings. Our research addresses this gap by developing a CNN model to automatically assess the creativity of students' paintings. Using a dataset of 600 paintings by professionals and children, our model achieved 90% accuracy and faster evaluation times than human raters. This approach demonstrates the potential of machine learning in advancing artistic creativity assessment, offering a more efficient alternative to traditional methods.

Distance Guided Channel Weighting for Semantic Segmentation

May 05, 2020

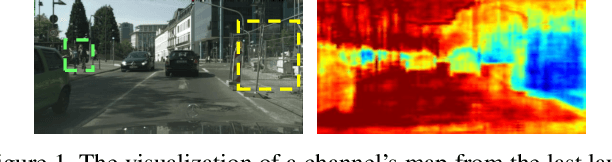

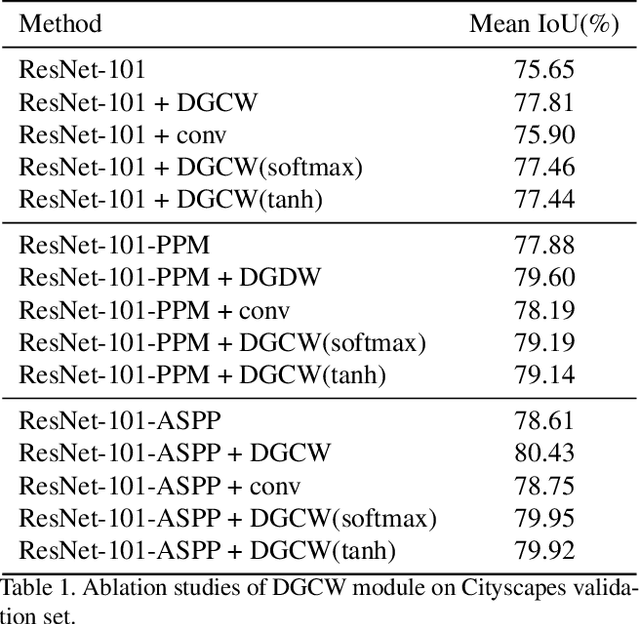

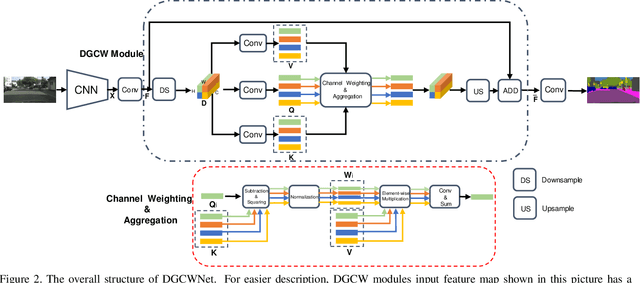

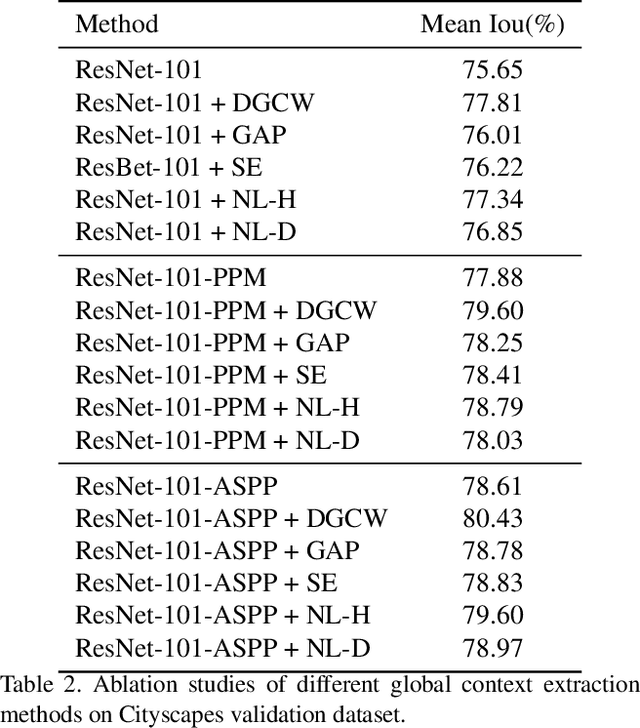

Abstract:Recent works have achieved great success in improving the performance of multiple computer vision tasks by capturing features with a high channel number utilizing deep neural networks. However, many channels of extracted features are not discriminative and contain a lot of redundant information. In this paper, we address the above issue by introducing the Distance Guided Channel Weighting (DGCW) Module. The DGCW module is constructed in a pixel-wise context extraction manner, which enhances the discriminativeness of features by weighting different channels of each pixel's feature vector when modeling its relationship with other pixels. It can make full use of the high-discriminative information while ignoring the low-discriminative information contained in feature maps, as well as capture the long-range dependencies. Furthermore, by incorporating the DGCW module with a baseline segmentation network, we propose the Distance Guided Channel Weighting Network (DGCWNet). We conduct extensive experiments to demonstrate the effectiveness of DGCWNet. In particular, it achieves 81.6% mIoU on Cityscapes with only fine annotated data for training, and also gains satisfactory performance on another two semantic segmentation datasets, i.e. Pascal Context and ADE20K. Code will be available soon at https://github.com/LanyunZhu/DGCWNet.