Lauge Sorensen

Augmentation based unsupervised domain adaptation

Feb 23, 2022

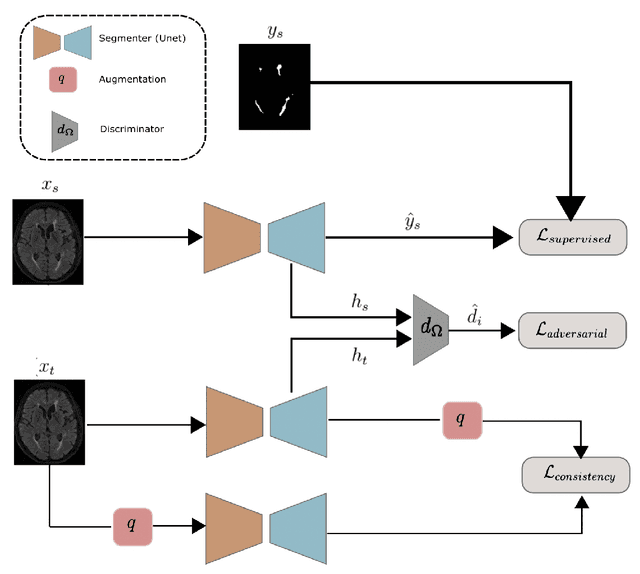

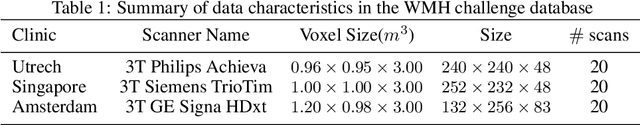

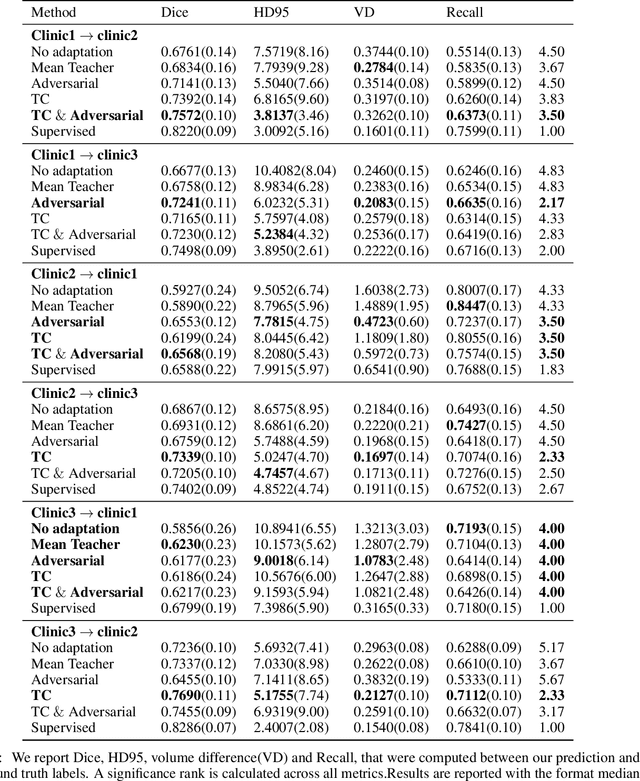

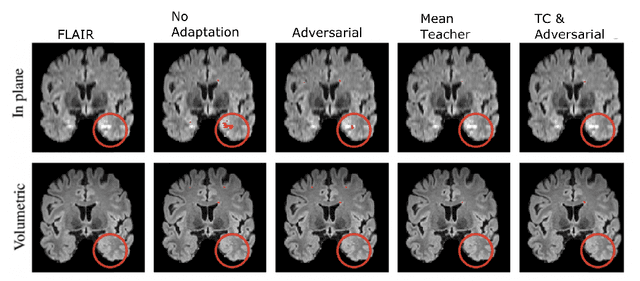

Abstract: The introduction of deep learning in medical image analysis has led to the development of state-of-the-art strategies in several applications, such as disease classification, abnormality detection, and segmentation. However, even the most advanced methods require a large and diverse amount of data to generalize. Because data acquisition and annotation are expensive in realistic clinical scenarios, deep learning models trained on small and unrepresentative data tend to underperform when deployed on data that differs from the training data (e.g., data from different scanners). In this work, we propose a domain adaptation methodology to alleviate this problem in segmentation models. Our approach takes advantage of the properties of adversarial domain adaptation and consistency training to achieve more robust adaptation. Using two datasets with white matter hyperintensities (WMH) annotations, we demonstrate that the proposed method improves model generalization even in corner cases where the individual strategies tend to fail.
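
The abstract does not specify how the adversarial and consistency terms are combined, so the following is only a minimal sketch under stated assumptions: the consistency term is taken to be the mean squared difference between the model's predictions on an image and on an augmented copy of it, and the three terms are combined as a weighted sum. The function names and the weights `lambda_adv` and `lambda_cons` are hypothetical and are not taken from the paper.

```python
def consistency_loss(pred_a, pred_b):
    """Mean squared difference between two flattened probability maps.

    Assumption: pred_a is the prediction on an unlabeled target-domain image
    and pred_b is the prediction on an augmented copy of the same image.
    """
    if len(pred_a) != len(pred_b):
        raise ValueError("prediction maps must have the same length")
    return sum((a - b) ** 2 for a, b in zip(pred_a, pred_b)) / len(pred_a)


def total_loss(seg_loss, adv_loss, cons_loss, lambda_adv=0.1, lambda_cons=1.0):
    """Weighted sum of the three scalar terms (hypothetical weighting).

    seg_loss:  supervised segmentation loss on labeled source data
    adv_loss:  adversarial term from a domain discriminator, computed elsewhere
    cons_loss: consistency term, e.g. from consistency_loss above
    """
    return seg_loss + lambda_adv * adv_loss + lambda_cons * cons_loss


if __name__ == "__main__":
    cons = consistency_loss([0.2, 0.8, 0.5], [0.3, 0.7, 0.5])
    print(total_loss(seg_loss=0.4, adv_loss=0.7, cons_loss=cons))
```

The consistency term needs no labels, which is why it can be evaluated on target-domain images in an unsupervised setting.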

On the Initialization of Long Short-Term Memory Networks

Dec 22, 2019

Abstract: Weight initialization is important for faster convergence and stability of deep neural network training. In this paper, a robust initialization method is developed to address the training instability in long short-term memory (LSTM) networks. It is based on a normalized random initialization of the network weights that aims at keeping the variance of the network input and output in the same range. The method is applied to standard LSTMs for univariate time series regression and to LSTMs robust to missing values for multivariate disease progression modeling. The results show that in all cases, the proposed initialization method outperforms state-of-the-art initialization techniques in terms of training convergence and generalization performance of the obtained solution.
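
The abstract does not give the paper's exact scaling rule, so the sketch below swaps in the well-known Glorot (Xavier) uniform scheme as a stand-in for a normalized, variance-preserving initialization. It draws each weight from U(-a, a) with a = sqrt(6 / (fan_in + fan_out)), which gives a weight variance of 2 / (fan_in + fan_out). The dictionary layout and the names `init_lstm_weights`, `W`, `U`, and `b` are illustrative assumptions, not the paper's notation.

```python
import math
import random


def glorot_uniform(fan_in, fan_out, rng):
    """Return a fan_out x fan_in matrix with entries drawn from U(-a, a),
    where a = sqrt(6 / (fan_in + fan_out)) (Glorot/Xavier uniform)."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)]
            for _ in range(fan_out)]


def init_lstm_weights(input_size, hidden_size, seed=0):
    """Initialize input (W), recurrent (U), and bias (b) parameters
    for the four LSTM gates. Biases start at zero in this sketch."""
    rng = random.Random(seed)
    weights = {}
    for gate in ("input", "forget", "cell", "output"):
        weights[gate] = {
            "W": glorot_uniform(input_size, hidden_size, rng),
            "U": glorot_uniform(hidden_size, hidden_size, rng),
            "b": [0.0] * hidden_size,
        }
    return weights


def empirical_variance(matrix):
    """Population variance of all entries in a nested-list matrix."""
    values = [v for row in matrix for v in row]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


if __name__ == "__main__":
    w = init_lstm_weights(input_size=64, hidden_size=64)
    target = 2.0 / (64 + 64)
    print(empirical_variance(w["forget"]["U"]), target)
```

Comparing the empirical variance of a sampled matrix with the target 2 / (fan_in + fan_out) is a quick sanity check that the scaling is applied as intended.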
