Larry Chan

The Who in Explainable AI: How AI Background Shapes Perceptions of AI Explanations

Jul 28, 2021

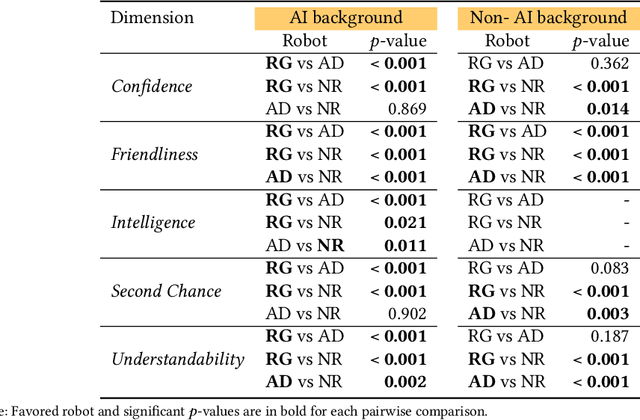

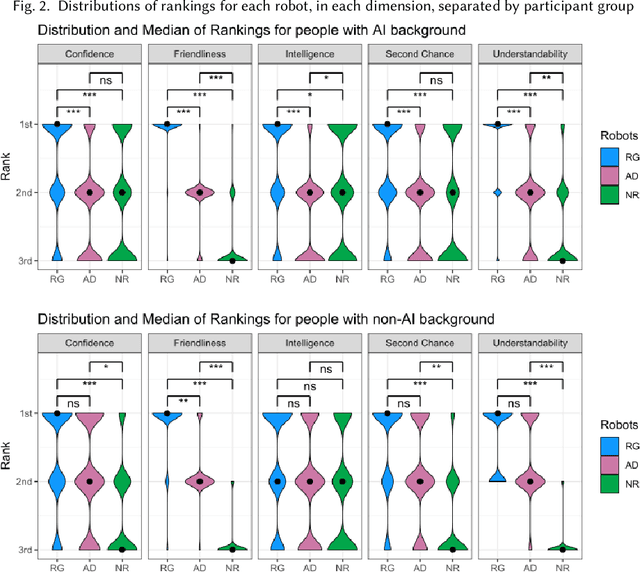

Abstract: Explainability of AI systems is critical for users to take informed actions and hold systems accountable. While "opening the opaque box" is important, understanding who opens the box can govern if the Human-AI interaction is effective. In this paper, we conduct a mixed-methods study of how two different groups of whos--people with and without a background in AI--perceive different types of AI explanations. These groups were chosen to look at how disparities in AI backgrounds can exacerbate the creator-consumer gap. We quantitatively share what the perceptions are along five dimensions: confidence, intelligence, understandability, second chance, and friendliness. Qualitatively, we highlight how the AI background influences each group's interpretations and elucidate why the differences might exist through the lenses of appropriation and cognitive heuristics. We find that (1) both groups had unwarranted faith in numbers, to different extents and for different reasons, (2) each group found explanatory values in different explanations that went beyond the usage we designed them for, and (3) each group had different requirements of what counts as humanlike explanations. Using our findings, we discuss potential negative consequences such as harmful manipulation of user trust and propose design interventions to mitigate them. By bringing conscious awareness to how and why AI backgrounds shape perceptions of potential creators and consumers in XAI, our work takes a formative step in advancing a pluralistic Human-centered Explainable AI discourse.

Automated Rationale Generation: A Technique for Explainable AI and its Effects on Human Perceptions

Jan 11, 2019

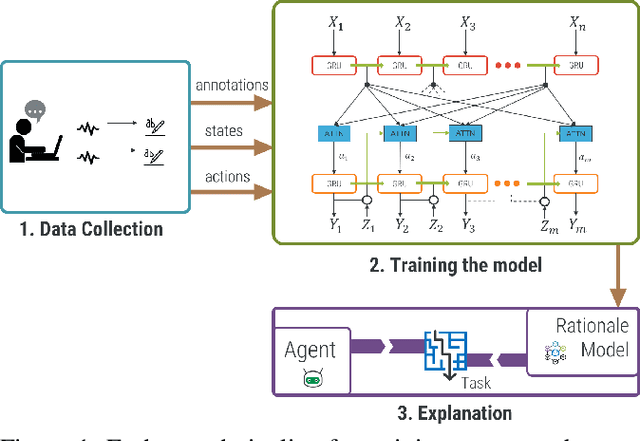

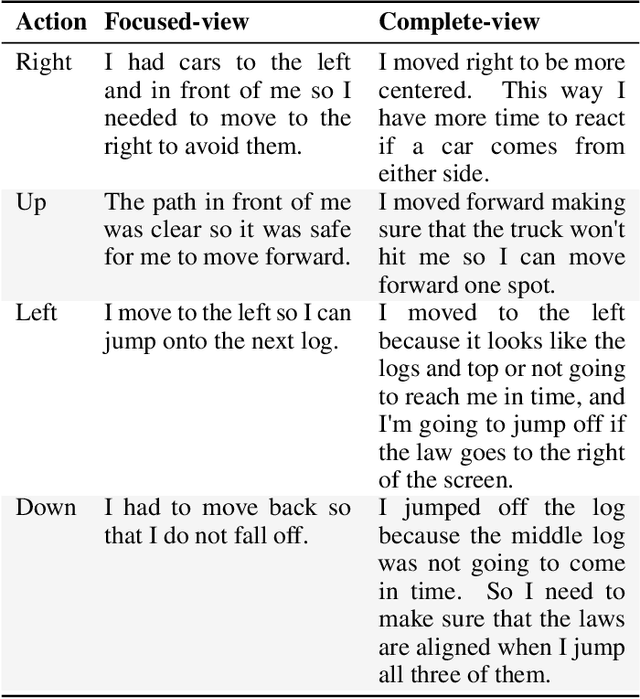

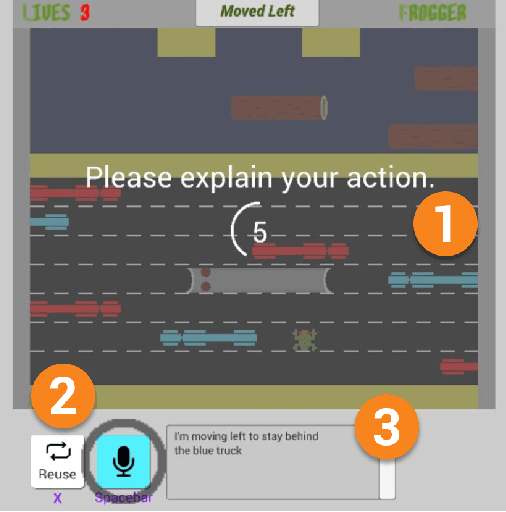

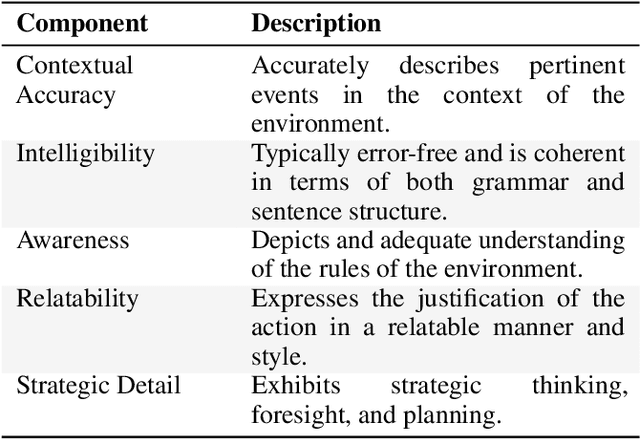

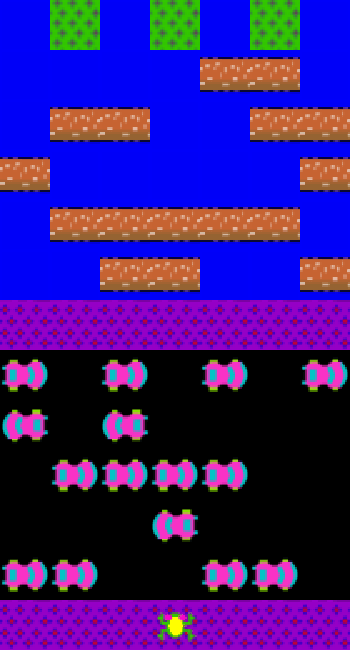

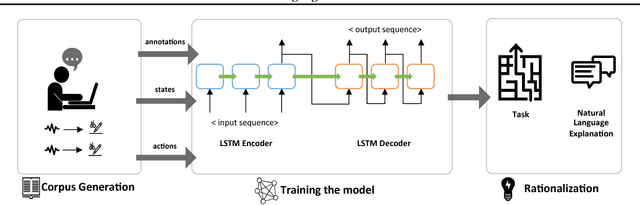

Abstract: Automated rationale generation is an approach for real-time explanation generation whereby a computational model learns to translate an autonomous agent's internal state and action data representations into natural language. Training on human explanation data can enable agents to learn to generate human-like explanations for their behavior. In this paper, using the context of an agent that plays Frogger, we describe (a) how to collect a corpus of explanations, (b) how to train a neural rationale generator to produce different styles of rationales, and (c) how people perceive these rationales. We conducted two user studies. The first study establishes the plausibility of each type of generated rationale and situates their user perceptions along the dimensions of confidence, humanlike-ness, adequate justification, and understandability. The second study further explores user preferences between the generated rationales with regard to confidence in the autonomous agent, communicating failure and unexpected behavior. Overall, we find alignment between the intended differences in features of the generated rationales and the perceived differences by users. Moreover, context permitting, participants preferred detailed rationales to form a stable mental model of the agent's behavior.

Rationalization: A Neural Machine Translation Approach to Generating Natural Language Explanations

Dec 19, 2017

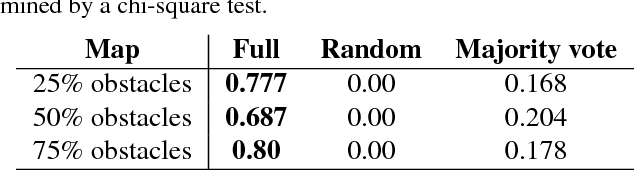

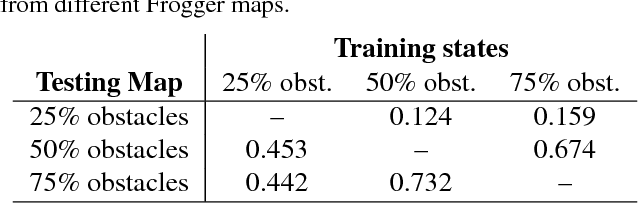

Abstract: We introduce AI rationalization, an approach for generating explanations of autonomous system behavior as if a human had performed the behavior. We describe a rationalization technique that uses neural machine translation to translate internal state-action representations of an autonomous agent into natural language. We evaluate our technique in the Frogger game environment, training an autonomous game playing agent to rationalize its action choices using natural language. A natural language training corpus is collected from human players thinking out loud as they play the game. We motivate the use of rationalization as an approach to explanation generation and show the results of two experiments evaluating the effectiveness of rationalization. Results of these evaluations show that neural machine translation is able to accurately generate rationalizations that describe agent behavior, and that rationalizations are more satisfying to humans than other alternative methods of explanation.
