Kourosh Parand

BridgeNet: A Hybrid, Physics-Informed Machine Learning Framework for Solving High-Dimensional Fokker-Planck Equations

Jun 09, 2025

Abstract: BridgeNet is a novel hybrid framework that integrates convolutional neural networks with physics-informed neural networks to efficiently solve non-linear, high-dimensional Fokker-Planck equations (FPEs). Traditional PINNs, which typically rely on fully connected architectures, often struggle to capture complex spatial hierarchies and enforce intricate boundary conditions. In contrast, BridgeNet leverages adaptive CNN layers for effective local feature extraction and incorporates a dynamically weighted loss function that rigorously enforces physical constraints. Extensive numerical experiments across various test cases demonstrate that BridgeNet not only achieves significantly lower error metrics and faster convergence compared to conventional PINN approaches but also maintains robust stability in high-dimensional settings. This work represents a substantial advancement in computational physics, offering a scalable and accurate solution methodology with promising applications in fields ranging from financial mathematics to complex system dynamics.

PINNIES: An Efficient Physics-Informed Neural Network Framework to Integral Operator Problems

Sep 03, 2024

Abstract: This paper introduces an efficient tensor-vector product technique for the rapid and accurate approximation of integral operators within physics-informed deep learning frameworks. Our approach leverages neural network architectures to evaluate problem dynamics at specific points, while employing Gaussian quadrature formulas to approximate the integral components, even in the presence of infinite domains or singularities. We demonstrate the applicability of this method to both Fredholm and Volterra integral operators, as well as to optimal control problems involving continuous time. Additionally, we outline how this approach can be extended to approximate fractional derivatives and integrals and propose a fast matrix-vector product algorithm for efficiently computing the fractional Caputo derivative. In the numerical section, we conduct comprehensive experiments on forward and inverse problems. For forward problems, we evaluate the performance of our method on over 50 diverse mathematical problems, including multi-dimensional integral equations, systems of integral equations, partial and fractional integro-differential equations, and various optimal control problems in delay, fractional, multi-dimensional, and nonlinear configurations. For inverse problems, we test our approach on several integral equations and fractional integro-differential problems. Finally, we introduce the pinnies Python package to facilitate the implementation and usability of the proposed method.
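The quadrature idea in this abstract can be illustrated with a minimal NumPy sketch. It is not the pinnies package API: the function name `fredholm_operator_matrix`, the kernel, and the stand-in function `u` are all assumptions made for illustration. The sketch turns a Fredholm integral over a finite interval into a precomputed matrix, so that applying the operator to sampled function values becomes a single matrix-vector product.

```python
import numpy as np

def fredholm_operator_matrix(kernel, x_eval, n_nodes=32, a=0.0, b=1.0):
    """Return (K, t) so that K @ u(t) approximates the integral of
    kernel(x, s) * u(s) over s in [a, b], for every x in x_eval."""
    # Gauss-Legendre nodes and weights on the reference interval [-1, 1].
    nodes, weights = np.polynomial.legendre.leggauss(n_nodes)
    # Affine map of nodes and weights to [a, b].
    t = 0.5 * (b - a) * nodes + 0.5 * (b + a)
    w = 0.5 * (b - a) * weights
    # K[i, j] = kernel(x_i, t_j) * w_j, built once and reused.
    K = kernel(x_eval[:, None], t[None, :]) * w[None, :]
    return K, t

# Hypothetical example: kernel k(x, s) = x * s and u(s) = exp(s).
# Analytically, the integral of s * exp(s) over [0, 1] equals 1,
# so the operator applied to u should return x itself.
x = np.linspace(0.0, 1.0, 5)
K, t = fredholm_operator_matrix(lambda xx, ss: xx * ss, x)
u = np.exp  # stand-in for a neural network evaluated at the nodes
integral = K @ u(t)
```

In a physics-informed setting, `u(t)` would be the network output at the quadrature nodes, and `K` would stay fixed across training iterations.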

Hermite Neural Network Simulation for Solving the 2D Schrodinger Equation

Feb 16, 2024

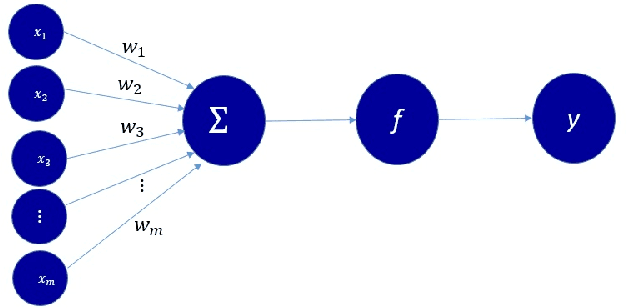

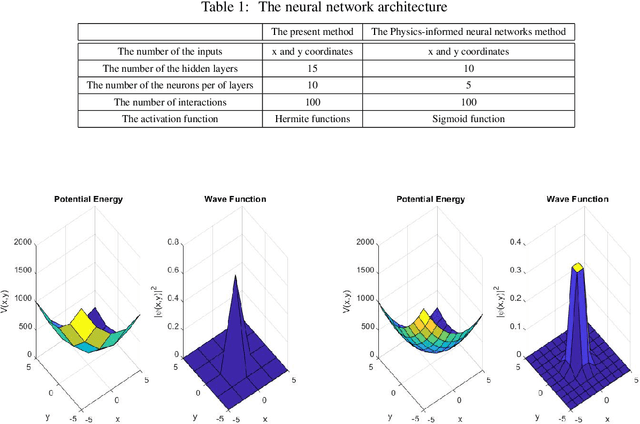

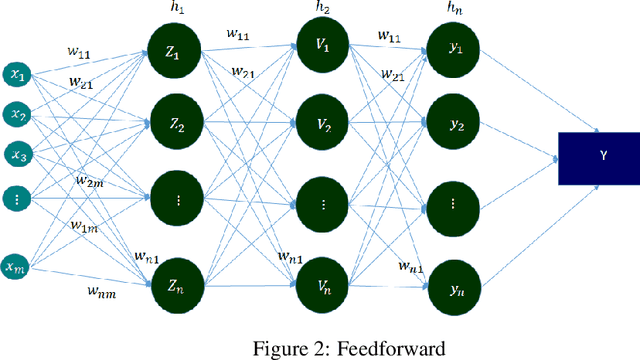

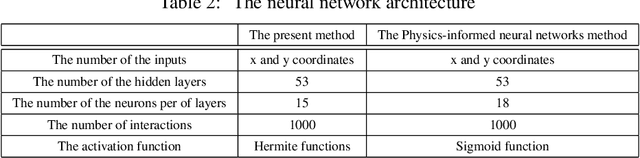

Abstract: The Schrodinger equation is a mathematical equation describing the wave function's behavior in a quantum-mechanical system. It is a partial differential equation that provides valuable insights into the fundamental principles of quantum mechanics. In this paper, the aim was to solve the Schrodinger equation with sufficient accuracy by combining neural networks with a collocation method based on Hermite functions. First, the roots of the Hermite functions were employed as collocation points, enhancing the efficiency of the solution. Because the Schrodinger equation is defined on an infinite domain, the use of Hermite functions as activation functions resulted in excellent precision. Finally, the proposed method was simulated using MATLAB's Simulink tool. The results of the presented method were then compared with those obtained using physics-informed neural networks.
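As a rough illustration of the ingredients named in this abstract, the sketch below evaluates normalized Hermite functions and uses the roots of a Hermite polynomial as collocation points. It is written in Python with NumPy rather than MATLAB, and the helper name `hermite_function` and the sizes chosen are assumptions, not details from the paper.

```python
import numpy as np
from math import factorial, pi, sqrt
from numpy.polynomial.hermite import hermgauss, hermval

def hermite_function(n, x):
    """Normalized Hermite function psi_n(x) = H_n(x) exp(-x^2 / 2) / norm,
    with H_n the physicists' Hermite polynomial."""
    coeffs = np.zeros(n + 1)
    coeffs[n] = 1.0  # select H_n in the Hermite series
    norm = sqrt(2.0 ** n * factorial(n) * sqrt(pi))
    return hermval(x, coeffs) * np.exp(-x ** 2 / 2) / norm

# The Gauss-Hermite nodes are the roots of H_12; they serve here as
# collocation points on the infinite domain.
n_points = 12
points, weights = hermgauss(n_points)

# Evaluate the first six Hermite functions at the collocation points.
basis = np.stack([hermite_function(n, points) for n in range(6)])

# Orthonormality check. The Gauss-Hermite weights assume the weight
# exp(-x^2), so exp(x^2) is multiplied back in before summing.
gram = (basis * weights * np.exp(points ** 2)) @ basis.T
```

Because the Hermite functions decay like a Gaussian, they satisfy the vanishing boundary behavior at infinity without truncating the domain, which is the property the abstract relies on.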

Accelerating Fractional PINNs using Operational Matrices of Derivative

Jan 25, 2024

Abstract: This paper presents a novel operational matrix method to accelerate the training of fractional Physics-Informed Neural Networks (fPINNs). Our approach involves a non-uniform discretization of the fractional Caputo operator, facilitating swift computation of fractional derivatives within Caputo-type fractional differential problems with $0<\alpha<1$. In this methodology, the operational matrix is precomputed, and during the training phase, automatic differentiation is replaced with a matrix-vector product. While our methodology is compatible with any network, we particularly highlight its successful implementation in PINNs, emphasizing the enhanced accuracy achieved when utilizing the Legendre Neural Block (LNB) architecture. LNB incorporates Legendre polynomials into the PINN structure, providing a significant boost in accuracy. The effectiveness of our proposed method is validated across diverse differential equations, including Delay Differential Equations (DDEs) and Systems of Differential Algebraic Equations (DAEs). To demonstrate its versatility, we extend the application of the method to systems of differential equations, specifically addressing nonlinear Pantograph fractional-order DDEs/DAEs. The results are supported by a comprehensive analysis of numerical outcomes.
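The precompute-then-multiply idea can be sketched with the classical L1 scheme for the Caputo derivative. Note the assumptions: the paper describes a non-uniform discretization, whereas this sketch uses a uniform grid for simplicity, and the function name `caputo_l1_matrix` is hypothetical.

```python
import numpy as np
from math import gamma

def caputo_l1_matrix(n_steps, h, alpha):
    """Matrix D with (D @ u)[n] approximating the Caputo derivative of
    order alpha (0 < alpha < 1) at t_n = n * h, via the L1 scheme."""
    j = np.arange(n_steps)
    # L1 weights b_j = (j + 1)^(1 - alpha) - j^(1 - alpha).
    b = (j + 1.0) ** (1.0 - alpha) - j ** (1.0 - alpha)
    scale = h ** (-alpha) / gamma(2.0 - alpha)
    D = np.zeros((n_steps + 1, n_steps + 1))
    for n in range(1, n_steps + 1):
        for k in range(n):
            # Contribution of the increment u[k + 1] - u[k] to row n.
            c = scale * b[n - k - 1]
            D[n, k + 1] += c
            D[n, k] -= c
    return D

# Precompute once; afterwards each derivative is a matrix-vector product.
alpha, n_steps = 0.5, 50
h = 1.0 / n_steps
t = h * np.arange(n_steps + 1)
D = caputo_l1_matrix(n_steps, h, alpha)

# For u(t) = t the Caputo derivative is t^(1 - alpha) / Gamma(2 - alpha),
# and the L1 scheme reproduces it exactly for piecewise-linear data.
approx = D @ t
exact = t ** (1.0 - alpha) / gamma(2.0 - alpha)
```

During training, `D @ u_values` would take the place of automatic differentiation for the fractional term, with `u_values` being the network output on the grid.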

An Orthogonal Polynomial Kernel-Based Machine Learning Model for Differential-Algebraic Equations

Jan 25, 2024

Abstract: The recent introduction of the Least-Squares Support Vector Regression (LS-SVR) algorithm for solving differential and integral equations has sparked interest. In this study, we expand the application of this algorithm to address systems of differential-algebraic equations (DAEs). Our work presents a novel approach to solving general DAEs in an operator format by establishing connections between the LS-SVR machine learning model, weighted residual methods, and Legendre orthogonal polynomials. To assess the effectiveness of our proposed method, we conduct simulations involving various DAE scenarios, such as nonlinear systems, fractional-order derivatives, integro-differential, and partial DAEs. Finally, we carry out comparisons between our proposed method and currently established state-of-the-art approaches, demonstrating its reliability and effectiveness.

Using Singular Value Decomposition in a Convolutional Neural Network to Improve Brain Tumor Segmentation Accuracy

Jan 04, 2024

Abstract: A brain tumor consists of cells showing abnormal growth in the brain. The area of the brain tumor significantly affects the choice of treatment and the monitoring of the disease during treatment. At the same time, brain MRI images are accompanied by noise. Removing this noise can significantly improve the segmentation and diagnosis of brain tumors. In this work, we explore the use of eigenvalue analysis. We use the MSVD algorithm to reduce the image noise and then a deep neural network to segment the tumor in the images. The proposed method's accuracy increased by 2.4% compared to using the original images. With the MSVD method, convergence speed also increased, showing the proposed method's effectiveness.
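To give a feel for SVD-based noise reduction, here is a minimal sketch that uses a plain truncated SVD as a stand-in. This is explicitly not the MSVD algorithm named in the abstract, and the synthetic "image", the noise level, and the function name `svd_denoise` are assumptions for illustration only.

```python
import numpy as np

def svd_denoise(image, rank):
    """Keep only the `rank` largest singular components of a 2D array."""
    U, s, Vt = np.linalg.svd(image, full_matrices=False)
    return (U[:, :rank] * s[:rank]) @ Vt[:rank]

# Synthetic rank-2 image plus Gaussian noise (fixed seed for repeatability).
rng = np.random.default_rng(0)
rows = np.linspace(0.0, 1.0, 64)
clean = (np.outer(np.sin(2 * np.pi * rows), np.cos(2 * np.pi * rows))
         + np.outer(rows, rows))
noisy = clean + 0.1 * rng.standard_normal(clean.shape)

# Truncating to the two dominant components discards most of the noise,
# which is spread across all singular directions.
denoised = svd_denoise(noisy, rank=2)
noisy_error = np.linalg.norm(noisy - clean)
denoised_error = np.linalg.norm(denoised - clean)
```

In a pipeline like the one described, the denoised array would then be passed to the segmentation network in place of the raw image.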

deepFDEnet: A Novel Neural Network Architecture for Solving Fractional Differential Equations

Sep 14, 2023

Abstract: The primary goal of this research is to propose a novel architecture for a deep neural network that can solve fractional differential equations accurately. A Gaussian integration rule and an $L_1$ discretization technique are used in the proposed design. In each equation, a deep neural network is used to approximate the unknown function. Three forms of fractional differential equations have been examined to highlight the method's versatility: a fractional ordinary differential equation, a fractional order integrodifferential equation, and a fractional order partial differential equation. The results show that the proposed architecture solves different forms of fractional differential equations with excellent precision.

Solving Falkner-Skan type equations via Legendre and Chebyshev Neural Blocks

Aug 07, 2023

Abstract: In this paper, a new deep-learning architecture for solving the non-linear Falkner-Skan equation is proposed. Using Legendre and Chebyshev neural blocks, this approach shows how orthogonal polynomials can be used in neural networks to increase the approximation capability of artificial neural networks. In addition, utilizing the mathematical properties of these functions, we overcome the computational complexity of the backpropagation algorithm by using the operational matrices of the derivative. The efficiency of the proposed method is demonstrated by simulating various configurations of the Falkner-Skan equation.
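An operational matrix of the derivative, as mentioned in this abstract, maps the coefficients of a polynomial expansion to the coefficients of its derivative. The sketch below builds one for the Legendre basis with NumPy; the function name `legendre_derivative_matrix` and the chosen degree are assumptions, and the paper's own construction may differ.

```python
import numpy as np
from numpy.polynomial import legendre as L

def legendre_derivative_matrix(degree):
    """Matrix D such that, for Legendre coefficients c of a polynomial of
    degree <= `degree`, D @ c holds the coefficients of its derivative."""
    size = degree + 1
    D = np.zeros((size, size))
    for n in range(1, size):
        unit = np.zeros(size)
        unit[n] = 1.0              # coefficients of P_n alone
        d = L.legder(unit)         # Legendre coefficients of P_n'
        D[: d.size, n] = d
    return D

D = legendre_derivative_matrix(4)

# x^2 = (1/3) P_0 + (2/3) P_2, and its derivative 2x equals 2 P_1.
c = np.array([1.0 / 3.0, 0.0, 2.0 / 3.0, 0.0, 0.0])
dc = D @ c

# Second derivatives follow by applying the same matrix twice.
d2c = D @ dc
```

Since `D` is fixed, derivatives of a Legendre-expanded network output cost only matrix-vector products, which is the saving over repeated backpropagation that the abstract points to.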

A least squares support vector regression for anisotropic diffusion filtering

Jan 30, 2022

Abstract: Anisotropic diffusion filtering for signal smoothing as a low-pass filter has the advantage of being edge-preserving, i.e., it does not affect the edges that contain more critical data than the other parts of the signal. In this paper, we present a numerical algorithm based on least squares support vector regression using a Legendre orthogonal kernel, with the nonlinear diffusion problem discretized in time by the Crank-Nicolson method. This method transforms the signal smoothing process into an optimization problem that can be solved by efficient numerical algorithms. Finally, we report numerical experiments that show the effectiveness of the proposed machine-learning-based approach for signal smoothing.
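The building block named here, least squares support vector regression with a Legendre kernel, can be sketched as plain regression on a noisy signal. This sketch omits the diffusion equation and the Crank-Nicolson time stepping entirely; the kernel definition, the function names, and the values of `gamma` and `degree` are assumptions for illustration.

```python
import numpy as np
from numpy.polynomial.legendre import legvander

def legendre_kernel(x, y, degree=8):
    """K(x, y) = sum over k <= degree of P_k(x) * P_k(y), for x, y in [-1, 1]."""
    return legvander(x, degree) @ legvander(y, degree).T

def fit_ls_svr(x, y, gamma=1e4, degree=8):
    """Solve the standard LS-SVR linear system for the bias and dual weights."""
    n = x.size
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = 1.0
    A[1:, 0] = 1.0
    A[1:, 1:] = legendre_kernel(x, x, degree) + np.eye(n) / gamma
    rhs = np.concatenate(([0.0], y))
    solution = np.linalg.solve(A, rhs)
    return solution[0], solution[1:]

def predict(x_new, x_train, bias, dual, degree=8):
    return legendre_kernel(x_new, x_train, degree) @ dual + bias

# Noisy test signal on [-1, 1] (fixed seed for repeatability).
rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 200)
clean = np.sin(3.0 * x)
noisy = clean + 0.1 * rng.standard_normal(x.size)

bias, dual = fit_ls_svr(x, noisy)
smoothed = predict(x, x, bias, dual)
rms_noisy = np.sqrt(np.mean((noisy - clean) ** 2))
rms_smoothed = np.sqrt(np.mean((smoothed - clean) ** 2))
```

The point of the least squares formulation is visible in `fit_ls_svr`: training reduces to one linear solve rather than a quadratic program.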

Legendre Deep Neural Network (LDNN) and its application for approximation of nonlinear Volterra Fredholm Hammerstein integral equations

Jun 27, 2021

Abstract: Various phenomena in biology, physics, and engineering are modeled by differential equations. These differential equations, including partial differential equations and ordinary differential equations, can be converted and represented as integral equations. In particular, Volterra Fredholm Hammerstein integral equations are the main type of these integral equations, and researchers are interested in investigating and solving them. In this paper, we propose the Legendre Deep Neural Network (LDNN) for solving nonlinear Volterra Fredholm Hammerstein integral equations (VFHIEs). LDNN utilizes Legendre orthogonal polynomials as activation functions of the deep structure. We present how LDNN can be used to solve nonlinear VFHIEs. We show that using the Gaussian quadrature collocation method in combination with LDNN results in a novel numerical solution for nonlinear VFHIEs. Several examples are given to verify the performance and accuracy of LDNN.
