Klaus Diepold

Technical University of Munich

A Practical Multilevel Governance Framework for Autonomous and Intelligent Systems

Apr 21, 2024

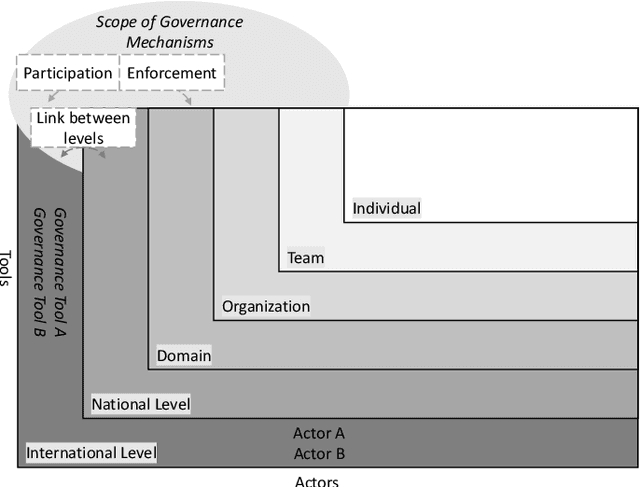

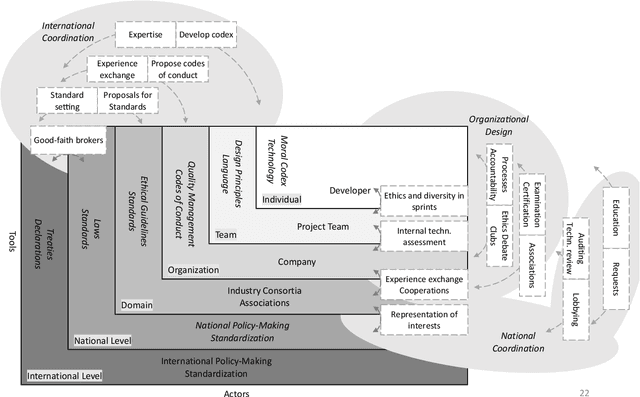

Abstract:Autonomous and intelligent systems (AIS) facilitate a wide range of beneficial applications across a variety of different domains. However, technical characteristics such as unpredictability and lack of transparency, as well as potential unintended consequences, pose considerable challenges to the current governance infrastructure. Furthermore, the speed of development and deployment of applications outpaces the ability of existing governance institutions to put in place effective ethical-legal oversight. New approaches for agile, distributed and multilevel governance are needed. This work presents a practical framework for multilevel governance of AIS. The framework enables mapping actors onto six levels of decision-making including the international, national and organizational levels. Furthermore, it offers the ability to identify and evolve existing tools or create new tools for guiding the behavior of actors within the levels. Governance mechanisms enable actors to shape and enforce regulations and other tools, which when complemented with good practices contribute to effective and comprehensive governance.

Reinforcement Learning with Ensemble Model Predictive Safety Certification

Feb 06, 2024

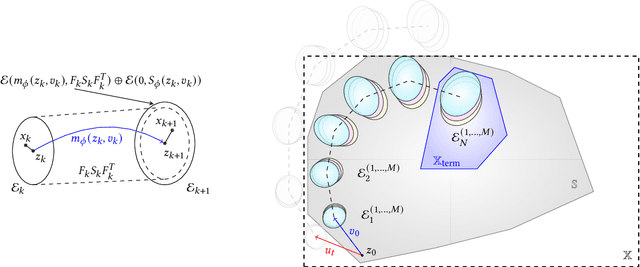

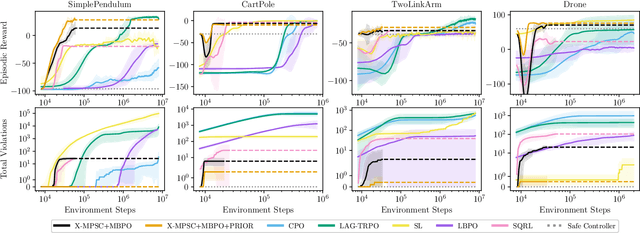

Abstract:Reinforcement learning algorithms need exploration to learn. However, unsupervised exploration prevents the deployment of such algorithms on safety-critical tasks and limits real-world deployment. In this paper, we propose a new algorithm called Ensemble Model Predictive Safety Certification that combines model-based deep reinforcement learning with tube-based model predictive control to correct the actions taken by a learning agent, keeping safety constraint violations at a minimum through planning. Our approach aims to reduce the amount of prior knowledge about the actual system by requiring only offline data generated by a safe controller. Our results show that we can achieve significantly fewer constraint violations than comparable reinforcement learning methods.

Towards Interpretable Classification of Leukocytes based on Deep Learning

Nov 24, 2023

Abstract:Label-free approaches are attractive in cytological imaging due to their flexibility and cost efficiency. They are supported by machine learning methods, which, despite the lack of labeling and the associated lower contrast, can classify cells with high accuracy where the human observer has little chance to discriminate cells. In order to better integrate these workflows into the clinical decision making process, this work investigates the calibration of confidence estimation for the automated classification of leukocytes. In addition, different visual explanation approaches are compared, which should bring machine decision making closer to professional healthcare applications. Furthermore, we were able to identify general detection patterns in neural networks and demonstrate the utility of the presented approaches in different scenarios of blood cell analysis.

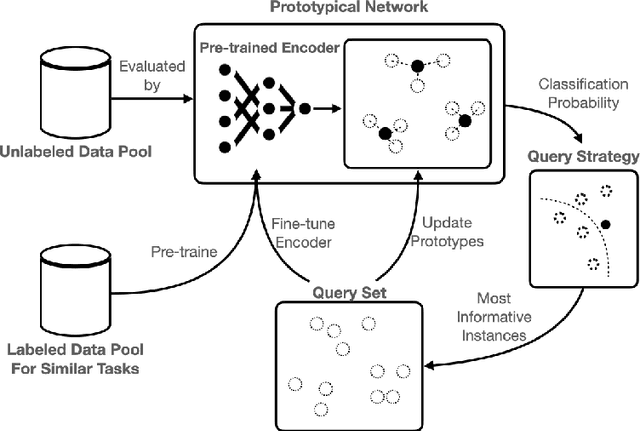

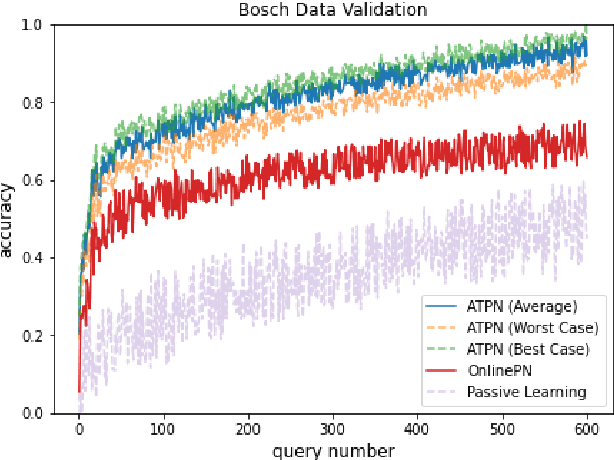

Active Transfer Prototypical Network: An Efficient Labeling Algorithm for Time-Series Data

Sep 28, 2022

Abstract:The paucity of labeled data is a typical challenge in the automotive industry. Annotating time-series measurements requires solid domain knowledge and in-depth exploratory data analysis, which implies a high labeling effort. Conventional Active Learning (AL) addresses this issue by actively querying the most informative instances based on the estimated classification probability and retraining the model iteratively. However, the learning efficiency strongly relies on the initial model, resulting in a trade-off between the size of the initial dataset and the number of queries. This paper proposes a novel Few-Shot Learning (FSL)-based AL framework, which addresses the trade-off problem by incorporating a Prototypical Network (ProtoNet) in the AL iterations. The results show an improvement, on the one hand, in the robustness to the initial model and, on the other hand, in the learning efficiency of the ProtoNet through the active selection of the support set in each iteration. This framework was validated on the UCI HAR/HAPT dataset and a real-world braking maneuver dataset. The learning performance significantly surpasses traditional AL algorithms on both datasets, achieving 90% classification accuracy with 10% and 5% labeling effort, respectively.
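The classification step of a Prototypical Network can be illustrated with a minimal sketch. This is not the authors' implementation: the embeddings are assumed to be precomputed lists of floats (a real ProtoNet would produce them with a learned encoder), and the names `class_prototypes` and `classify`, as well as the toy labels, are hypothetical.

```python
import math
from collections import defaultdict

def class_prototypes(support_embeddings, support_labels):
    """Average the support embeddings of each class into one prototype per class."""
    grouped = defaultdict(list)
    for emb, label in zip(support_embeddings, support_labels):
        grouped[label].append(emb)
    return {
        label: [sum(dim) / len(embs) for dim in zip(*embs)]
        for label, embs in grouped.items()
    }

def classify(query_embedding, prototypes):
    """Return the label whose prototype is closest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(query_embedding, prototypes[label]))

# Toy support set (assumed, illustrative values only).
support = [[0.0, 0.0], [0.2, 0.0], [1.0, 1.0], [1.0, 0.8]]
labels = ["idle", "idle", "braking", "braking"]

prototypes = class_prototypes(support, labels)
prediction = classify([0.9, 0.9], prototypes)
```

In an active learning loop of the kind the abstract describes, the support set passed to `class_prototypes` would grow as newly queried instances are labeled.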

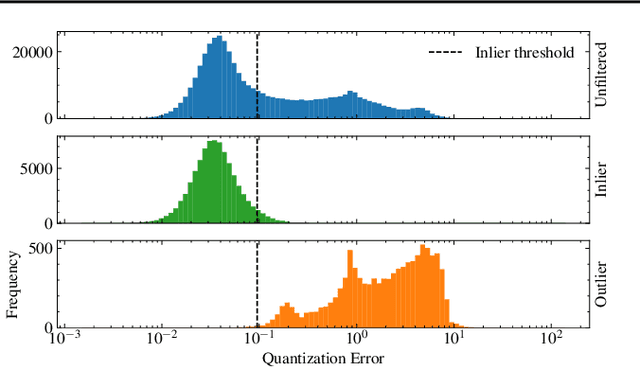

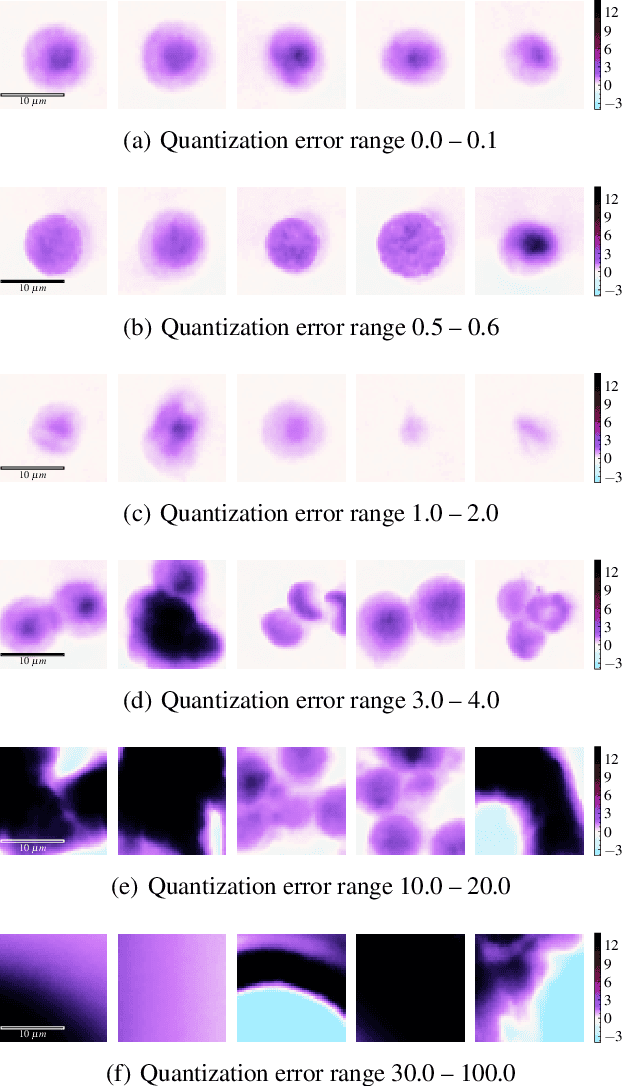

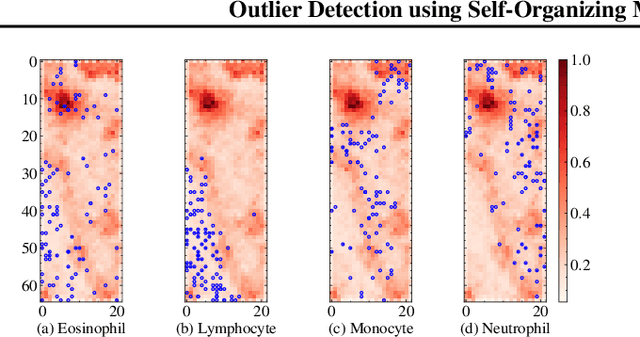

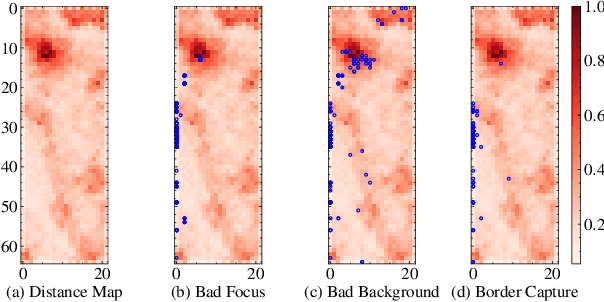

Outlier Detection using Self-Organizing Maps for Automated Blood Cell Analysis

Aug 18, 2022

Abstract:The quality of datasets plays a crucial role in the successful training and deployment of deep learning models. Especially in the medical field, where system performance may impact the health of patients, clean datasets are a safety requirement for reliable predictions. Therefore, outlier detection is an essential process when building autonomous clinical decision systems. In this work, we assess the suitability of Self-Organizing Maps for outlier detection specifically on a medical dataset containing quantitative phase images of white blood cells. We detect and evaluate outliers based on quantization errors and distance maps. Our findings confirm the suitability of Self-Organizing Maps for unsupervised Out-Of-Distribution detection on the dataset at hand. Self-Organizing Maps perform on par with a manually specified filter based on expert domain knowledge. Additionally, they show promise as a tool in the exploration and cleaning of medical datasets. As a direction for future research, we suggest a combination of Self-Organizing Maps and feature extraction based on deep learning.
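Outlier scoring by quantization error can be sketched as follows. This is a minimal illustration under stated assumptions, not the method evaluated in the paper: the codebook stands in for the weight vectors of an already trained Self-Organizing Map, and the threshold value and the names `quantization_error` and `flag_outliers` are hypothetical.

```python
import math

def quantization_error(sample, codebook):
    """Distance from a sample to its best-matching unit (closest SOM weight vector)."""
    return min(math.dist(sample, unit) for unit in codebook)

def flag_outliers(samples, codebook, threshold):
    """Mark samples whose quantization error exceeds the threshold."""
    return [quantization_error(s, codebook) > threshold for s in samples]

# Assumed toy codebook; a real one would come from SOM training.
codebook = [[0.0, 0.0], [1.0, 1.0]]
samples = [[0.1, 0.0], [0.9, 1.0], [5.0, 5.0]]

flags = flag_outliers(samples, codebook, threshold=1.0)
```

Samples that lie far from every map unit receive a large quantization error, which is why the third sample is the only one flagged here.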

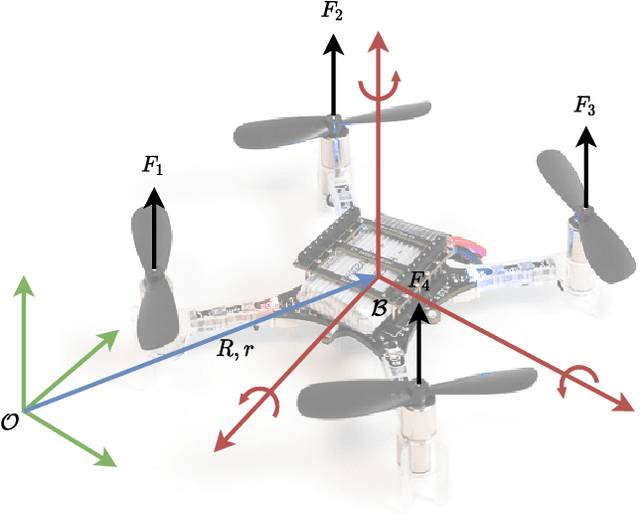

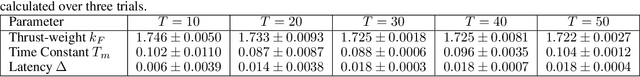

Using Simulation Optimization to Improve Zero-shot Policy Transfer of Quadrotors

Jan 04, 2022

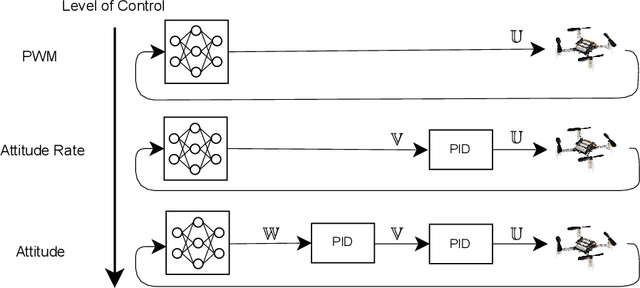

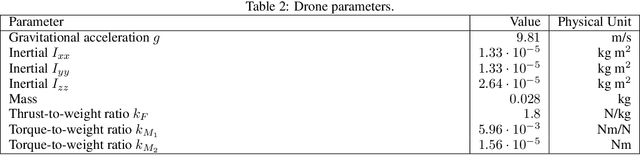

Abstract:In this work, we show that it is possible to train low-level control policies with reinforcement learning entirely in simulation and, then, deploy them on a quadrotor robot without using real-world data to fine-tune. To render zero-shot policy transfers feasible, we apply simulation optimization to narrow the reality gap. Our neural network-based policies use only onboard sensor data and run entirely on the embedded drone hardware. In extensive real-world experiments, we compare three different control structures ranging from low-level pulse-width-modulated motor commands to high-level attitude control based on nested proportional-integral-derivative controllers. Our experiments show that low-level controllers trained with reinforcement learning require a more accurate simulation than higher-level control policies.

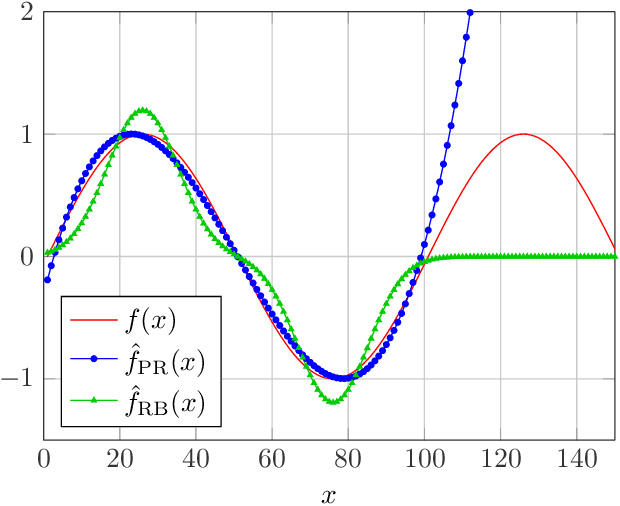

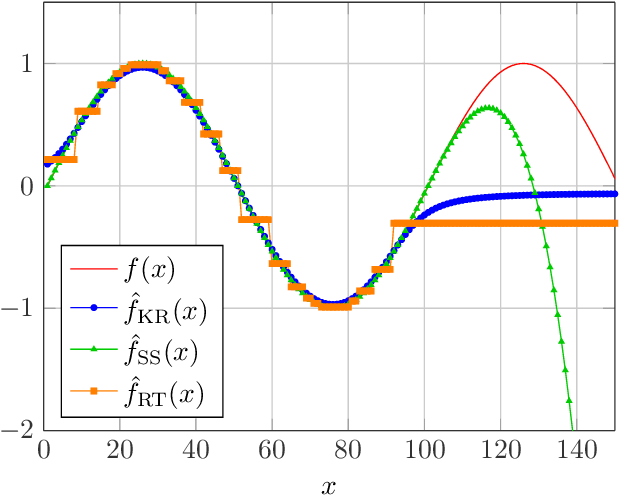

Analysis and Optimisation of Bellman Residual Errors with Neural Function Approximation

Jun 17, 2021

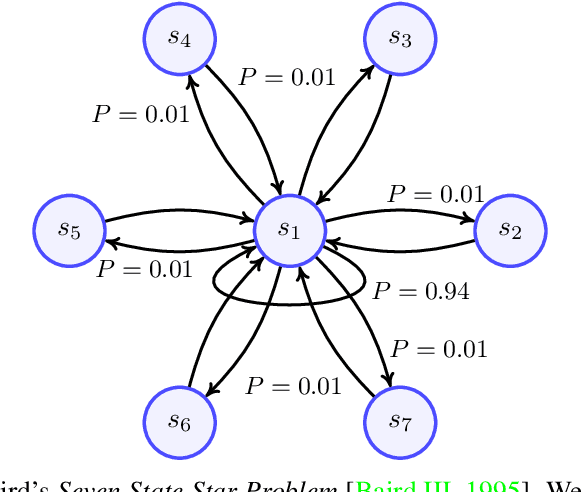

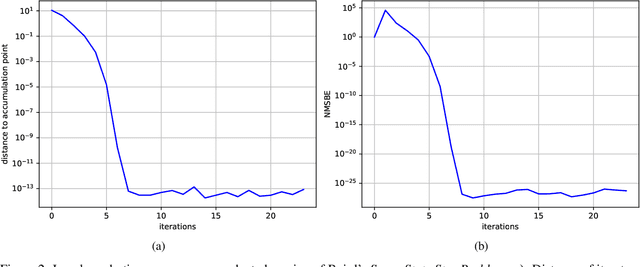

Abstract:Recent development of Deep Reinforcement Learning has demonstrated superior performance of neural networks in solving challenging problems with large or even continuous state spaces. One specific approach is to deploy neural networks to approximate value functions by minimising the Mean Squared Bellman Error function. Despite great successes of Deep Reinforcement Learning, development of reliable and efficient numerical algorithms to minimise the Bellman Error is still of great scientific interest and practical demand. Such a challenge is partially due to the underlying optimisation problem being highly non-convex or using incorrect gradient information as done in Semi-Gradient algorithms. In this work, we analyse the Mean Squared Bellman Error from a smooth optimisation perspective combined with a Residual Gradient formulation. Our contribution is two-fold. First, we analyse critical points of the error function and provide technical insights on the optimisation procedure and design choices for neural networks. When the existence of global minima is assumed and the objective fulfils certain conditions, we can eliminate suboptimal local minima when using over-parametrised neural networks. We can construct an efficient Approximate Newton's algorithm based on our analysis and confirm theoretical properties of this algorithm, such as being locally quadratically convergent to a global minimum, numerically. Second, we demonstrate feasibility and generalisation capabilities of the proposed algorithm empirically using continuous control problems and provide a numerical verification of our critical point analysis. We outline the shortcoming of Semi-Gradients. To benefit from an approximate Newton's algorithm, complete derivatives of the Mean Squared Bellman Error must be considered during training.
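The difference between a Residual Gradient and a Semi-Gradient can be made concrete with a small sketch. It deliberately simplifies the setting of the abstract: a linear value function V(s) = w · φ(s) replaces the neural network, and the loss is the squared Bellman residual of a single transition. All function names and the toy feature vectors are assumptions for illustration.

```python
def value(w, phi):
    """Linear value estimate V(s) = w . phi(s)."""
    return sum(wi * pi for wi, pi in zip(w, phi))

def td_error(w, phi_s, reward, phi_next, gamma):
    """Bellman residual delta = r + gamma * V(s') - V(s)."""
    return reward + gamma * value(w, phi_next) - value(w, phi_s)

def residual_gradient(w, phi_s, reward, phi_next, gamma):
    """Complete gradient of 0.5 * delta**2 with respect to w."""
    delta = td_error(w, phi_s, reward, phi_next, gamma)
    return [delta * (gamma * pn - ps) for ps, pn in zip(phi_s, phi_next)]

def semi_gradient(w, phi_s, reward, phi_next, gamma):
    """Gradient that treats the bootstrapped target r + gamma * V(s') as a constant."""
    delta = td_error(w, phi_s, reward, phi_next, gamma)
    return [-delta * ps for ps in phi_s]

# Assumed toy transition with one-hot features.
w = [0.0, 0.0]
phi_s, phi_next = [1.0, 0.0], [0.0, 1.0]

full = residual_gradient(w, phi_s, reward=1.0, phi_next=phi_next, gamma=0.9)
semi = semi_gradient(w, phi_s, reward=1.0, phi_next=phi_next, gamma=0.9)
```

The two gradients agree on the component belonging to the current state but differ on the component belonging to the successor state, which the Semi-Gradient drops.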

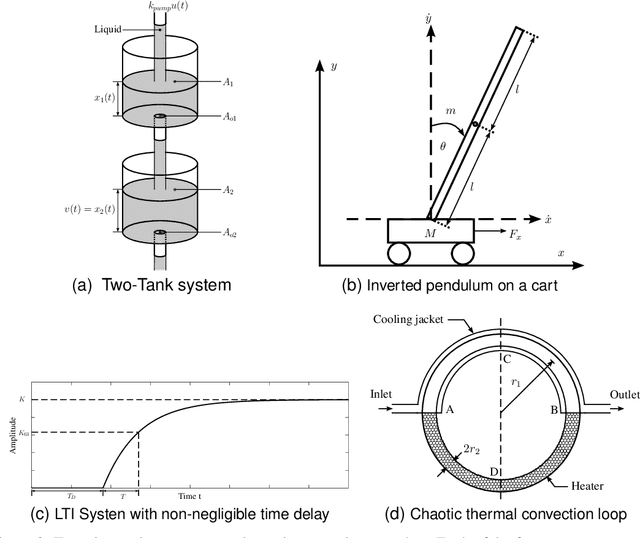

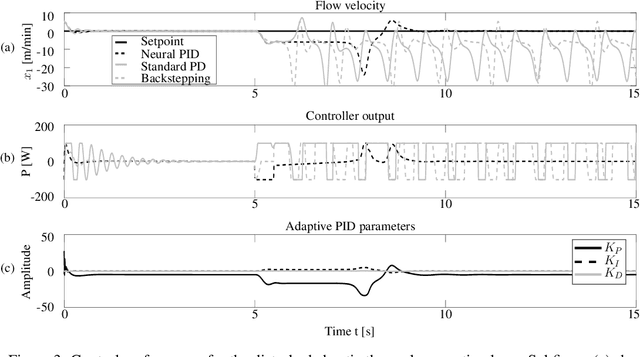

General Dynamic Neural Networks for explainable PID parameter tuning in control engineering: An extensive comparison

May 30, 2019

Abstract:Automation, the ability to run processes without human supervision, is one of the most important drivers of increased scalability and productivity. Modern automation largely relies on forms of closed loop control, wherein a controller interacts with a controlled process via actions, based on observations. Despite an increase in the use of machine learning for process control, most deployed controllers still are linear Proportional-Integral-Derivative (PID) controllers. PID controllers perform well on linear and near-linear systems but are not robust enough for more complex processes. As a main contribution of this paper, we examine the utility of extending standard PID controllers with General Dynamic Neural Networks (GDNN); we show that GDNN (neural) PID controllers perform well on a range of control systems and highlight what is needed to make them a stable, scalable, and interpretable option for control. To do so, we provide a comprehensive study using four different benchmark processes. All control environments are evaluated with and without noise as well as with and without disturbances. The neural PID controller performs better than standard PID control in 15 of 16 tasks and better than model-based control in 13 of 16 tasks. As a second contribution of this work, we address the Achilles heel that prevents neural networks from being used in real-world control processes so far: lack of interpretability. We use bounded-input bounded-output stability analysis to evaluate the parameters suggested by the neural network, thus making them understandable for human engineers. This combination of rigorous evaluation paired with better explainability is an important step towards the acceptance of neural-network-based control approaches for real-world systems. It is furthermore an important step towards explainable and safe applied artificial intelligence.
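For readers unfamiliar with the baseline, a discrete-time PID controller can be sketched in a few lines. This is a generic textbook form with fixed gains, not the GDNN-based controller from the paper; in the approach described above, a neural network would suggest the gains. The class name, gain values, and time step are assumptions.

```python
class PID:
    """Discrete-time PID controller with fixed gains (illustrative sketch)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def step(self, setpoint, measurement):
        """Return the control action for the current observation."""
        error = setpoint - measurement
        # Accumulate the integral term with a simple rectangle rule.
        self.integral += error * self.dt
        # No derivative contribution on the first step (no previous error yet).
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

controller = PID(kp=2.0, ki=1.0, kd=0.5, dt=0.5)
u1 = controller.step(setpoint=1.0, measurement=0.0)
u2 = controller.step(setpoint=1.0, measurement=0.5)
```

The three gains are the parameters whose choice a stability analysis, such as the bounded-input bounded-output analysis mentioned in the abstract, would need to check.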

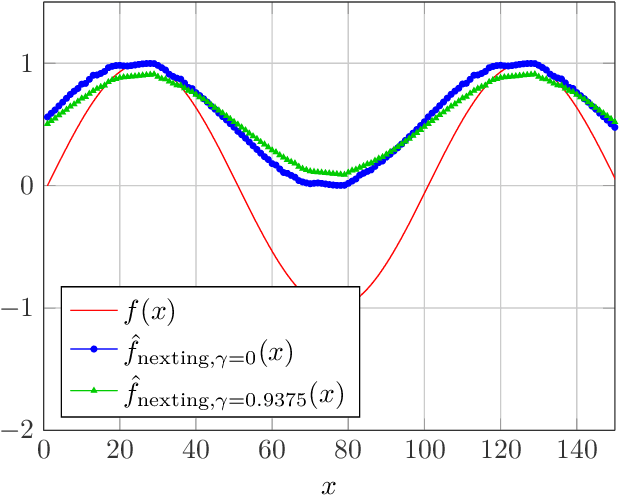

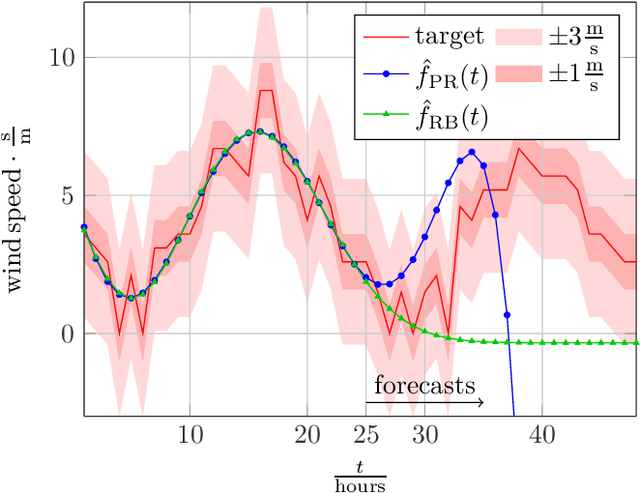

A Comparison of Prediction Algorithms and Nexting for Short Term Weather Forecasts

Mar 18, 2019

Abstract:This report first provides a brief overview of a number of supervised learning algorithms for regression tasks. Among those are neural networks, regression trees, and the recently introduced Nexting. Nexting has been presented in the context of reinforcement learning where it was used to predict a large number of signals at different timescales. In the second half of this report, we apply the algorithms to historical weather data in order to evaluate their suitability to forecast a local weather trend. Our experiments did not identify one clearly preferable method, but rather show that choosing an appropriate algorithm depends on the available side information. For slowly varying signals and a sufficient number of training samples, Nexting achieved good results in the studied cases.

The Virtuous Machine - Old Ethics for New Technology?

Jun 27, 2018

Abstract:Modern AI and robotic systems are characterized by a high and ever-increasing level of autonomy. At the same time, their applications in fields such as autonomous driving, service robotics and digital personal assistants move closer to humans. From the combination of both developments emerges the field of AI ethics which recognizes that the actions of autonomous machines entail moral dimensions and tries to answer the question of how we can build moral machines. In this paper we argue for taking inspiration from Aristotelian virtue ethics by showing that it forms a suitable combination with modern AI due to its focus on learning from experience. We furthermore propose that imitation learning from moral exemplars, a central concept in virtue ethics, can solve the value alignment problem. Finally, we show that an intelligent system endowed with the virtues of temperance and friendship to humans would not pose a control problem as it would not have the desire for limitless self-improvement.
