Kevin van Hecke

The NederDrone: A hybrid lift, hybrid energy hydrogen UAV

Nov 08, 2020

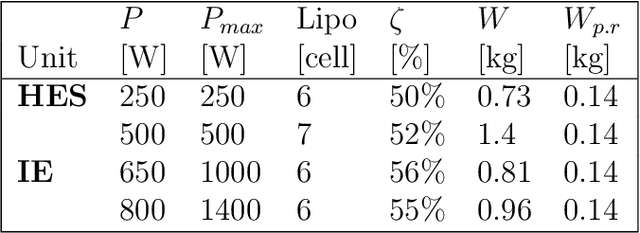

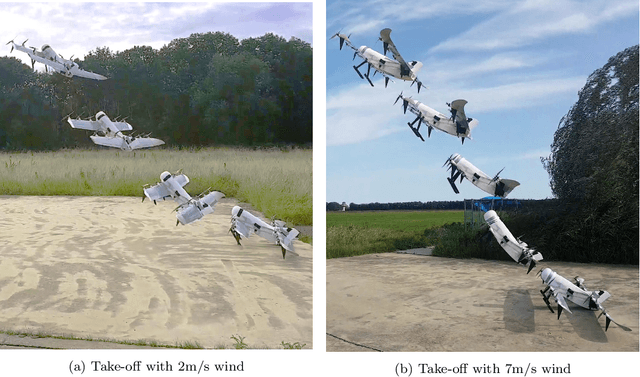

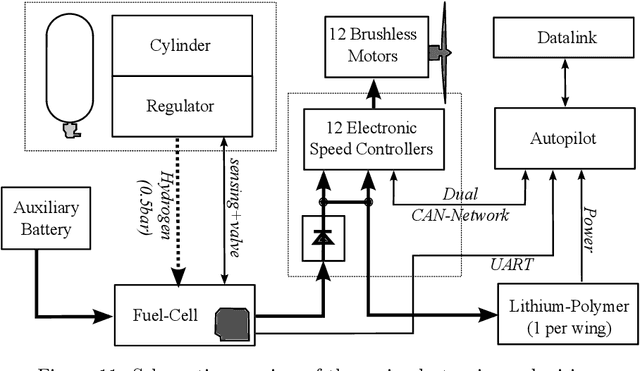

Abstract: Many UAV applications require vertical take-off and landing (VTOL) combined with very long range or endurance. Transitioning UAVs have been proposed to combine the VTOL capabilities of helicopters with the efficient long-range flight properties of fixed-wing aircraft. But energy is still a bottleneck for many electric long-endurance applications. While solar power and battery technology have improved a lot, in rougher conditions they still lack, respectively, the power or the total amount of energy required for many real-world situations. In this paper, we introduce the NederDrone, a hybrid lift, hybrid energy hydrogen-powered UAV which can perform vertical take-offs and landings using 12 propellers while flying efficiently in forward flight thanks to its fixed wings. The energy is supplied by a mix of hydrogen-driven fuel cells, which store large amounts of energy, and battery power for high-power situations. The hydrogen is stored in a pressurized cylinder around which the UAV is optimized. This paper analyses the selection of the concept, the implemented safety elements, the electronics and flight control, and shows flight data including a 3h38 flight at sea, taking off from and landing on a small moving ship.

Fusion of stereo and still monocular depth estimates in a self-supervised learning context

Mar 20, 2018

Abstract: We study how autonomous robots can learn by themselves to improve their depth estimation capability. In particular, we investigate a self-supervised learning setup in which stereo vision depth estimates serve as targets for a convolutional neural network (CNN) that transforms a single still image into a dense depth map. After training, the stereo and mono estimates are fused with a novel fusion method that preserves high-confidence stereo estimates, while leveraging the CNN estimates in the low-confidence regions. The main contribution of the article is to show that the fused estimates lead to higher performance than the stereo vision estimates alone. Experiments are performed on the KITTI dataset and on board a Parrot SLAMDunk, showing that even rather limited CNNs can help provide stereo-vision-equipped robots with more reliable depth maps for autonomous navigation.
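The fusion idea in this abstract (keep stereo depth where it is trusted, fall back on the CNN estimate elsewhere) can be illustrated with a minimal sketch. This is not the fusion method of the paper, whose details are not given in the abstract; the function name `fuse_depth`, the per-pixel `confidence` map in [0, 1], and the `threshold` parameter are all assumptions made for illustration.

```python
import math


def fuse_depth(stereo, mono, confidence, threshold=0.5):
    """Fuse two depth maps given as equally sized lists of rows.

    Hypothetical scheme, NOT the paper's method:
    - a stereo pixel with confidence >= threshold is kept unchanged;
    - a missing stereo pixel (NaN or inf) is replaced by the mono estimate;
    - otherwise the two estimates are blended, weighted by the confidence.
    """
    fused = []
    for s_row, m_row, c_row in zip(stereo, mono, confidence):
        row = []
        for s, m, c in zip(s_row, m_row, c_row):
            if not math.isfinite(s):
                # No stereo match at this pixel: rely on the mono estimate.
                row.append(m)
                continue
            w = min(max(c, 0.0), 1.0)  # clamp confidence to [0, 1]
            if w >= threshold:
                w = 1.0  # preserve high-confidence stereo exactly
            row.append(w * s + (1.0 - w) * m)
        fused.append(row)
    return fused


# Depths in metres; the values below are made up for the example.
stereo = [[2.0, float("nan")], [4.0, 10.0]]
mono = [[3.0, 5.0], [6.0, 20.0]]
confidence = [[0.9, 0.9], [0.0, 0.25]]
fused = fuse_depth(stereo, mono, confidence)
```

The hard threshold reflects the abstract's statement that high-confidence stereo estimates are preserved; the linear blend below the threshold is only one of several plausible choices for the low-confidence regions.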

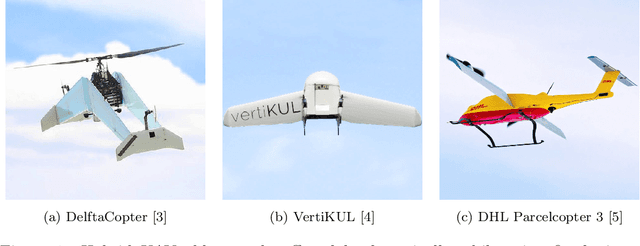

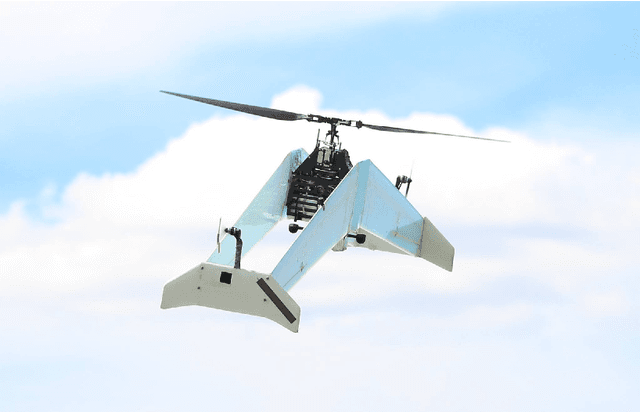

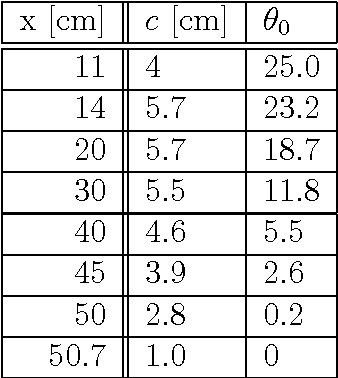

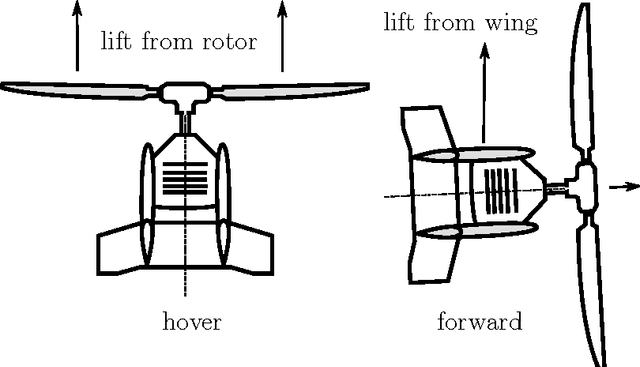

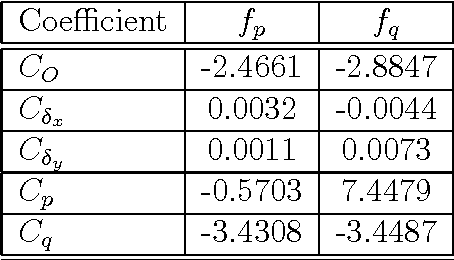

Design, Control and Visual Navigation of the DelftaCopter

Jan 03, 2017

Abstract: To participate in the Outback Medical Express UAV Challenge 2016, a vehicle was designed and tested that can hover precisely, take off and land vertically, fly fast forward efficiently and use computer vision to locate a person and a suitable landing location. A rotor blade was designed that can deliver sufficient thrust in hover, while still being efficient in fast forward flight. Energy measurements and wind tunnel tests were performed. A rotor head and corresponding control algorithms were developed to allow transitioning flight with the non-conventional rotor dynamics. Dedicated electronics were designed that meet vehicle needs and regulations to allow safe flight beyond visual line of sight. Vision-based search and guidance algorithms were developed and tested. Flight tests and participation in the competition illustrate the applicability of the DelftaCopter concept.

Persistent self-supervised learning principle: from stereo to monocular vision for obstacle avoidance

Mar 25, 2016

Abstract: Self-Supervised Learning (SSL) is a reliable learning mechanism in which a robot uses an original, trusted sensor cue as training signal to learn to recognize an additional, complementary sensor cue. We study for the first time in SSL how a robot's learning behavior should be organized, so that the robot can keep performing its task if the original cue becomes unavailable. We study this persistent form of SSL in the context of a flying robot that has to avoid obstacles based on distance estimates from the visual cue of stereo vision. Over time it will learn to also estimate distances based on monocular appearance cues. A strategy is introduced that has the robot switch from stereo-vision-based flight to monocular flight, with stereo vision used purely as 'training wheels' to avoid imminent collisions. This strategy is shown to be an effective approach to the 'feedback-induced data bias' problem, which is also experienced in learning from demonstration. Both simulations and real-world experiments with a stereo-vision-equipped AR drone 2.0 show the feasibility of this approach, with the robot successfully using monocular vision to avoid obstacles in a 5 x 5 room. The experiments show the potential of persistent SSL as a robust learning approach to enhance the capabilities of robots. Moreover, the abundant training data coming from the robot's own sensors makes it possible to gather the large data sets necessary for deep learning approaches.
