Kenji Kobayashi

SoccerNet 2023 Challenges Results

Sep 12, 2023

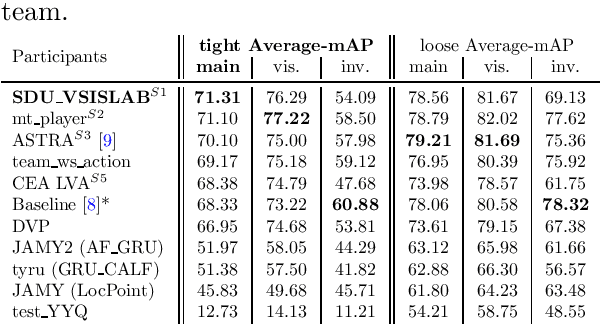

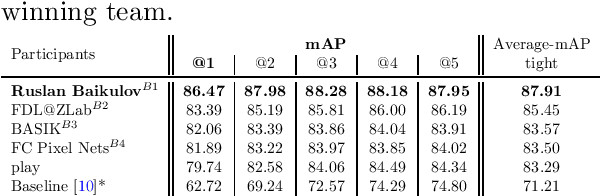

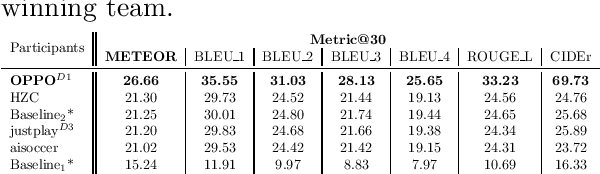

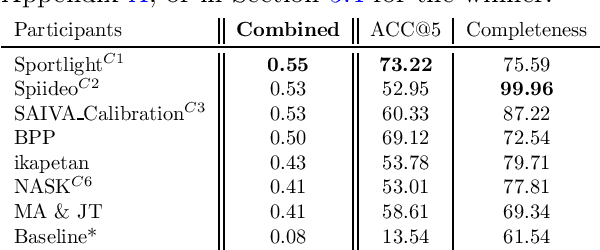

Abstract: The SoccerNet 2023 challenges were the third annual video understanding challenges organized by the SoccerNet team. For this third edition, the challenges were composed of seven vision-based tasks split into three main themes. The first theme, broadcast video understanding, is composed of three high-level tasks related to describing events occurring in the video broadcasts: (1) action spotting, focusing on retrieving all timestamps related to global actions in soccer, (2) ball action spotting, focusing on retrieving all timestamps related to changes in the state of the soccer ball, and (3) dense video captioning, focusing on describing the broadcast with natural language and anchored timestamps. The second theme, field understanding, relates to the single task of (4) camera calibration, focusing on retrieving the intrinsic and extrinsic camera parameters from images. The third and last theme, player understanding, is composed of three low-level tasks related to extracting information about the players: (5) re-identification, focusing on retrieving the same players across multiple views, (6) multiple object tracking, focusing on tracking players and the ball through unedited video streams, and (7) jersey number recognition, focusing on recognizing the jersey number of players from tracklets. Compared to the previous editions of the SoccerNet challenges, tasks (2), (3), and (7) are novel, including new annotations and data, task (4) was enhanced with more data and annotations, and task (6) now focuses on end-to-end approaches. More information on the tasks, challenges, and leaderboards is available at https://www.soccer-net.org. Baselines and development kits can be found at https://github.com/SoccerNet.

One-vs.-One Mitigation of Intersectional Bias: A General Method to Extend Fairness-Aware Binary Classification

Oct 26, 2020

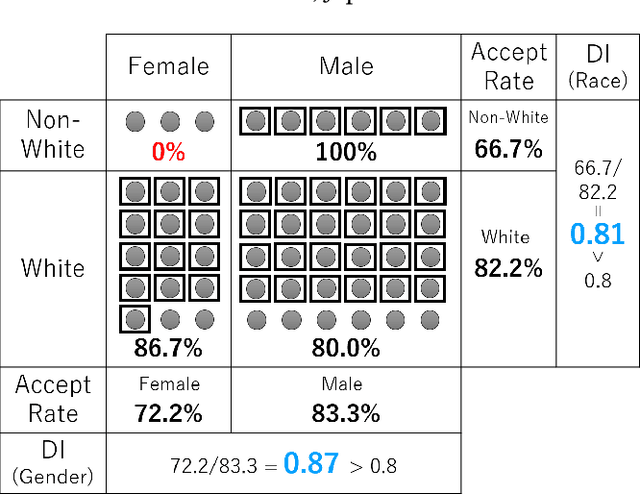

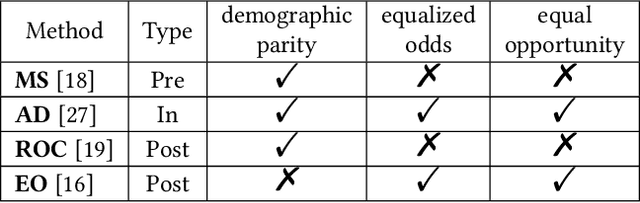

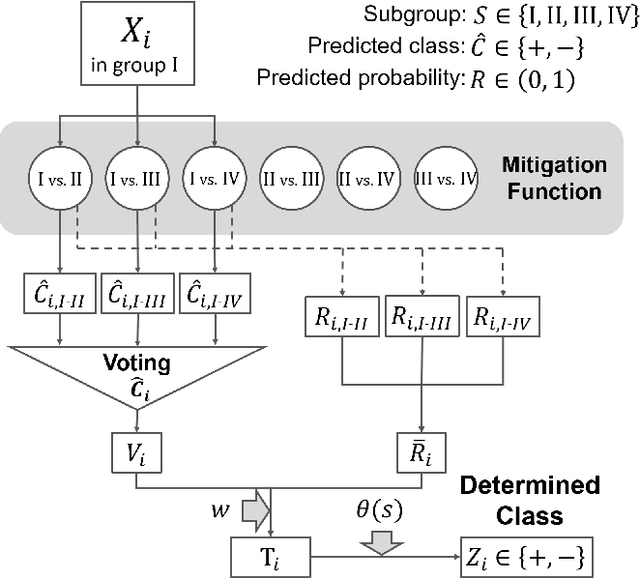

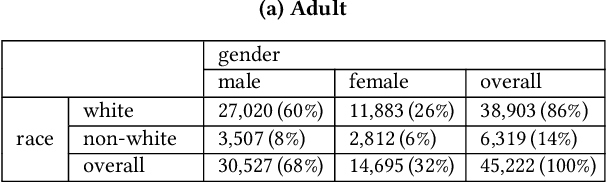

Abstract: With the widespread adoption of machine learning in the real world, the impact of discriminatory bias has attracted attention. In recent years, various methods to mitigate this bias have been proposed. However, most of them have not considered intersectional bias, which produces unfair situations where people belonging to specific subgroups of a protected group are treated worse when multiple sensitive attributes are taken into consideration. To mitigate this bias, we propose a method called One-vs.-One Mitigation, which applies a comparison between each pair of subgroups defined by the sensitive attributes to fairness-aware machine learning for binary classification. We compare our method with conventional fairness-aware binary classification methods in comprehensive settings using three approaches (pre-processing, in-processing, and post-processing), six metrics (the ratio and difference of demographic parity, equalized odds, and equal opportunity), and two real-world datasets (Adult and COMPAS). Our method mitigates the intersectional bias much better than the conventional methods in all the settings. With this result, we open up the potential of fairness-aware binary classification for solving more realistic problems that occur when there are multiple sensitive attributes.
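To make the demographic parity metrics named in the abstract concrete, the following is a minimal Python sketch of how the difference and ratio could be computed over intersectional subgroups. It is an illustration only, not the paper's One-vs.-One Mitigation method. The function names, the toy data, and the convention of comparing the highest and lowest subgroup rates are all assumptions made for this example.

```python
from collections import defaultdict


def subgroup_positive_rates(y_pred, sensitive):
    """Return the positive-prediction rate for each intersectional subgroup.

    `sensitive` holds one tuple per sample, e.g. (sex, race), so each
    distinct tuple identifies one subgroup. (Hypothetical helper.)
    """
    counts = defaultdict(lambda: [0, 0])  # subgroup -> [positives, total]
    for pred, group in zip(y_pred, sensitive):
        counts[group][0] += int(pred == 1)
        counts[group][1] += 1
    return {group: pos / total for group, (pos, total) in counts.items()}


def demographic_parity_gap(rates):
    """Return (difference, ratio) between the extreme subgroup rates.

    Assumed convention: difference = max - min, ratio = min / max.
    A perfectly fair classifier under this metric gives (0.0, 1.0).
    """
    lowest, highest = min(rates.values()), max(rates.values())
    difference = highest - lowest
    ratio = lowest / highest if highest > 0 else 1.0
    return difference, ratio


# Toy predictions for two sensitive attributes (values are made up).
y_pred = [1, 1, 0, 1, 0, 0, 1, 0]
sensitive = [
    ("F", "A"), ("F", "A"), ("F", "B"), ("F", "B"),
    ("M", "A"), ("M", "A"), ("M", "B"), ("M", "B"),
]

rates = subgroup_positive_rates(y_pred, sensitive)
difference, ratio = demographic_parity_gap(rates)
print(rates)
print(difference, ratio)
```

In this toy data each single attribute looks balanced on its own (both sexes and both races receive positive predictions at a rate of 0.5), yet the subgroup ("M", "A") never receives a positive prediction. This is the kind of disparity that only appears when the sensitive attributes are considered jointly.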
