Kedar Bellare

Learning to Rank for Maps at Airbnb

Jun 25, 2024

Abstract: As a two-sided marketplace, Airbnb brings together hosts who own listings for rent with prospective guests from around the globe. Results from a guest's search for listings are displayed primarily through two interfaces: (1) as a list of rectangular cards showing the listing image, price, rating, and other details, referred to as list-results, and (2) as oval pins on a map showing the listing price, called map-results. Both these interfaces, since their inception, have used the same ranking algorithm that orders listings by their booking probabilities and selects the top listings for display. But some of the basic assumptions underlying ranking, built for a world where search results are presented as lists, simply break down for maps. This paper describes how we rebuilt ranking for maps by revising the mathematical foundations of how users interact with search results. Our iterative and experiment-driven approach led us through a path full of twists and turns, ending in a unified theory for the two interfaces. Our journey shows how assumptions taken for granted when designing machine learning algorithms may not apply equally across all user interfaces, and how they can be adapted. The net impact was one of the largest improvements in user experience for Airbnb, which we discuss as a series of experimental validations.

Leveraging Multiple Online Sources for Accurate Income Verification

Jun 19, 2021

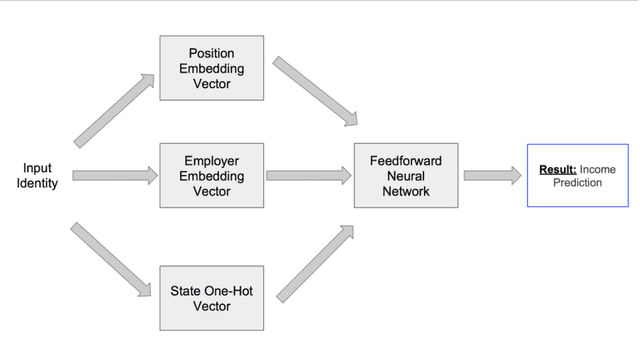

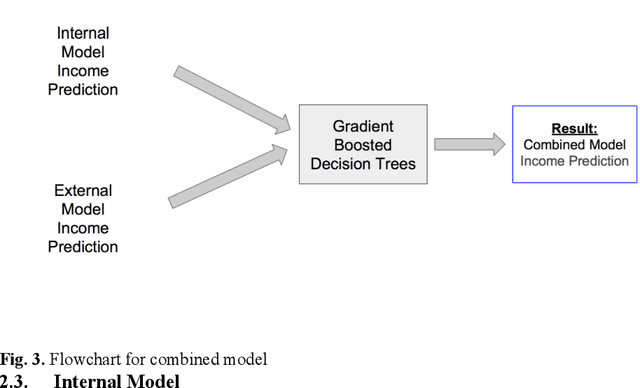

Abstract: Income verification is the problem of validating a person's stated income given basic identity information such as name, location, job title and employer. It is widely used in the context of mortgage lending, rental applications and other financial risk models. However, the current processes surrounding verification involve significant human effort and document gathering, which can be both time-consuming and expensive. In this paper, we propose a novel model for verifying an individual's income given the very limited identity information typically available in loan applications. Our model is a combination of a deep neural network and hand-engineered features. The hand-engineered features are based upon matching the input information against income records extracted automatically from various publicly available online sources (e.g. payscale.com, H-1B filings, government employee salaries). We conduct experiments on two data sets, one simulated from H-1B records and the other a real-world data set of peer-to-peer (P2P) loan applications obtained from one of the world's largest P2P lending platforms. Our results show a significant reduction in error of 3-6% relative to several strong baselines. We also perform ablation studies to demonstrate that a combined model is indeed necessary to achieve state-of-the-art performance on this task.

A Conditional Random Field for Discriminatively-trained Finite-state String Edit Distance

Jul 04, 2012

Abstract: The need to measure sequence similarity arises in information extraction, object identity, data mining, biological sequence analysis, and other domains. This paper presents discriminative string-edit CRFs, a finite-state conditional random field model for edit sequences between strings. Conditional random fields have advantages over generative approaches to this problem, such as pair HMMs or the work of Ristad and Yianilos, because as conditionally-trained methods, they enable the use of complex, arbitrary actions and features of the input strings. As in generative models, the training data does not have to specify the edit sequences between the given string pairs. Unlike generative models, however, our model is trained on both positive and negative instances of string pairs. We present positive experimental results on several data sets.
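
For orientation, an edit sequence between two strings is a series of insertions, deletions, and substitutions that turns one into the other. The sketch below is the classic fixed-cost Levenshtein distance, not the CRF model from the paper: it assumes every edit costs 1, whereas the abstract describes learning from positive and negative string pairs. The function name `edit_distance` is illustrative only.

```python
def edit_distance(source: str, target: str) -> int:
    """Unit-cost Levenshtein distance (a baseline, not the paper's CRF)."""
    # previous[j] holds the distance between the source prefix processed
    # so far and the first j characters of target.
    previous = list(range(len(target) + 1))
    for i, s_char in enumerate(source, start=1):
        current = [i]  # deleting i characters yields the empty prefix
        for j, t_char in enumerate(target, start=1):
            substitution = previous[j - 1] + (s_char != t_char)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]


if __name__ == "__main__":
    print(edit_distance("kitten", "sitting"))
```

A fixed-cost distance like this treats all edits alike; a trained model can instead weight edits by features of the input strings.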

Alternating Projections for Learning with Expectation Constraints

May 09, 2012

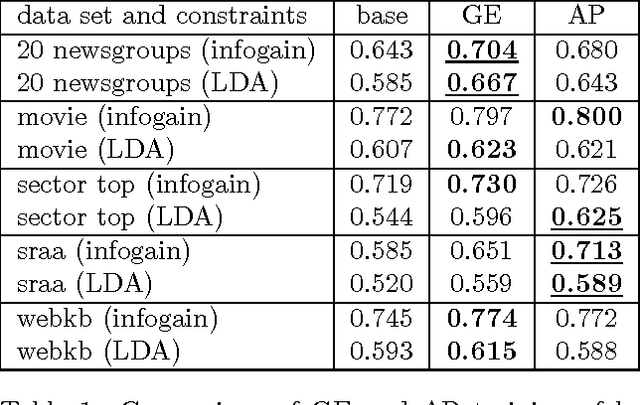

Abstract: We present an objective function for learning with unlabeled data that utilizes auxiliary expectation constraints. We optimize this objective function using a procedure that alternates between information and moment projections. Our method provides an alternate interpretation of the posterior regularization framework (Graca et al., 2008), maintains uncertainty during optimization unlike constraint-driven learning (Chang et al., 2007), and is more efficient than generalized expectation criteria (Mann & McCallum, 2008). Applications of this framework include minimally supervised learning, semi-supervised learning, and learning with constraints that are more expressive than the underlying model. In experiments, we demonstrate comparable accuracy to generalized expectation criteria for minimally supervised learning, and use expressive structural constraints to guide semi-supervised learning, providing a 3%-6% improvement over state-of-the-art constraint-driven learning.
