Kedan Li

Attention Distance: A Novel Metric for Directed Fuzzing with Large Language Models

Dec 19, 2025

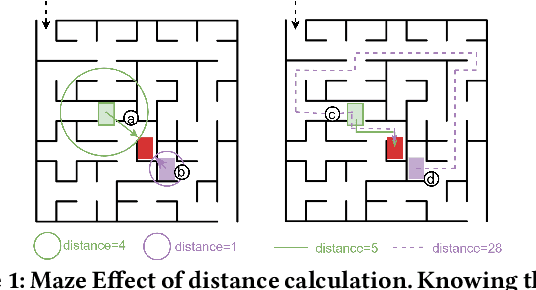

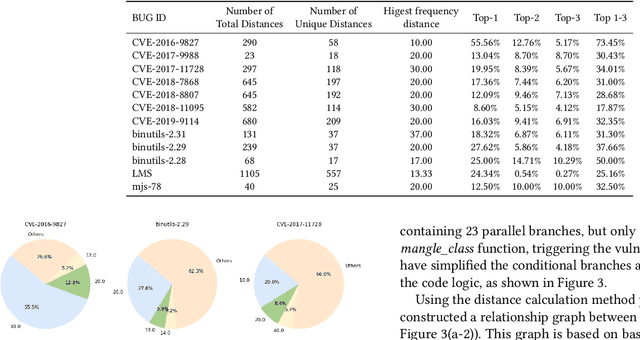

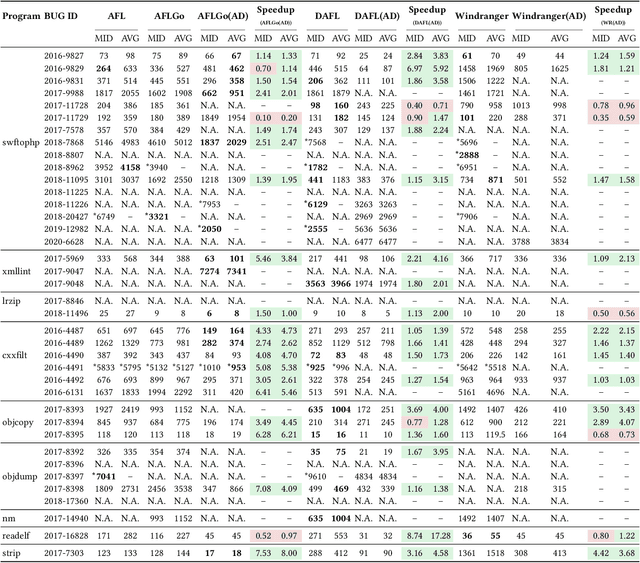

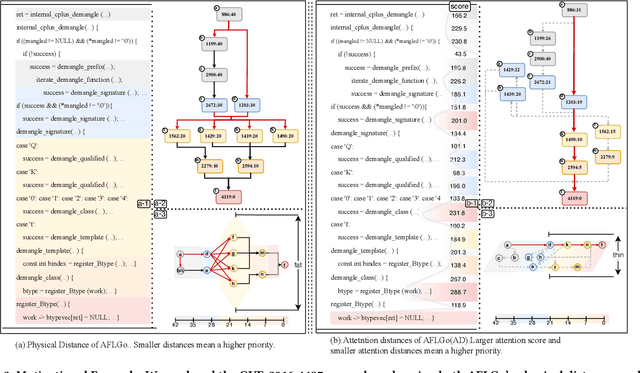

Abstract: In the domain of software security testing, Directed Grey-Box Fuzzing (DGF) has garnered widespread attention for its efficient target localization and excellent detection performance. However, existing approaches measure only the physical distance between seed execution paths and target locations, overlooking logical relationships among code segments. This omission can yield redundant or misleading guidance in complex binaries, weakening DGF's real-world effectiveness. To address this, we introduce attention distance, a novel metric that leverages a large language model's contextual analysis to compute attention scores between code elements and reveal their intrinsic connections. Under the same AFLGo configuration -- without altering any fuzzing components other than the distance metric -- replacing physical distances with attention distances across 38 real vulnerability reproduction experiments delivers a 3.43× average increase in testing efficiency over the traditional method. Compared to state-of-the-art directed fuzzers DAFL and WindRanger, our approach achieves 2.89× and 7.13× improvements, respectively. To further validate the generalizability of attention distance, we integrate it into DAFL and WindRanger, where it also consistently enhances their original performance. All related code and datasets are publicly available at https://github.com/TheBinKing/Attention_Distance.git.

ACDG-VTON: Accurate and Contained Diffusion Generation for Virtual Try-On

Mar 20, 2024

Abstract: Virtual Try-on (VTON) involves generating images of a person wearing selected garments. Diffusion-based methods, in particular, can create high-quality images, but they struggle to maintain the identities of the input garments. We find that this problem stems from specifics of the training formulation for diffusion. To address this, we propose a unique training scheme that limits the scope in which diffusion is trained. We use a control image that perfectly aligns with the target image during training. In turn, this accurately preserves garment details during inference. We demonstrate that our method not only conserves garment details effectively but also allows for layering, styling, and shoe try-on. Our method runs multi-garment try-on in a single inference cycle and can support high-quality zoomed-in generations without training in higher resolutions. Finally, we show that our method surpasses prior methods in accuracy and quality.

Preserving Image Properties Through Initializations in Diffusion Models

Jan 04, 2024

Abstract: Retail photography imposes specific requirements on images. For instance, images may need uniform background colors, consistent model poses, centered products, and consistent lighting. Minor deviations from these standards impact a site's aesthetic appeal, making the images unsuitable for use. We show that Stable Diffusion methods, as currently applied, do not respect these requirements. The usual practice of training the denoiser with a very noisy image and starting inference with a sample of pure noise leads to inconsistent generated images during inference. This inconsistency occurs because it is easy to tell the difference between samples of the training and inference distributions. As a result, a network trained with centered retail product images with uniform backgrounds generates images with erratic backgrounds. The problem is easily fixed by initializing inference with samples from an approximation of noisy images. However, in using such an approximation, the joint distribution of text and noisy image at inference time still slightly differs from that at training time. This discrepancy is corrected by training the network with samples from the approximate noisy image distribution. Extensive experiments on real application data show significant qualitative and quantitative improvements in performance from adopting these procedures. Finally, our procedure can interact well with other control-based methods to further enhance the controllability of diffusion-based methods.

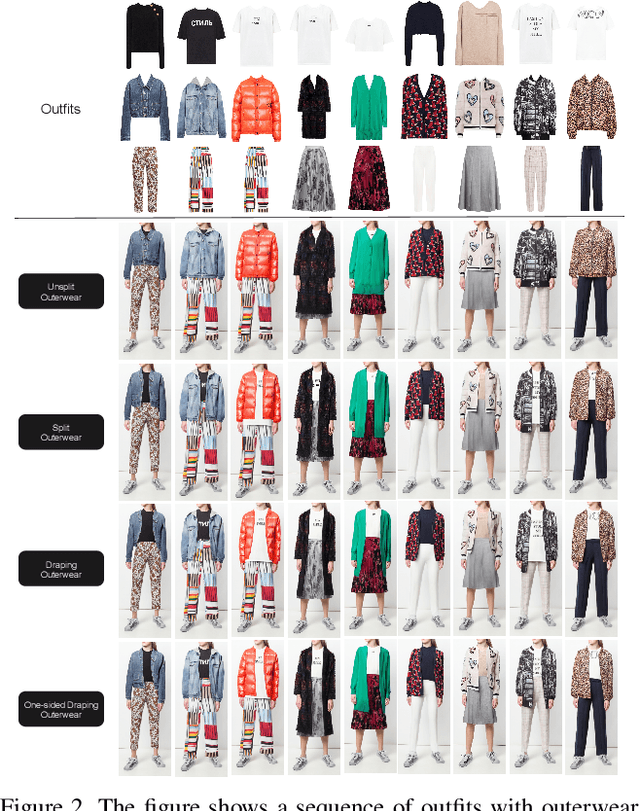

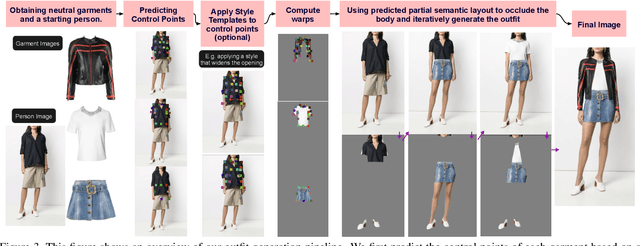

Wearing the Same Outfit in Different Ways -- A Controllable Virtual Try-on Method

Nov 29, 2022

Abstract: An outfit visualization method generates an image of a person wearing real garments from images of those garments. Current methods can produce images that look realistic and preserve garment identity, captured in details such as collar, cuffs, texture, hem, and sleeve length. However, no current method can both control how the garment is worn -- including tuck or untuck, opened or closed, high or low on the waist, etc. -- and generate realistic images that accurately preserve the properties of the original garment. We describe an outfit visualization method that controls drape while preserving garment identity. Our system allows instance-independent editing of garment drape, which means a user can construct an edit (e.g. tucking a shirt in a specific way) that can be applied to all shirts in a garment collection. Garment detail is preserved by relying on a warping procedure to place the garment on the body; a generator then supplies fine shading detail. To achieve instance-independent control, we use control points with garment category-level semantics to guide the warp. The method produces state-of-the-art quality images, while allowing creative ways to style garments, including allowing tops to be tucked or untucked; jackets to be worn open or closed; skirts to be worn higher or lower on the waist; and so on. The method also allows interactive control to correct errors in individual renderings. Because the edits are instance-independent, they can be applied to large pools of garments automatically and can be conditioned on garment metadata (e.g. all cropped jackets are worn closed or all bomber jackets are worn closed).

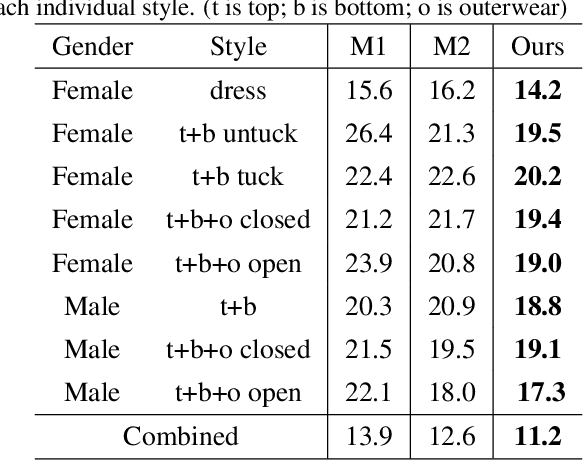

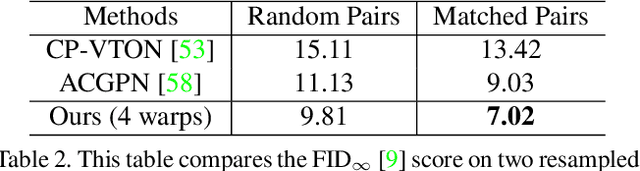

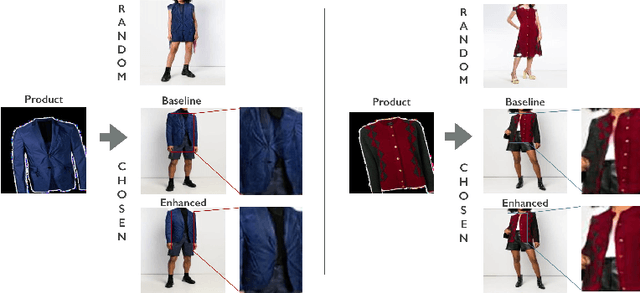

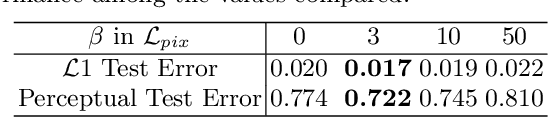

Toward Accurate and Realistic Outfits Visualization with Attention to Details

Jun 11, 2021

Abstract: Virtual try-on methods aim to generate images of fashion models wearing arbitrary combinations of garments. This is a challenging task because the generated image must appear realistic and accurately display the interaction between garments. Prior works produce images that are filled with artifacts and fail to capture important visual details necessary for commercial applications. We propose Outfit Visualization Net (OVNet) to capture these important details (e.g. buttons, shading, textures, realistic hemlines, and interactions between garments) and produce high-quality multiple-garment virtual try-on images. OVNet consists of 1) a semantic layout generator and 2) an image generation pipeline using multiple coordinated warps. We train the warper to output multiple warps using a cascade loss, which refines each successive warp to focus on poorly generated regions of a previous warp and yields consistent improvements in detail. In addition, we introduce a method for matching outfits with the most suitable model, which produces significant improvements for both our method and previous try-on methods. Through quantitative and qualitative analysis, we demonstrate that our method generates substantially higher-quality studio images compared to prior works for multi-garment outfits. An interactive interface powered by this method has been deployed on fashion e-commerce websites and received overwhelmingly positive feedback.

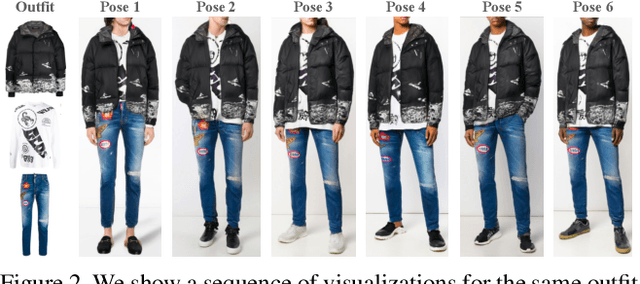

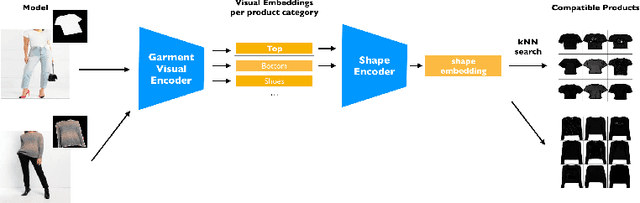

Toward Accurate and Realistic Virtual Try-on Through Shape Matching and Multiple Warps

Mar 27, 2020

Abstract: A virtual try-on method takes a product image and an image of a model and produces an image of the model wearing the product. Most methods essentially compute warps from the product image to the model image and combine using image generation methods. However, obtaining a realistic image is challenging because the kinematics of garments is complex and because outline, texture, and shading cues in the image reveal errors to human viewers. The garment must have appropriate drapes; texture must be warped to be consistent with the shape of a draped garment; small details (buttons, collars, lapels, pockets, etc.) must be placed appropriately on the garment, and so on. Evaluation is particularly difficult and is usually qualitative. This paper uses quantitative evaluation on a challenging, novel dataset to demonstrate that (a) for any warping method, one can choose target models automatically to improve results, and (b) learning multiple coordinated specialized warpers offers further improvements on results. Target models are chosen by a learned embedding procedure that predicts a representation of the products the model is wearing. This prediction is used to match products to models. Specialized warpers are trained by a method that encourages a second warper to perform well in locations where the first works poorly. The warps are then combined using a U-Net. Qualitative evaluation confirms that these improvements are wholesale over outline, texture, shading, and garment details.

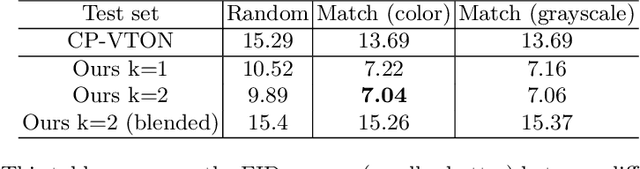

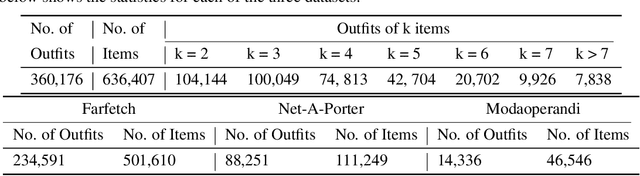

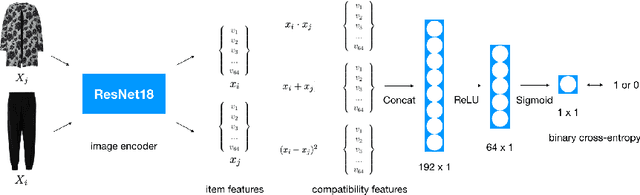

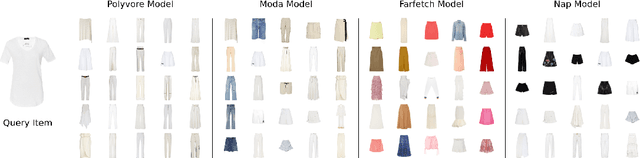

Using Discriminative Methods to Learn Fashion Compatibility Across Datasets

Jun 17, 2019

Abstract: Determining whether a pair of garments are compatible with each other is a challenging matching problem. Past works explored various embedding methods for learning such a relationship. This paper introduces the use of discriminative methods to learn compatibility, by formulating the task as a simple binary classification problem. We evaluate our approach using an established dataset of outfits created by non-experts and demonstrate an improvement of ~2.5% on established metrics over the state-of-the-art method. We introduce three new datasets of professionally curated outfits and show the consistent performance of our approach on expert-curated datasets. To facilitate comparing across outfit datasets, we propose a new metric which, unlike previously used metrics, is not biased by the average size of outfits. We also demonstrate that compatibility between two types of items can be queried indirectly, and that such a query strategy yields improvements.
