Kechun Liu

Generating Seamless Virtual Immunohistochemical Whole Slide Images with Content and Color Consistency

Oct 01, 2024

Abstract: Immunohistochemical (IHC) stains play a vital role in a pathologist's analysis of medical images, providing crucial diagnostic information for various diseases. Virtual staining from hematoxylin and eosin (H&E)-stained whole slide images (WSIs) allows the automatic production of other useful IHC stains without the expensive physical staining process. However, current virtual WSI generation methods based on tile-wise processing often suffer from inconsistencies in content, texture, and color at tile boundaries. These inconsistencies lead to artifacts that compromise image quality and potentially hinder accurate clinical assessment and diagnoses. To address this limitation, we propose a novel consistent WSI synthesis network, CC-WSI-Net, that extends GAN models to produce seamless synthetic whole slide images. Our CC-WSI-Net integrates a content- and color-consistency supervisor, ensuring consistency across tiles and facilitating the generation of seamless synthetic WSIs while ensuring Sox10 immunohistochemistry accuracy in melanocyte detection. We validate our method through extensive image-quality analyses, objective detection assessments, and a subjective survey with pathologists. By generating high-quality synthetic WSIs, our method opens doors for advanced virtual staining techniques with broader applications in research and clinical care.
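The tile-boundary problem described in this abstract can be made concrete with a small measurement. The sketch below is only an illustration of the artifact, not part of CC-WSI-Net: the function name `seam_discontinuity`, the grayscale nested-list tile representation, and the mean-absolute-difference metric are all assumptions introduced here.

```python
def seam_discontinuity(left_tile, right_tile):
    """Mean absolute intensity difference across the vertical seam
    between two horizontally adjacent tiles (hypothetical metric)."""
    if len(left_tile) != len(right_tile):
        raise ValueError("tiles must have the same height")
    # Compare the last column of the left tile with the first column of the right tile.
    diffs = [abs(l_row[-1] - r_row[0]) for l_row, r_row in zip(left_tile, right_tile)]
    return sum(diffs) / len(diffs)


# Toy 2x2 grayscale tiles, each given as a list of rows.
left = [[10, 12], [11, 13]]
right_consistent = [[12, 14], [13, 15]]  # continues smoothly from `left`
right_shifted = [[40, 42], [41, 43]]     # same texture, shifted color

smooth_seam = seam_discontinuity(left, right_consistent)
visible_seam = seam_discontinuity(left, right_shifted)
```

A tile generated independently with a shifted color distribution produces a large jump at the seam, which is the kind of visible boundary artifact a cross-tile consistency constraint is meant to suppress.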

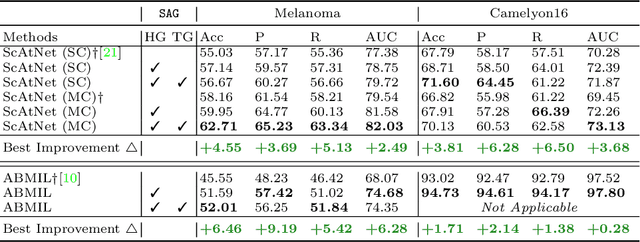

Semantics-Aware Attention Guidance for Diagnosing Whole Slide Images

Apr 16, 2024

Abstract: Accurate cancer diagnosis remains a critical challenge in digital pathology, largely due to the gigapixel size and complex spatial relationships present in whole slide images. Traditional multiple instance learning (MIL) methods often struggle with these intricacies, especially in preserving the necessary context for accurate diagnosis. In response, we introduce a novel framework named Semantics-Aware Attention Guidance (SAG), which includes 1) a technique for converting diagnostically relevant entities into attention signals, and 2) a flexible attention loss that efficiently integrates various semantically significant information, such as tissue anatomy and cancerous regions. Our experiments on two distinct cancer datasets demonstrate consistent improvements in accuracy, precision, and recall with two state-of-the-art baseline models. Qualitative analysis further reveals that the incorporation of heuristic guidance enables the model to focus on regions critical for diagnosis. SAG is not only effective for the models discussed here, but its adaptability extends to any attention-based diagnostic model. This opens up exciting possibilities for further improving the accuracy and efficiency of cancer diagnostics.
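The two ingredients named in this abstract, turning annotated entities into attention signals and penalizing attention that ignores them, can be sketched as follows. The abstract does not state the exact form of the loss, so the KL-divergence formulation, the per-tile relevance scores, and the function names `guidance_target` and `attention_guidance_loss` are assumptions chosen for illustration only.

```python
import math


def guidance_target(relevance):
    """Normalize per-tile relevance scores (e.g. overlap with an annotated
    region) into a target attention distribution. Returns None when no
    tile carries any guidance signal."""
    total = sum(relevance)
    if total == 0:
        return None
    return [r / total for r in relevance]


def attention_guidance_loss(attention, relevance, eps=1e-8):
    """KL(target || attention): zero when the model's attention matches
    the guidance, larger when attention falls on unmarked tiles.
    This loss form is an assumption, not taken from the paper."""
    target = guidance_target(relevance)
    if target is None:
        return 0.0  # no guidance for this slide, so no penalty
    return sum(
        t * math.log((t + eps) / (a + eps))
        for t, a in zip(target, attention)
        if t > 0
    )


# Four tiles; tiles 1 and 2 overlap a hypothetical annotated region.
relevance = [0.0, 1.0, 1.0, 0.0]
uniform_attention = [0.25, 0.25, 0.25, 0.25]
focused_attention = [0.0, 0.5, 0.5, 0.0]

loss_uniform = attention_guidance_loss(uniform_attention, relevance)
loss_focused = attention_guidance_loss(focused_attention, relevance)
```

In a setup like this, the guidance term would typically be added to the usual classification loss with a weighting factor, so that slides without annotations still train normally.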

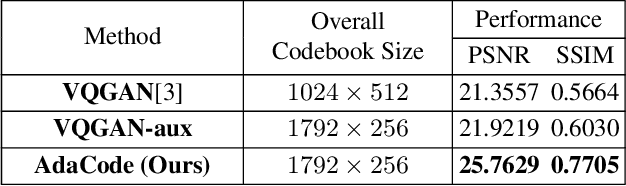

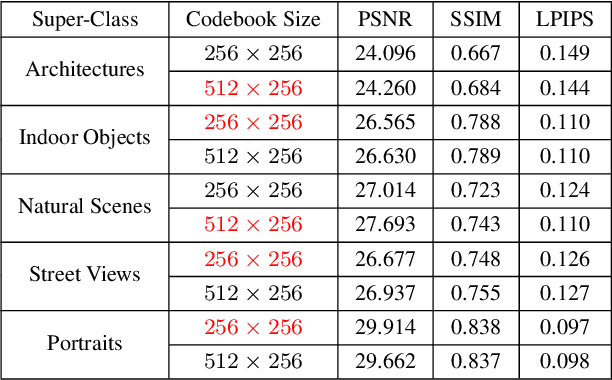

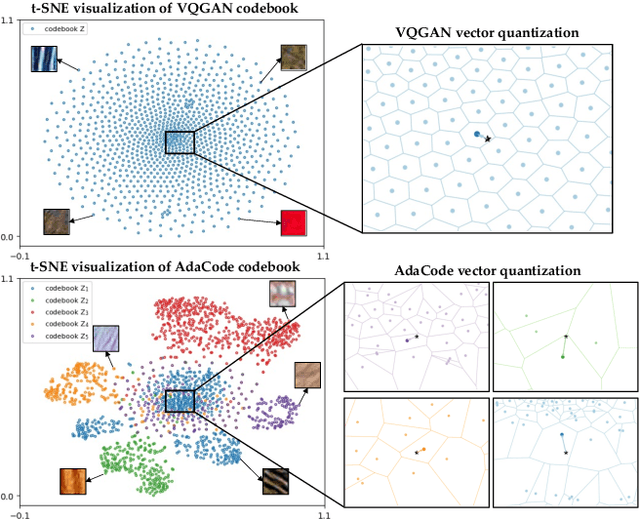

Learning Image-Adaptive Codebooks for Class-Agnostic Image Restoration

Jun 13, 2023

Abstract: Recent work on discrete generative priors, in the form of codebooks, has shown exciting performance for image reconstruction and restoration, as the discrete prior space spanned by the codebooks increases the robustness against diverse image degradations. Nevertheless, these methods require separate training of codebooks for different image categories, which limits their use to specific image categories only (e.g., face or architecture), and they fail to handle arbitrary natural images. In this paper, we propose AdaCode for learning image-adaptive codebooks for class-agnostic image restoration. Instead of learning a single codebook for each image category, we learn a set of basis codebooks. For a given input image, AdaCode learns a weight map with which we compute a weighted combination of these basis codebooks for adaptive image restoration. Intuitively, AdaCode is a more flexible and expressive discrete generative prior than previous work. Experimental results demonstrate that AdaCode achieves state-of-the-art performance on image reconstruction and restoration tasks, including image super-resolution and inpainting.
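The weighted combination of basis codebooks can be illustrated at a single spatial position. This is a minimal sketch under stated assumptions, not the AdaCode implementation: it assumes a nearest-neighbor lookup in each basis codebook followed by a softmax-weighted mix, and the names `nearest_code`, `adaptive_quantize`, and `weight_logits` are hypothetical.

```python
import math


def nearest_code(feature, codebook):
    """Return the codebook entry closest to `feature` in squared Euclidean distance."""
    return min(
        codebook,
        key=lambda code: sum((f - c) ** 2 for f, c in zip(feature, code)),
    )


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_quantize(feature, basis_codebooks, weight_logits):
    """Quantize `feature` against every basis codebook, then mix the results
    with softmax weights (one weight per codebook at this position)."""
    weights = softmax(weight_logits)
    quantized = [nearest_code(feature, cb) for cb in basis_codebooks]
    return [
        sum(w * q[d] for w, q in zip(weights, quantized))
        for d in range(len(feature))
    ]


# Two toy basis codebooks, each holding two 2-D codes.
basis_codebooks = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 2.0], [2.0, 0.0]],
]
feature = [0.9, 0.8]

# Equal logits give equal weights, so the result is the average of the
# nearest code from each codebook: [1.0, 1.0] and [2.0, 0.0].
mixed = adaptive_quantize(feature, basis_codebooks, weight_logits=[0.0, 0.0])
```

Because the weights vary per position and per image, the effective codebook adapts to the input rather than being tied to one image category, which is the intuition the abstract gives for the added flexibility.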
