Karime Pereida

A Statistical Guarantee for Representation Transfer in Multitask Imitation Learning

Nov 02, 2023

Abstract: Transferring representation for multitask imitation learning has the potential to provide improved sample efficiency on learning new tasks, when compared to learning from scratch. In this work, we provide a statistical guarantee indicating that we can indeed achieve improved sample efficiency on the target task when a representation is trained using sufficiently diverse source tasks. Our theoretical results can be readily extended to account for commonly used neural network architectures with realistic assumptions. We conduct empirical analyses that align with our theoretical findings on four simulated environments; in particular, leveraging more data from source tasks can improve sample efficiency on learning in the new task.

Bridging the Model-Reality Gap with Lipschitz Network Adaptation

Dec 07, 2021

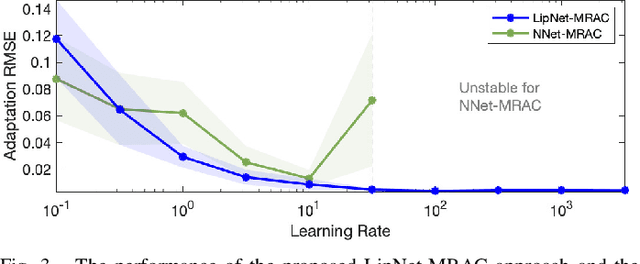

Abstract: As robots venture into the real world, they are subject to unmodeled dynamics and disturbances. Traditional model-based control approaches have been proven successful in relatively static and known operating environments. However, when an accurate model of the robot is not available, model-based design can lead to suboptimal and even unsafe behaviour. In this work, we propose a method that bridges the model-reality gap and enables the application of model-based approaches even if dynamic uncertainties are present. In particular, we present a learning-based model reference adaptation approach that makes a robot system, with possibly uncertain dynamics, behave as a predefined reference model. In turn, the reference model can be used for model-based controller design. In contrast to typical model reference adaptation control approaches, we leverage the representative power of neural networks to capture highly nonlinear dynamics uncertainties and guarantee stability by encoding a certifying Lipschitz condition in the architectural design of a special type of neural network called the Lipschitz network. Our approach applies to a general class of nonlinear control-affine systems even when our prior knowledge about the true robot system is limited. We demonstrate our approach in flying inverted pendulum experiments, where an off-the-shelf quadrotor is challenged to balance an inverted pendulum while hovering or tracking circular trajectories.
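As a rough illustration of what bounding a network's Lipschitz constant can look like, the sketch below rescales the weight matrices of a small ReLU network so that the product of their induced infinity-norms stays below a chosen bound. This is a generic construction and not the Lipschitz network architecture of the paper; the function names (`inf_norm`, `rescale_layers`, `forward`), the layer sizes, and the bound of 2.0 are all assumptions made for the example.

```python
import random

def inf_norm(W):
    """Induced infinity-norm of a matrix: the largest absolute row sum."""
    return max(sum(abs(w) for w in row) for row in W)

def rescale_layers(weights, lipschitz_bound):
    """Shrink each layer so the product of layer norms is <= lipschitz_bound.

    Hypothetical helper: each layer gets an equal share of the bound.
    """
    per_layer = lipschitz_bound ** (1.0 / len(weights))
    scaled = []
    for W in weights:
        s = min(1.0, per_layer / inf_norm(W))  # only ever shrink a layer
        scaled.append([[w * s for w in row] for row in W])
    return scaled

def forward(weights, x):
    """Bias-free ReLU network; ReLU is 1-Lipschitz, so the layer norms multiply."""
    for i, W in enumerate(weights):
        x = [sum(w * xi for w, xi in zip(row, x)) for row in W]
        if i < len(weights) - 1:
            x = [max(0.0, v) for v in x]
    return x

random.seed(0)
sizes = [4, 8, 8, 2]  # assumed toy architecture
raw = [[[random.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
       for n_in, n_out in zip(sizes, sizes[1:])]
net = rescale_layers(raw, lipschitz_bound=2.0)
```

With this scaling, any two inputs `x` and `y` satisfy `max|f(x) - f(y)| <= 2.0 * max|x - y|` in the infinity-norm, which is the kind of certificate a stability argument can build on.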

Catch the Ball: Accurate High-Speed Motions for Mobile Manipulators via Inverse Dynamics Learning

Mar 17, 2020

Abstract: Mobile manipulators consist of a mobile platform equipped with one or more robot arms and are of interest for a wide array of challenging tasks because of their extended workspace and dexterity. Typically, mobile manipulators are deployed in slow-motion collaborative robot scenarios. In this paper, we consider scenarios where accurate high-speed motions are required. We introduce a framework for this regime of tasks including two main components: (i) a bi-level motion optimization algorithm for real-time trajectory generation, which relies on Sequential Quadratic Programming (SQP) and Quadratic Programming (QP), respectively; and (ii) a learning-based controller optimized for precise tracking of high-speed motions via a learned inverse dynamics model. We evaluate our framework with a mobile manipulator platform through numerous high-speed ball catching experiments, where we show a success rate of 85.33%. To the best of our knowledge, this success rate exceeds the reported performance of existing related systems and sets a new state of the art.

Adaptive Model Predictive Control for High-Accuracy Trajectory Tracking in Changing Conditions

Aug 02, 2018

Abstract: Robots and automated systems are increasingly being introduced to unknown and dynamic environments where they are required to handle disturbances, unmodeled dynamics, and parametric uncertainties. Robust and adaptive control strategies are required to achieve high performance in these dynamic environments. In this paper, we propose a novel adaptive model predictive controller that combines model predictive control (MPC) with an underlying $\mathcal{L}_1$ adaptive controller to improve trajectory tracking of a system subject to unknown and changing disturbances. The $\mathcal{L}_1$ adaptive controller forces the system to behave in a predefined way, as specified by a reference model. A higher-level model predictive controller then uses this reference model to calculate the optimal reference input based on a cost function, while taking into account input and state constraints. We focus on the experimental validation of the proposed approach and demonstrate its effectiveness in experiments on a quadrotor. We show that the proposed approach has a lower trajectory tracking error compared to non-predictive, adaptive approaches and a predictive, non-adaptive approach, even when external wind disturbances are applied.

Transfer Learning for High-Precision Trajectory Tracking Through $\mathcal{L}_1$ Adaptive Feedback and Iterative Learning

Jul 13, 2018

Abstract: Robust and adaptive control strategies are needed when robots or automated systems are introduced to unknown and dynamic environments where they are required to cope with disturbances, unmodeled dynamics, and parametric uncertainties. In this paper, we demonstrate the capabilities of a combined $\mathcal{L}_1$ adaptive control and iterative learning control (ILC) framework to achieve high-precision trajectory tracking in the presence of unknown and changing disturbances. The $\mathcal{L}_1$ adaptive controller makes the system behave close to a reference model; however, it does not guarantee that perfect trajectory tracking is achieved, while ILC improves trajectory tracking performance based on previous iterations. The combined framework in this paper uses $\mathcal{L}_1$ adaptive control as an underlying controller that achieves a robust and repeatable behavior, while the ILC acts as a high-level adaptation scheme that mainly compensates for systematic tracking errors. We illustrate that this framework enables transfer learning between dynamically different systems, where learned experience of one system can be shown to be beneficial for another different system. Experimental results with two different quadrotors show the superior performance of the combined $\mathcal{L}_1$-ILC framework compared with approaches using ILC with an underlying proportional-derivative controller or proportional-integral-derivative controller. Results highlight that our $\mathcal{L}_1$-ILC framework can achieve high-precision trajectory tracking when unknown and changing disturbances are present and can achieve transfer of learned experience between dynamically different systems. Moreover, our approach is able to achieve precise trajectory tracking in the first attempt when the initial input is generated based on the reference model of the adaptive controller.

Data-Efficient Multirobot, Multitask Transfer Learning for Trajectory Tracking

Apr 02, 2018

Abstract: Transfer learning has the potential to reduce the burden of data collection and to decrease the unavoidable risks of the training phase. In this letter, we introduce a multirobot, multitask transfer learning framework that allows a system to complete a task by learning from a few demonstrations of another task executed on another system. We focus on the trajectory tracking problem where each trajectory represents a different task, since many robotic tasks can be described as a trajectory tracking problem. The proposed multirobot transfer learning framework is based on a combined $\mathcal{L}_1$ adaptive control and an iterative learning control approach. The key idea is that the adaptive controller forces dynamically different systems to behave as a specified reference model. The proposed multitask transfer learning framework uses theoretical control results (e.g., the concept of vector relative degree) to learn a map from desired trajectories to the inputs that make the system track these trajectories with high accuracy. This map is used to calculate the inputs for a new, unseen trajectory. Experimental results using two different quadrotor platforms and six different trajectories show that, on average, the proposed framework reduces the first-iteration tracking error by 74% when information from tracking a different single trajectory on a different quadrotor is utilized.

* 9 pages, 6 figures, submitted to RA-L 2017

High-Precision Trajectory Tracking in Changing Environments Through $\mathcal{L}_1$ Adaptive Feedback and Iterative Learning

May 12, 2017

Abstract: As robots and other automated systems are introduced to unknown and dynamic environments, robust and adaptive control strategies are required to cope with disturbances, unmodeled dynamics and parametric uncertainties. In this paper, we propose and provide theoretical proofs of a combined $\mathcal{L}_1$ adaptive feedback and iterative learning control (ILC) framework to improve trajectory tracking of a system subject to unknown and changing disturbances. The $\mathcal{L}_1$ adaptive controller forces the system to behave in a repeatable, predefined way, even in the presence of unknown and changing disturbances; however, this does not imply that perfect trajectory tracking is achieved. ILC improves the tracking performance based on experience from previous executions. The performance of ILC is limited by the robustness and repeatability of the underlying system, which, in this approach, is handled by the $\mathcal{L}_1$ adaptive controller. In particular, we are able to generalize learned trajectories across different system configurations because the $\mathcal{L}_1$ adaptive controller handles the underlying changes in the system. We demonstrate the improved trajectory tracking performance and generalization capabilities of the combined method compared to pure ILC in experiments with a quadrotor subject to unknown, dynamic disturbances. This is the first work to show $\mathcal{L}_1$ adaptive control combined with ILC in experiments.
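To make the ILC part of this idea concrete, here is a minimal sketch of a P-type iterative learning update on a toy first-order plant. It is not the framework from the paper: there is no $\mathcal{L}_1$ adaptive controller, the plant `y[k+1] = a*y[k] + b*u[k]` stands in for a system that already behaves repeatably, and the values `a = 0.3`, `b = 0.5`, `gamma = 1.0`, and the sinusoidal reference are assumptions chosen so that the update converges.

```python
import math

def simulate(u, a=0.3, b=0.5):
    """Roll out y[k+1] = a*y[k] + b*u[k] from y[0] = 0; returns y[1..N]."""
    y, out = 0.0, []
    for u_k in u:
        y = a * y + b * u_k
        out.append(y)
    return out

def run_ilc(y_des, iterations=20, gamma=1.0):
    """P-type ILC: u_{j+1}[k] = u_j[k] + gamma * e_j[k+1].

    Returns the final input sequence and the error norm measured in each trial.
    """
    u = [0.0] * len(y_des)  # first trial starts with zero input
    error_norms = []
    for _ in range(iterations):
        y = simulate(u)                                # execute one trial
        e = [yd - yk for yd, yk in zip(y_des, y)]      # tracking error of this trial
        error_norms.append(math.sqrt(sum(ek * ek for ek in e)))
        u = [uk + gamma * ek for uk, ek in zip(u, e)]  # learn from the error
    return u, error_norms

# Assumed reference trajectory: one period of a sine over 50 steps.
y_des = [math.sin(2 * math.pi * k / 50) for k in range(1, 51)]
u_final, error_norms = run_ilc(y_des)
```

Each trial reuses the error from the previous one, so the tracking error shrinks from trial to trial only as long as the plant responds the same way every time. That repeatability is the property the abstract assigns to the underlying adaptive controller.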
