Kao Zhang

Enhancing Diffusion-Based Quantitatively Controllable Image Generation via Matrix-Form EDM and Adaptive Vicinal Training

Feb 02, 2026

Abstract: Continuous Conditional Diffusion Model (CCDM) is a diffusion-based framework designed to generate high-quality images conditioned on continuous regression labels. Although CCDM has demonstrated clear advantages over prior approaches across a range of datasets, it still exhibits notable limitations and has recently been surpassed by a GAN-based method, namely CcGAN-AVAR. These limitations mainly arise from its reliance on an outdated diffusion framework and its low sampling efficiency due to long sampling trajectories. To address these issues, we propose an improved CCDM framework, termed iCCDM, which incorporates the more advanced Elucidated Diffusion Model (EDM) framework with substantial modifications to improve both generation quality and sampling efficiency. Specifically, iCCDM introduces a novel matrix-form EDM formulation together with an adaptive vicinal training strategy. Extensive experiments on four benchmark datasets, spanning image resolutions from $64\times64$ to $256\times256$, demonstrate that iCCDM consistently outperforms existing methods, including state-of-the-art large-scale text-to-image diffusion models (e.g., Stable Diffusion 3, FLUX.1, and Qwen-Image), achieving higher generation quality while significantly reducing sampling cost.

CCDM: Continuous Conditional Diffusion Models for Image Generation

May 06, 2024

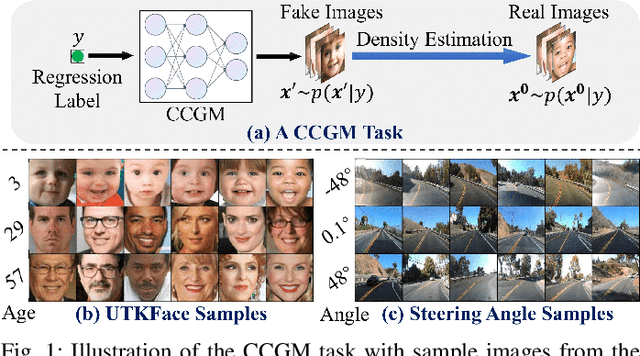

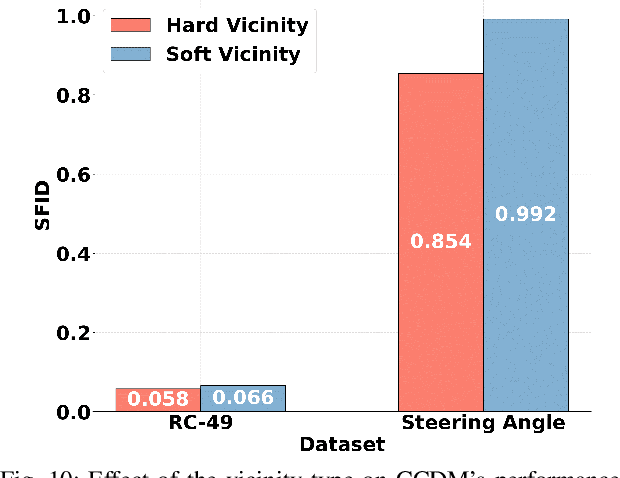

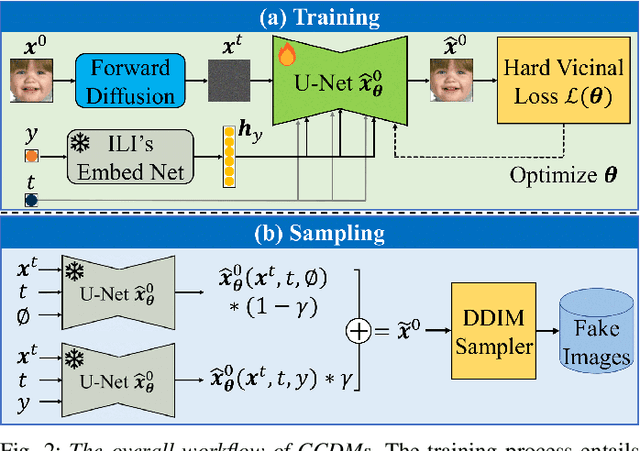

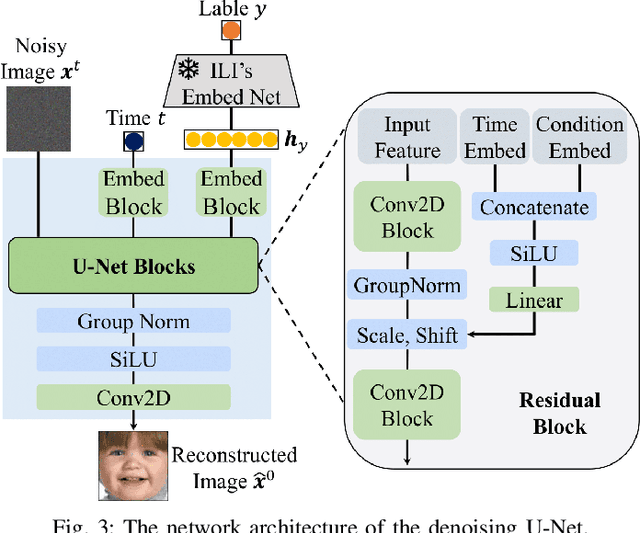

Abstract: Continuous Conditional Generative Modeling (CCGM) aims to estimate the distribution of high-dimensional data, typically images, conditioned on scalar continuous variables known as regression labels. While Continuous conditional Generative Adversarial Networks (CcGANs) were initially designed for this task, their adversarial training mechanism remains vulnerable to extremely sparse or imbalanced data, resulting in suboptimal outcomes. To enhance the quality of generated images, a promising alternative is to replace CcGANs with Conditional Diffusion Models (CDMs), renowned for their stable training process and ability to produce more realistic images. However, existing CDMs encounter challenges when applied to CCGM tasks due to several limitations, such as inadequate U-Net architectures and deficient model fitting mechanisms for handling regression labels. In this paper, we introduce Continuous Conditional Diffusion Models (CCDMs), the first CDM designed specifically for the CCGM task. CCDMs address the limitations of existing CDMs by introducing specially designed conditional diffusion processes, a modified denoising U-Net with a custom-made conditioning mechanism, a novel hard vicinal loss for model fitting, and an efficient conditional sampling procedure. With comprehensive experiments on four datasets with varying resolutions ranging from 64x64 to 192x192, we demonstrate the superiority of the proposed CCDM over state-of-the-art CCGM models, establishing new benchmarks in CCGM. Extensive ablation studies validate the model design and implementation configuration of the proposed CCDM. Our code is publicly available at https://github.com/UBCDingXin/CCDM.
