Justyna P. Zwolak

Towards autonomous time-calibration of large quantum-dot devices: Detection, real-time feedback, and noise spectroscopy

Dec 31, 2025

Abstract: The performance and scalability of semiconductor quantum-dot (QD) qubits are limited by electrostatic drift and charge noise that shift operating points and destabilize qubit parameters. As systems expand to large one- and two-dimensional arrays, manual recalibration becomes impractical, creating a need for autonomous stabilization frameworks. Here, we introduce a method that uses the full network of charge-transition lines in repeatedly acquired double-quantum-dot charge stability diagrams (CSDs) as a multidimensional probe of the local electrostatic environment. By accurately tracking the motion of selected transitions in time, we detect voltage drifts, identify abrupt charge reconfigurations, and apply compensating updates to maintain stable operating conditions. We demonstrate our approach on a 10-QD device, showing robust stabilization and real-time diagnostic access to dot-specific noise processes. The high acquisition rate of radio-frequency reflectometry CSD measurements also enables time-domain noise spectroscopy, allowing the extraction of noise power spectral densities, the identification of two-level fluctuators, and the analysis of spatial noise correlations across the array. From our analysis, we find that the background noise at 100 μHz is dominated by drift with a power law of $1/f^2$, accompanied by a few dominant two-level fluctuators and an average linear correlation length of $(188 \pm 38)$ nm in the device. These capabilities form the basis of a scalable, autonomous calibration and characterization module for QD-based quantum processors, providing essential feedback for long-duration, high-fidelity qubit operations.
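The noise-spectroscopy step described in this abstract can be illustrated with a minimal sketch. It is not the authors' pipeline: it assumes the tracked position of a transition line is available as an evenly sampled time trace, estimates the power spectral density with Welch's method, and fits the exponent of a $1/f^\beta$ power law in a low-frequency band. The function name `fit_psd_exponent`, the band limits, and the synthetic random-walk trace are all hypothetical choices made for illustration.

```python
import numpy as np
from scipy.signal import welch


def fit_psd_exponent(trace, sample_rate_hz, nperseg=1024, f_max_fraction=0.1):
    """Estimate beta in S(f) ~ 1/f^beta from an evenly sampled time trace.

    The fit band skips the lowest bins (affected by windowing and mean
    removal) and the upper part of the spectrum (where a discretely sampled
    random walk deviates from a pure power law). Both limits are assumptions.
    """
    freqs, psd = welch(trace, fs=sample_rate_hz, nperseg=nperseg)
    f_lo = 2.0 * sample_rate_hz / nperseg
    f_hi = f_max_fraction * sample_rate_hz
    band = (freqs > f_lo) & (freqs < f_hi)
    # Straight-line fit in log-log space; the slope is -beta.
    slope, _ = np.polyfit(np.log10(freqs[band]), np.log10(psd[band]), 1)
    return -slope


# Synthetic stand-in for a drifting transition position: a random walk,
# whose spectrum falls off approximately as 1/f^2 at low frequency.
rng = np.random.default_rng(0)
drift = np.cumsum(rng.normal(size=2**16))
beta = fit_psd_exponent(drift, sample_rate_hz=1.0)
print(f"fitted exponent beta = {beta:.2f}")
```

For a random-walk trace like this one, the fitted exponent should come out close to 2, which is the drift-dominated behaviour the abstract reports. Two-level fluctuators would instead show up as Lorentzian features on top of this background, which the sketch does not model.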

Automated electrostatic characterization of quantum dot devices in single- and bilayer heterostructures

Dec 31, 2025

Abstract: As quantum dot (QD)-based spin qubits advance toward larger, more complex device architectures, rapid, automated device characterization and data analysis tools become critical. The orientation and spacing of transition lines in a charge stability diagram (CSD) contain a fingerprint of a QD device's capacitive environment, making these measurements useful tools for device characterization. However, manually interpreting these features is time-consuming, error-prone, and impractical at scale. Here, we present an automated protocol for extracting underlying capacitive properties from CSDs. Our method integrates machine learning, image processing, and object detection to identify and track charge transitions across large datasets without manual labeling. We demonstrate this method using experimentally measured data from a strained-germanium single-quantum-well (planar) and a strained-germanium double-quantum-well (bilayer) QD device. Unlike for planar QD devices, CSDs in bilayer germanium heterostructures exhibit a larger set of transitions, including interlayer tunneling and distinct loading lines for the vertically stacked QDs, making them a powerful testbed for automation methods. By analyzing the properties of many CSDs, we can statistically estimate physically relevant quantities, like relative lever arms and capacitive couplings. Thus, our protocol enables rapid extraction of useful, nontrivial information about QD devices.
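As a rough illustration of how line orientation encodes capacitive information, the sketch below converts transition-line slopes into relative lever arms. It assumes a simple linear model that is not stated in the abstract: a dot-1 transition in the $(V_1, V_2)$ plane satisfies $\alpha_{11}\,\Delta V_1 + \alpha_{12}\,\Delta V_2 = 0$, so its slope is $dV_2/dV_1 = -\alpha_{11}/\alpha_{12}$, and similarly for dot 2. The function name and the slope values are hypothetical, and the line detection itself (the machine-learning and image-processing part of the protocol) is outside the sketch.

```python
import numpy as np


def relative_lever_arms(slopes_dot1, slopes_dot2):
    """Turn fitted transition-line slopes dV2/dV1 into relative lever arms.

    Assumed linear model (not taken from the paper):
      dot-1 lines: slope = -alpha_11 / alpha_12  ->  alpha_12/alpha_11 = -1/slope
      dot-2 lines: slope = -alpha_21 / alpha_22  ->  alpha_21/alpha_22 = -slope
    Returns the median of each ratio over all supplied lines.
    """
    s1 = np.asarray(slopes_dot1, dtype=float)
    s2 = np.asarray(slopes_dot2, dtype=float)
    ratio_12 = -1.0 / s1  # cross lever arm of gate 2 on dot 1, relative to gate 1
    ratio_21 = -s2        # cross lever arm of gate 1 on dot 2, relative to gate 2
    return float(np.median(ratio_12)), float(np.median(ratio_21))


# Hypothetical slopes collected from several CSDs of the same dot pair.
r12, r21 = relative_lever_arms(
    slopes_dot1=[-5.0, -4.0, -6.25],   # steep lines: dot 1 loads mainly via gate 1
    slopes_dot2=[-0.25, -0.2, -0.3],   # shallow lines: dot 2 loads mainly via gate 2
)
print(r12, r21)
```

Taking the median over many diagrams is one simple way to make the estimate statistical, in the spirit of the abstract; other estimators would work equally well here.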

QDFlow: A Python package for physics simulations of quantum dot devices

Sep 16, 2025

Abstract: Recent advances in machine learning (ML) have accelerated progress in calibrating and operating quantum dot (QD) devices. However, most ML approaches rely on access to large, high-quality labeled datasets for training, benchmarking, and validation, with labels capturing key features in the data. Obtaining such datasets experimentally is challenging due to limited data availability and the labor-intensive nature of labeling. QDFlow is an open-source physics simulator for multi-QD arrays that generates realistic synthetic data with ground-truth labels. QDFlow combines a self-consistent Thomas-Fermi solver, a dynamic capacitance model, and flexible noise modules to produce charge stability diagrams and ray-based data closely resembling experiments. With extensive tunable parameters and customizable noise models, QDFlow supports the creation of large, diverse datasets for ML development, benchmarking, and quantum device research.

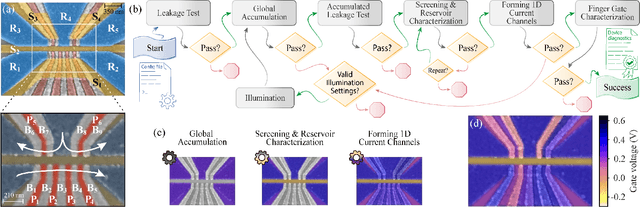

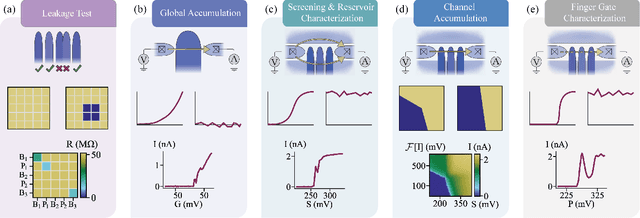

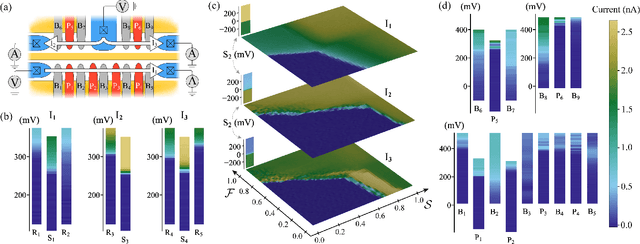

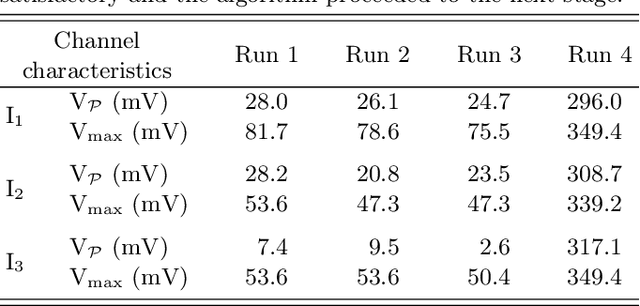

BATIS: Bootstrapping, Autonomous Testing, and Initialization System for Quantum Dot Devices

Dec 10, 2024

Abstract: Semiconductor quantum dot (QD) devices have become central to advancements in spin-based quantum computing. As the complexity of QD devices grows, manual tuning becomes increasingly infeasible, necessitating robust and scalable autotuning solutions. Tuning large arrays of QD qubits depends on efficient choices of automated protocols. Here, we introduce a bootstrapping, autonomous testing, and initialization system (BATIS), an automated framework designed to streamline QD device testing and initialization. BATIS navigates high-dimensional gate voltage spaces, automating essential steps such as leakage testing and gate characterization. The current channel formation protocol follows a novel and scalable approach that requires a single measurement regardless of the number of channels. Demonstrated at 1.3 K on a quad-QD Si/Si$_x$Ge$_{1-x}$ device, BATIS eliminates the need for deep cryogenic environments during initial device diagnostics, significantly enhancing scalability and reducing setup times. By requiring minimal prior knowledge of the device architecture, BATIS represents a platform-agnostic solution, adaptable to various QD systems, which bridges a critical gap in QD autotuning.

MAViS: Modular Autonomous Virtualization System for Two-Dimensional Semiconductor Quantum Dot Arrays

Nov 19, 2024

Abstract: Arrays of gate-defined semiconductor quantum dots are among the leading candidates for building scalable quantum processors. High-fidelity initialization, control, and readout of spin qubit registers require exquisite and targeted control over key Hamiltonian parameters that define the electrostatic environment. However, due to the tight gate pitch, capacitive crosstalk between gates hinders independent tuning of chemical potentials and interdot couplings. While virtual gates offer a practical solution, determining all the required cross-capacitance matrices accurately and efficiently in large quantum dot registers is an open challenge. Here, we establish a Modular Automated Virtualization System (MAViS) -- a general and modular framework for autonomously constructing a complete stack of multi-layer virtual gates in real time. Our method employs machine learning techniques to rapidly extract features from two-dimensional charge stability diagrams. We then utilize computer vision and regression models to self-consistently determine all relative capacitive couplings necessary for virtualizing plunger and barrier gates in both low- and high-tunnel-coupling regimes. Using MAViS, we successfully demonstrate accurate virtualization of a dense two-dimensional array comprising ten quantum dots defined in a high-quality Ge/SiGe heterostructure. Our work offers an elegant and practical solution for the efficient control of large-scale semiconductor quantum dot systems.
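The virtual-gate idea in this abstract can be sketched in a few lines. The sketch assumes a linear crosstalk model, $\delta\mu = M\,\delta V$, where $M$ is a cross-capacitance matrix of relative couplings normalised to a unit diagonal. A requested step in virtual-gate space is then converted to physical gate voltages by solving $M\,\delta V = \delta V'$. The matrix entries and the function name `physical_step` are made up for illustration and are not values or APIs from MAViS.

```python
import numpy as np


def physical_step(cross_cap, virtual_step):
    """Physical gate-voltage step that realises a requested virtual-gate step.

    Assumes dot potentials respond linearly: d_mu = cross_cap @ d_V.
    Solving the linear system avoids forming the inverse explicitly.
    """
    return np.linalg.solve(cross_cap, virtual_step)


# Hypothetical relative couplings for three neighbouring plunger gates
# (rows: dots, columns: gates, unit diagonal).
M = np.array([
    [1.00, 0.30, 0.05],
    [0.25, 1.00, 0.30],
    [0.04, 0.28, 1.00],
])

# Step only the first virtual plunger gate.
dV = physical_step(M, np.array([1.0, 0.0, 0.0]))
shift = M @ dV  # resulting change in each dot potential
print(dV)
print(shift)
```

With a correct matrix, `shift` is nonzero only for the targeted dot, which is the property that makes independent tuning possible. In practice the hard part is measuring $M$ accurately for every gate pair, which is what the abstract's automated extraction addresses.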

Autonomous Bootstrapping of Quantum Dot Devices

Jul 29, 2024

Abstract: Semiconductor quantum dots (QDs) are a promising platform for multiple different qubit implementations, all of which are voltage-controlled by programmable gate electrodes. However, as the QD arrays grow in size and complexity, tuning procedures that can fully autonomously handle the increasing number of control parameters are becoming essential for enabling scalability. We propose a bootstrapping algorithm for initializing a depletion mode QD device in preparation for subsequent phases of tuning. During bootstrapping, the QD device functionality is validated, all gates are characterized, and the QD charge sensor is made operational. We demonstrate the bootstrapping protocol in conjunction with a coarse tuning module, showing that the combined algorithm can efficiently and reliably take a cooled-down QD device to a desired global state configuration in under 8 minutes with a success rate of 96%. Importantly, by following heuristic approaches to QD device initialization and combining the efficient ray-based measurement with the rapid radio-frequency reflectometry measurements, the proposed algorithm establishes a reference in terms of performance, reliability, and efficiency against which alternative algorithms can be benchmarked.

Explainable Classification Techniques for Quantum Dot Device Measurements

Feb 23, 2024

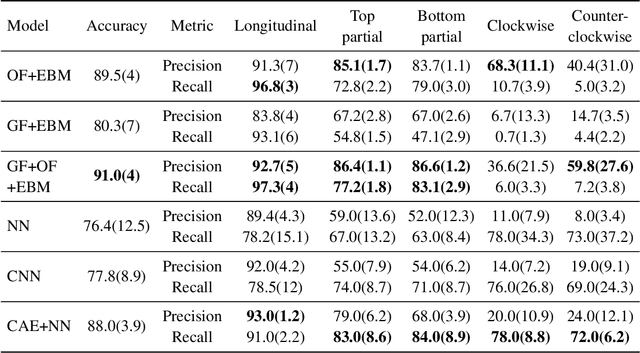

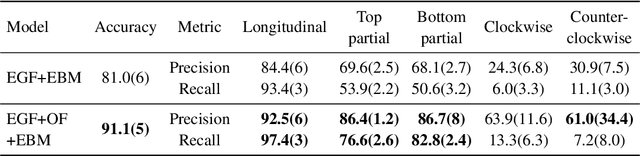

Abstract: In the physical sciences, there is an increased need for robust feature representations of image data: image acquisition, in the generalized sense of two-dimensional data, is now widespread across a large number of fields, including quantum information science, which we consider here. While traditional image features are widely utilized in such cases, their use is rapidly being supplanted by Neural Network-based techniques that often sacrifice explainability in exchange for high accuracy. To ameliorate this trade-off, we propose a synthetic data-based technique that results in explainable features. We show, using Explainable Boosting Machines (EBMs), that this method offers superior explainability without sacrificing accuracy. Specifically, we show that there is a meaningful benefit to this technique in the context of quantum dot tuning, where human intervention is necessary at the current stage of development.

* 5 pages, 3 figures

Data Needs and Challenges of Quantum Dot Devices Automation: Workshop Report

Dec 21, 2023

Abstract: Gate-defined quantum dots are a promising candidate system to realize scalable, coupled qubit systems and serve as a fundamental building block for quantum computers. However, present-day quantum dot devices suffer from imperfections that must be accounted for, which hinders the characterization, tuning, and operation process. Moreover, with an increasing number of quantum dot qubits, the relevant parameter space grows sufficiently to make heuristic control infeasible. Thus, it is imperative that reliable and scalable autonomous tuning approaches are developed. In this report, we outline current challenges in automating quantum dot device tuning and operation with a particular focus on datasets, benchmarking, and standardization. We also present ideas put forward by the quantum dot community on how to overcome them.

QDA$^2$: A principled approach to automatically annotating charge stability diagrams

Dec 18, 2023

Abstract: Gate-defined semiconductor quantum dot (QD) arrays are a promising platform for quantum computing. However, presently, the large configuration spaces and inherent noise make tuning of QD devices a nontrivial task, and with the increasing number of QD qubits, human-driven experimental control becomes infeasible. Recently, researchers working with QD systems have begun putting considerable effort into automating device control, with a particular focus on machine-learning-driven methods. Yet, the reported performance statistics vary substantially in both the meaning and the type of devices used for testing. While systematic benchmarking of the proposed tuning methods is necessary for developing reliable and scalable tuning approaches, the lack of openly available standardized datasets of experimental data makes such testing impossible. The QD auto-annotator -- a classical algorithm for automatic interpretation and labeling of experimentally acquired data -- is a critical step toward rectifying this. The QD auto-annotator leverages the principles of geometry to produce state labels for experimental double-QD charge stability diagrams and is a first step towards building a large public repository of labeled QD data.

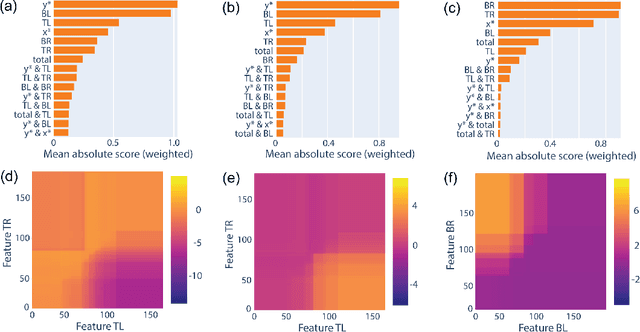

Extending Explainable Boosting Machines to Scientific Image Data

May 25, 2023

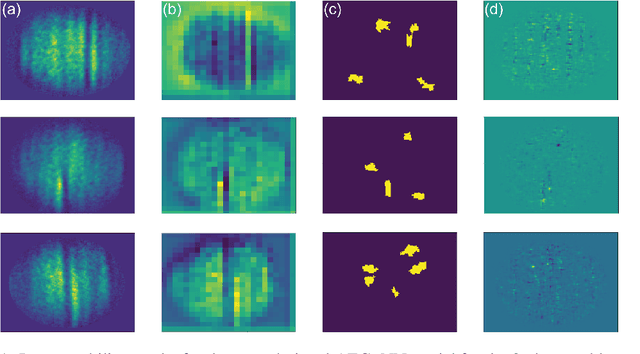

Abstract: As the deployment of computer vision technology becomes increasingly common in applications of consequence such as medicine or science, the need for explanations of the system output has become a focus of great concern. Unfortunately, many state-of-the-art computer vision models are opaque, making their use challenging from an explanation standpoint, and current approaches to explaining these opaque models have stark limitations and have been the subject of serious criticism. In contrast, Explainable Boosting Machines (EBMs) are a class of models that are easy to interpret and achieve performance on par with the very best-performing models; however, to date, EBMs have been limited solely to tabular data. Driven by the pressing need for interpretable models in science, we propose the use of EBMs for scientific image data. Inspired by an important application underpinning the development of quantum technologies, we apply EBMs to cold-atom soliton image data, and, in doing so, demonstrate EBMs for image data for the first time. To tabularize the image data we employ Gabor Wavelet Transform-based techniques that preserve the spatial structure of the data. We show that our approach provides better explanations than other state-of-the-art explainability methods for images.
