Brian Weber

Michael Pokorny

Humanity's Last Exam

Jan 24, 2025

Abstract: Benchmarks are important tools for tracking the rapid advancements in large language model (LLM) capabilities. However, benchmarks are not keeping pace in difficulty: LLMs now achieve over 90% accuracy on popular benchmarks like MMLU, limiting informed measurement of state-of-the-art LLM capabilities. In response, we introduce Humanity's Last Exam (HLE), a multi-modal benchmark at the frontier of human knowledge, designed to be the final closed-ended academic benchmark of its kind with broad subject coverage. HLE consists of 3,000 questions across dozens of subjects, including mathematics, humanities, and the natural sciences. HLE is developed globally by subject-matter experts and consists of multiple-choice and short-answer questions suitable for automated grading. Each question has a known solution that is unambiguous and easily verifiable, but cannot be quickly answered via internet retrieval. State-of-the-art LLMs demonstrate low accuracy and calibration on HLE, highlighting a significant gap between current LLM capabilities and the expert human frontier on closed-ended academic questions. To inform research and policymaking upon a clear understanding of model capabilities, we publicly release HLE at https://lastexam.ai.

On the Abuse and Detection of Polyglot Files

Jul 01, 2024

Abstract: A polyglot is a file that is valid in two or more formats. Polyglot files pose a problem for malware detection systems that route files to format-specific detectors/signatures, as well as file upload and sanitization tools. In this work we found that existing file-format and embedded-file detection tools, even those developed specifically for polyglot files, fail to reliably detect polyglot files used in the wild, leaving organizations vulnerable to attack. To address this issue, we studied the use of polyglot files by malicious actors in the wild, finding 30 polyglot samples and 15 attack chains that leveraged polyglot files. In this report, we highlight two well-known APTs whose cyber attack chains relied on polyglot files to bypass detection mechanisms. Using knowledge from our survey of polyglot usage in the wild -- the first of its kind -- we created a novel data set based on adversary techniques. We then trained a machine learning detection solution, PolyConv, using this data set. PolyConv achieves a precision-recall area-under-curve score of 0.999 with an F1 score of 99.20% for polyglot detection and 99.47% for file-format identification, significantly outperforming all other tools tested. We developed a content disarmament and reconstruction tool, ImSan, that successfully sanitized 100% of the tested image-based polyglots, which were the most common type found via the survey. Our work provides concrete tools and suggestions to enable defenders to better defend themselves against polyglot files, as well as directions for future work to create more robust file specifications and methods of disarmament.

The Path To Autonomous Cyber Defense

Apr 12, 2024

Abstract: Defenders are overwhelmed by the number and scale of attacks against their networks. This problem will only be exacerbated as attackers leverage artificial intelligence to automate their workflows. We propose a path to autonomous cyber agents able to augment defenders by automating critical steps in the cyber defense life cycle.

Data Needs and Challenges of Quantum Dot Devices Automation: Workshop Report

Dec 21, 2023

Abstract: Gate-defined quantum dots are a promising candidate system to realize scalable, coupled qubit systems and serve as a fundamental building block for quantum computers. However, present-day quantum dot devices suffer from imperfections that must be accounted for, which hinders the characterization, tuning, and operation process. Moreover, with an increasing number of quantum dot qubits, the relevant parameter space grows sufficiently to make heuristic control infeasible. Thus, it is imperative that reliable and scalable autonomous tuning approaches are developed. In this report, we outline current challenges in automating quantum dot device tuning and operation with a particular focus on datasets, benchmarking, and standardization. We also present ideas put forward by the quantum dot community on how to overcome them.

QDA$^2$: A principled approach to automatically annotating charge stability diagrams

Dec 18, 2023

Abstract: Gate-defined semiconductor quantum dot (QD) arrays are a promising platform for quantum computing. However, presently, the large configuration spaces and inherent noise make tuning of QD devices a nontrivial task and with the increasing number of QD qubits, the human-driven experimental control becomes unfeasible. Recently, researchers working with QD systems have begun putting considerable effort into automating device control, with a particular focus on machine-learning-driven methods. Yet, the reported performance statistics vary substantially in both the meaning and the type of devices used for testing. While systematic benchmarking of the proposed tuning methods is necessary for developing reliable and scalable tuning approaches, the lack of openly available standardized datasets of experimental data makes such testing impossible. The QD auto-annotator -- a classical algorithm for automatic interpretation and labeling of experimentally acquired data -- is a critical step toward rectifying this. QD auto-annotator leverages the principles of geometry to produce state labels for experimental double-QD charge stability diagrams and is a first step towards building a large public repository of labeled QD data.

Toward the Detection of Polyglot Files

Apr 13, 2022

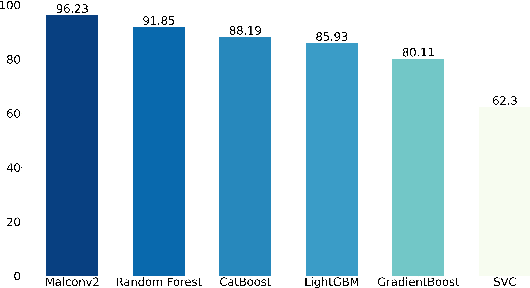

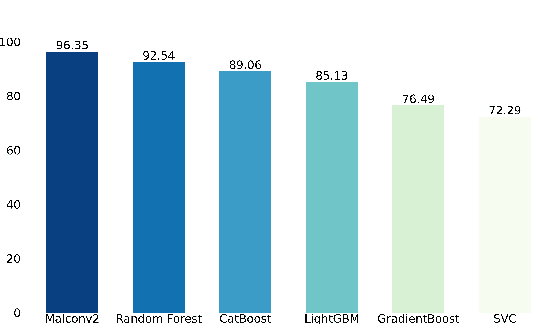

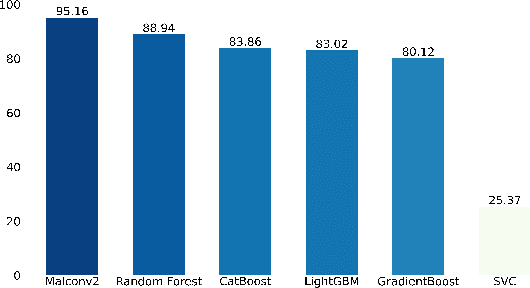

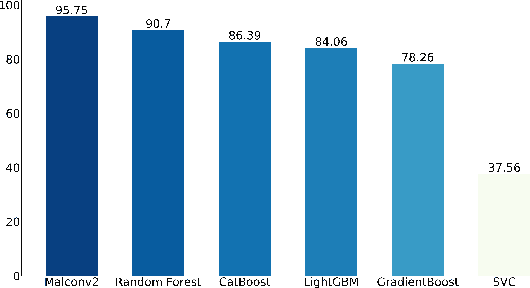

Abstract: Standardized file formats play a key role in the development and use of computer software. However, it is possible to abuse standardized file formats by creating a file that is valid in multiple file formats. The resulting polyglot (many languages) file can confound file format identification, allowing elements of the file to evade analysis. This is especially problematic for malware detection systems that rely on file format identification for feature extraction. File format identification processes that depend on file signatures can be easily evaded thanks to flexibility in the format specifications of certain file formats. Although work has been done to identify file formats using more comprehensive methods than file signatures, accurate identification of polyglot files remains an open problem. Since malware detection systems routinely perform file format-specific feature extraction, polyglot files need to be filtered out prior to ingestion by these systems. Otherwise, malicious content could pass through undetected. To address the problem of polyglot detection we assembled a data set using the mitra tool. We then evaluated the performance of the most commonly used file identification tool, file. Finally, we demonstrated the accuracy, precision, recall and F1 score of a range of machine and deep learning models. Malconv2 and Catboost demonstrated the highest recall on our data set with 95.16% and 95.45%, respectively. These models can be incorporated into a malware detector's file processing pipeline to filter out potentially malicious polyglots before file format-dependent feature extraction takes place.

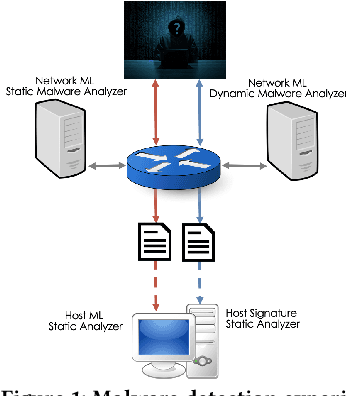

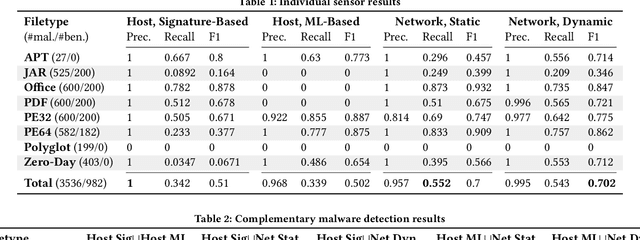

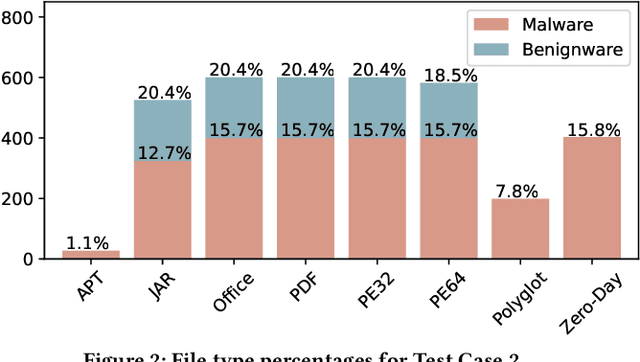

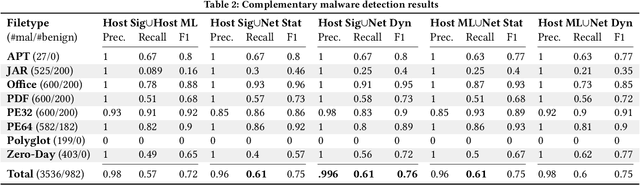

Beyond the Hype: A Real-World Evaluation of the Impact and Cost of Machine Learning--Based Malware Detection

Dec 16, 2020

Abstract: There is a lack of scientific testing of commercially available malware detectors, especially those that boast accurate classification of never-before-seen (zero-day) files using machine learning (ML). The result is that the efficacy and trade-offs among the different available approaches are opaque. In this paper, we address this gap in the scientific literature with an evaluation of commercially available malware detection tools. We tested each tool against 3,536 total files (2,554, or 72%, malicious; 982, or 28%, benign) including over 400 zero-day malware, and tested with a variety of file types and protocols for delivery. Specifically, we investigate three questions: Do ML-based malware detectors provide better detection than signature-based detectors? Is it worth purchasing a network-level malware detector to complement host-based detection? What is the trade-off in detection time and detection accuracy among commercially available tools using static and dynamic analysis? We present statistical results on detection time and accuracy, consider complementary analysis (using multiple tools together), and provide a novel application of a recent cost-benefit evaluation procedure by Iannaconne & Bridges that incorporates all the above metrics into a single quantifiable cost to help security operation centers select the right tools for their use case. Our results show that while ML-based tools are more effective at detecting zero-days and malicious executables, they work best when used in combination with a signature-based solution. In addition, network-based tools had poor detection rates on protocols other than HTTP or SMTP, making them a poor choice if used on their own. Surprisingly, we also found that all the tools tested had lower than expected detection rates, completely missing 37% of malicious files tested and failing to detect any polyglot files.
