Juraj Peršić

Impact of Temporal Delay on Radar-Inertial Odometry

Mar 04, 2025

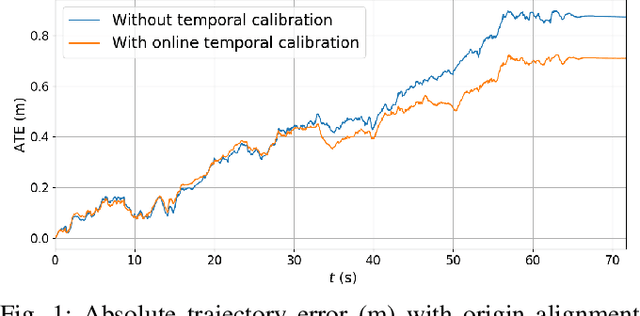

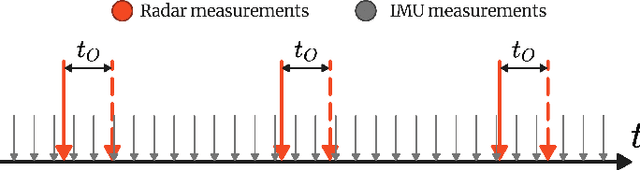

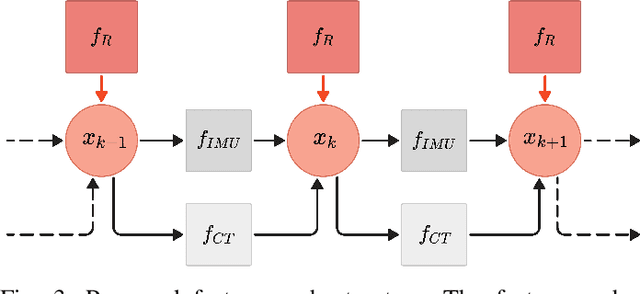

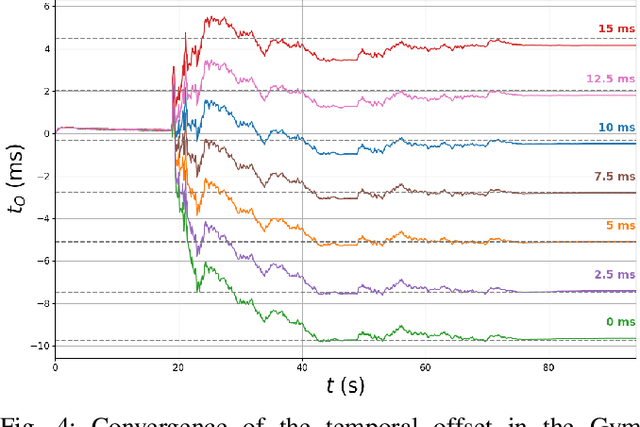

Abstract: Accurate ego-motion estimation is a critical component of any autonomous system. Conventional ego-motion sensors, such as cameras and LiDARs, may be compromised in adverse environmental conditions, such as fog, heavy rain, or dust. Automotive radars, known for their robustness to such conditions, are a promising complement or alternative to these sensors within ego-motion estimation frameworks. In this paper, we propose a novel Radar-Inertial Odometry (RIO) system that integrates an automotive radar and an inertial measurement unit (IMU). The key contribution is the integration of online temporal delay calibration within the factor graph optimization framework, which compensates for potential time offsets between radar and IMU measurements. To validate the proposed approach, we conducted a thorough experimental analysis on real-world radar and IMU data. The results show that, even without scan matching or target tracking, online temporal calibration significantly reduces localization error compared to systems that disregard time synchronization, highlighting the important but often neglected role of accurate temporal alignment in radar-based sensor fusion systems for autonomous navigation.

RAVE: A Framework for Radar Ego-Velocity Estimation

Jun 27, 2024

Abstract: State estimation is an essential component of autonomous systems, usually relying on sensor fusion that integrates data from cameras, LiDARs and IMUs. Recently, radars have shown the potential to improve the accuracy and robustness of state estimation and perception, especially in challenging environmental conditions such as adverse weather and low-light scenarios. In this paper, we present a framework for ego-velocity estimation, which we call RAVE, that relies on 3D automotive radar data and encompasses zero velocity detection, outlier rejection, and velocity estimation. In addition, we propose a simple filtering method to discard infeasible ego-velocity estimates. We also conduct a systematic analysis of how different existing outlier rejection techniques and optimization loss functions impact estimation accuracy. Our evaluation on three open-source datasets demonstrates the effectiveness of the proposed filter and a significant positive impact of RAVE on odometry accuracy. Furthermore, we release an open-source implementation of the proposed framework for radar ego-velocity estimation, accompanied by a ROS interface.
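As a rough illustration of the velocity estimation step only, the sketch below fits a 3D ego-velocity to radar Doppler readings by ordinary least squares. It is not the RAVE implementation: the function names `solve3` and `estimate_ego_velocity` are hypothetical, every target is assumed to be static, and the sign convention (a static target's Doppler velocity is the negative projection of the sensor velocity onto the line of sight) is an assumption. Zero velocity detection, outlier rejection, and robust loss functions are deliberately left out.

```python
import math


def solve3(M, b):
    """Solve the 3x3 system M x = b by Gaussian elimination with partial pivoting."""
    A = [list(M[i]) + [b[i]] for i in range(3)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("degenerate target geometry")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, 3):
            f = A[r][col] / A[col][col]
            for c in range(col, 4):
                A[r][c] -= f * A[col][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        s = A[i][3] - sum(A[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = s / A[i][i]
    return x


def estimate_ego_velocity(points, dopplers):
    """Least-squares sensor velocity from static radar targets (hypothetical helper).

    points   : (x, y, z) target positions in the sensor frame
    dopplers : measured radial velocities, assumed to satisfy v_r = -d . v
               where d is the unit line-of-sight vector to the target
    """
    AtA = [[0.0] * 3 for _ in range(3)]
    Atb = [0.0] * 3
    for p, vr in zip(points, dopplers):
        norm = math.sqrt(sum(c * c for c in p))
        d = [c / norm for c in p]
        # Normal equations of min sum (v_r + d . v)^2:
        # (sum d d^T) v = -(sum d v_r)
        for i in range(3):
            Atb[i] -= d[i] * vr
            for j in range(3):
                AtA[i][j] += d[i] * d[j]
    return solve3(AtA, Atb)
```

At least three targets with linearly independent directions are needed; otherwise `solve3` raises `ValueError`. With real data, a robust step such as RANSAC would typically run before or around this fit, since moving objects violate the static-target assumption.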

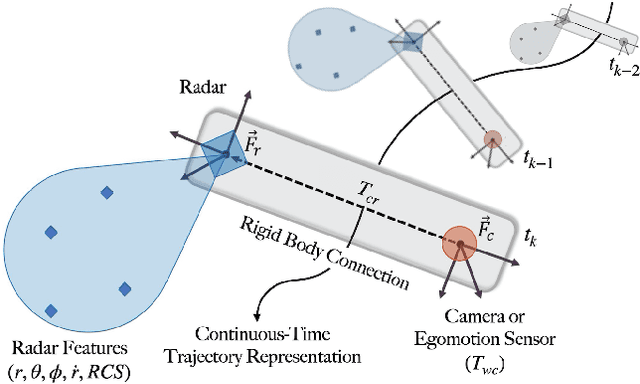

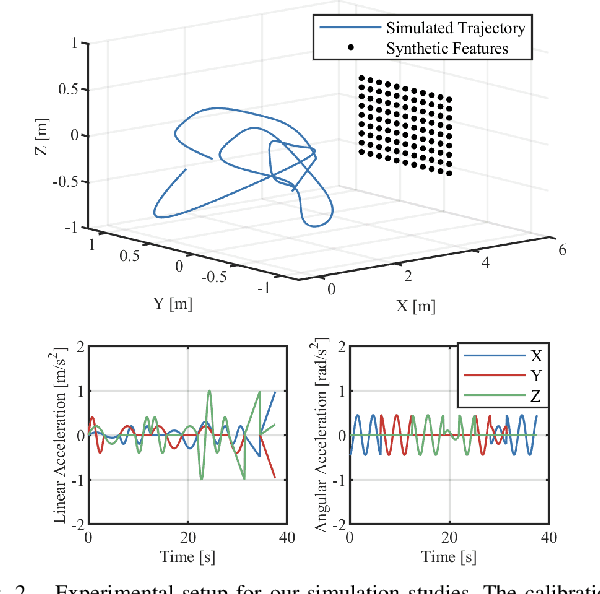

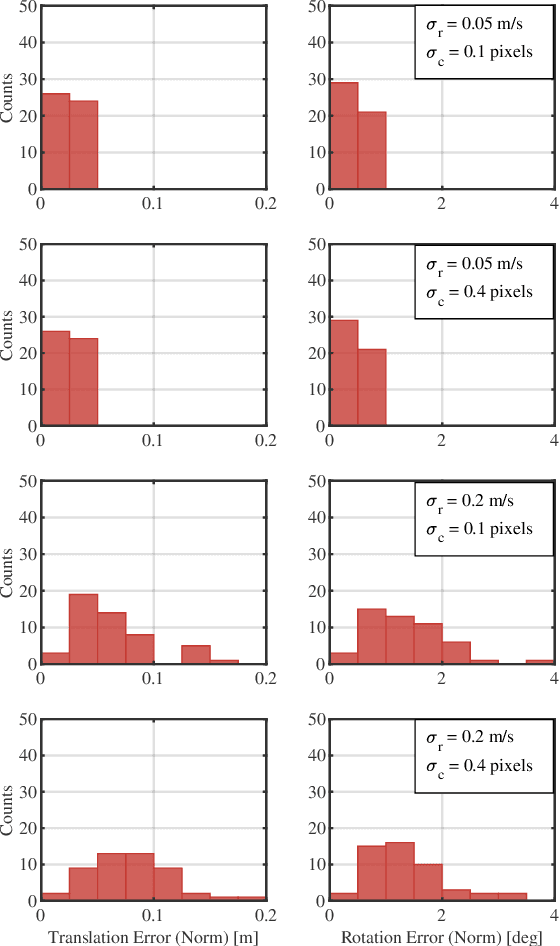

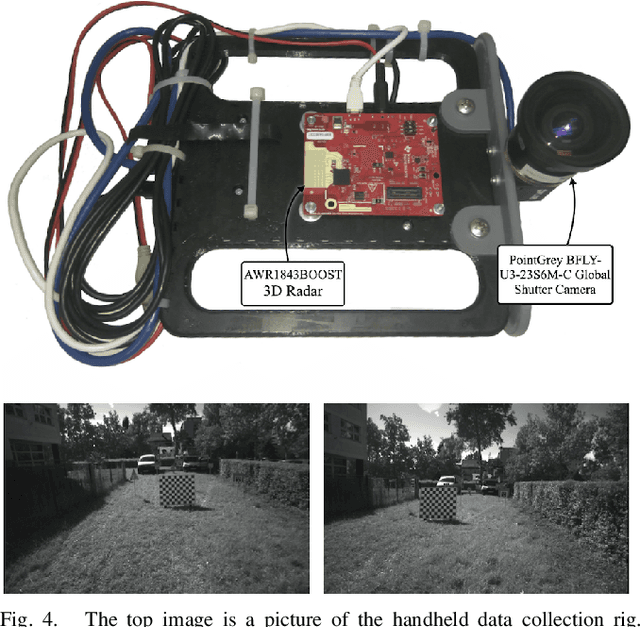

A Continuous-Time Approach for 3D Radar-to-Camera Extrinsic Calibration

Mar 12, 2021

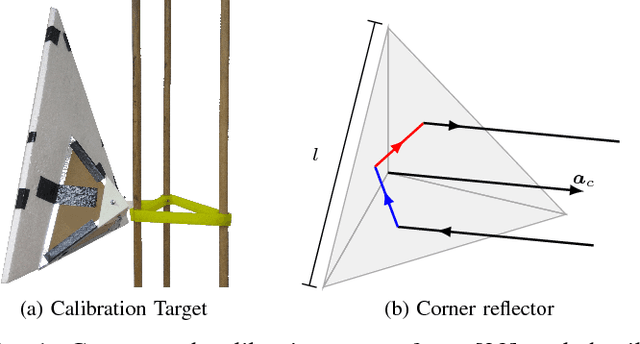

Abstract: Reliable operation in inclement weather is essential to the deployment of safe autonomous vehicles (AVs). Robustness and reliability can be achieved by fusing data from the standard AV sensor suite (i.e., lidars, cameras) with weather-robust sensors, such as millimetre-wavelength radar. Critically, accurate sensor data fusion requires knowledge of the rigid-body transform between sensor pairs, which can be determined through the process of extrinsic calibration. A number of extrinsic calibration algorithms have been designed for 2D (planar) radar sensors; however, recently developed, low-cost 3D millimetre-wavelength radars are set to displace their 2D counterparts in many applications. In this paper, we present a continuous-time 3D radar-to-camera extrinsic calibration algorithm that utilizes radar velocity measurements and, unlike the majority of existing techniques, does not require specialized radar retroreflectors to be present in the environment. We derive the observability properties of our formulation and demonstrate the efficacy of our algorithm through synthetic and real-world experiments.

Stochastic Optimization for Trajectory Planning with Heteroscedastic Gaussian Processes

Jul 17, 2019

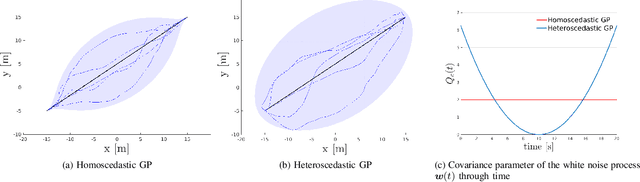

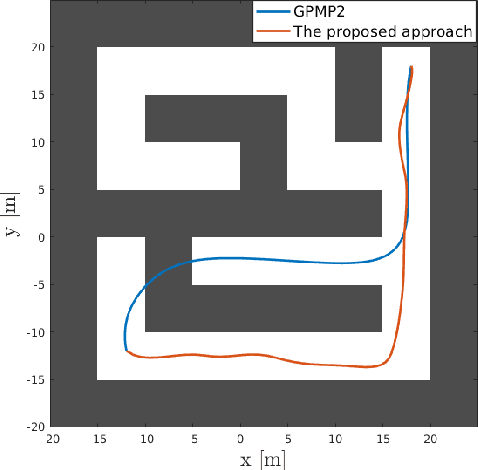

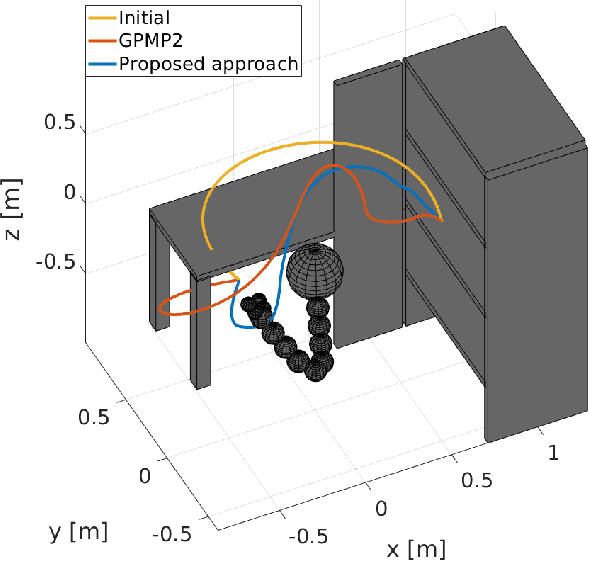

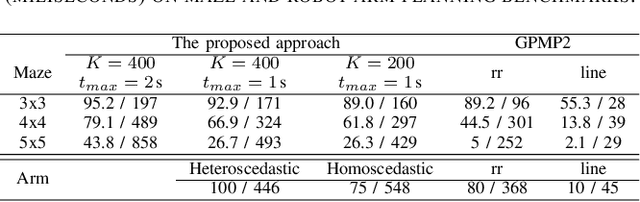

Abstract: Trajectory optimization methods for motion planning attempt to generate trajectories that minimize a suitable objective function. Such methods efficiently find solutions even for high degree-of-freedom robots. However, a globally optimal solution is often intractable in practice, and state-of-the-art trajectory optimization methods are thus prone to local minima, especially in cluttered environments. In this paper, we propose a novel motion planning algorithm that employs stochastic optimization based on the cross-entropy method in order to tackle the local minima problem. We represent trajectories as samples from a continuous-time Gaussian process and introduce heteroscedasticity to generate powerful trajectory priors better suited for collision avoidance in motion planning problems. Our experimental evaluation shows that the proposed approach yields a more thorough exploration of the solution space and a higher success rate in complex environments than a current state-of-the-art trajectory optimization method based on Gaussian processes, namely GPMP2, while having comparable execution time.
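For readers unfamiliar with the cross-entropy method, the following is a minimal generic sketch: sample candidates from a diagonal Gaussian, keep the lowest-cost "elite" samples, and refit the Gaussian to them. It does not reproduce the paper's planner; there is no Gaussian process trajectory prior, heteroscedasticity, or collision cost here, and the function name `cross_entropy_minimize` and all default parameters are assumptions chosen for illustration.

```python
import random
import statistics


def cross_entropy_minimize(cost, mean, std, n_samples=50, n_elite=10,
                           iters=30, seed=0):
    """Generic cross-entropy minimization over a vector (hypothetical helper).

    cost : function mapping a list of floats to a scalar cost
    mean : initial mean of the sampling distribution, one entry per dimension
    std  : initial standard deviation, one entry per dimension
    """
    rng = random.Random(seed)
    mean, std = list(mean), list(std)
    for _ in range(iters):
        # Draw candidates from the current diagonal Gaussian.
        samples = [[rng.gauss(m, s) for m, s in zip(mean, std)]
                   for _ in range(n_samples)]
        # Keep the lowest-cost candidates as the elite set.
        samples.sort(key=cost)
        elite = samples[:n_elite]
        # Refit the Gaussian to the elite set, dimension by dimension.
        for k in range(len(mean)):
            column = [x[k] for x in elite]
            mean[k] = statistics.fmean(column)
            std[k] = max(statistics.pstdev(column), 1e-6)  # avoid zero spread
    return mean


# Usage: minimize a simple quadratic bowl centred at (3, -1).
best = cross_entropy_minimize(
    lambda x: (x[0] - 3.0) ** 2 + (x[1] + 1.0) ** 2,
    mean=[0.0, 0.0],
    std=[5.0, 5.0],
)
```

Because the search is driven by sampling rather than gradients, a wide initial `std` lets the method explore several basins before it commits to one, which is the property the abstract relies on to escape local minima.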

Spatio-Temporal Multisensor Calibration Based on Gaussian Processes Moving Object Tracking

Apr 08, 2019

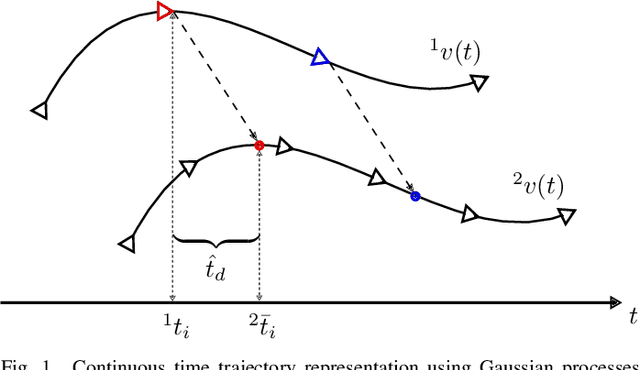

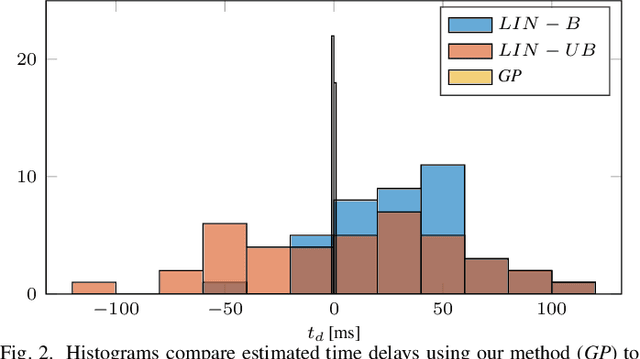

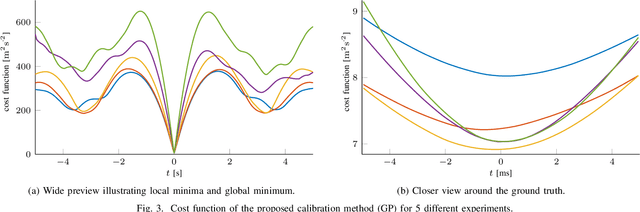

Abstract: Perception is one of the key abilities of autonomous mobile robotic systems and often relies on the fusion of heterogeneous sensors. Although this heterogeneity presents a challenge for sensor calibration, it is also the main prospect for reliability and robustness of autonomous systems. In this paper, we propose a method for multisensor calibration based on moving object trajectories estimated with Gaussian processes (GPs), which yields both temporal and extrinsic parameters. The appealing properties of the proposed temporal calibration method are: coordinate frame invariance, which avoids the need for prior extrinsic calibration; theoretically grounded batch state estimation and interpolation using GPs; computational efficiency with O(n) complexity; use of data already available on autonomous robot platforms; and an end result that enables 3D point-to-point extrinsic multisensor calibration. The proposed method is validated both in simulations and in real-world experiments. In the real-world experiments, we evaluated the method on two multisensor systems: an externally triggered stereo camera, for which temporal ground truth is readily available, and a heterogeneous combination of a camera and a motion capture system. The results show that the estimated time delays are accurate up to a fraction of the fastest sensor sampling time.
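To make the idea of temporal calibration concrete, here is a deliberately simplified stand-in: a grid search over candidate delays that minimizes the mean squared difference between two scalar signals, using linear interpolation in place of GP interpolation. This is not the GP-based method described in the abstract. The function names `interpolate` and `estimate_delay`, and the convention that sensor B's timestamps lag by the delay (a sample stamped `t` corresponds to time `t - delay` on sensor A's clock), are assumptions made for illustration.

```python
import bisect
import math


def interpolate(times, values, t):
    """Linearly interpolate a sampled signal at time t; None outside the range."""
    if t < times[0] or t > times[-1]:
        return None
    i = bisect.bisect_right(times, t)
    if i == len(times):  # t equals the last timestamp
        return values[-1]
    t0, t1 = times[i - 1], times[i]
    w = (t - t0) / (t1 - t0)
    return (1.0 - w) * values[i - 1] + w * values[i]


def estimate_delay(times_a, values_a, times_b, values_b, candidates):
    """Pick the candidate delay that best aligns signal B with signal A.

    Assumed convention: a B sample stamped t matches signal A at t - delay.
    """
    best_delay, best_cost = None, math.inf
    for delay in candidates:
        total, count = 0.0, 0
        for t, vb in zip(times_b, values_b):
            va = interpolate(times_a, values_a, t - delay)
            if va is not None:
                total += (va - vb) ** 2
                count += 1
        # Normalize by the overlap so that shorter overlaps are not favoured.
        if count and total / count < best_cost:
            best_delay, best_cost = delay, total / count
    return best_delay
```

The resolution of the estimate is limited by the spacing of `candidates`; a continuous optimizer over the delay would be needed to reach the sub-sample accuracy the abstract reports.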

Calibration of Heterogeneous Sensor Systems

Dec 29, 2018

Abstract: Environment perception is a key component of any autonomous system and is often based on a heterogeneous set of sensors and their fusion, for which sensor calibration plays a fundamental role. Calibration can be divided into intrinsic and extrinsic sensor calibration. The former seeks the internal parameters of each individual sensor, while the latter provides the coordinate frame transformation between sensors. Calibration techniques require correspondence registration in the measurements, which is one of the main challenges in the extrinsic calibration of heterogeneous sensors, since each sensor can generally operate on a different physical principle. Measurement correspondences can originate from a designated calibration target or from features in the environment. Additionally, environment features can be used to estimate the motion of individual sensors, and the calibration is then found by aligning these estimates. Motion-based calibration is the most common approach to online calibration since it is more practical than target-based methods, although it can lack accuracy. Furthermore, online calibration benefits system robustness, as it can detect the need for recalibration and adjust the calibration at runtime, which can be seen as a prerequisite for long-term autonomy.
