Spatio-Temporal Multisensor Calibration Based on Gaussian Processes Moving Object Tracking

Paper and Code

Apr 08, 2019

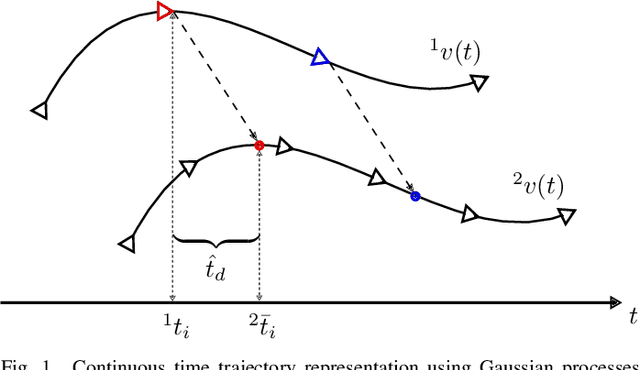

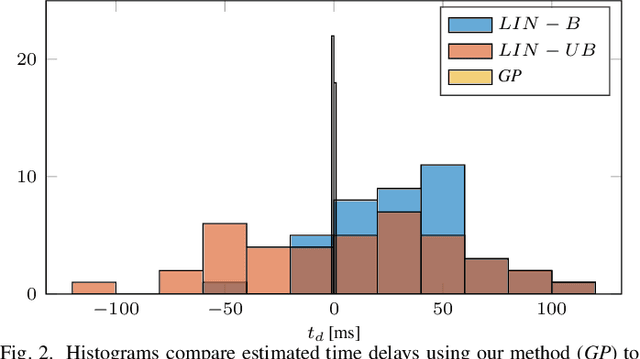

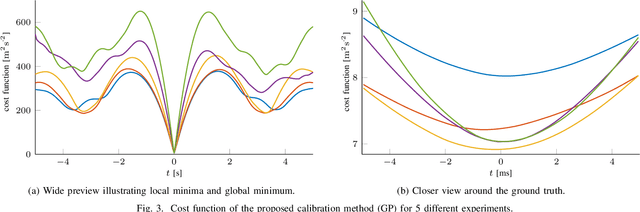

Perception is one of the key abilities of autonomous mobile robotic systems, and it often relies on the fusion of heterogeneous sensors. Although this heterogeneity presents a challenge for sensor calibration, it is also the main prospect for reliability and robustness of autonomous systems. In this paper, we propose a method for multisensor calibration based on moving object trajectories estimated with Gaussian processes (GPs), yielding both temporal and extrinsic parameters. The appealing properties of the proposed temporal calibration method are: coordinate frame invariance, which avoids the need for prior extrinsic calibration; theoretically grounded batch state estimation and interpolation using GPs; computational efficiency with O(n) complexity; use of data already available on autonomous robot platforms; and an end result that enables 3D point-to-point extrinsic multisensor calibration. The proposed method is validated both in simulations and in real-world experiments. In the real-world experiments, we evaluated the method on two multisensor systems: an externally triggered stereo camera, for which temporal ground truth is readily available, and a heterogeneous combination of a camera and a motion capture system. The results show that the estimated time delays are accurate up to a fraction of the sampling time of the fastest sensor.
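To make the idea of coordinate frame invariance concrete, the following is a minimal sketch and not the paper's estimator. It assumes two sensors track the same moving object in different, unknown coordinate frames, and it exploits the fact that the magnitude of the object's velocity is unchanged by a rigid transform. Finite differences and linear interpolation (`np.interp`) stand in for the GP-based state estimation and interpolation described above, and a simple grid search stands in for the paper's delay estimation. The function names (`speed_norm`, `estimate_delay`, `simulate`), the synthetic trajectory, and all parameter values are hypothetical and chosen only for illustration.

```python
import numpy as np


def speed_norm(t, p):
    """Speed magnitude at interval midpoints from positions p (n, 3) at times t (n,).

    The norm of the velocity is invariant to rotation and translation,
    so it can be compared across sensors without extrinsic calibration.
    """
    dt = np.diff(t)
    v = np.diff(p, axis=0) / dt[:, None]
    t_mid = 0.5 * (t[:-1] + t[1:])
    return t_mid, np.linalg.norm(v, axis=1)


def estimate_delay(t_a, p_a, t_b, p_b, max_delay=0.5, step=1e-3):
    """Grid search for the delay d such that speed_a(t) matches speed_b(t + d).

    Assumption: sensor B stamps an event that happened at time tau as tau + d.
    """
    ta, sa = speed_norm(t_a, p_a)
    tb, sb = speed_norm(t_b, p_b)
    best_d, best_cost = 0.0, np.inf
    for d in np.arange(-max_delay, max_delay + step, step):
        query = ta + d
        # Only compare where the shifted times fall inside sensor B's data.
        mask = (query >= tb[0]) & (query <= tb[-1])
        if mask.sum() < 2:
            continue
        # Linear interpolation here; the paper uses GP interpolation instead.
        sb_at_a = np.interp(query[mask], tb, sb)
        cost = np.mean((sa[mask] - sb_at_a) ** 2)
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def simulate(true_delay, rate_a=100.0, rate_b=30.0, duration=10.0):
    """Synthetic data: one object seen by two sensors in different frames."""
    def traj(tau):
        return np.stack(
            [np.sin(tau), np.cos(2 * tau), 0.5 * np.sin(3 * tau + 0.3)], axis=1
        )

    t_a = np.arange(0.0, duration, 1.0 / rate_a)
    t_b = np.arange(0.0, duration, 1.0 / rate_b)
    # Hypothetical unknown extrinsics of sensor B: rotation about z plus offset.
    c, s = np.cos(0.7), np.sin(0.7)
    R = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    offset = np.array([1.0, -2.0, 0.5])
    p_a = traj(t_a)
    p_b = traj(t_b - true_delay) @ R.T + offset
    return t_a, p_a, t_b, p_b


if __name__ == "__main__":
    data = simulate(true_delay=0.05)
    print("estimated delay [s]:", estimate_delay(*data))
```

The grid search costs one pass over the data per candidate delay, so it does not reproduce the O(n) complexity claimed for the proposed method; it only illustrates why a frame-invariant quantity lets the time delay be estimated before the extrinsic parameters are known.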
