Jung-Eun Kim

Decoupling Generalizability and Membership Privacy Risks in Neural Networks

Feb 02, 2026

Abstract: A deep learning model usually has to sacrifice some utilities when it acquires some other abilities or characteristics. Privacy preservation has such trade-off relationships with utilities. The loss disparity between various defense approaches implies the potential to decouple generalizability and privacy risks to maximize privacy gain. In this paper, we identify that the model's generalization and privacy risks exist in different regions in deep neural network architectures. Based on the observations that we investigate, we propose Privacy-Preserving Training Principle (PPTP) to protect model components from privacy risks while minimizing the loss in generalizability. Through extensive evaluations, our approach shows significantly better maintenance in model generalizability while enhancing privacy preservation.

Impact of Layer Norm on Memorization and Generalization in Transformers

Nov 13, 2025

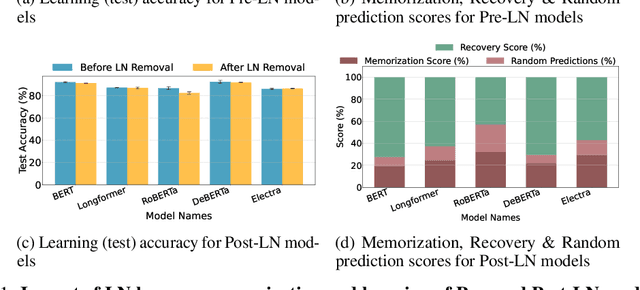

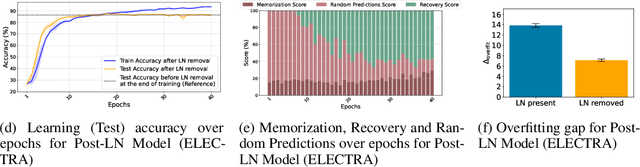

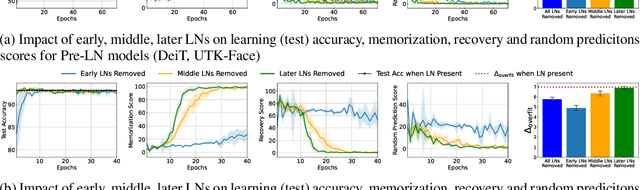

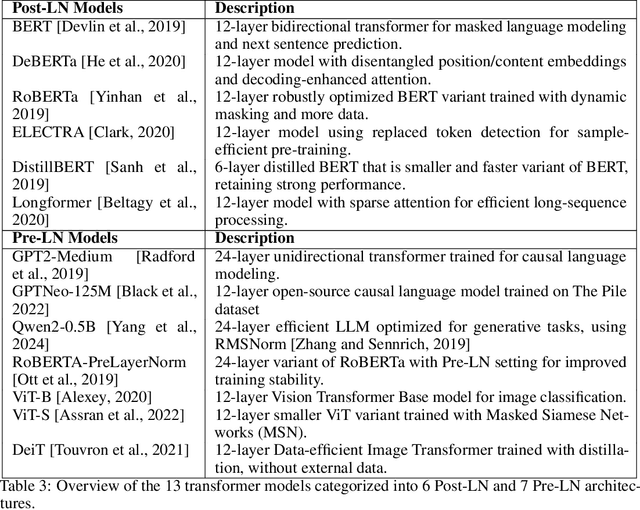

Abstract: Layer Normalization (LayerNorm) is one of the fundamental components in transformers that stabilizes training and improves optimization. In recent times, Pre-LayerNorm transformers have become the preferred choice over Post-LayerNorm transformers due to their stable gradient flow. However, the impact of LayerNorm on learning and memorization across these architectures remains unclear. In this work, we investigate how LayerNorm influences memorization and learning for Pre- and Post-LayerNorm transformers. We identify that LayerNorm serves as a key factor for stable learning in Pre-LayerNorm transformers, while in Post-LayerNorm transformers, it impacts memorization. Our analysis reveals that eliminating LayerNorm parameters in Pre-LayerNorm models exacerbates memorization and destabilizes learning, while in Post-LayerNorm models, it effectively mitigates memorization by restoring genuine labels. We further identify that LayerNorm in early layers is more critical than in middle/later layers, and that its influence varies across Pre- and Post-LayerNorm models. We validate these findings on 13 models across 6 vision and language datasets. These insights shed new light on the role of LayerNorm in shaping memorization and learning in transformers.
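For readers unfamiliar with the two layouts, the following is a minimal sketch of where LayerNorm sits in a Pre-LayerNorm versus a Post-LayerNorm residual block. It is not the paper's code: `toy_sublayer` is a hypothetical stand-in for attention or an MLP, and treating "no `gamma`/`beta`" as identity scale and zero shift is an assumption made here for illustration.

```python
import math

def layer_norm(x, gamma=None, beta=None, eps=1e-5):
    """Normalize a vector to zero mean and unit variance, then scale and shift."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n
    normed = [(v - mean) / math.sqrt(var + eps) for v in x]
    # Assumption: missing learnable parameters mean identity scale, zero shift.
    if gamma is None:
        gamma = [1.0] * n
    if beta is None:
        beta = [0.0] * n
    return [g * v + b for g, v, b in zip(gamma, normed, beta)]

def toy_sublayer(x):
    """Hypothetical stand-in for attention or an MLP: a fixed elementwise map."""
    return [2.0 * v + 1.0 for v in x]

def pre_ln_block(x, sublayer=toy_sublayer):
    # Pre-LayerNorm: x + f(LN(x)); the residual path itself is not normalized.
    return [a + b for a, b in zip(x, sublayer(layer_norm(x)))]

def post_ln_block(x, sublayer=toy_sublayer):
    # Post-LayerNorm: LN(x + f(x)); normalization is applied after the residual sum.
    return layer_norm([a + b for a, b in zip(x, sublayer(x))])
```

In this toy setting the Post-LayerNorm output is always re-centered, whereas the Pre-LayerNorm output keeps the scale of the un-normalized residual stream.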

Explaining How Quantization Disparately Skews a Model

Sep 08, 2025

Abstract: Post Training Quantization (PTQ) is widely adopted due to its high compression capacity and speed with minimal impact on accuracy. However, we observed that disparate impacts are exacerbated by quantization, especially for minority groups. Our analysis explains that in the course of quantization there is a chain of factors attributed to a disparate impact across groups during forward and backward passes. We explore how the changes in weights and activations induced by quantization cause cascaded impacts in the network, resulting in logits with lower variance, increased loss, and compromised group accuracies. We extend our study to verify the influence of these impacts on group gradient norms and eigenvalues of the Hessian matrix, providing insights into the state of the network from an optimization point of view. To mitigate these effects, we propose integrating mixed precision Quantization Aware Training (QAT) with dataset sampling methods and weighted loss functions, therefore providing fair deployment of quantized neural networks.
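As background for the weight changes discussed above, here is a minimal sketch of symmetric, per-tensor uniform quantization, one common PTQ scheme. The abstract does not specify which scheme the paper uses, so this choice and the function names are assumptions for illustration only.

```python
def quantize_symmetric(weights, num_bits=8):
    """Map floats to signed integer codes with a single per-tensor scale."""
    qmax = 2 ** (num_bits - 1) - 1            # e.g. 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the representable range.
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate float weights from integer codes."""
    return [c * scale for c in codes]

def max_abs_error(weights, num_bits):
    """Largest absolute difference between original and dequantized weights."""
    codes, scale = quantize_symmetric(weights, num_bits)
    restored = dequantize(codes, scale)
    return max(abs(w - r) for w, r in zip(weights, restored))
```

The rounding error per weight is bounded by half the scale, so fewer bits mean a coarser grid and larger perturbations of weights and, downstream, of activations and logits.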

RESQUE: Quantifying Estimator to Task and Distribution Shift for Sustainable Model Reusability

Dec 20, 2024

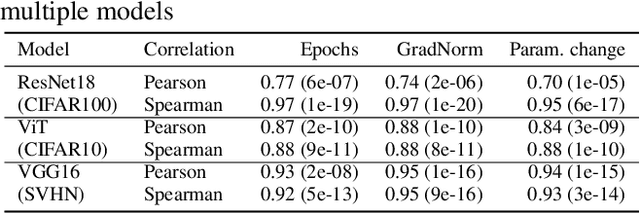

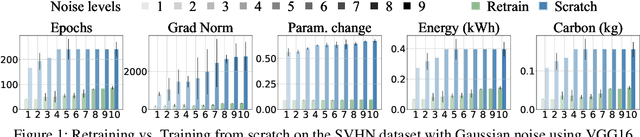

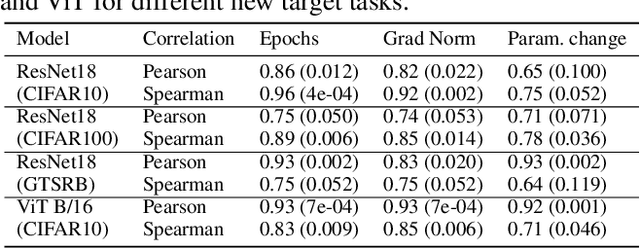

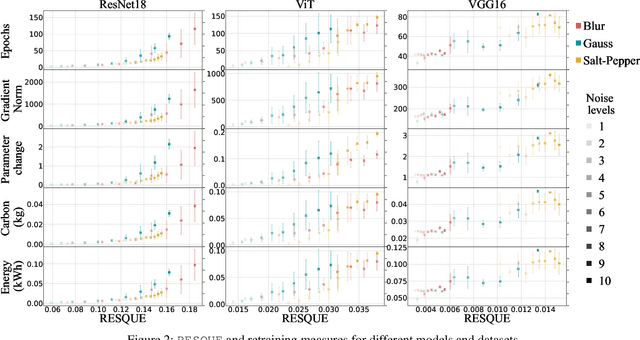

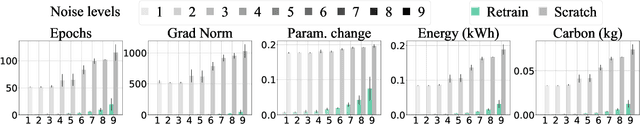

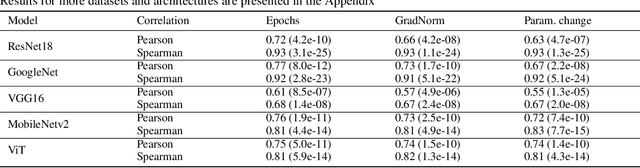

Abstract: As a strategy for sustainability of deep learning, reusing an existing model by retraining it rather than training a new model from scratch is critical. In this paper, we propose REpresentation Shift QUantifying Estimator (RESQUE), a predictive quantifier to estimate the retraining cost of a model to distributional shifts or change of tasks. It provides a single concise index for an estimate of resources required for retraining the model. Through extensive experiments, we show that RESQUE has a strong correlation with various retraining measures. Our results validate that RESQUE is an effective indicator in terms of epochs, gradient norms, changes of parameter magnitude, energy, and carbon emissions. These measures align well with RESQUE for new tasks, multiple noise types, and varying noise intensities. As a result, RESQUE enables users to make informed decisions for retraining to different tasks/distribution shifts and determine the most cost-effective and sustainable option, allowing for the reuse of a model with a much smaller footprint in the environment. The code for this work is available here: https://github.com/JEKimLab/AAAI2025RESQUE

Superficial Safety Alignment Hypothesis

Oct 07, 2024

Abstract: As large language models (LLMs) are increasingly integrated into various applications, ensuring they generate safe and aligned responses is a pressing need. Previous research on alignment has largely focused on general instruction-following but has often overlooked the unique properties and challenges of safety alignment, such as the brittleness of safety mechanisms. To bridge the gap, we propose the Superficial Safety Alignment Hypothesis (SSAH), which posits that safety alignment should teach an otherwise unsafe model to choose the correct reasoning direction - interpreted as a specialized binary classification task - and incorporate a refusal mechanism with multiple reserved fallback options. Furthermore, through SSAH, we hypothesize that safety guardrails in LLMs can be established by just a small number of essential components. To verify this, we conduct an ablation study and successfully identify four types of attribute-critical components in safety-aligned LLMs: Exclusive Safety Unit (ESU), Exclusive Utility Unit (EUU), Complex Unit (CU), and Redundant Unit (RU). Our findings show that freezing certain safety-critical components (7.5%) during fine-tuning allows the model to retain its safety attributes while adapting to new tasks. Additionally, we show that leveraging redundant units (20%) in the pre-trained model as an "alignment budget" can effectively minimize the alignment tax while achieving the alignment goal. All considered, this paper concludes that the atomic functional unit for safety in LLMs is at the neuron level and underscores that safety alignment should not be complicated. We believe this work contributes to the foundation of efficient and scalable safety alignment for future LLMs.
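The idea of freezing a small fraction of units during fine-tuning can be sketched as a masked gradient step. This is an illustration only: the abstract does not say how safety-critical units are scored, so the `scores` input, the top-fraction selection rule, and both function names are hypothetical.

```python
def select_frozen(scores, fraction):
    """Pick the indices of the highest-scoring units (hypothetical selection rule)."""
    k = max(1, round(fraction * len(scores)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return set(ranked[:k])

def sgd_step_with_frozen(params, grads, frozen, lr=0.1):
    """One plain SGD step that leaves the parameters at frozen indices untouched."""
    return [
        p if i in frozen else p - lr * g
        for i, (p, g) in enumerate(zip(params, grads))
    ]
```

For example, with 40 units and `fraction=0.075`, three units are frozen and the remaining 37 are updated as usual.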

Representation Magnitude has a Liability to Privacy Vulnerability

Jul 23, 2024

Abstract: The privacy-preserving approaches to machine learning (ML) models have made substantial progress in recent years. However, it is still opaque in which circumstances and conditions the model becomes privacy-vulnerable, leading to a challenge for ML models to maintain both performance and privacy. In this paper, we first explore the disparity between member and non-member data in the representation of models under common training frameworks. We identify how the representation magnitude disparity correlates with privacy vulnerability and address how this correlation impacts privacy vulnerability. Based on the observations, we propose Saturn Ring Classifier Module (SRCM), a plug-in model-level solution to mitigate membership privacy leakage. Through a confined yet effective representation space, our approach ameliorates models' privacy vulnerability while maintaining generalizability. The code of this work can be found here: https://github.com/JEKimLab/AIES2024_SRCM

Estimating Environmental Cost Throughout Model's Adaptive Life Cycle

Jul 23, 2024

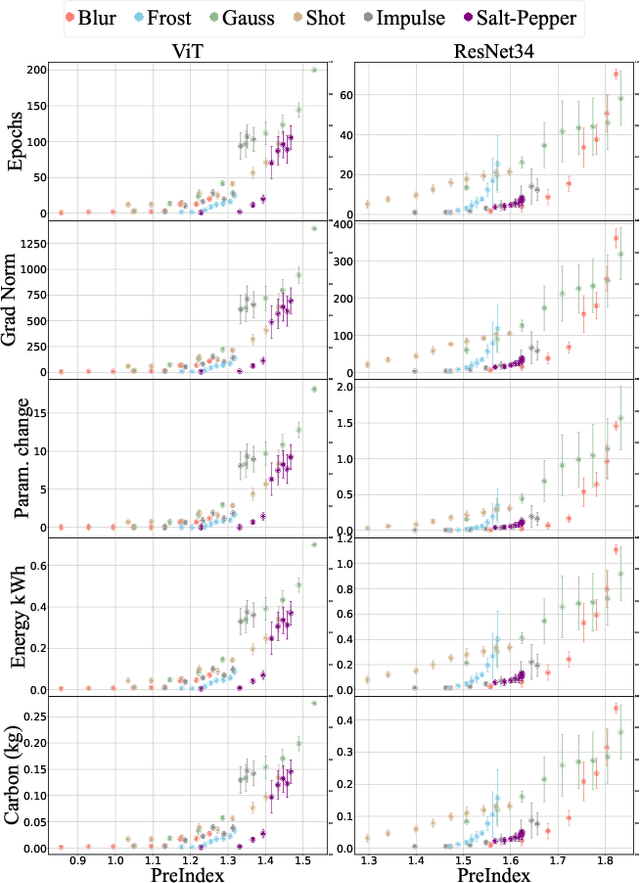

Abstract: With the rapid increase in the research, development, and application of neural networks in the current era, there is a proportional increase in the energy needed to train and use models. Crucially, this is accompanied by the increase in carbon emissions into the environment. A sustainable and socially beneficial approach to reducing the carbon footprint and rising energy demands associated with the modern age of AI/deep learning is the adaptive and continuous reuse of models with regard to changes in the environment of model deployment or variations/changes in the input data. In this paper, we propose PreIndex, a predictive index to estimate the environmental and compute resources associated with model retraining to distributional shifts in data. PreIndex can be used to estimate environmental costs such as carbon emissions and energy usage when retraining from current data distribution to new data distribution. It also correlates with and can be used to estimate other resource indicators associated with deep learning, such as epochs, gradient norm, and magnitude of model parameter change. PreIndex requires only one forward pass of the data, following which it provides a single concise value to estimate resources associated with retraining to the new distribution shifted data. We show that PreIndex can be reliably used across various datasets, model architectures, different types, and intensities of distribution shifts. Thus, PreIndex enables users to make informed decisions for retraining to different distribution shifts and determine the most cost-effective and sustainable option, allowing for the reuse of a model with a much smaller footprint in the environment. The code for this work is available here: https://github.com/JEKimLab/AIES2024PreIndex

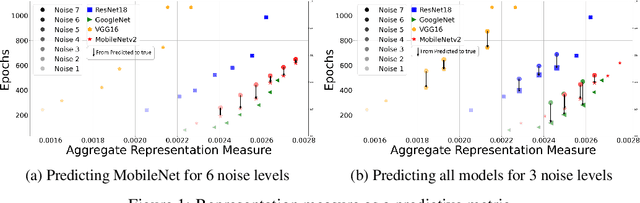

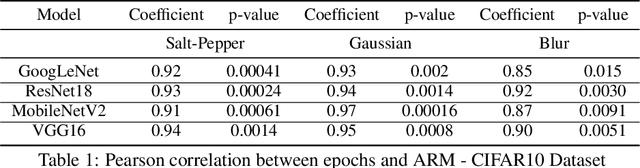

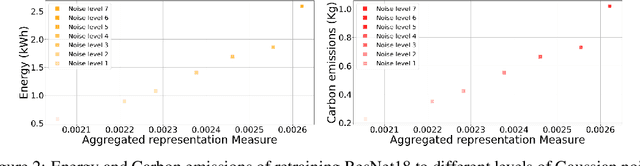

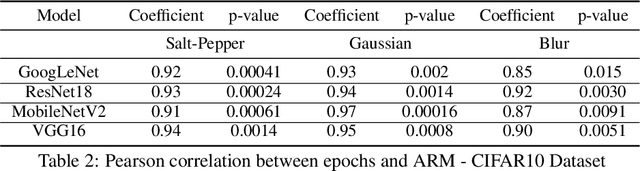

Aggregate Representation Measure for Predictive Model Reusability

May 15, 2024

Abstract: In this paper, we propose a predictive quantifier to estimate the retraining cost of a trained model in distribution shifts. The proposed Aggregated Representation Measure (ARM) quantifies the change in the model's representation from the old to new data distribution. It provides, before actually retraining the model, a single concise index of resources - epochs, energy, and carbon emissions - required for the retraining. This enables reuse of a model with a much lower cost than training a new model from scratch. The experimental results indicate that ARM reasonably predicts retraining costs for varying noise intensities and enables comparisons among multiple model architectures to determine the most cost-effective and sustainable option.

Center-Based Relaxed Learning Against Membership Inference Attacks

Apr 26, 2024

Abstract: Membership inference attacks (MIAs) are currently considered one of the main privacy attack strategies, and their defense mechanisms have also been extensively explored. However, there is still a gap between the existing defense approaches and ideal models in performance and deployment costs. In particular, we observed that the privacy vulnerability of the model is closely correlated with the gap between the model's data-memorizing ability and generalization ability. To address this, we propose a new architecture-agnostic training paradigm called center-based relaxed learning (CRL), which is adaptive to any classification model and provides privacy preservation at minimal or no cost to model generalizability. We emphasize that CRL can better maintain the model's consistency between member and non-member data. Through extensive experiments on standard classification datasets, we empirically show that this approach exhibits comparable performance without requiring additional model capacity or data costs.
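To make the threat model concrete, here is a minimal sketch of a loss-threshold membership inference attack, a simple and widely used baseline. It is not the CRL defense and not necessarily the attack evaluated in the paper; the threshold and the example losses are illustrative assumptions.

```python
def loss_threshold_attack(losses, threshold):
    """Guess 'member' for every sample whose loss falls below the threshold."""
    return [loss < threshold for loss in losses]

def attack_accuracy(member_losses, nonmember_losses, threshold):
    """Fraction of correct member / non-member guesses over both sets."""
    member_guesses = loss_threshold_attack(member_losses, threshold)
    nonmember_guesses = loss_threshold_attack(nonmember_losses, threshold)
    correct = sum(member_guesses) + sum(not g for g in nonmember_guesses)
    return correct / (len(member_losses) + len(nonmember_losses))
```

When training samples have systematically lower loss than unseen samples (a memorization versus generalization gap), this attack does better than chance; when the two loss distributions match, its accuracy on balanced sets falls to 0.5.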

The Over-Certainty Phenomenon in Modern UDA Algorithms

Apr 24, 2024

Abstract: When neural networks are confronted with unfamiliar data that deviate from their training set, this signifies a domain shift. While these networks output predictions on their inputs, they typically fail to account for their level of familiarity with these novel observations. This challenge becomes even more pronounced in resource-constrained settings, such as embedded systems or edge devices. To address such challenges, we aim to recalibrate a neural network's decision boundaries in relation to its cognizance of the data it observes, introducing an approach we coin as certainty distillation. While prevailing works navigate unsupervised domain adaptation (UDA) with the goal of curtailing model entropy, they unintentionally birth models that grapple with calibration inaccuracies - a dilemma we term the over-certainty phenomenon. In this paper, we probe the drawbacks of this traditional learning model. As a solution to the issue, we propose a UDA algorithm that not only augments accuracy but also assures model calibration, all while maintaining suitability for environments with limited computational resources.
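Calibration problems of the kind described above are often measured with the expected calibration error (ECE). The sketch below is a generic ECE with equal-width bins, not the paper's evaluation code; the bin count and the half-open binning convention are assumptions.

```python
def expected_calibration_error(confidences, correct, num_bins=10):
    """Weighted average gap between mean confidence and accuracy per bin."""
    n = len(confidences)
    bins = [[] for _ in range(num_bins)]
    for conf, ok in zip(confidences, correct):
        # Half-open bins [k/num_bins, (k+1)/num_bins); confidence 1.0 goes in the last bin.
        idx = min(int(conf * num_bins), num_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for samples in bins:
        if not samples:
            continue
        avg_conf = sum(c for c, _ in samples) / len(samples)
        accuracy = sum(1 for _, ok in samples if ok) / len(samples)
        ece += (len(samples) / n) * abs(avg_conf - accuracy)
    return ece
```

An over-certain model shows up as high confidence paired with low accuracy: four predictions at confidence 0.95 with only two correct give an ECE of 0.45.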
