Joshua Hoegerman

Combining and Decoupling Rigid and Soft Grippers to Enhance Robotic Manipulation

Apr 21, 2024

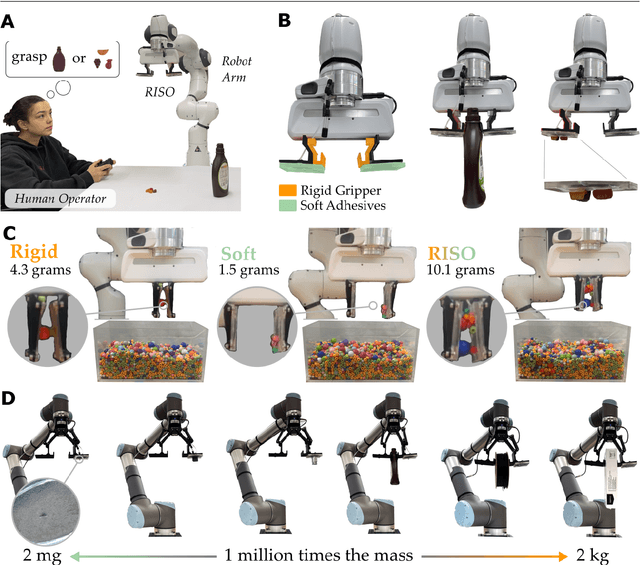

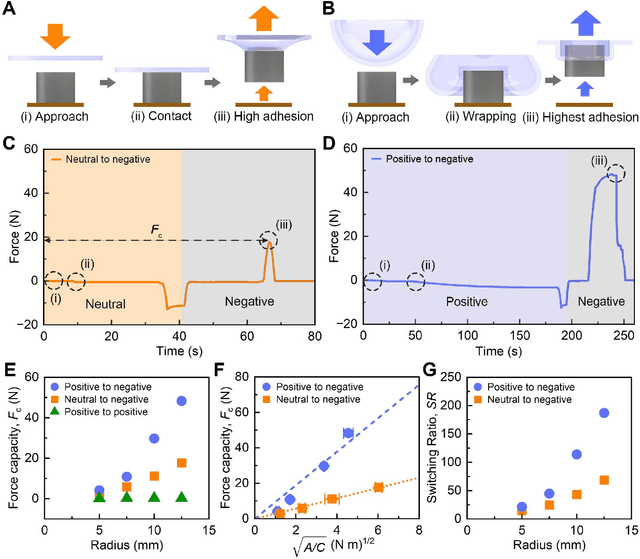

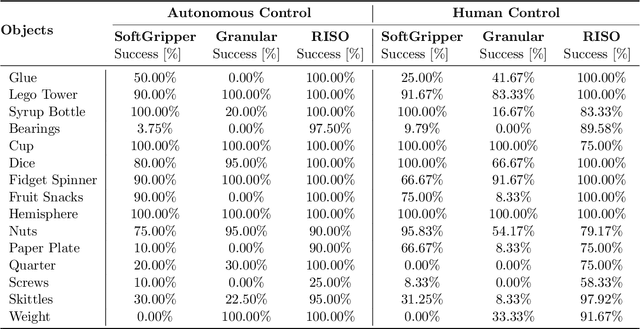

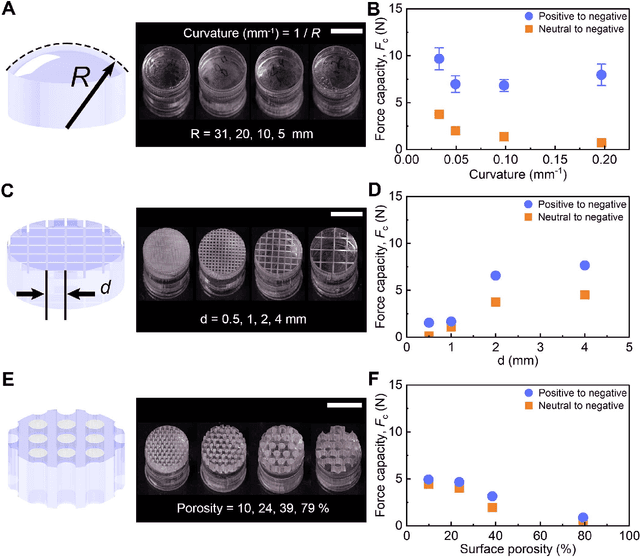

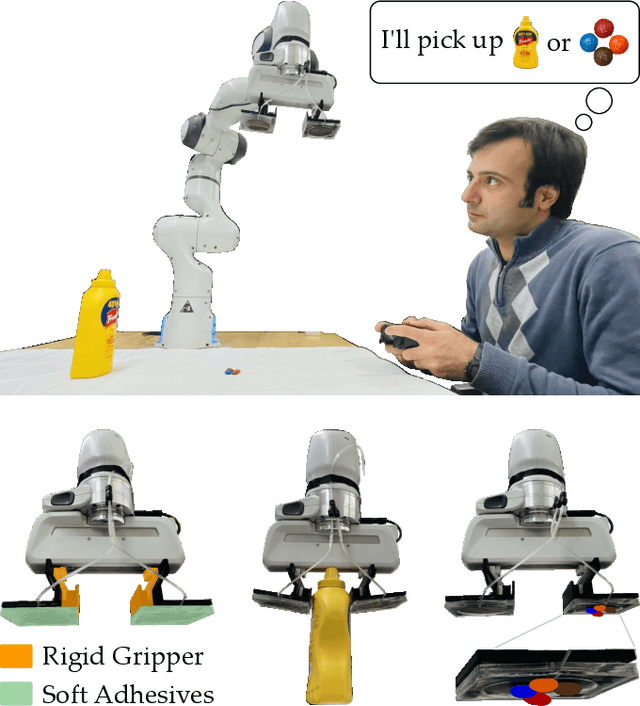

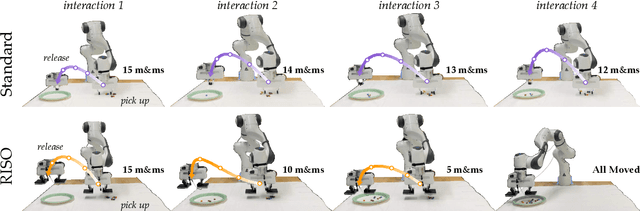

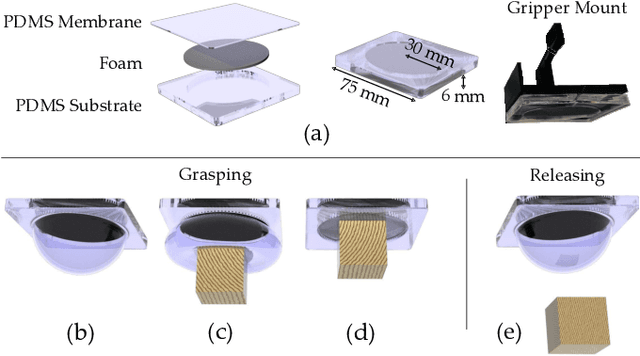

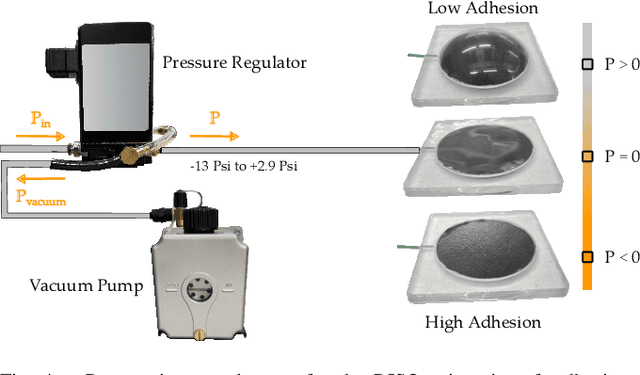

Abstract: For robot arms to perform everyday tasks in unstructured environments, these robots must be able to manipulate a diverse range of objects. Today's robots often grasp objects with either soft grippers or rigid end-effectors. However, purely rigid or purely soft grippers have fundamental limitations: soft grippers struggle with irregular, heavy objects, while rigid grippers often cannot grasp small, numerous items. In this paper we therefore introduce RISOs, a mechanics and controls approach for unifying traditional RIgid end-effectors with a novel class of SOft adhesives. When grasping an object, RISOs can use the rigid end-effector (pinching the item between non-deformable fingers), the soft materials (attaching and releasing items with switchable adhesives), or both. This enhances manipulation capabilities by combining and decoupling rigid and soft mechanisms. With RISOs robots can perform grasps along a spectrum from fully rigid, to fully soft, to rigid-soft, enabling real-time object manipulation across a million-fold range in weight (from 2 mg to 2 kg). To develop RISOs we first model and characterize the soft switchable adhesives. We then mount sheets of these soft adhesives on the surfaces of rigid end-effectors, and develop control strategies that make it easier for robot arms and human operators to utilize RISOs. The resulting RISO grippers were able to pick up, carry, and release a larger set of objects than existing grippers, and participants also preferred using RISO. Overall, our experimental and user study results suggest that RISOs provide an exceptional gripper range in both capacity and object diversity. See videos of our user studies here: https://youtu.be/du085R0gPFI

Aligning Learning with Communication in Shared Autonomy

Mar 18, 2024

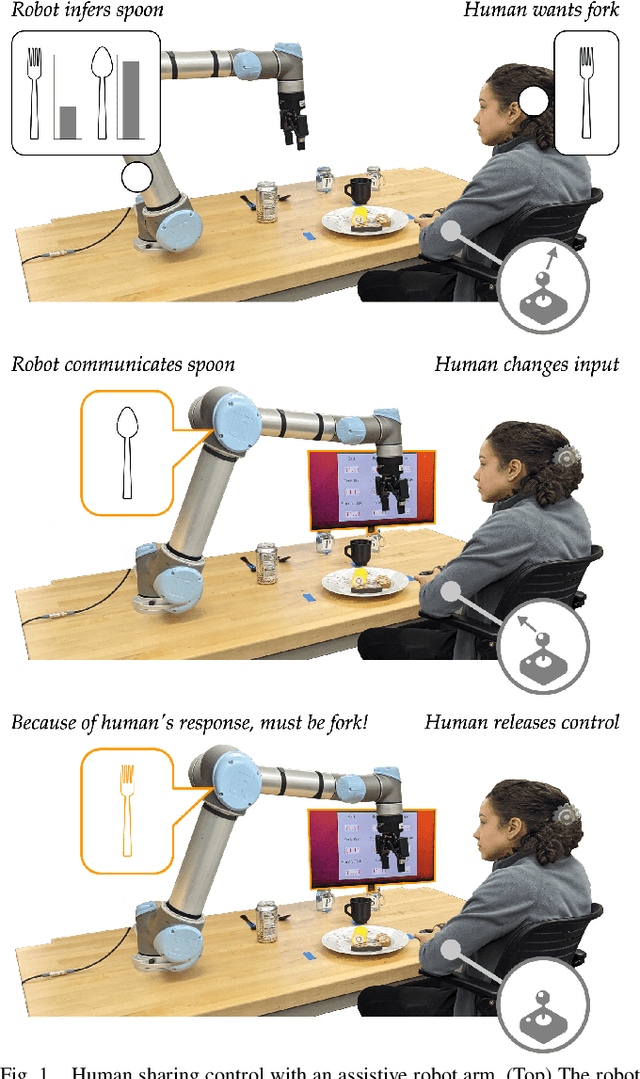

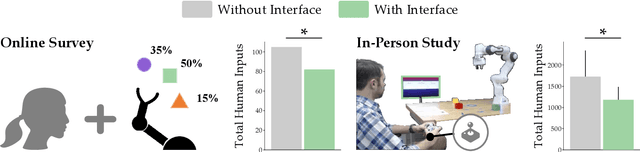

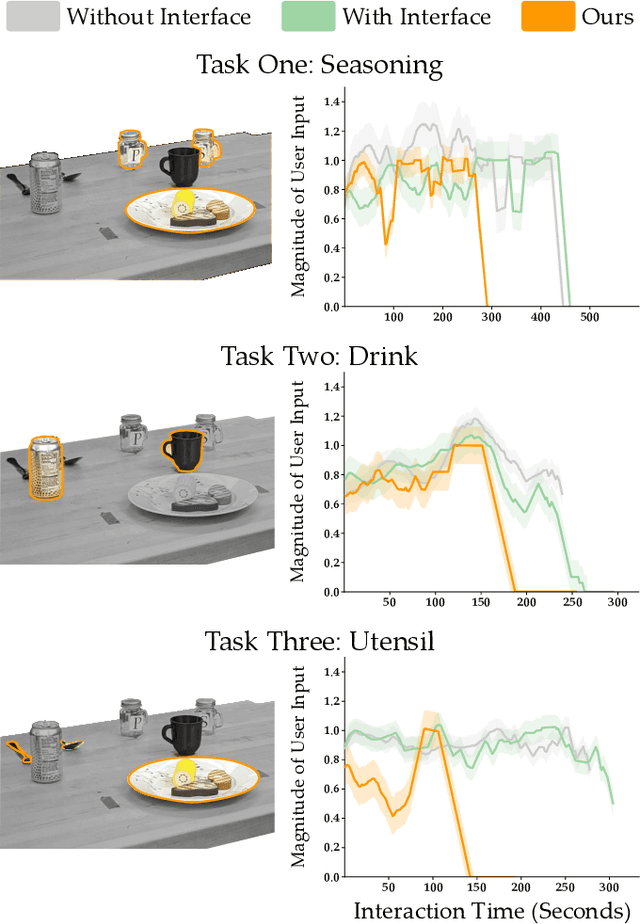

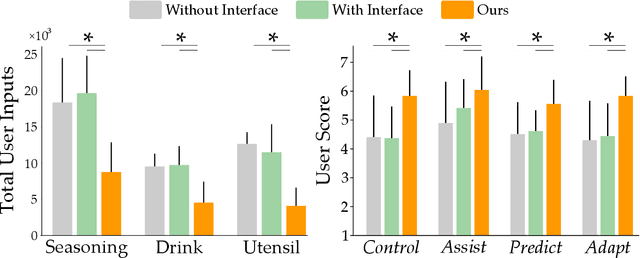

Abstract: Assistive robot arms can help humans by partially automating their desired tasks. Consider an adult with motor impairments controlling an assistive robot arm to eat dinner. The robot can reduce the number of human inputs -- and how precise those inputs need to be -- by recognizing what the human wants (e.g., a fork) and assisting for that task (e.g., moving towards the fork). Prior research has largely focused on learning the human's task and providing meaningful assistance. But as the robot learns and assists, we also need to ensure that the human understands the robot's intent (e.g., does the human know the robot is reaching for a fork?). In this paper, we study the effects of communicating learned assistance from the robot back to the human operator. We do not focus on the specific interfaces used for communication. Instead, we develop experimental and theoretical models of a) how communication changes the way humans interact with assistive robot arms, and b) how robots can harness these changes to better align with the human's intent. We first conduct online and in-person user studies where participants operate robots that provide partial assistance, and we measure how the human's inputs change with and without communication. With communication, we find that humans are more likely to intervene when the robot incorrectly predicts their intent, and more likely to release control when the robot correctly understands their task. We then use these findings to modify an established robot learning algorithm so that the robot can correctly interpret the human's inputs when communication is present. Our results from a second in-person user study suggest that this combination of communication and learning outperforms assistive systems that isolate either learning or communication.
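
As a rough illustration of the kind of intent inference described in this abstract, the sketch below updates a belief over candidate goals from one human input and then blends the human's input with motion toward the most likely goal. It is not the paper's algorithm: the Boltzmann-style observation model, the `beta` parameter, the 2D workspace, and the function names `update_belief` and `blend` are all assumptions made for illustration.

```python
import math

def update_belief(belief, position, goals, human_input, beta=5.0):
    """One Bayesian update of the belief over goals (assumed Boltzmann model)."""
    norm_in = math.hypot(human_input[0], human_input[1])
    posterior = []
    for prior, goal in zip(belief, goals):
        dx, dy = goal[0] - position[0], goal[1] - position[1]
        dist = math.hypot(dx, dy)
        if dist == 0.0 or norm_in == 0.0:
            align = 0.0  # no directional evidence for this goal
        else:
            # Cosine between the human's input and the direction to the goal.
            align = (human_input[0] * dx + human_input[1] * dy) / (dist * norm_in)
        posterior.append(prior * math.exp(beta * align))
    total = sum(posterior)
    return [p / total for p in posterior]

def blend(belief, position, goals, human_input):
    """Mix the human input with a unit step toward the most likely goal."""
    best = max(range(len(goals)), key=lambda i: belief[i])
    alpha = belief[best]  # assumed: assistance weight equals robot confidence
    dx, dy = goals[best][0] - position[0], goals[best][1] - position[1]
    dist = math.hypot(dx, dy)
    robot = (dx / dist, dy / dist) if dist > 0.0 else (0.0, 0.0)
    return tuple((1.0 - alpha) * h + alpha * r for h, r in zip(human_input, robot))

# Hypothetical scene: a fork at (1, 0) and a cup at (0, 1); the human pushes right.
goals = [(1.0, 0.0), (0.0, 1.0)]
belief = update_belief([0.5, 0.5], (0.0, 0.0), goals, (1.0, 0.0))
action = blend(belief, (0.0, 0.0), goals, (1.0, 0.0))
```

In this toy setup the belief shifts strongly toward the first goal after a single aligned input, so the blended action is dominated by the robot's motion toward it. How communication should change the observation model is the subject of the paper and is not modeled here.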

Reward Learning with Intractable Normalizing Functions

May 16, 2023

Abstract: Robots can learn to imitate humans by inferring what the human is optimizing for. One common framework for this is Bayesian reward learning, where the robot treats the human's demonstrations and corrections as observations of their underlying reward function. Unfortunately, this inference is doubly-intractable: the robot must reason over all the trajectories the person could have provided and all the rewards the person could have in mind. Prior work uses existing robotic tools to approximate this normalizer. In this paper, we group previous approaches into three fundamental classes and analyze the theoretical pros and cons of each class. We then leverage recent research from the statistics community to introduce Double MH reward learning, a Monte Carlo method for asymptotically learning the human's reward in continuous spaces. We extend Double MH to conditionally independent settings (where each human correction is viewed as completely separate) and conditionally dependent environments (where the human's current correction may build on previous inputs). Across simulations and user studies, our proposed approach infers the human's reward parameters more accurately than the alternate approximations when learning from either demonstrations or corrections. See videos here: https://youtu.be/EkmT3o5K5ko
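
To make the doubly-intractable structure concrete, here is a minimal sketch of a generic double Metropolis-Hastings sampler on a toy problem. It follows the general recipe from the statistics literature (an outer chain over reward parameters, plus an inner chain that draws auxiliary data so the normalizers cancel in the acceptance ratio) and is not the paper's implementation. The scalar "trajectory" `xi`, the quadratic reward, the bounds `[-1, 1]`, the uniform prior, and the constant `BETA` are all assumptions chosen for illustration.

```python
import math
import random

BETA = 20.0  # assumed rationality coefficient

def log_f(xi, theta):
    """Unnormalized log-likelihood BETA * reward(xi, theta) on xi in [-1, 1]."""
    if not -1.0 <= xi <= 1.0:
        return -math.inf
    return -BETA * (xi - theta) ** 2

def inner_mh(xi0, theta, steps, rng):
    """Inner chain: approximately sample a trajectory from f(. | theta)."""
    xi = xi0
    for _ in range(steps):
        cand = xi + rng.gauss(0.0, 0.3)
        if math.log(1.0 - rng.random()) < log_f(cand, theta) - log_f(xi, theta):
            xi = cand
    return xi

def double_mh(demos, iters, inner_steps, seed=0):
    """Outer chain over theta with a uniform prior on [-1, 1]."""
    rng = random.Random(seed)
    theta, samples = 0.0, []
    for _ in range(iters):
        cand = theta + rng.gauss(0.0, 0.2)  # symmetric proposal
        if -1.0 <= cand <= 1.0:  # outside the prior support: reject
            log_r = 0.0
            for x in demos:
                # Auxiliary sample, started at the observed demonstration.
                y = inner_mh(x, cand, inner_steps, rng)
                # Normalizers Z(theta) and Z(cand) cancel in this ratio.
                log_r += (log_f(y, theta) + log_f(x, cand)
                          - log_f(y, cand) - log_f(x, theta))
            if math.log(1.0 - rng.random()) < log_r:
                theta = cand
        samples.append(theta)
    return samples

# Hypothetical demonstrations clustered around a true parameter of 0.5.
samples = double_mh([0.45, 0.5, 0.55, 0.5, 0.48], iters=3000, inner_steps=10)
estimate = sum(samples[500:]) / len(samples[500:])
```

The key design point is that the sampler never evaluates the normalizer: each outer step only needs unnormalized likelihoods of the observed and auxiliary trajectories. The inner chain is run for a fixed, short number of steps, so the result is an approximation whose quality depends on `inner_steps`.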

RISO: Combining Rigid Grippers with Soft Switchable Adhesives

Oct 27, 2022

Abstract: Robot arms that assist humans should be able to pick up, move, and release everyday objects. Today's assistive robot arms use rigid grippers to pinch items between fingers; while these rigid grippers are well suited for large and heavy objects, they often struggle to grasp small, numerous, or delicate items (such as foods). Soft grippers cover the opposite end of the spectrum; these grippers use adhesives or change shape to wrap around small and irregular items, but cannot exert the large forces needed to manipulate heavy objects. In this paper we introduce RIgid-SOft (RISO) grippers that combine switchable soft adhesives with standard rigid mechanisms to enable a diverse range of robotic grasping. We develop RISO grippers by leveraging a novel class of soft materials that change adhesion force in real-time through pneumatically controlled shape and rigidity tuning. By mounting these soft adhesives on the bottom of rigid fingers, we create a gripper that can interact with objects using either purely rigid grasps (pinching the object) or purely soft grasps (adhering to the object). This increased capability requires additional decision making, and we therefore formulate a shared control approach that partially automates the motion of the robot arm. In practice, this controller aligns the RISO gripper while inferring which object the human wants to grasp and how the human wants to grasp that item. Our user study demonstrates that RISO grippers can pick up, move, and release household items from existing datasets, and that the system performs grasps more successfully and efficiently when sharing control between the human and robot. See videos here: https://youtu.be/5uLUkBYcnwg
