Jos van der Westhuizen

Learning a Generative Model for Validity in Complex Discrete Structures

Nov 02, 2018

Abstract: Deep generative models have been successfully used to learn representations for high-dimensional discrete spaces by representing discrete objects as sequences and employing powerful sequence-based deep models. Unfortunately, these sequence-based models often produce invalid sequences, that is, sequences which do not represent any underlying discrete structure, and such sequences hinder the utility of these models. As a step towards solving this problem, we propose to learn a deep recurrent validator model, which can estimate whether a partial sequence can function as the beginning of a full, valid sequence. This validator provides insight as to how individual sequence elements influence the validity of the overall sequence, and can be used to constrain sequence-based models to generate valid sequences, and thus faithfully model discrete objects. Our approach is inspired by reinforcement learning, where an oracle which can evaluate the validity of complete sequences provides a sparse reward signal. We demonstrate its effectiveness as a generative model of Python 3 source code for mathematical expressions, and in improving the ability of a variational autoencoder trained on SMILES strings to decode valid molecular structures.
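To make the idea of prefix validity concrete, here is a minimal sketch on a toy language of balanced parentheses. It is not the learned recurrent validator from the paper: `can_complete` is a hand-written rule standing in for the validator, and the names `VOCAB`, `can_complete`, `allowed_next`, and `max_len` are hypothetical, introduced only for illustration. The sketch shows how a prefix-level validity check can be used to mask the next-token choices of a sequence model.

```python
from typing import List

# Toy vocabulary (assumption): the only tokens are "(" and ")".
VOCAB = ["(", ")"]


def can_complete(prefix: str, max_len: int) -> bool:
    """Return True if `prefix` can begin a balanced string of length <= max_len.

    Hand-written stand-in for a learned validator: a prefix is viable when
    it never closes more brackets than it has opened, and there is enough
    room left to close every bracket that is still open.
    """
    depth = 0
    for ch in prefix:
        depth += 1 if ch == "(" else -1
        if depth < 0:
            return False  # closed a bracket that was never opened
    return len(prefix) + depth <= max_len


def allowed_next(prefix: str, max_len: int) -> List[str]:
    """Tokens that keep the prefix completable; usable as a sampling mask."""
    return [tok for tok in VOCAB if can_complete(prefix + tok, max_len)]
```

A generator constrained this way would sample only from `allowed_next(prefix, max_len)` at each step. In the setting described in the abstract, the hand-written rule would be replaced by the validator's estimate for each candidate extension.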

The unreasonable effectiveness of the forget gate

Sep 13, 2018

Abstract: Given the success of the gated recurrent unit, a natural question is whether all the gates of the long short-term memory (LSTM) network are necessary. Previous research has shown that the forget gate is one of the most important gates in the LSTM. Here we show that a forget-gate-only version of the LSTM with chrono-initialized biases not only provides computational savings but also outperforms the standard LSTM on multiple benchmark datasets and competes with some of the best contemporary models. Our proposed network, the JANET, achieves accuracies of 99% and 92.5% on the MNIST and pMNIST datasets, outperforming the standard LSTM which yields accuracies of 98.5% and 91%.
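A minimal sketch of what a forget-gate-only recurrent step could look like, in plain Python. The abstract does not give the update equations, so the coupled form below (the forget gate `f` weights the old state and `1 - f` weights the candidate) is an assumption and may differ from the paper's exact formulation. The chrono initialization follows the commonly cited scheme of drawing the forget bias as the log of a uniform sample on `[1, t_max - 1]`; `init_params`, `forget_gate_step`, and all parameter names are hypothetical.

```python
import math
import random
from typing import Dict, List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, x, U, h, b) -> List[float]:
    """Compute W x + U h + b for list-of-lists matrices."""
    return [
        sum(w * xi for w, xi in zip(W[i], x))
        + sum(u * hj for u, hj in zip(U[i], h))
        + b[i]
        for i in range(len(b))
    ]


def init_params(n_in: int, n_hidden: int, t_max: int, rng: random.Random) -> Dict:
    """Small random weights; forget bias chrono-initialized (assumed scheme)."""
    def mat(rows: int, cols: int):
        return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {
        "Wf": mat(n_hidden, n_in), "Uf": mat(n_hidden, n_hidden),
        # Chrono init: b_f = log(u), u ~ Uniform[1, t_max - 1]; needs t_max >= 2.
        "bf": [math.log(rng.uniform(1.0, t_max - 1.0)) for _ in range(n_hidden)],
        "Wc": mat(n_hidden, n_in), "Uc": mat(n_hidden, n_hidden),
        "bc": [0.0] * n_hidden,
    }


def forget_gate_step(x: List[float], h: List[float], p: Dict) -> List[float]:
    """One recurrent step using a single (forget) gate."""
    f = [sigmoid(s) for s in affine(p["Wf"], x, p["Uf"], h, p["bf"])]
    cand = [math.tanh(s) for s in affine(p["Wc"], x, p["Uc"], h, p["bc"])]
    # Keep a fraction f of the old state and fill the rest with the candidate.
    return [fi * hi + (1.0 - fi) * ci for fi, hi, ci in zip(f, h, cand)]
```

For example, `init_params(n_in=1, n_hidden=4, t_max=784, rng=random.Random(0))` would set up a cell for pixel-by-pixel MNIST-length sequences, where `t_max` is the assumed longest dependency range. Large positive forget biases make the gate start close to 1, so the state is retained over long horizons early in training.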

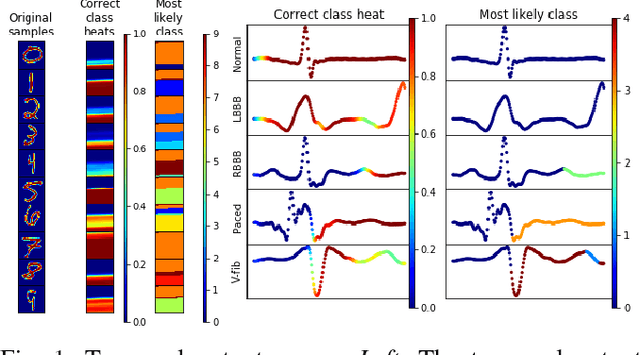

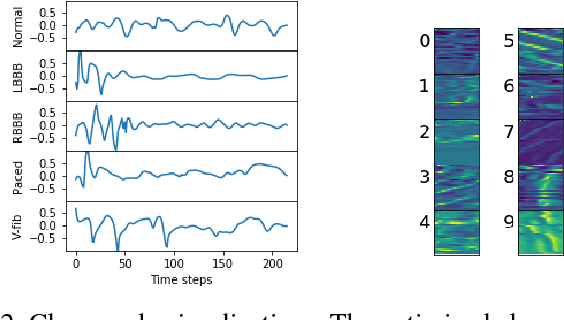

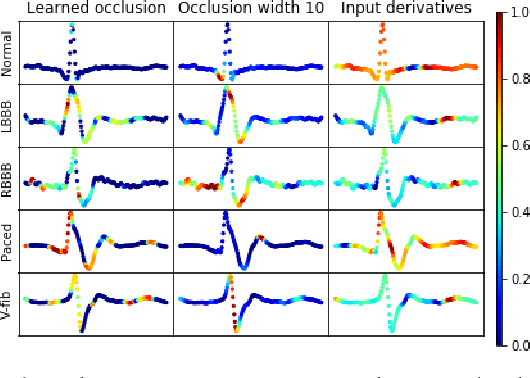

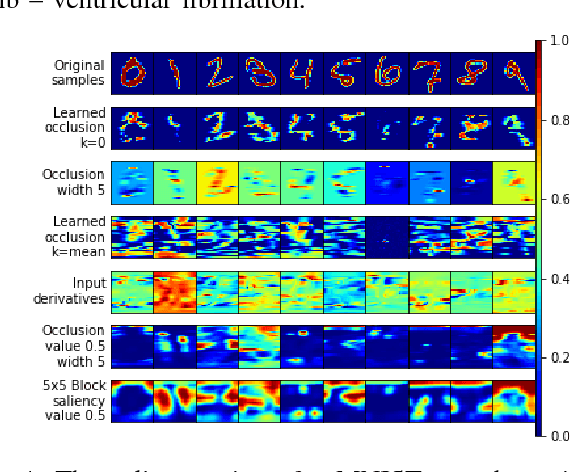

Techniques for visualizing LSTMs applied to electrocardiograms

Jun 15, 2018

Abstract: This paper explores four different visualization techniques for long short-term memory (LSTM) networks applied to continuous-valued time series. On the datasets analysed, we find that the best visualization technique is to learn an input deletion mask that optimally reduces the true class score. With a specific focus on single-lead electrocardiograms from the MIT-BIH arrhythmia dataset, we show that salient input features for the LSTM classifier align well with medical theory.

Actively Learning what makes a Discrete Sequence Valid

Aug 15, 2017

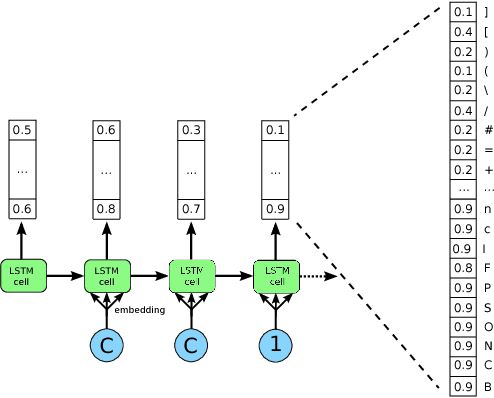

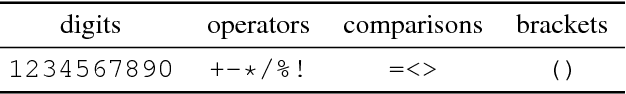

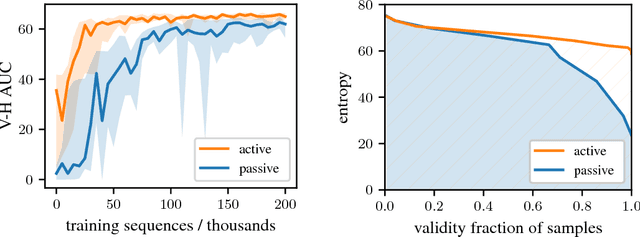

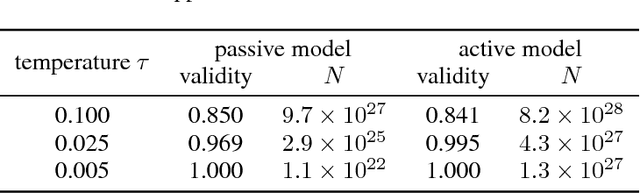

Abstract: Deep learning techniques have been hugely successful for traditional supervised and unsupervised machine learning problems. In large part, these techniques solve continuous optimization problems. Recently, however, discrete generative deep learning models have been successfully used to efficiently search high-dimensional discrete spaces. These methods work by representing discrete objects as sequences, for which powerful sequence-based deep models can be employed. Unfortunately, these techniques are significantly hindered by the fact that these generative models often produce invalid sequences. As a step towards solving this problem, we propose to learn a deep recurrent validator model. Given a partial sequence, our model learns the probability of that sequence occurring as the beginning of a full valid sequence. The model thus identifies valid versus invalid sequences and, crucially, also provides insight into how individual sequence elements influence the validity of discrete objects. To learn this model, we propose an approach inspired by seminal work in Bayesian active learning. On a synthetic dataset, we demonstrate the ability of our model to distinguish valid and invalid sequences. We believe this is a key step toward learning generative models that faithfully produce valid discrete objects.

Bayesian LSTMs in medicine

Jun 05, 2017

Abstract: The medical field stands to see significant benefits from the recent advances in deep learning. Knowing the uncertainty in the decision made by any machine learning algorithm is of utmost importance for medical practitioners. This study demonstrates the utility of using Bayesian LSTMs for classification of medical time series. Four medical time series datasets are used to show the accuracy improvement Bayesian LSTMs provide over standard LSTMs. Moreover, we show cherry-picked examples of confident and uncertain classifications of the medical time series. With simple modifications of the common practice for deep learning, significant improvements can be made for the medical practitioner and patient.
