Joaquim Comas

CAST-Phys: Contactless Affective States Through Physiological signals Database

Jul 08, 2025

Abstract: In recent years, affective computing and its applications have become a fast-growing research topic. Despite significant advancements, the lack of affective multi-modal datasets remains a major bottleneck in developing accurate emotion recognition systems. Furthermore, the use of contact-based devices during emotion elicitation often unintentionally influences the emotional experience, reducing or altering the genuine spontaneous emotional response. This limitation highlights the need for methods capable of extracting affective cues from multiple modalities without physical contact, such as remote physiological emotion recognition. To address this, we present the Contactless Affective States Through Physiological Signals Database (CAST-Phys), a novel high-quality dataset explicitly designed for multi-modal remote physiological emotion recognition using facial and physiological cues. The dataset includes diverse physiological signals, such as photoplethysmography (PPG), electrodermal activity (EDA), and respiration rate (RR), alongside high-resolution uncompressed facial video recordings, enabling the potential for remote signal recovery. Our analysis highlights the crucial role of physiological signals in realistic scenarios where facial expressions alone may not provide sufficient emotional information. Furthermore, we demonstrate the potential of remote multi-modal emotion recognition by evaluating the impact of individual and fused modalities, showcasing its effectiveness in advancing contactless emotion recognition technologies.

PhysFlow: Skin tone transfer for remote heart rate estimation through conditional normalizing flows

Jul 31, 2024

Abstract: In recent years, deep learning methods have shown impressive results for camera-based remote physiological signal estimation, clearly surpassing traditional methods. However, the performance and generalization ability of Deep Neural Networks depend heavily on rich training data that truly represents the different factors of variation encountered in real applications. Unfortunately, many current remote photoplethysmography (rPPG) datasets lack diversity, particularly in darker skin tones, leading to biased performance of existing rPPG approaches. To mitigate this bias, we introduce PhysFlow, a novel method for augmenting skin diversity in remote heart rate estimation using conditional normalizing flows. PhysFlow adopts end-to-end training optimization, enabling simultaneous training of supervised rPPG approaches on both original and generated data. Additionally, we condition our model using CIELAB color space skin features directly extracted from the facial videos without the need for skin-tone labels. We validate PhysFlow on publicly available datasets, UCLA-rPPG and MMPD, demonstrating reduced heart rate error, particularly in dark skin tones. Furthermore, we demonstrate its versatility and adaptability across different data-driven rPPG methods.
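
The abstract mentions conditioning on CIELAB skin features taken directly from the video. As a rough illustration only, the sketch below converts an average skin color from 8-bit sRGB to CIELAB using the standard D65 formulas. The function names `srgb_to_lab` and `mean_skin_lab`, and the idea of averaging a list of skin pixels, are assumptions made for this example and are not taken from the paper.

```python
def srgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB color to CIELAB (D65 reference white)."""
    def to_linear(c):
        # Undo the sRGB transfer curve.
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)

    # Linear RGB -> CIE XYZ (sRGB primaries, D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        # Piecewise cube-root function from the CIELAB definition.
        delta = 6.0 / 29.0
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta ** 2) + 4.0 / 29.0

    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 white point
    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)


def mean_skin_lab(pixels):
    """Hypothetical helper: average (r, g, b) skin pixels, then convert."""
    n = len(pixels)
    r = sum(p[0] for p in pixels) / n
    g = sum(p[1] for p in pixels) / n
    b = sum(p[2] for p in pixels) / n
    return srgb_to_lab(r, g, b)
```

In such a sketch, the lightness component L* would be lower for darker skin regions, which is one way a label-free skin descriptor could be obtained. How PhysFlow actually builds its conditioning features is not specified here.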

Deep Pulse-Signal Magnification for remote Heart Rate Estimation in Compressed Videos

May 04, 2024

Abstract: Recent advancements in remote heart rate measurement (rPPG), motivated by data-driven approaches, have significantly improved accuracy. However, certain challenges, such as video compression, still remain: recovering the rPPG signal from highly compressed videos is particularly complex. Although several studies have highlighted the difficulties and impact of video compression on rPPG estimation, effective solutions remain limited. In this paper, we present a novel approach to address the impact of video compression on rPPG estimation, which leverages a pulse-signal magnification transformation to adapt compressed videos to an uncompressed data domain in which the rPPG signal is magnified. We validate the effectiveness of our model by exhaustive evaluations on two publicly available datasets, UCLA-rPPG and UBFC-rPPG, employing both intra- and cross-database performance at several compression rates. Additionally, we assess the robustness of our approach on two additional highly compressed and widely used datasets, MAHNOB-HCI and COHFACE, which reveal outstanding heart rate estimation results.

Deep adaptative spectral zoom for improved remote heart rate estimation

Mar 11, 2024

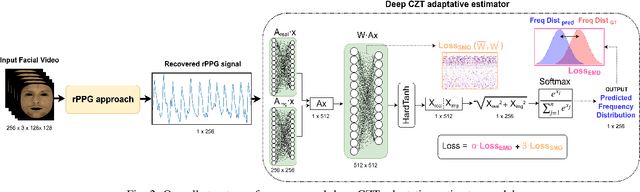

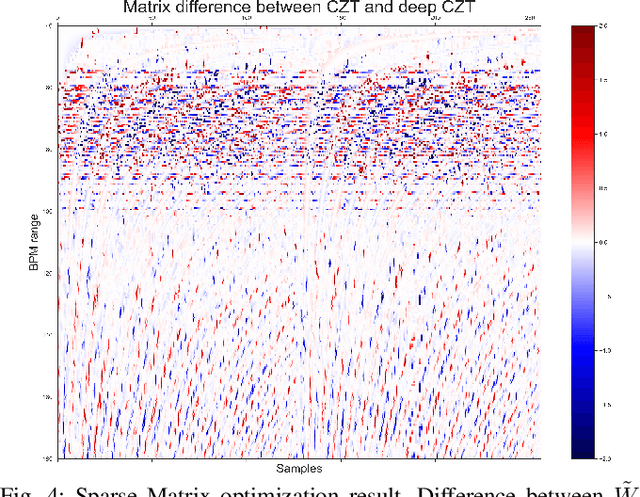

Abstract: Recent advances in remote heart rate measurement, motivated by data-driven approaches, have notably enhanced accuracy. However, these improvements primarily focus on recovering the rPPG signal, overlooking the implicit challenges of estimating the heart rate (HR) from the derived signal. While many methods employ the Fast Fourier Transform (FFT) for HR estimation, the performance of the FFT is inherently affected by a limited frequency resolution. In contrast, the Chirp-Z Transform (CZT), a generalization of the FFT, can refine the spectrum to the narrow-band range of interest for heart rate, providing improved frequency resolution and, consequently, more accurate estimation. This paper presents the advantages of employing the CZT for remote HR estimation and introduces a novel data-driven adaptive CZT estimator. The objective of our proposed model is to tailor the CZT to match the characteristics of each specific dataset sensor, facilitating a more accurate estimation of HR from the rPPG signal without compromising generalization across diverse datasets. This is achieved through a Sparse Matrix Optimization (SMO). We validate the effectiveness of our model through exhaustive evaluations on three publicly available datasets, UCLA-rPPG, PURE, and UBFC-rPPG, employing both intra- and cross-database performance metrics. The results reveal outstanding heart rate estimation capabilities, establishing the proposed approach as a robust and versatile estimator for any rPPG method.
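
To make the resolution argument concrete, here is a minimal sketch of spectral zoom on a narrow heart rate band. It evaluates the discrete-time Fourier sum directly on a dense frequency grid, which yields the same values a CZT along a unit-circle arc would produce, but without the fast algorithm. It is not the adaptive, learned estimator the abstract describes. The function names, the 0.7 to 3.0 Hz band, and the bin counts are assumptions chosen for illustration.

```python
import cmath
import math


def band_spectrum(signal, fs, f_lo, f_hi, n_bins):
    """Magnitude spectrum on n_bins evenly spaced frequencies in [f_lo, f_hi].

    Direct evaluation of sum_n x[n] * exp(-2j*pi*f*n/fs); a CZT on a
    unit-circle arc computes the same quantity more efficiently.
    """
    mean = sum(signal) / len(signal)
    centered = [s - mean for s in signal]  # remove the DC offset
    step = (f_hi - f_lo) / (n_bins - 1)
    freqs = [f_lo + k * step for k in range(n_bins)]
    mags = []
    for f in freqs:
        w = -2j * math.pi * f / fs
        acc = sum(x * cmath.exp(w * n) for n, x in enumerate(centered))
        mags.append(abs(acc))
    return freqs, mags


def estimate_hr_bpm(signal, fs, f_lo=0.7, f_hi=3.0, n_bins=461):
    """Heart rate (beats per minute) at the spectral peak inside the band."""
    freqs, mags = band_spectrum(signal, fs, f_lo, f_hi, n_bins)
    peak = max(range(n_bins), key=mags.__getitem__)
    return 60.0 * freqs[peak]


# Synthetic pulse: 10 s at 30 fps, true rate 1.23 Hz (73.8 bpm).
fs = 30.0
pulse = [math.sin(2 * math.pi * 1.23 * n / fs) for n in range(300)]

fine_bpm = estimate_hr_bpm(pulse, fs)               # 0.005 Hz grid spacing
coarse_bpm = estimate_hr_bpm(pulse, fs, n_bins=24)  # 0.1 Hz spacing, like an FFT of this window
```

For a 300-sample window at 30 fps, an unpadded FFT places bins every 0.1 Hz, that is every 6 bpm, so the coarse estimate can only land on a multiple of 6 bpm. The dense grid narrows the spacing to 0.3 bpm over the same band.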

Efficient Remote Photoplethysmography with Temporal Derivative Modules and Time-Shift Invariant Loss

Mar 21, 2022

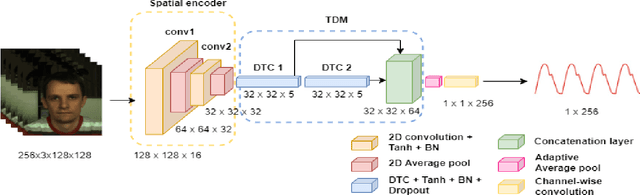

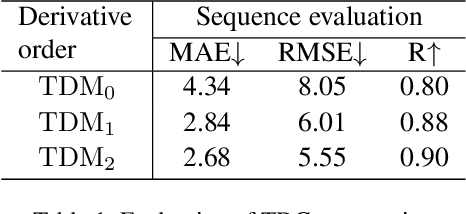

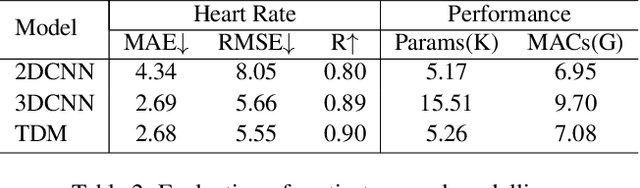

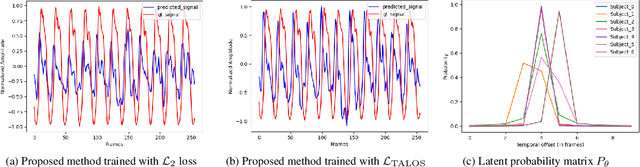

Abstract: We present a lightweight neural model for remote heart rate estimation focused on the efficient spatio-temporal learning of facial photoplethysmography (PPG) based on i) modelling of PPG dynamics by combinations of multiple convolutional derivatives, and ii) increased flexibility of the model to learn possible offsets between the video facial PPG and the ground truth. PPG dynamics are modelled by a Temporal Derivative Module (TDM) constructed by the incremental aggregation of multiple convolutional derivatives, emulating a Taylor series expansion up to the desired order. Robustness to ground truth offsets is handled by the introduction of TALOS (Temporal Adaptive LOcation Shift), a new temporal loss to train learning-based models. We verify the effectiveness of our model by reporting accuracy and efficiency metrics on the public PURE and UBFC-rPPG datasets. Compared to existing models, our approach shows competitive heart rate estimation accuracy with a much lower number of parameters and lower computational cost.
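
The idea of tolerating an unknown offset between the predicted PPG and the ground truth can be illustrated with a much simpler stand-in: compute the mean squared error at several candidate shifts and keep the smallest. This is only a sketch of shift tolerance in general. It is not the TALOS formulation, whose details are not given in this abstract, and the names `shifted_mse` and `min_shift_mse` are hypothetical.

```python
import math


def shifted_mse(pred, target, shift):
    """MSE between pred[n] and target[n + shift] over the overlapping samples."""
    if shift >= 0:
        p = pred[:len(pred) - shift]
        t = target[shift:]
    else:
        p = pred[-shift:]
        t = target[:len(target) + shift]
    return sum((a - b) ** 2 for a, b in zip(p, t)) / len(p)


def min_shift_mse(pred, target, max_shift):
    """Smallest MSE over integer shifts in [-max_shift, max_shift].

    Returns (loss, best_shift). An exhaustive search is used here for
    clarity; it is an assumption of this sketch, not the paper's method.
    """
    candidates = range(-max_shift, max_shift + 1)
    best = min(candidates, key=lambda k: shifted_mse(pred, target, k))
    return shifted_mse(pred, target, best), best


# Ground truth and a prediction that leads it by three frames.
fs = 30.0
target = [math.sin(2 * math.pi * 1.2 * n / fs) for n in range(150)]
pred = [math.sin(2 * math.pi * 1.2 * (n + 3) / fs) for n in range(150)]

plain_loss = shifted_mse(pred, target, 0)
loss, best_shift = min_shift_mse(pred, target, max_shift=5)
```

A plain MSE penalizes the prediction for the misalignment even though its waveform is correct, whereas the minimum over shifts does not. The search range should stay below half the pulse period, otherwise several shifts can align equally well.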

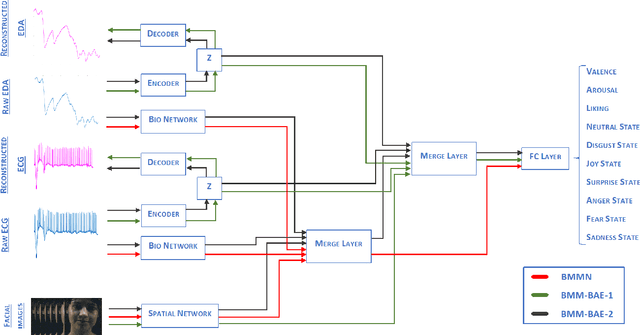

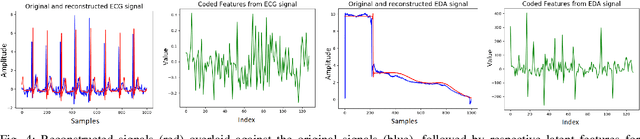

End-to-end facial and physiological model for Affective Computing and applications

Jan 20, 2020

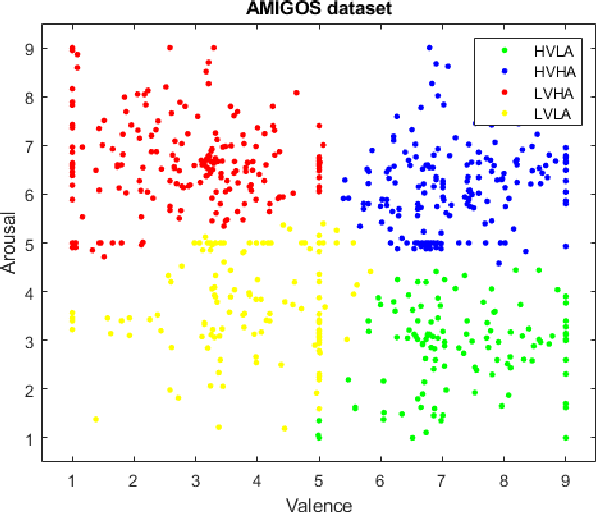

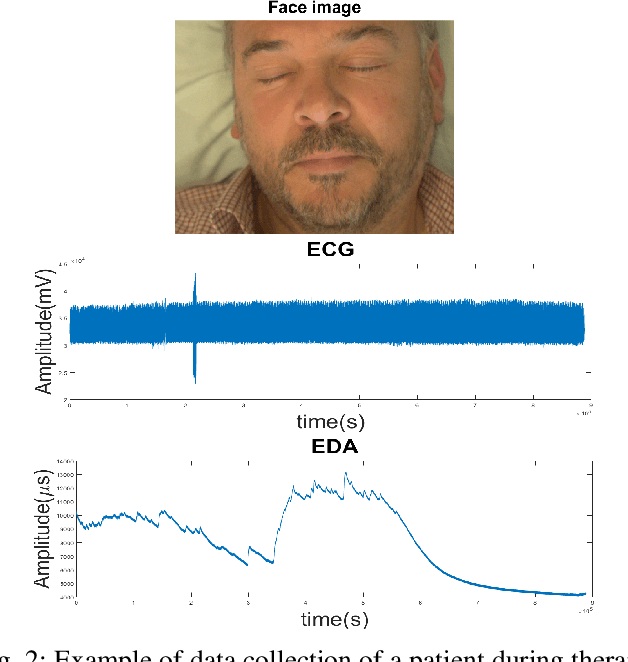

Abstract: In recent years, Affective Computing and its applications have become a fast-growing research topic. Furthermore, the rise of Deep Learning has introduced significant improvements in emotion recognition systems compared to classical methods. In this work, we propose a multi-modal emotion recognition model based on deep learning techniques using the combination of peripheral physiological signals and facial expressions. Moreover, we present an improvement to the proposed model by introducing latent features extracted from our internal Bio Auto-Encoder (BAE). Both models are trained and evaluated on the AMIGOS dataset, reporting valence, arousal, and emotion state classification. Finally, to demonstrate a possible medical application of affective computing using deep learning techniques, we applied the proposed method to the assessment of anxiety therapy. For this purpose, a reduced multi-modal database was collected by recording facial expressions and peripheral signals such as Electrocardiogram (ECG) and Galvanic Skin Response (GSR) of each patient. Valence and arousal were estimated with the proposed model from the beginning to the end of the therapy, successfully capturing the different emotional changes in the temporal domain.
