Efficient Remote Photoplethysmography with Temporal Derivative Modules and Time-Shift Invariant Loss

Mar 21, 2022

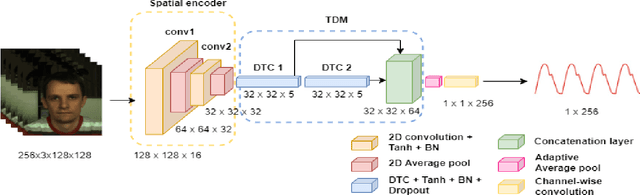

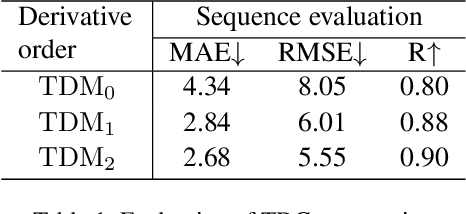

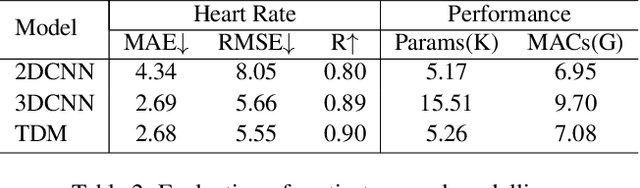

We present a lightweight neural model for remote heart rate estimation that focuses on efficient spatio-temporal learning of facial photoplethysmography (PPG). The model is based on i) modelling PPG dynamics through combinations of multiple convolutional derivatives, and ii) giving the model the flexibility to learn possible temporal offsets between the facial PPG extracted from video and the ground truth. PPG dynamics are modelled by a Temporal Derivative Module (TDM), built by incrementally aggregating multiple convolutional derivatives to emulate a Taylor series expansion up to the desired order. Robustness to ground-truth offsets is achieved through TALOS (Temporal Adaptive LOcation Shift), a new temporal loss for training learning-based models. We verify the effectiveness of our model by reporting accuracy and efficiency metrics on the public PURE and UBFC-rPPG datasets. Compared to existing models, our approach achieves competitive heart rate estimation accuracy with far fewer parameters and a lower computational cost.
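The two mechanisms named above can be illustrated with a small, dependency-free Python sketch. It is not the authors' implementation. The fixed kernel `[-1, 1]`, the 1/k! weights, the cropping rule, and every function name (`derivative`, `taylor_aggregate`, `shifted_mse`, `soft_shift_loss`) are assumptions made for illustration. A trainable TDM would use learnable convolution weights, whereas this sketch uses fixed finite differences. `soft_shift_loss` is a simplified shift-tolerant MSE (a softmax-weighted average over candidate integer shifts, driven by hypothetical per-subject logits), not the TALOS definition from the paper.

```python
from math import exp, factorial


def conv1d_valid(signal, kernel):
    """1-D 'valid' cross-correlation: no padding, output shrinks by len(kernel) - 1."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def derivative(signal):
    """Finite-difference temporal derivative: convolution with the fixed kernel [-1, 1].

    Assumption: a learned module would use trainable weights instead of [-1, 1].
    """
    return conv1d_valid(signal, [-1.0, 1.0])


def taylor_aggregate(signal, order):
    """Sum derivatives of orders 0..order, each weighted by 1/k! as in a Taylor series.

    Each order is computed from the previous one (incremental aggregation).
    All terms are cropped to the length of the highest-order (shortest) term.
    """
    terms = [list(signal)]
    for _ in range(order):
        terms.append(derivative(terms[-1]))
    n = len(terms[-1])
    return [sum(terms[k][i] / factorial(k) for k in range(order + 1))
            for i in range(n)]


def shifted_mse(pred, target, shift):
    """MSE between pred[i + shift] and target[i] over the overlapping samples."""
    if shift >= 0:
        p, t = pred[shift:], target[:len(target) - shift]
    else:
        p, t = pred[:len(pred) + shift], target[-shift:]
    return sum((x - y) ** 2 for x, y in zip(p, t)) / len(p)


def soft_shift_loss(pred, target, logits, max_shift):
    """Expected MSE over shifts -max_shift..max_shift, weighted by softmax(logits).

    Hypothetical: `logits` (one per candidate shift) stand in for learnable
    per-subject parameters; minimising this loss moves weight toward the
    shift that best aligns prediction and ground truth.
    """
    shifts = range(-max_shift, max_shift + 1)
    assert len(logits) == len(shifts), "need one logit per candidate shift"
    top = max(logits)  # subtract the max for a numerically stable softmax
    weights = [exp(z - top) for z in logits]
    total = sum(weights)
    return sum(w / total * shifted_mse(pred, target, s)
               for w, s in zip(weights, shifts))
```

With a step size of 1, the order-2 aggregate of a ramp reproduces the next sample: `taylor_aggregate([0.0, 1.0, 2.0, 3.0, 4.0], 2)` returns `[1.0, 2.0, 3.0]`, which is the Taylor-series intuition behind stacking derivatives. For the loss, when the logits put almost all weight on the shift that aligns prediction and target, the value approaches the MSE at that shift, so a fixed ground-truth offset no longer dominates the training signal.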
