End-to-end facial and physiological model for Affective Computing and applications

Paper and Code

Jan 20, 2020

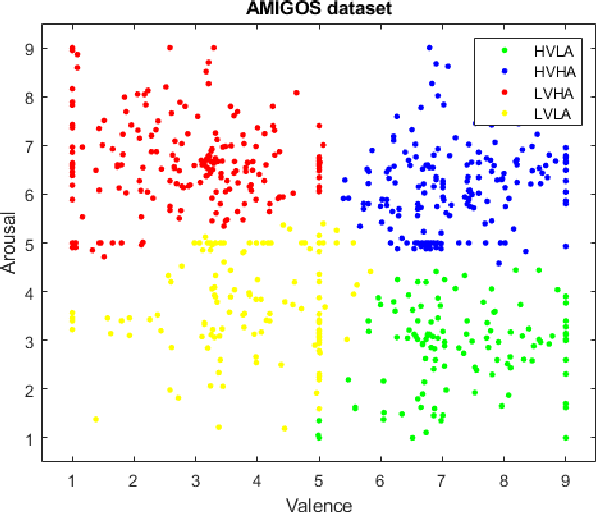

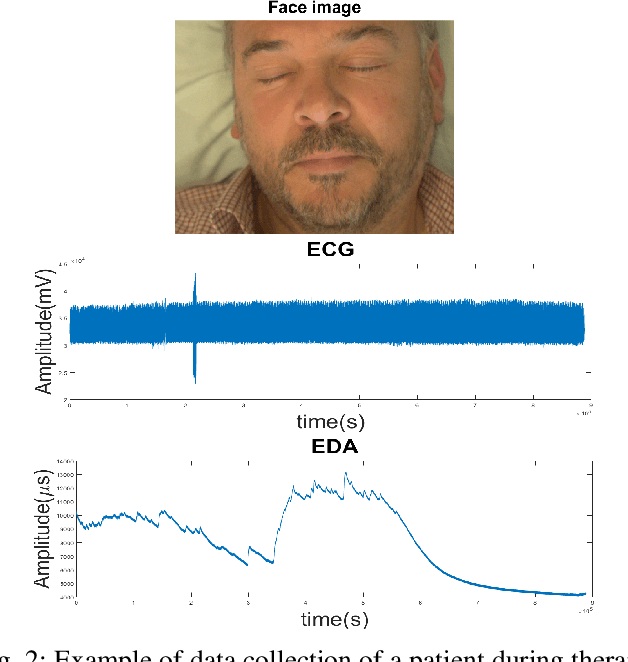

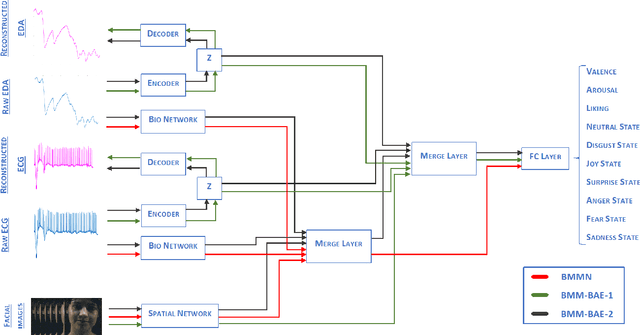

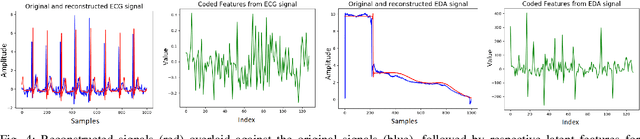

In recent years, Affective Computing and its applications have become a fast-growing research topic. Furthermore, the rise of Deep Learning has brought significant improvements to emotion recognition systems compared to classical methods. In this work, we propose a multi-modal emotion recognition model based on deep learning techniques that combines peripheral physiological signals and facial expressions. Moreover, we present an improvement to the proposed models by introducing latent features extracted from our internal Bio Auto-Encoder (BAE). Both models are trained and evaluated on the AMIGOS dataset, reporting valence, arousal, and emotion state classification. Finally, to demonstrate a possible medical application of affective computing using deep learning techniques, we applied the proposed method to the assessment of anxiety therapy. For this purpose, a reduced multi-modal database was collected by recording the facial expressions and peripheral signals, such as Electrocardiogram (ECG) and Galvanic Skin Response (GSR), of each patient. Valence and arousal were estimated with the proposed model from the beginning to the end of the therapy, and the estimates successfully tracked the patients' emotional changes over time.
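The abstract does not specify the architecture, so the following is only a minimal sketch of one common way to combine two modalities: latent features from a physiological-signal encoder are concatenated with facial features and passed to a small output head. Every name here (`encode_bio`, `fuse_and_predict`), the layer sizes, the random untrained weights, and the choice to squash outputs into [-1, 1] are illustrative assumptions, not details taken from the paper.

```python
import math
import random

def linear(x, weights, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def init_layer(n_in, n_out, rng):
    """Small random weights; a trained model would learn these instead."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out

def encode_bio(signal_window, layer):
    """Hypothetical stand-in for a BAE-style encoder: window -> latent vector."""
    return [math.tanh(v) for v in linear(signal_window, *layer)]

def fuse_and_predict(face_features, bio_latent, head):
    """Concatenate both modalities, then output valence and arousal.

    Squashing to [-1, 1] with tanh is an assumption made for this sketch.
    """
    fused = face_features + bio_latent  # list concatenation = feature fusion
    valence, arousal = (math.tanh(v) for v in linear(fused, *head))
    return valence, arousal

rng = random.Random(0)
FACE_DIM, WINDOW, LATENT = 8, 16, 4  # illustrative sizes, not from the paper

encoder = init_layer(WINDOW, LATENT, rng)
head = init_layer(FACE_DIM + LATENT, 2, rng)

face = [rng.random() for _ in range(FACE_DIM)]            # fake facial features
ecg_window = [math.sin(0.4 * t) for t in range(WINDOW)]   # fake ECG window

latent = encode_bio(ecg_window, encoder)
valence, arousal = fuse_and_predict(face, latent, head)
print(f"valence={valence:.3f} arousal={arousal:.3f}")
```

Running the same fusion step on successive windows of a recording would yield a valence and arousal trace over time, which is the kind of output the therapy assessment described above relies on.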
