João Mendes-Moreira

A Neighbor-based Approach to Pitch Ownership Models in Soccer

Jan 10, 2025

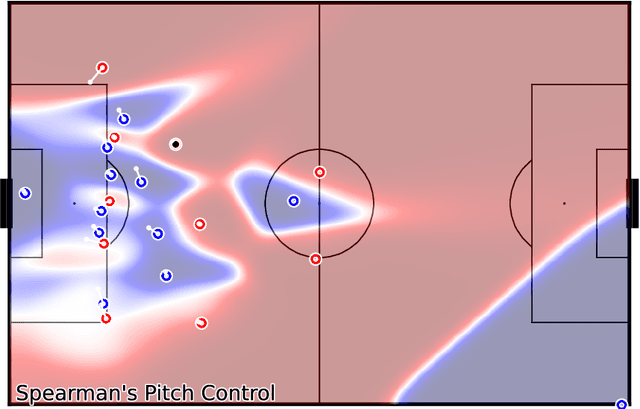

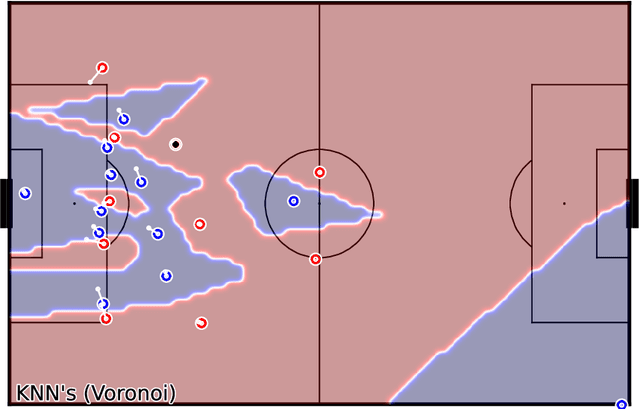

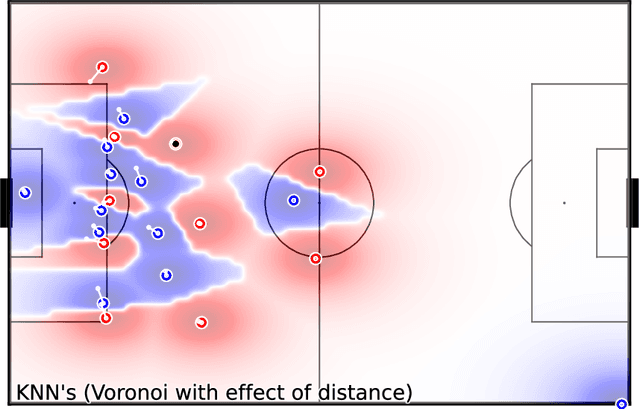

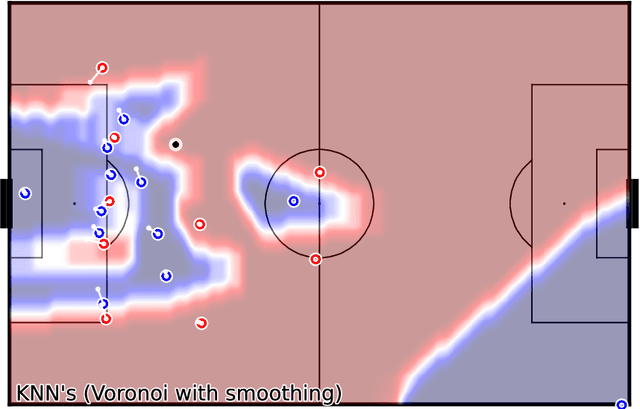

Abstract:Pitch ownership models allow many types of analysis in soccer and provide valuable assistance to tactical analysts in understanding the game's dynamics. The novelty they provide over event-based analysis is that tracking data incorporates context that event-based data does not possess, like player positioning. This paper proposes a novel approach to building pitch ownership models in soccer games using the K-Nearest Neighbors (KNN) algorithm. Our approach provides a fast inference mechanism that can model different approaches to pitch control using the same algorithm. Despite its flexibility, it uses only three hyperparameters to tune the model, facilitating the tuning process for different player skill levels. The flexibility of the approach allows for the emulation of different methods available in the literature by adjusting a small number of parameters, including adjusting for different levels of uncertainty. In summary, the proposed model provides a new and more flexible strategy for building pitch ownership models, extending beyond just replicating existing algorithms, and can provide valuable insights for tactical analysts and open up new avenues for future research. We thoroughly visualize several examples demonstrating the presented models' strengths and weaknesses. The code is available at github.com/nvsclub/KNNPitchControl.
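
As an illustration of the general idea of neighbor-based pitch ownership, the following minimal sketch assigns each pitch location to teams by letting its k nearest players vote. It is not the authors' implementation (see the linked repository for that): the function name `knn_ownership`, the inverse-distance weighting, and the parameters `k`, `weighted`, and `eps` are all assumptions made for this example and are not the paper's three hyperparameters.

```python
import math

def knn_ownership(point, players, k=3, weighted=True, eps=1e-6):
    """Share of ownership at `point` attributed to the "home" team.

    players: list of (x, y, team) tuples, with team "home" or "away".
    All names and defaults here are illustrative assumptions.
    """
    # Distance from the query location to every player, nearest first.
    dists = sorted(
        (math.hypot(px - point[0], py - point[1]), team)
        for px, py, team in players
    )
    nearest = dists[:k]
    # Each neighbor votes for its team; closer players count more if weighted.
    votes = {"home": 0.0, "away": 0.0}
    for dist, team in nearest:
        votes[team] += 1.0 / (dist + eps) if weighted else 1.0
    return votes["home"] / (votes["home"] + votes["away"])

# Hypothetical player positions in metres on a pitch.
players = [
    (10.0, 30.0, "home"),
    (20.0, 35.0, "home"),
    (50.0, 34.0, "away"),
    (60.0, 20.0, "away"),
]
near_home = knn_ownership((12.0, 31.0), players, k=3)
near_away = knn_ownership((58.0, 22.0), players, k=3)
```

Evaluating the function over a grid of locations would give a full ownership surface; changing `k` or switching off the weighting changes how sharply ownership flips between teams.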

Kernel Corrector LSTM

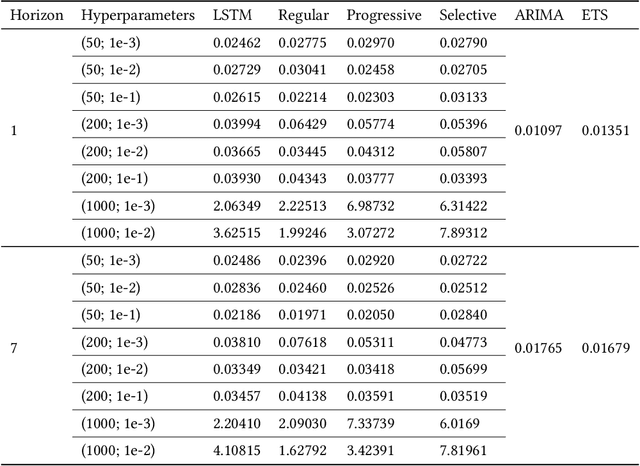

Apr 28, 2024

Abstract:Forecasting methods are affected by data quality issues in two ways: (1) they are hard to predict, and (2) they may affect the model negatively when it is updated with new data. The latter issue is usually addressed by pre-processing the data to remove those issues. An alternative approach has recently been proposed, Corrector LSTM (cLSTM), which is a Read & Write Machine Learning (RW-ML) algorithm that changes the data while learning to improve its predictions. Despite promising results being reported, cLSTM is computationally expensive, as it uses a meta-learner to monitor the hidden states of the LSTM. We propose a new RW-ML algorithm, Kernel Corrector LSTM (KcLSTM), that replaces the meta-learner of cLSTM with a simpler method: Kernel Smoothing. We empirically evaluate the forecasting accuracy and the training time of the new algorithm and compare it with cLSTM and LSTM. Results indicate that it is able to decrease the training time while maintaining a competitive forecasting accuracy.
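
The abstract does not say how KcLSTM applies kernel smoothing inside the LSTM, so the sketch below only illustrates kernel smoothing itself on a plain time series, using a Gaussian kernel over time indices (a Nadaraya-Watson style estimate). The function name `kernel_smooth`, the `bandwidth` parameter, and the sample series are assumptions for this example.

```python
import math

def kernel_smooth(values, bandwidth=1.0):
    """Smooth a sequence with a Gaussian kernel over time indices.

    Each output is a weighted average of all inputs, with weights that
    decay with the distance in time from the point being smoothed.
    """
    n = len(values)
    smoothed = []
    for i in range(n):
        weights = [
            math.exp(-0.5 * ((i - j) / bandwidth) ** 2) for j in range(n)
        ]
        total = sum(weights)
        smoothed.append(sum(w * v for w, v in zip(weights, values)) / total)
    return smoothed

# Hypothetical series with one anomalous spike at index 3.
series = [1.0, 1.1, 0.9, 9.0, 1.0, 1.2, 0.8]
smoothed = kernel_smooth(series, bandwidth=1.0)
```

A larger `bandwidth` spreads the weights over more neighbors and flattens the spike further, at the cost of blurring genuine changes in the series.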

Towards a Systematic Approach to Design New Ensemble Learning Algorithms

Feb 09, 2024

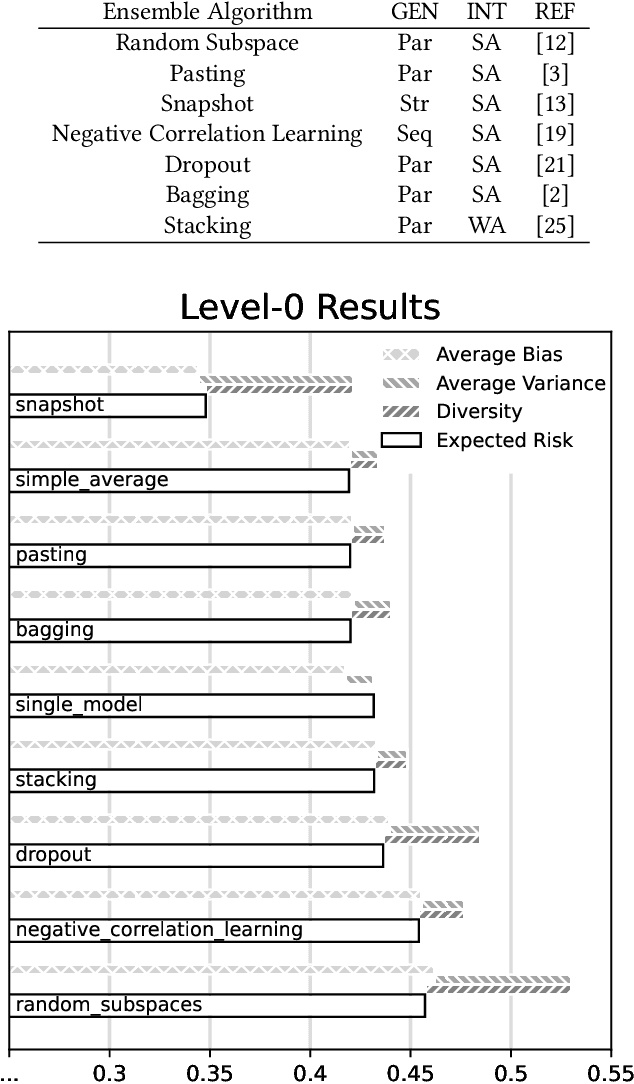

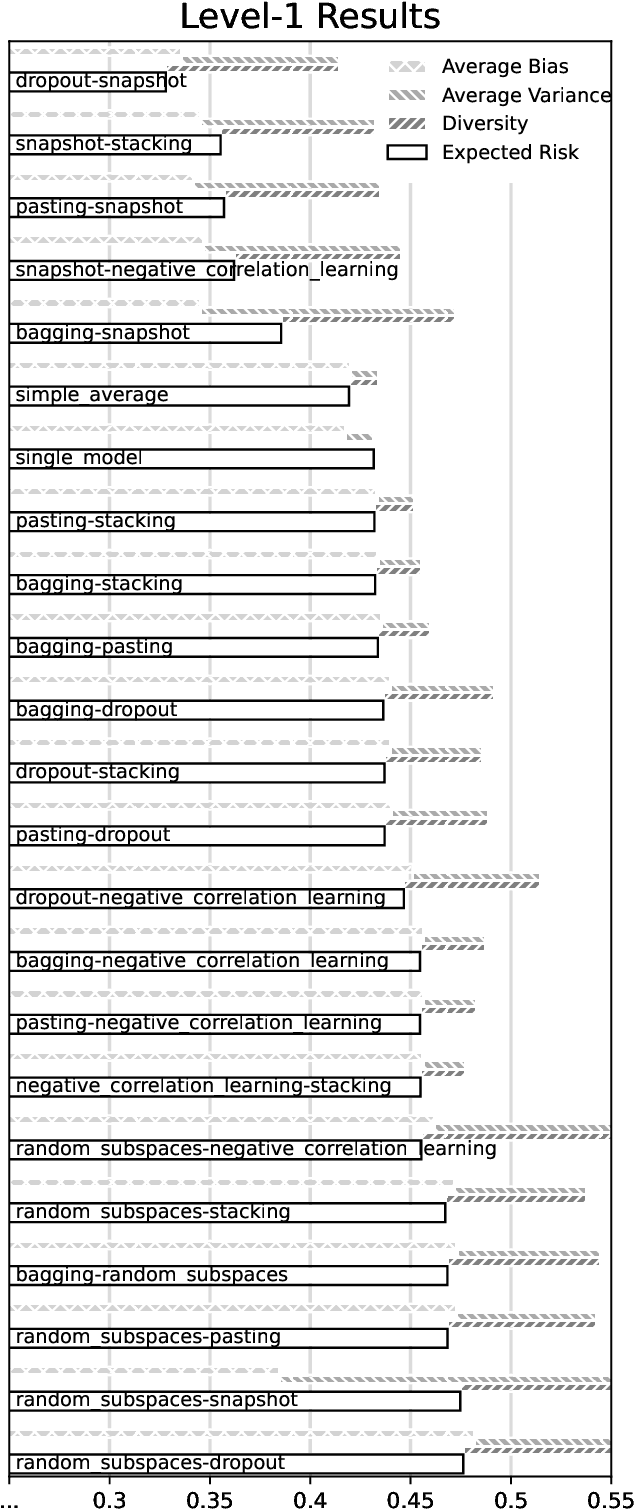

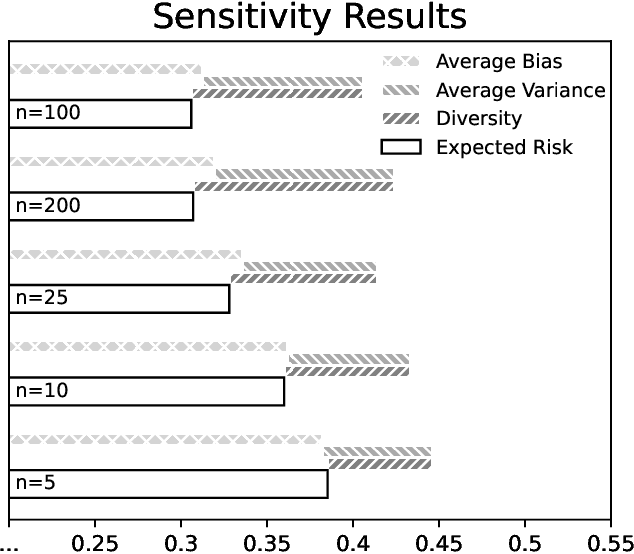

Abstract:Ensemble learning has been a focal point of machine learning research due to its potential to improve predictive performance. This study revisits the foundational work on ensemble error decomposition, historically confined to bias-variance-covariance analysis for regression problems since the 1990s. Recent advancements introduced a "unified theory of diversity," which proposes an innovative bias-variance-diversity decomposition framework. Leveraging this contemporary understanding, our research systematically explores the application of this decomposition to guide the creation of new ensemble learning algorithms. Focusing on regression tasks, we employ neural networks as base learners to investigate the practical implications of this theoretical framework. We use 7 simple ensemble methods for neural networks, which we call strategies, to generate 21 new ensemble algorithms. Among these, most of the algorithms that incorporate the snapshot strategy, one of the 7 strategies used, show superior predictive performance across diverse datasets according to the Friedman rank test with the Conover post-hoc test. Our systematic design approach contributes a suite of effective new algorithms and establishes a structured pathway for future ensemble learning algorithm development.

Forecasting Events in Soccer Matches Through Language

Feb 09, 2024

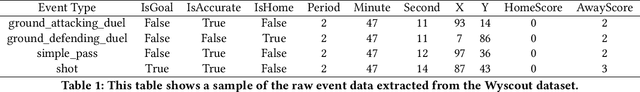

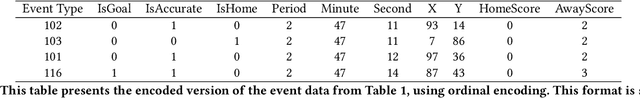

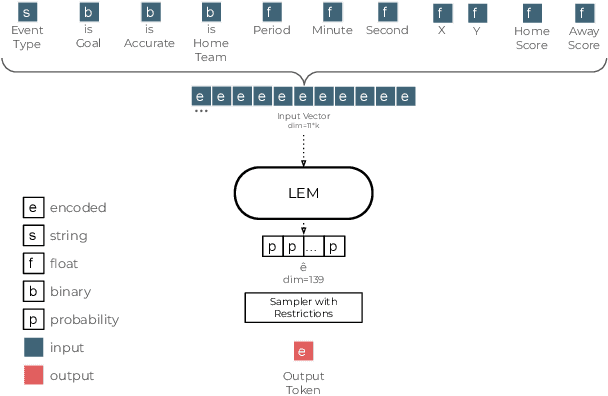

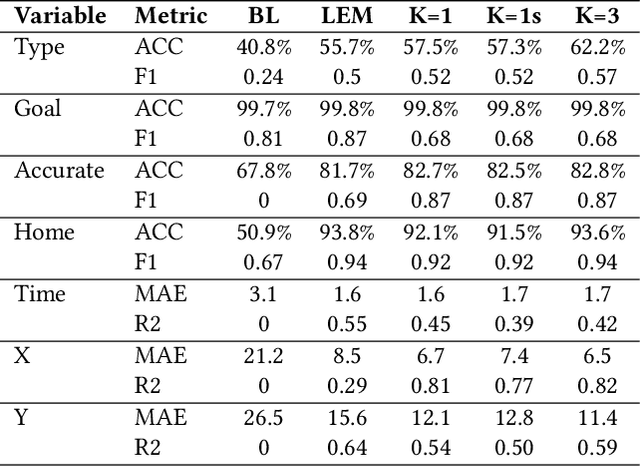

Abstract:This paper introduces an approach to predicting the next event in a soccer match, a challenge bearing remarkable similarities to the problem faced by Large Language Models (LLMs). Unlike other methods that severely limit event dynamics in soccer, often abstracting from many variables or relying on a mix of sequential models, our research proposes a novel technique inspired by the methodologies used in LLMs. These models predict a complete chain of variables that compose an event, significantly simplifying the construction of Large Event Models (LEMs) for soccer. Utilizing deep learning on the publicly available WyScout dataset, the proposed approach notably surpasses the performance of previous LEM proposals in critical areas, such as the prediction accuracy of the next event type. This paper highlights the utility of LEMs in various applications, including betting and match analytics. Moreover, we show that LEMs provide a simulation backbone on which many analytics pipelines can be built, an approach opposite to the current specialized single-purpose models. LEMs represent a pivotal advancement in soccer analytics, establishing a foundational framework for multifaceted analytics pipelines through a singular machine-learning model.

Estimating Player Performance in Different Contexts Using Fine-tuned Large Events Models

Feb 09, 2024

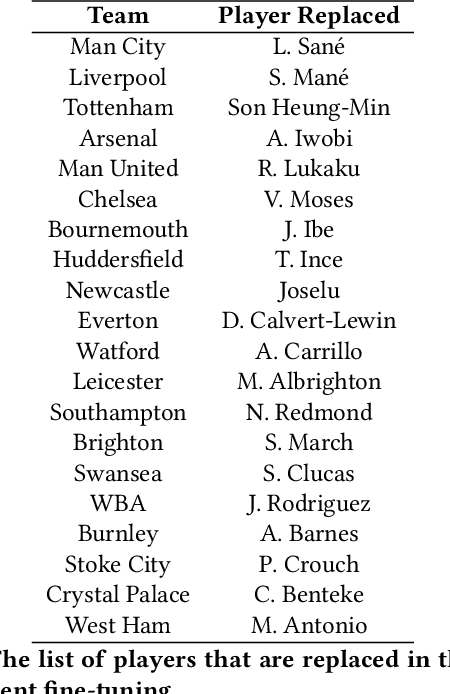

Abstract:This paper introduces an innovative application of Large Event Models (LEMs), akin to Large Language Models, to the domain of soccer analytics. By learning the "language" of soccer (predicting variables for subsequent events rather than words), LEMs facilitate the simulation of matches and offer various applications, including player performance prediction across different team contexts. We focus on fine-tuning LEMs with the WyScout dataset for the 2017-2018 Premier League season to derive specific insights into player contributions and team strategies. Our methodology involves adapting these models to reflect the nuanced dynamics of soccer, enabling the evaluation of hypothetical transfers. Our findings confirm the effectiveness and limitations of LEMs in soccer analytics, highlighting the model's capability to forecast teams' expected standings and explore high-profile scenarios, such as the potential effects of transferring Cristiano Ronaldo or Lionel Messi to different teams in the Premier League. This analysis underscores the importance of context in evaluating player quality. While general metrics may suggest significant differences between players, contextual analyses reveal narrower gaps in performance within specific team frameworks.

A Survey of Advanced Computer Vision Techniques for Sports

Jan 18, 2023

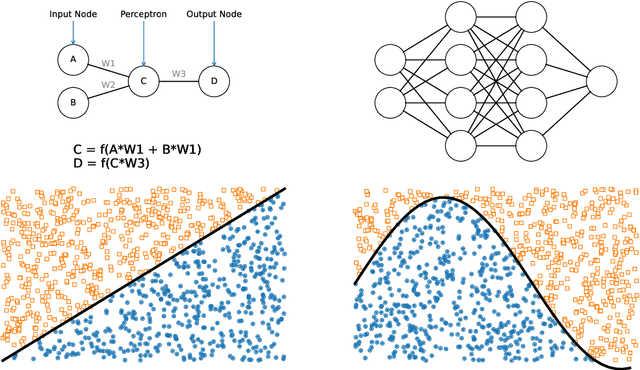

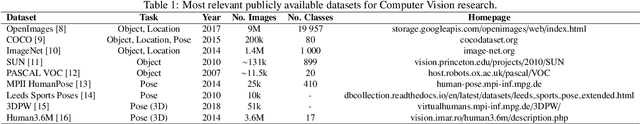

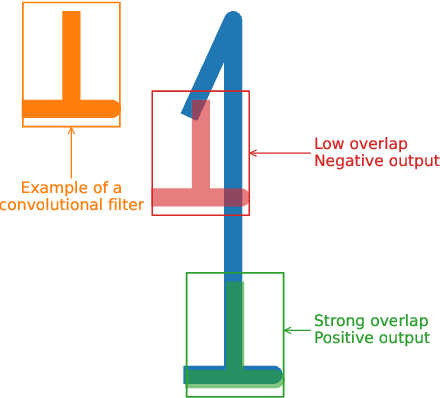

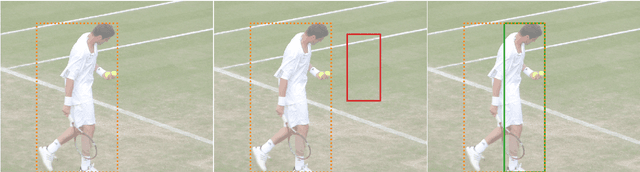

Abstract:Computer Vision developments are enabling significant advances in many fields, including sports. Many applications built on top of Computer Vision technologies, such as tracking data, are nowadays essential for every top-level analyst, coach, and even player. In this paper, we survey Computer Vision techniques that can help many sports-related studies gather vast amounts of data, such as Object Detection and Pose Estimation. We provide a use case for such data: building a model for shot speed estimation with pose data obtained using only Computer Vision models. Our model achieves a correlation of 67%. Estimating shot speeds enables much deeper studies and the creation of new metrics and recommendation systems that will help athletes improve their performance in any sport. The proposed methodology is easily replicable for many technical movements and is only limited by the availability of video data.

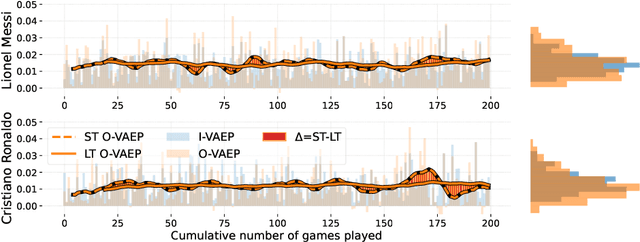

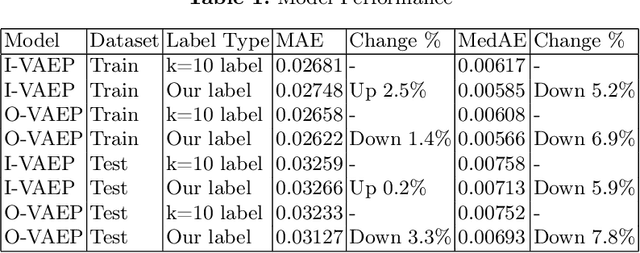

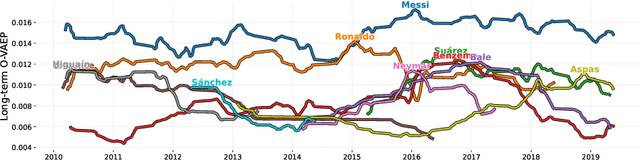

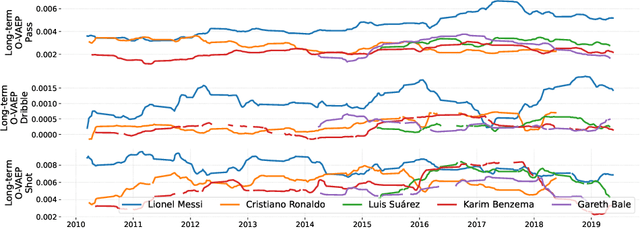

Valuing Players Over Time

Sep 08, 2022

Abstract:In soccer (or association football), players quickly go from heroes to zeroes, or vice-versa. Performance is not a static measure but a somewhat volatile one. Analyzing performance as a time series rather than a stationary point in time is crucial to making better decisions. This paper introduces and explores I-VAEP and O-VAEP models to evaluate actions and rate players' intention and execution. Then, we analyze these ratings over time and propose use cases to support our choice of treating player ratings as a continuous problem. As a result, we identify the best players and show how their performance evolved, define volatility metrics to measure a player's consistency, and build a player development curve to assist decision-making.

Pastprop-RNN: improved predictions of the future by correcting the past

Jun 25, 2021

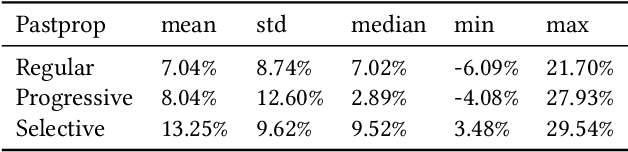

Abstract:Forecasting accuracy is reliant on the quality of available past data. Data disruptions can adversely affect the quality of the generated model (e.g. unexpected events such as out-of-stock products when forecasting demand). We address this problem by pastcasting: predicting how data should have been in the past to explain the future better. We propose Pastprop-LSTM, a data-centric backpropagation algorithm that assigns part of the responsibility for errors to the training data and changes it accordingly. We test three variants of Pastprop-LSTM on forecasting competition datasets, M4 and M5, plus the Numenta Anomaly Benchmark. Empirical evaluation indicates that the proposed method can improve forecasting accuracy, especially when the prediction errors of standard LSTM are high. It also demonstrates the potential of the algorithm on datasets containing anomalies.

Hierarchical Qualitative Clustering: clustering mixed datasets with critical qualitative information

Jul 06, 2020

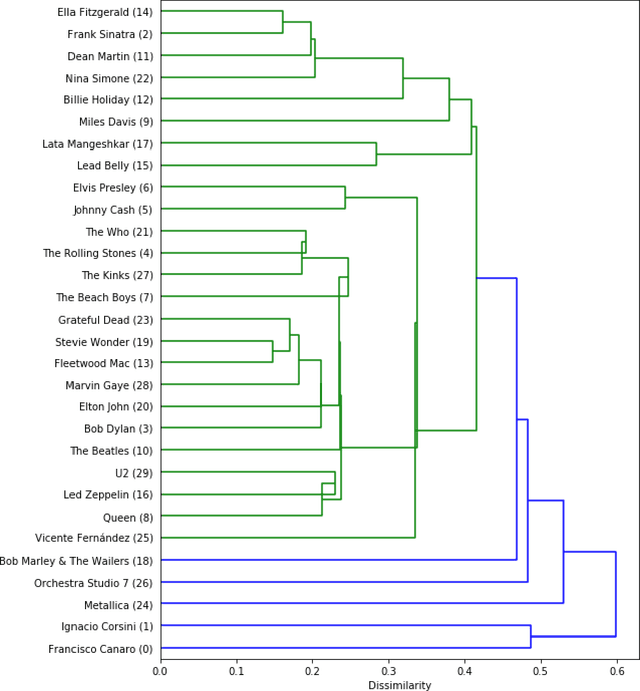

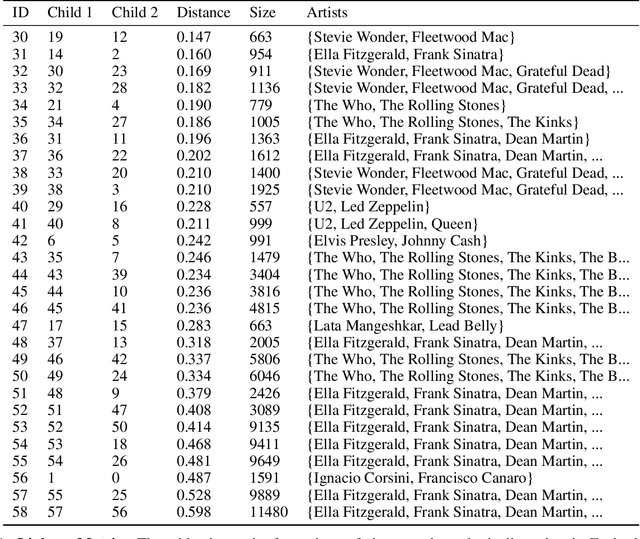

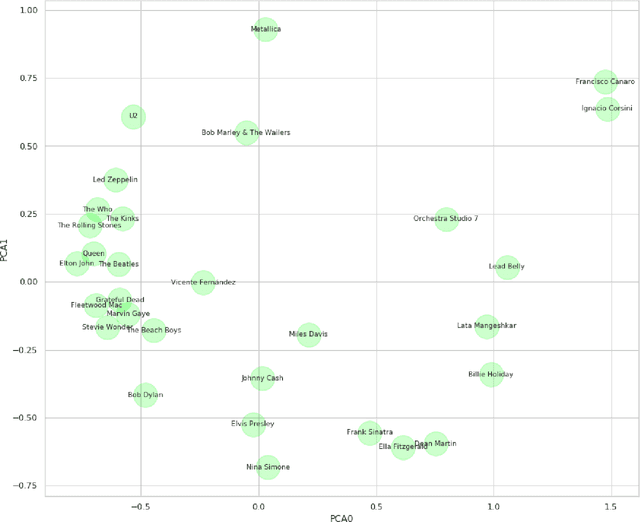

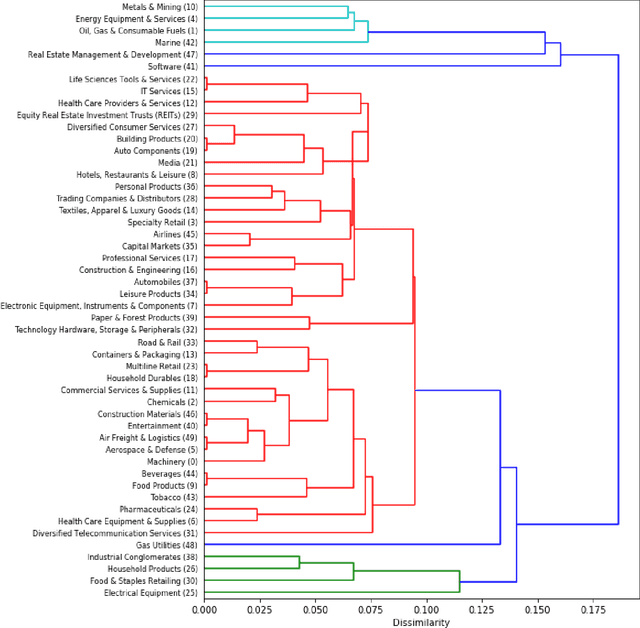

Abstract:Clustering can be used to extract insights from data or to verify some of the assumptions held by the domain experts, namely data segmentation. In the literature, few methods can be applied in clustering qualitative values using the context associated with other variables present in the data, without losing interpretability. Moreover, the metrics for calculating dissimilarity between qualitative values often scale poorly for high dimensional mixed datasets. In this study, we propose a novel method for clustering qualitative values, based on Hierarchical Clustering (HQC), and using Maximum Mean Discrepancy. HQC maintains the original interpretability of the qualitative information present in the dataset. We apply HQC to two datasets. Using a mixed dataset provided by Spotify, we showcase how our method can be used for clustering music artists based on the quantitative features of thousands of songs. In addition, using financial features of companies, we cluster company industries, and discuss the implications in investment portfolios diversification.
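
For readers unfamiliar with Maximum Mean Discrepancy (MMD), the following minimal sketch computes a biased estimate of the squared MMD between two one-dimensional samples using a Gaussian kernel. It illustrates the dissimilarity measure only, not the HQC method; the function names, the `sigma` parameter, and the sample values are assumptions for this example.

```python
import math

def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian (RBF) kernel between two scalars."""
    return math.exp(-((a - b) ** 2) / (2.0 * sigma ** 2))

def mmd_squared(xs, ys, sigma=1.0):
    """Biased estimate of squared MMD between samples xs and ys.

    MMD^2 = E[k(x, x')] + E[k(y, y')] - 2 E[k(x, y)],
    with each expectation replaced by an average over all pairs.
    """
    def mean_kernel(us, vs):
        total = sum(gaussian_kernel(u, v, sigma) for u in us for v in vs)
        return total / (len(us) * len(vs))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)

# Hypothetical feature values for three groups (e.g. one quantitative
# feature of the songs belonging to three different artists).
group_a = [0.10, 0.20, 0.30]
group_b = [0.15, 0.25, 0.35]
group_c = [5.00, 5.10, 5.20]

close = mmd_squared(group_a, group_b)
far = mmd_squared(group_a, group_c)
```

Groups whose feature distributions are similar yield a small value, so a matrix of pairwise values like `close` and `far` could serve as the dissimilarity input to a hierarchical clustering step.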

A Preliminary Study on Hyperparameter Configuration for Human Activity Recognition

Oct 25, 2018

Abstract:Human activity recognition (HAR) is a classification task that aims to classify human activities or predict human behavior by means of features extracted from sensor data. Typical HAR systems use wearable sensors and/or handheld and mobile devices with built-in sensing capabilities. Due to the widespread use of smartphones and to the inclusion of various sensors in all contemporary smartphones (e.g., accelerometers and gyroscopes), they are commonly used for extracting and collecting data from sensors and even for implementing HAR systems. When using mobile devices, e.g., smartphones, HAR systems need to deal with several constraints regarding battery, computation and memory. These constraints enforce the need for a system capable of managing its resources and maintaining acceptable levels of classification accuracy. Moreover, several factors can influence activity recognition, such as classification models, sensor availability and size of data window for feature extraction, making stable accuracy difficult to achieve. In this paper, we present a semi-supervised classifier and a study regarding the influence of hyperparameter configuration on classification accuracy, depending on the user and the activities performed by each user. This study focuses on sensing data provided by the PAMAP2 dataset. Experimental results show that it is possible to maintain classification accuracy by adjusting hyperparameters, like window size and window overlap factor, depending on user and activity performed. These experiments motivate the development of a system able to automatically adapt hyperparameter settings for the activity performed by each user.
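
The two hyperparameters named above, window size and window overlap factor, control how a sensor stream is cut into segments before feature extraction. The sketch below shows one common way to do that segmentation; it is an assumption about the general technique, not the paper's code, and the function name `sliding_windows` and its parameters are hypothetical.

```python
def sliding_windows(samples, window_size, overlap):
    """Split a sequence into fixed-size windows with fractional overlap.

    overlap is the fraction of each window shared with the next one,
    e.g. 0.5 means consecutive windows share half of their samples.
    Trailing samples that do not fill a whole window are dropped.
    """
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    # Number of samples to advance between consecutive window starts.
    step = max(1, int(window_size * (1.0 - overlap)))
    last_start = len(samples) - window_size
    return [
        samples[start:start + window_size]
        for start in range(0, last_start + 1, step)
    ]

# Hypothetical accelerometer readings, indexed by sample number.
readings = list(range(10))
half_overlap = sliding_windows(readings, window_size=4, overlap=0.5)
no_overlap = sliding_windows(readings, window_size=4, overlap=0.0)
```

A higher overlap produces more windows from the same recording, which gives more training examples but also more computation per second of data, the kind of trade-off that matters on battery-constrained devices.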
