Towards a Systematic Approach to Design New Ensemble Learning Algorithms

Paper and Code

Feb 09, 2024

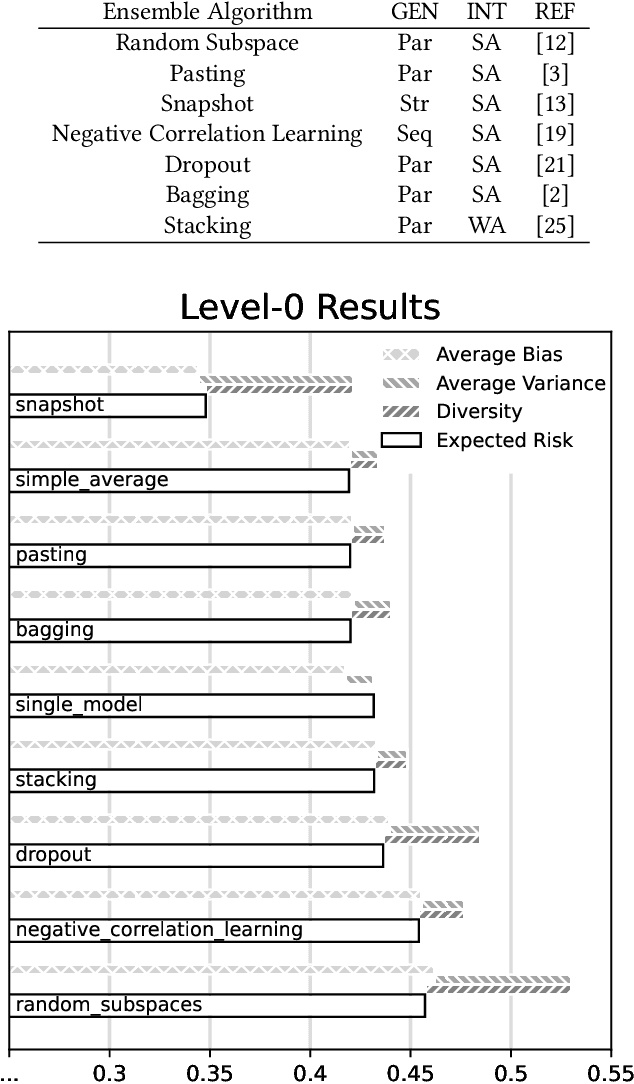

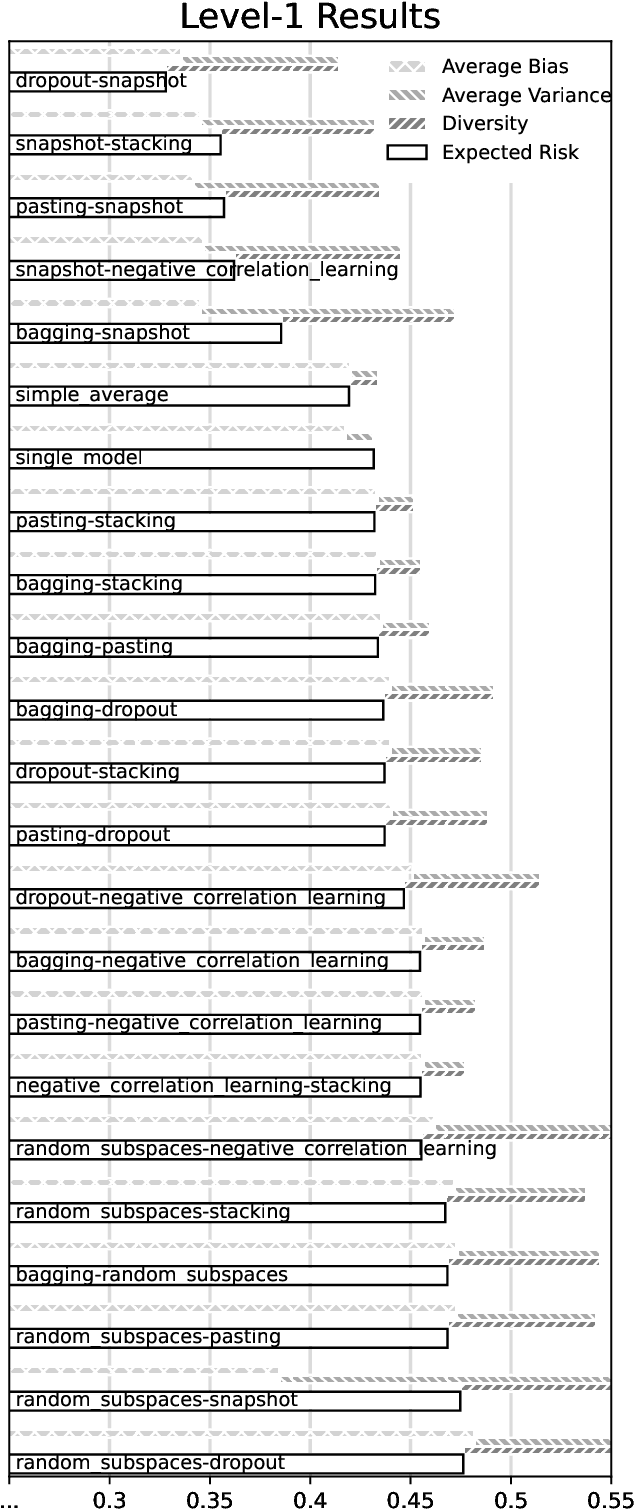

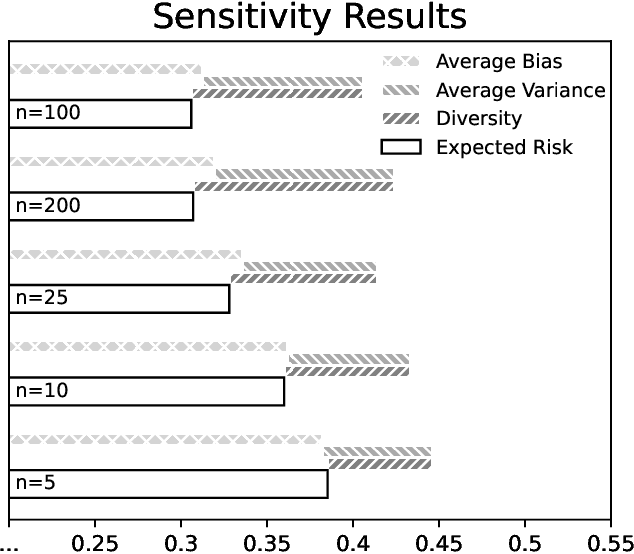

Ensemble learning has long been a focal point of machine learning research because of its potential to improve predictive performance. This study revisits the foundational work on ensemble error decomposition, which since the 1990s has been confined to bias-variance-covariance analysis for regression problems. Recent work introduced a "unified theory of diversity," which proposes a bias-variance-diversity decomposition framework. Building on this framework, we systematically explore how the decomposition can guide the design of new ensemble learning algorithms. Focusing on regression tasks, we use neural networks as base learners to investigate the practical implications of the theory. We take 7 simple ensemble methods for neural networks, which we call strategies, and use them to generate 21 new ensemble algorithms. Among these, most of the algorithms combined with the snapshot strategy, one of the 7 strategies, show superior predictive performance across diverse datasets according to the Friedman rank test with the Conover post-hoc test. Our systematic design approach contributes a suite of effective new algorithms and establishes a structured pathway for future ensemble learning algorithm development.
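As a rough illustration of what a diversity term measures, the sketch below checks the classical ambiguity decomposition for squared loss with an averaged ensemble: the ensemble's error equals the average member error minus the spread of the members around the ensemble prediction. This is not the paper's code and not the full bias-variance-diversity decomposition. The function name `ambiguity_decomposition` and the toy predictions are assumptions made for illustration only.

```python
import random


def ambiguity_decomposition(member_preds, target):
    """Split the squared error of an averaged ensemble on one example.

    Returns (ensemble_error, avg_member_error, diversity), which satisfy
    ensemble_error == avg_member_error - diversity.
    """
    m = len(member_preds)
    ensemble_pred = sum(member_preds) / m  # arithmetic-mean combiner
    ensemble_error = (ensemble_pred - target) ** 2
    avg_member_error = sum((p - target) ** 2 for p in member_preds) / m
    # Diversity (ambiguity): spread of the members around the ensemble
    diversity = sum((p - ensemble_pred) ** 2 for p in member_preds) / m
    return ensemble_error, avg_member_error, diversity


random.seed(0)
target = 1.0
# Hypothetical predictions from 5 base learners on a single example
preds = [target + random.gauss(0.0, 0.5) for _ in range(5)]

ens_err, avg_err, div = ambiguity_decomposition(preds, target)
print(f"ensemble error       = {ens_err:.6f}")
print(f"average member error = {avg_err:.6f}")
print(f"diversity            = {div:.6f}")
print(f"average - diversity  = {avg_err - div:.6f}")
```

Because the diversity term is never negative, the averaged ensemble is never worse than its average member under squared loss, which is one reason a decomposition of this kind can guide how members are trained and combined.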
