Jiyu Cheng

Fellow, IEEE

Skill-Aware Diffusion for Generalizable Robotic Manipulation

Jan 16, 2026

Abstract: Robust generalization in robotic manipulation is crucial for robots to adapt flexibly to diverse environments. Existing methods usually improve generalization by scaling data and networks, but model tasks independently and overlook skill-level information. Observing that tasks within the same skill share similar motion patterns, we propose Skill-Aware Diffusion (SADiff), which explicitly incorporates skill-level information to improve generalization. SADiff learns skill-specific representations through a skill-aware encoding module with learnable skill tokens, and conditions a skill-constrained diffusion model to generate object-centric motion flow. A skill-retrieval transformation strategy further exploits skill-specific trajectory priors to refine the mapping from 2D motion flow to executable 3D actions. Furthermore, we introduce IsaacSkill, a high-fidelity dataset containing fundamental robotic skills for comprehensive evaluation and sim-to-real transfer. Experiments in simulation and real-world settings show that SADiff achieves good performance and generalization across various manipulation tasks. Code, data, and videos are available at https://sites.google.com/view/sa-diff.

Dual-Attention Heterogeneous GNN for Multi-robot Collaborative Area Search via Deep Reinforcement Learning

Jan 07, 2026

Abstract: In multi-robot collaborative area search, a key challenge is to dynamically balance the two objectives of exploring unknown areas and covering specific targets to be rescued. Existing methods are often constrained by homogeneous graph representations, thus failing to model and balance these distinct tasks. To address this problem, we propose a Dual-Attention Heterogeneous Graph Neural Network (DA-HGNN) trained using deep reinforcement learning. Our method constructs a heterogeneous graph that incorporates three entity types: robot nodes, frontier nodes, and interesting nodes, as well as their historical states. The dual-attention mechanism comprises the relational-aware attention and type-aware attention operations. The relational-aware attention captures the complex spatio-temporal relationships among robots and candidate goals. Building on this relational-aware heterogeneous graph, the type-aware attention separately computes the relevance between robots and each goal type (frontiers vs. points of interest), thereby decoupling the exploration and coverage from the unified tasks. Extensive experiments conducted in interactive 3D scenarios within the iGibson simulator, leveraging the Gibson and MatterPort3D datasets, validate the superior scalability and generalization capability of the proposed approach.

Autonomous and Adaptive Role Selection for Multi-robot Collaborative Area Search Based on Deep Reinforcement Learning

Dec 04, 2023

Abstract: For multi-robot collaborative area search, we propose a unified approach that simultaneously maps the environment to sense more targets (exploration) and searches for and locates those targets (coverage). Specifically, we implement a hierarchical multi-agent reinforcement learning algorithm to decouple task planning from task execution. The role concept is integrated into the upper-level task planning for role selection, which enables robots to learn their roles based on the upper-level view of the state. In addition, an intelligent role-switching mechanism enables the role selection module to function between two timesteps, promoting exploration and coverage interchangeably. The primitive policy then learns how to plan sub-task execution based on each robot's assigned role and local observations. Experiments show the scalability and generalization of our method compared with state-of-the-art approaches in scenes with varying complexity and numbers of robots.

Nowhere to Go: Benchmarking Multi-robot Collaboration in Target Trapping Environment

Aug 17, 2023

Abstract: Collaboration is one of the most important factors in multi-robot systems. Considering certain real-world applications and to further promote its development, we propose a new benchmark to evaluate multi-robot collaboration in a Target Trapping Environment (T2E). In T2E, two kinds of robots (called captor robots and target robots) share the same space. The captors aim to catch the target collaboratively, while the target tries to escape from the trap. Both the trapping and escaping processes can use the environment layout to help achieve the corresponding objective, which requires close collaboration between robots and effective use of the environment. For the benchmark, we present and evaluate multiple learning-based baselines in T2E, and provide insights into regimes of multi-robot collaboration. We also make our benchmark publicly available and encourage researchers from related robotics disciplines to propose, evaluate, and compare their solutions in this benchmark. Our project is released at https://github.com/Dr-Xiaogaren/T2E.

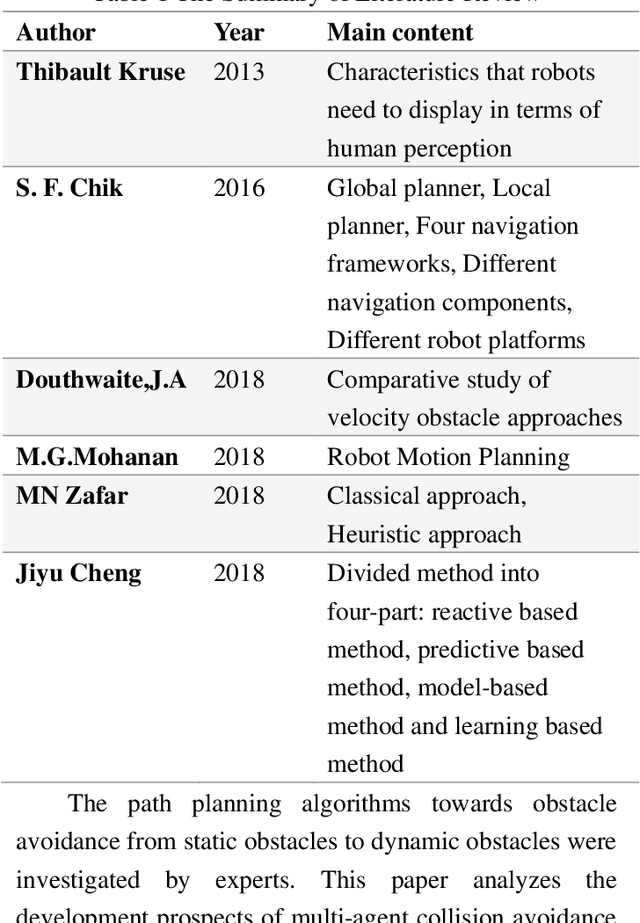

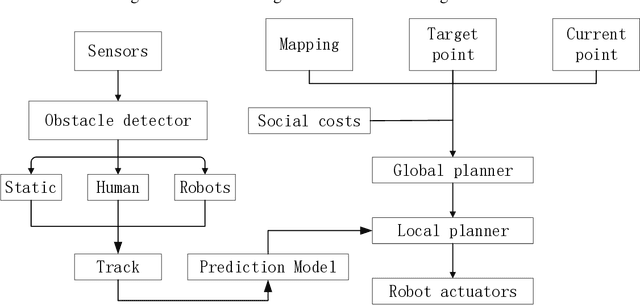

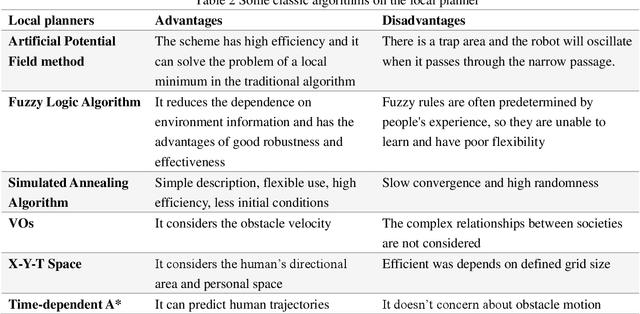

Mobile Robot Path Planning in Dynamic Environments: A Survey

Jun 25, 2020

Abstract: The environments in which robots operate are becoming more and more complex, which poses great challenges for robot navigation. This paper gives an overview of the navigation framework for robots running in dense environments. Path planning in the navigation framework of mobile robots is divided into global planning and local planning according to the planning scope and the executability. Robot navigation is a multi-objective problem: the robot must not only complete the given tasks but also maintain the social comfort level at the same time. Consequently, we focus on reinforcement learning-based path planning algorithms and analyze the development status, advantages, and disadvantages of the existing algorithms. In addition, path planning in dynamic environments for robots will be further studied in the future in the areas of advanced algorithms, hybrid algorithms, multi-robot collaboration, social models, and the combination of artificial intelligence algorithms.

DOI: 10.15878/j.cnki.instrumentation.2019.02.010
