Jinpeng Lu

Johnson-Lindenstrauss Lemma Guided Network for Efficient 3D Medical Segmentation

Sep 26, 2025

Abstract:Lightweight 3D medical image segmentation remains constrained by a fundamental "efficiency / robustness conflict", particularly when processing complex anatomical structures and heterogeneous modalities. In this paper, we study how to redesign the framework based on the characteristics of high-dimensional 3D images, and explore data synergy to overcome the fragile representation of lightweight methods. Our approach, VeloxSeg, begins with a deployable and extensible dual-stream CNN-Transformer architecture composed of Paired Window Attention (PWA) and Johnson-Lindenstrauss lemma-guided convolution (JLC). For each 3D image, we invoke a "glance-and-focus" principle, where PWA rapidly retrieves multi-scale information, and JLC ensures robust local feature extraction with minimal parameters, significantly enhancing the model's ability to operate with a low computational budget. An extension of the dual-stream architecture then incorporates modal interaction into the multi-scale image-retrieval process, allowing VeloxSeg to model heterogeneous modalities efficiently. Finally, Spatially Decoupled Knowledge Transfer (SDKT) via Gram matrices injects the texture prior extracted by a self-supervised network into the segmentation network, yielding stronger representations than baselines at no extra inference cost. Experimental results on multimodal benchmarks show that VeloxSeg achieves a 26% Dice improvement while increasing GPU throughput by 11x and CPU throughput by 48x. Code is available at https://github.com/JinPLu/VeloxSeg.
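The Gram-matrix idea mentioned in the abstract can be illustrated with a minimal sketch. This is not the VeloxSeg implementation: the function names `gram_matrix` and `gram_transfer_loss`, the `(C, D, H, W)` feature layout, the mean-squared-error objective, and the assumption that student and teacher features share the same channel count are all hypothetical choices made for illustration.

```python
import numpy as np


def gram_matrix(feat):
    """Channel-by-channel Gram matrix of a 3D feature map.

    feat: array of shape (C, D, H, W). Hypothetical layout, assumed here.
    Returns a (C, C) matrix of channel co-activations averaged over voxels.
    """
    c = feat.shape[0]
    flat = feat.reshape(c, -1)               # (C, N) with N = D * H * W
    return flat @ flat.T / flat.shape[1]     # spatial positions are summed out


def gram_transfer_loss(student_feat, teacher_feat):
    """Mean squared difference between student and teacher Gram matrices.

    Assumes both feature maps have the same number of channels; their
    spatial sizes may differ because the Gram matrix is always (C, C).
    """
    diff = gram_matrix(student_feat) - gram_matrix(teacher_feat)
    return float(np.mean(diff ** 2))
```

Because the Gram matrix sums over voxel positions, it captures which channels fire together rather than where they fire, which is one plausible reading of "spatially decoupled". A loss of this kind is only evaluated during training, which is consistent with the abstract's claim of no extra inference cost.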

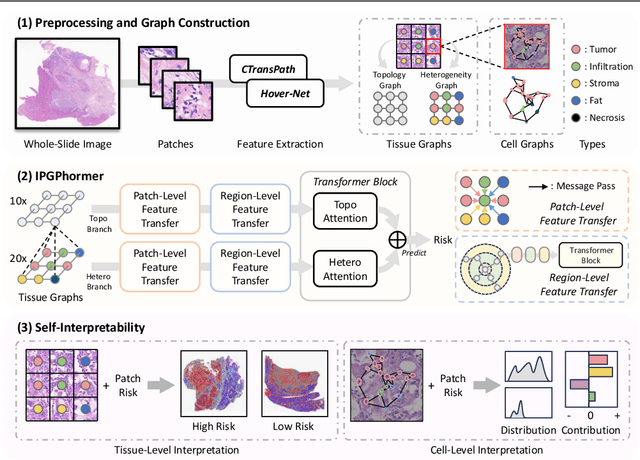

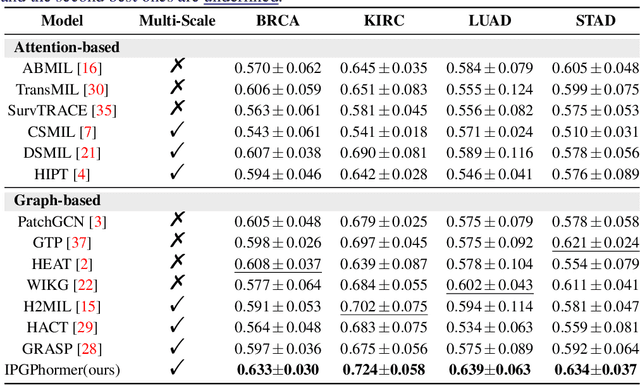

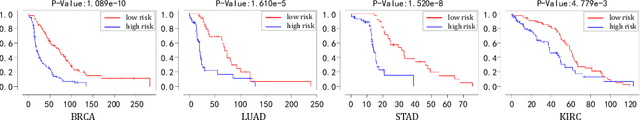

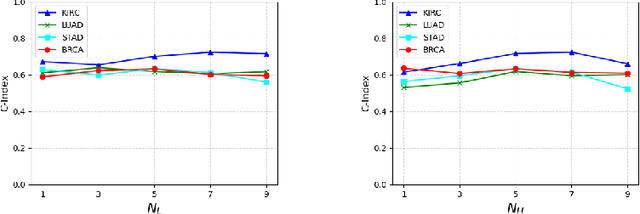

IPGPhormer: Interpretable Pathology Graph-Transformer for Survival Analysis

Aug 17, 2025

Abstract:Pathological images play an essential role in cancer prognosis, while survival analysis, which integrates computational techniques, can predict critical clinical events such as patient mortality or disease recurrence from whole-slide images (WSIs). Recent advancements in multiple instance learning have significantly improved the efficiency of survival analysis. However, existing methods often struggle to balance the modeling of long-range spatial relationships with local contextual dependencies and typically lack inherent interpretability, limiting their clinical utility. To address these challenges, we propose the Interpretable Pathology Graph-Transformer (IPGPhormer), a novel framework that captures the characteristics of the tumor microenvironment and models their spatial dependencies across the tissue. IPGPhormer uniquely provides interpretability at both tissue and cellular levels without requiring post-hoc manual annotations, enabling detailed analyses of individual WSIs and cross-cohort assessments. Comprehensive evaluations on four public benchmark datasets demonstrate that IPGPhormer outperforms state-of-the-art methods in both predictive accuracy and interpretability. In summary, our method, IPGPhormer, offers a promising tool for cancer prognosis assessment, paving the way for more reliable and interpretable decision-support systems in pathology. The code is publicly available at https://anonymous.4open.science/r/IPGPhormer-6EEB.

Rethinking Attention-Based Multiple Instance Learning for Whole-Slide Pathological Image Classification: An Instance Attribute Viewpoint

Mar 30, 2024

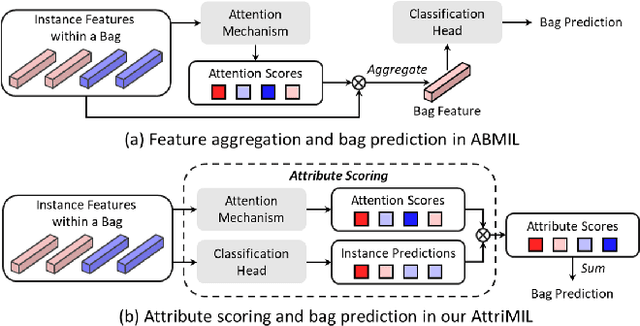

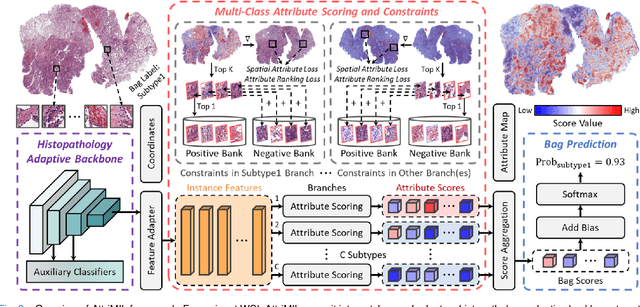

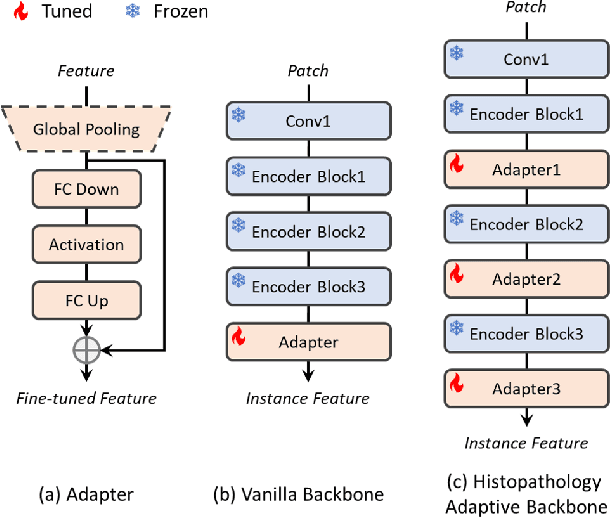

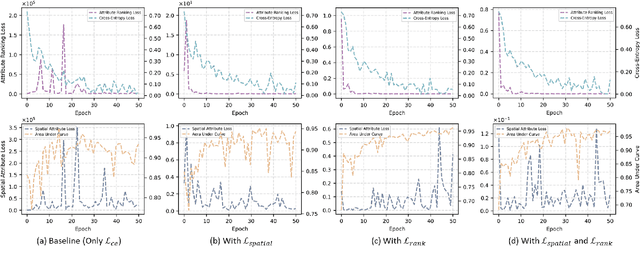

Abstract:Multiple instance learning (MIL) is a robust paradigm for whole-slide pathological image (WSI) analysis, processing gigapixel-resolution images with slide-level labels. As pioneering efforts, attention-based MIL (ABMIL) and its variants are becoming increasingly popular because they handle clinical diagnosis and tumor localization simultaneously. However, the attention mechanism exhibits limitations in discriminating between instances, often misclassifying tissues and potentially impairing MIL performance. This paper proposes an Attribute-Driven MIL (AttriMIL) framework to address these issues. Concretely, we dissect the calculation process of ABMIL and present an attribute scoring mechanism that measures the contribution of each instance to bag prediction effectively, quantifying instance attributes. Based on attribute quantification, we develop a spatial attribute constraint and an attribute ranking constraint to model instance correlations within and across slides, respectively. These constraints encourage the network to capture the spatial correlation and semantic similarity of instances, improving the ability of AttriMIL to distinguish tissue types and identify challenging instances. Additionally, AttriMIL employs a histopathology adaptive backbone that maximizes the pre-trained model's feature extraction capability for collecting pathological features. Extensive experiments on three public benchmarks demonstrate that our AttriMIL outperforms existing state-of-the-art frameworks across multiple evaluation metrics. The implementation code is available at https://github.com/MedCAI/AttriMIL.
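To make "the contribution of each instance to bag prediction" concrete, the sketch below implements generic attention-based MIL pooling with a bias-free linear classifier and splits the bag logit into per-instance terms. It is an illustration under stated assumptions, not the AttriMIL code: the function name `abmil_pool`, the tanh attention form, and the parameters `V`, `w`, and `c` are hypothetical, and the scoring used in the paper may differ.

```python
import numpy as np


def abmil_pool(H, V, w, c):
    """Attention-based MIL pooling with a per-instance decomposition.

    H: (n, d) instance (patch) features of one bag (slide).
    V: (d, h) and w: (h,) are attention parameters (hypothetical names).
    c: (d,) weights of a linear bag classifier without bias (assumed).
    """
    scores = np.tanh(H @ V) @ w            # (n,) unnormalized attention scores
    scores = scores - scores.max()         # shift for numerical stability
    a = np.exp(scores)
    a = a / a.sum()                        # softmax attention weights, sum to 1
    bag_feature = a @ H                    # (d,) attention-weighted average
    bag_logit = float(bag_feature @ c)
    # By linearity, the bag logit equals the sum of attention weight times
    # instance logit, so each term is that instance's share of the prediction.
    contributions = a * (H @ c)            # (n,)
    return bag_logit, a, contributions
```

The decomposition shows why attention weights alone can mislead: an instance with high attention but a negative instance logit pushes the bag prediction down, and only the product reveals that.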

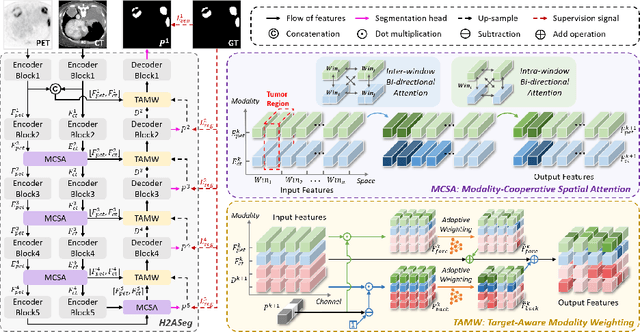

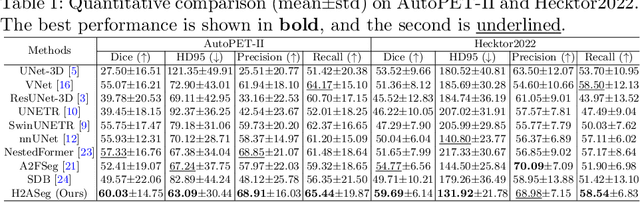

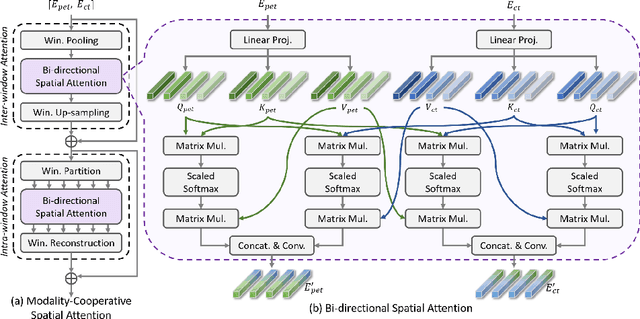

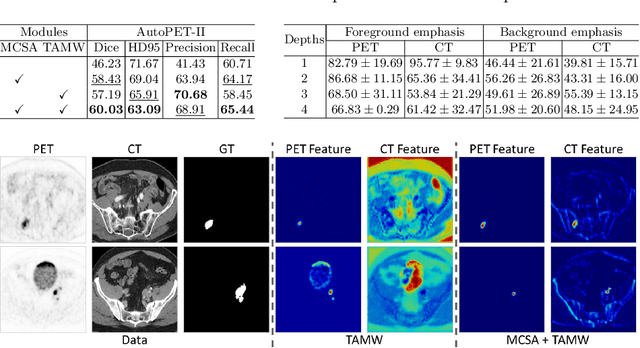

H2ASeg: Hierarchical Adaptive Interaction and Weighting Network for Tumor Segmentation in PET/CT Images

Mar 28, 2024

Abstract:Positron emission tomography (PET) combined with computed tomography (CT) imaging is routinely used in cancer diagnosis and prognosis by providing complementary information. Automatically segmenting tumors in PET/CT images can significantly improve examination efficiency. Traditional multi-modal segmentation solutions mainly rely on concatenation operations for modality fusion, which fail to effectively model the non-linear dependencies between PET and CT modalities. Recent studies have investigated various approaches to optimize the fusion of modality-specific features for enhancing joint representations. However, modality-specific encoders used in these methods operate independently, inadequately leveraging the synergistic relationships inherent in PET and CT modalities, for example, the complementarity between semantics and structure. To address these issues, we propose a Hierarchical Adaptive Interaction and Weighting Network termed H2ASeg to explore the intrinsic cross-modal correlations and transfer potential complementary information. Specifically, we design a Modality-Cooperative Spatial Attention (MCSA) module that performs intra- and inter-modal interactions globally and locally. Additionally, a Target-Aware Modality Weighting (TAMW) module is developed to highlight tumor-related features within multi-modal features, thereby refining tumor segmentation. By embedding these modules across different layers, H2ASeg can hierarchically model cross-modal correlations, enabling a nuanced understanding of both semantic and structural tumor features. Extensive experiments demonstrate the superiority of H2ASeg, outperforming state-of-the-art methods on AutoPet-II and Hecktor2022 benchmarks. The code is released at https://github.com/JinPLu/H2ASeg.

A Localization-to-Segmentation Framework for Automatic Tumor Segmentation in Whole-Body PET/CT Images

Sep 14, 2023

Abstract:Fluorodeoxyglucose (FDG) positron emission tomography (PET) combined with computed tomography (CT) is considered the primary solution for detecting some cancers, such as lung cancer and melanoma. Automatic segmentation of tumors in PET/CT images can help reduce doctors' workload, thereby improving diagnostic quality. However, precise tumor segmentation is challenging due to the small size of many tumors and the similarity of high-uptake normal areas to the tumor regions. To address these issues, this paper proposes a localization-to-segmentation framework (L2SNet) for precise tumor segmentation. L2SNet first localizes the possible lesions in the lesion localization phase and then uses the location cues to shape the segmentation results in the lesion segmentation phase. To further improve the segmentation performance of L2SNet, we design an adaptive threshold scheme that takes the segmentation results of the two phases into consideration. The experiments with the MICCAI 2023 Automated Lesion Segmentation in Whole-Body FDG-PET/CT challenge dataset show that our method achieved a competitive result and was ranked in the top 7 methods on the preliminary test set. Our work is available at: https://github.com/MedCAI/L2SNet.