Jinpei Han

Graph Neural Networks Uncover Geometric Neural Representations in Reinforcement-Based Motor Learning

Oct 31, 2024

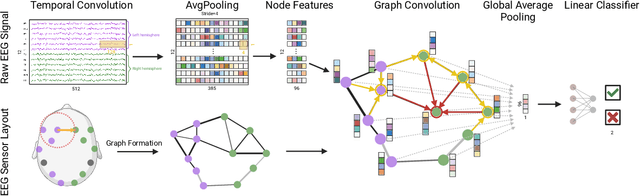

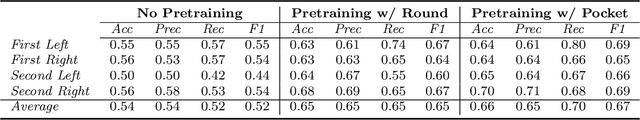

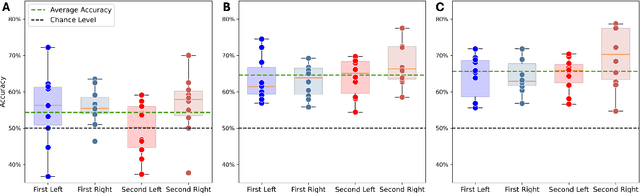

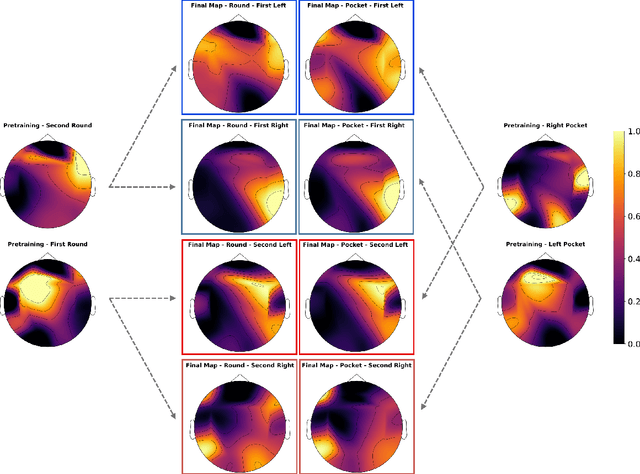

Abstract: Graph Neural Networks (GNNs) can capture the geometric properties of neural representations in EEG data. Here we use them to study how reinforcement-based motor learning affects neural activity patterns during motor planning, leveraging the inherent graph structure of EEG channels to capture the spatial relationships in brain activity. By exploiting task-specific symmetries, we define different pretraining strategies that not only improve model performance across all participant groups but also validate the robustness of the geometric representations. Explainability analysis based on the graph structures reveals consistent group-specific neural signatures that persist across pretraining conditions, suggesting stable geometric structures in the neural representations associated with motor learning and feedback processing. These geometric patterns exhibit partial invariance to certain task space transformations, indicating symmetries that enable generalisation across conditions while maintaining specificity to individual learning strategies. This work demonstrates how GNNs can uncover the effects of previous outcomes on motor planning in a complex real-world task, providing insights into the geometric principles governing neural representations. Our experimental design bridges the gap between controlled experiments and ecologically valid scenarios, offering new insights into the organisation of neural representations during naturalistic motor learning, which may open avenues for exploring fundamental principles governing brain activity in complex tasks.

Noise-Free Explanation for Driving Action Prediction

Jul 08, 2024

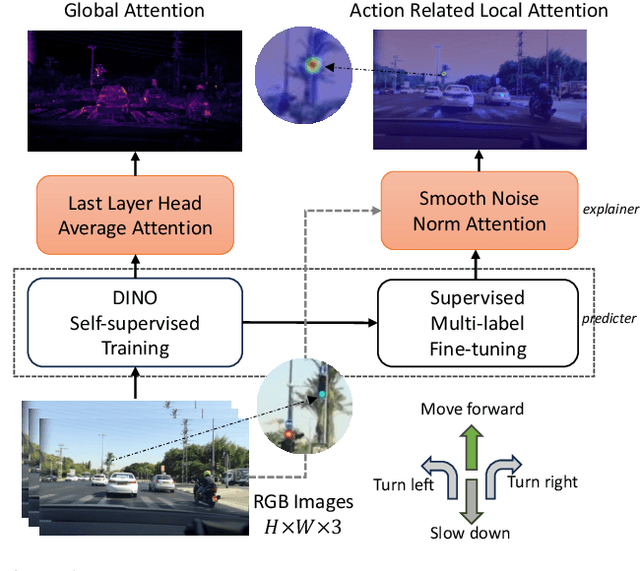

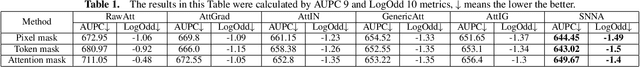

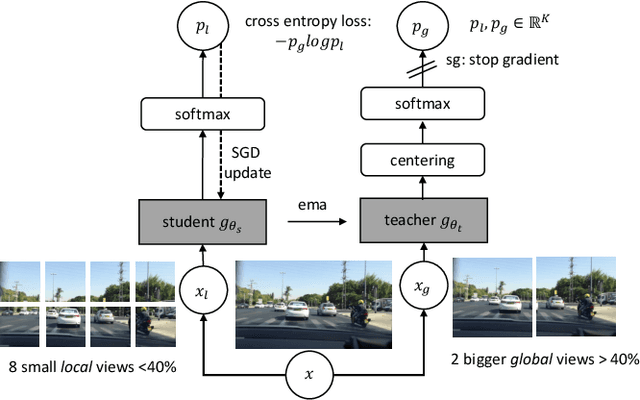

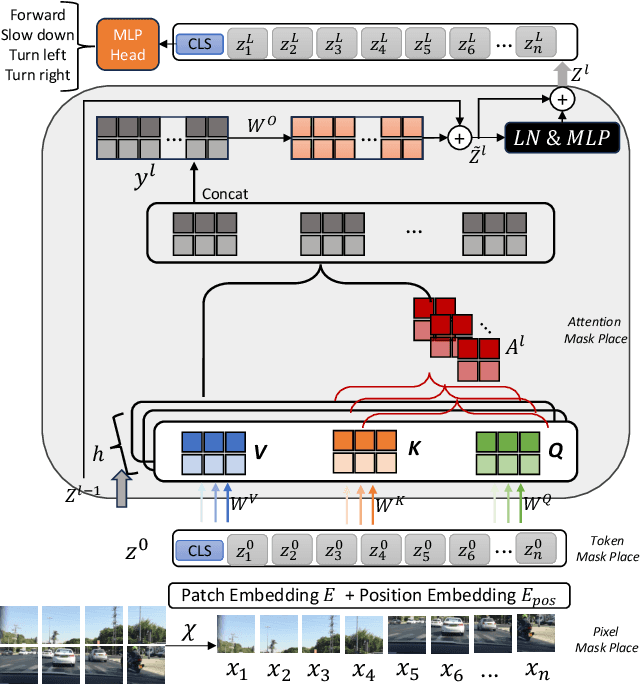

Abstract: Although attention mechanisms have achieved considerable progress in Transformer-based architectures across various Artificial Intelligence (AI) domains, their inner workings remain to be explored. Existing explainability methods have different emphases but are rather one-sided. They primarily analyse the attention mechanisms or gradient-based attribution while neglecting the magnitudes of input feature values or the skip-connection module. Moreover, they inevitably introduce spurious noisy pixel attributions unrelated to the model's decision, undermining human trust in the resulting visualization. Hence, we propose an easy-to-implement but effective way to remedy this flaw: Smooth Noise Norm Attention (SNNA). We weight the attention by the norm of the transformed value vector and guide the label-specific signal with the attention gradient, then randomly sample the input perturbations and average the corresponding gradients to produce noise-free attribution. Instead of evaluating the explanation method on binary or multi-class classification tasks, as in previous work, we explore the more complex multi-label classification scenario, namely the driving action prediction task, and train a model specifically for it. Both qualitative and quantitative evaluation results show the superiority of SNNA compared to other SOTA attention-based explainability methods in generating a clearer visual explanation map and ranking the input pixel importance.
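The two ingredients named in the abstract, scaling attention by value-vector norms and averaging gradients over randomly perturbed inputs, can be illustrated with a minimal standard-library sketch. This is not the authors' SNNA implementation: the function names, the Gaussian noise model, `sigma`, `n_samples`, and the toy gradient function are all assumptions made for illustration, and the value vectors are assumed to be already transformed.

```python
import math
import random


def norm_weighted_attention(attn, values):
    """Scale each attention weight attn[i][j] by the L2 norm of value vector j.

    `values` is assumed to hold the already-transformed value vectors.
    """
    norms = [math.sqrt(sum(v * v for v in vec)) for vec in values]
    return [[a * n for a, n in zip(row, norms)] for row in attn]


def smooth_gradient(grad_fn, x, sigma=0.1, n_samples=50, seed=0):
    """Average grad_fn over Gaussian-perturbed copies of x.

    grad_fn is a hypothetical callable returning the gradient of a model
    output with respect to its input, as a list the same length as x.
    """
    rng = random.Random(seed)
    total = [0.0] * len(x)
    for _ in range(n_samples):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        for k, g in enumerate(grad_fn(noisy)):
            total[k] += g
    return [t / n_samples for t in total]


if __name__ == "__main__":
    # Toy attention row over two tokens and their value vectors.
    print(norm_weighted_attention([[0.5, 0.5]], [[3.0, 4.0], [0.0, 0.0]]))
    # Toy gradient of f(x) = sum(x_i ** 2), standing in for a real model.
    print(smooth_gradient(lambda x: [2 * xi for xi in x], [1.0, -2.0]))
```

In a real Transformer the gradient would come from automatic differentiation rather than a hand-written `grad_fn`, and the abstract's additional step of guiding the label-specific signal with the attention gradient is not shown here.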

EEG Decoding for Datasets with Heterogenous Electrode Configurations using Transfer Learning Graph Neural Networks

Jun 20, 2023

Abstract: Brain-Machine Interfacing (BMI) has greatly benefited from adopting machine learning methods for feature learning that require extensive data for training, which are often unavailable from a single dataset. Yet, it is difficult to combine data across labs or even data within the same lab collected over the years due to the variation in recording equipment and electrode layouts resulting in shifts in data distribution, changes in data dimensionality, and altered identity of data dimensions. Our objective is to overcome this limitation and learn from many different and diverse datasets across labs with different experimental protocols. To tackle the domain adaptation problem, we developed a novel machine learning framework combining graph neural networks (GNNs) and transfer learning methodologies for non-invasive Motor Imagery (MI) EEG decoding, as an example of BMI. Empirically, we focus on the challenges of learning from EEG data with different electrode layouts and varying numbers of electrodes. We utilise three MI EEG databases collected using very different numbers of EEG sensors (from 22 channels to 64) and layouts (from custom layouts to 10-20). Our model achieved the highest accuracy with lower standard deviations on the testing datasets. This indicates that the GNN-based transfer learning framework can effectively aggregate knowledge from multiple datasets with different electrode layouts, leading to improved generalization in subject-independent MI EEG classification. The findings of this study have important implications for Brain-Computer-Interface (BCI) research, as they highlight a promising method for overcoming the limitations posed by non-unified experimental setups. By enabling the integration of diverse datasets with varying electrode layouts, our proposed approach can help advance the development and application of BMI technologies.
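One reason a graph-based model can accept recordings with different electrode counts is that its learned weights act on per-node features rather than on a fixed channel dimension. The sketch below illustrates that idea only; it is not the framework from the paper. The distance-threshold adjacency, the `radius` parameter, the single mean-aggregation layer, the mean pooling, and every coordinate, feature, and weight value are assumptions chosen for illustration.

```python
import math


def build_adjacency(coords, radius):
    """Connect electrodes within `radius` of each other (self-loops included),
    then row-normalise so each row sums to 1."""
    n = len(coords)
    adj = [[1.0 if math.dist(coords[i], coords[j]) <= radius else 0.0
            for j in range(n)] for i in range(n)]
    return [[a / sum(row) for a in row] for row in adj]


def graph_layer(adj, feats, weight):
    """One message-passing step: average neighbour features, apply a shared
    linear map of shape (in_dim, out_dim), then ReLU."""
    n, in_dim, out_dim = len(feats), len(weight), len(weight[0])
    agg = [[sum(adj[i][j] * feats[j][k] for j in range(n))
            for k in range(in_dim)] for i in range(n)]
    return [[max(0.0, sum(row[k] * weight[k][m] for k in range(in_dim)))
             for m in range(out_dim)] for row in agg]


def mean_pool(node_feats):
    """Average over nodes, giving a vector whose size ignores the node count."""
    n = len(node_feats)
    return [sum(col) / n for col in zip(*node_feats)]


def embed(coords, feats, weight, radius=1.5):
    """Hypothetical end-to-end helper: layout + per-electrode features -> embedding."""
    return mean_pool(graph_layer(build_adjacency(coords, radius), feats, weight))


if __name__ == "__main__":
    weight = [[0.5, -0.2, 0.1], [0.3, 0.4, -0.6]]  # shared across layouts
    small = embed([(0, 0), (1, 0), (0, 1)], [[1.0, 0.2], [0.5, 0.1], [0.3, 0.9]], weight)
    large = embed([(0, 0), (1, 0), (0, 1), (1, 1), (2, 0)],
                  [[1.0, 0.2], [0.5, 0.1], [0.3, 0.9], [0.7, 0.4], [0.2, 0.6]], weight)
    print(len(small), len(large))  # both embeddings have the same length
```

Because `weight` has shape (features in, features out) and pooling averages over nodes, the same parameters apply to a 3-electrode and a 5-electrode layout, which is the property that makes pooling data across differently equipped labs possible in principle.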
