Jinming Ma

An Efficient and Multi-Modal Navigation System with One-Step World Model

Jan 18, 2026

Abstract: Navigation is a fundamental capability for mobile robots. While the current trend is to use learning-based approaches to replace traditional geometry-based methods, existing end-to-end learning-based policies often struggle with 3D spatial reasoning and lack a comprehensive understanding of physical world dynamics. Integrating world models, which predict future observations conditioned on given actions, with iterative optimization planning offers a promising solution due to their capacity for imagination and flexibility. However, current navigation world models, typically built on pure transformer architectures, often rely on multi-step diffusion processes and autoregressive frame-by-frame generation. These mechanisms result in prohibitive computational latency, rendering real-time deployment impossible. To address this bottleneck, we propose a lightweight navigation world model that adopts a one-step generation paradigm and a 3D U-Net backbone equipped with efficient spatial-temporal attention. This design drastically reduces inference latency, enabling high-frequency control while achieving superior predictive performance. We also integrate this model into an optimization-based planning framework utilizing anchor-based initialization to handle multi-modal goal navigation tasks. Extensive closed-loop experiments in both simulation and real-world environments demonstrate our system's superior efficiency and robustness compared to state-of-the-art baselines.

Learning Diverse Skills for Behavior Models with Mixture of Experts

Jan 18, 2026

Abstract: Imitation learning has demonstrated strong performance in robotic manipulation by learning from large-scale human demonstrations. While existing models excel at single-task learning, their performance is observed to degrade in practical multi-task settings, where interference across tasks leads to an averaging effect. To address this issue, we propose to learn diverse skills for behavior models with Mixture of Experts, referred to as Di-BM. Di-BM associates each expert with a distinct observation distribution, enabling experts to specialize in sub-regions of the observation space. Specifically, we employ energy-based models to represent expert-specific observation distributions and jointly train them alongside the corresponding action models. Our approach is plug-and-play and can be seamlessly integrated into standard imitation learning methods. Extensive experiments on multiple real-world robotic manipulation tasks demonstrate that Di-BM significantly outperforms state-of-the-art baselines. Moreover, fine-tuning the pretrained Di-BM on novel tasks exhibits superior data efficiency and the reusability of expert-learned knowledge. Code is available at https://github.com/robotnav-bot/Di-BM.
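The idea of letting experts specialize by observation can be illustrated with a small sketch. It assumes, purely for illustration, that each expert's energy-based model returns a scalar energy for the current observation and that routing weights are a softmax over negative energies; the abstract does not state how Di-BM combines experts, and the function name `expert_weights` is hypothetical.

```python
import math

def expert_weights(energies):
    """Turn per-expert energies into routing weights (lower energy -> higher weight).

    Assumption: weights are softmax(-energy). This is a generic
    energy-based gating rule, not necessarily the one used by Di-BM.
    """
    lowest = min(energies)  # shift by the minimum for numerical stability
    unnormalized = [math.exp(-(e - lowest)) for e in energies]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]

# Hypothetical energies that three experts assign to one observation.
weights = expert_weights([0.2, 1.5, 3.0])
```

Under this assumption, the expert whose observation model assigns the lowest energy dominates the action prediction for that observation.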

Safety Constrained Multi-Agent Reinforcement Learning for Active Voltage Control

May 14, 2024

Abstract: Active voltage control presents a promising avenue for relieving power congestion and enhancing voltage quality, taking advantage of the distributed controllable generators in the power network, such as rooftop photovoltaics. While Multi-Agent Reinforcement Learning (MARL) has emerged as a compelling approach to address this challenge, existing MARL approaches tend to overlook the constrained optimization nature of this problem, failing to guarantee safety constraints. In this paper, we formalize the active voltage control problem as a constrained Markov game and propose a safety-constrained MARL algorithm. We extend the primal-dual optimization RL method to multi-agent settings, and augment it with a novel approach of double safety estimation to learn the policy and to update the Lagrange multiplier. In addition, we propose different cost functions and investigate their influence on the behavior of our constrained MARL method. We evaluate our approach in the power distribution network simulation environment with real-world scale scenarios. Experimental results demonstrate the effectiveness of the proposed method compared with state-of-the-art MARL methods.
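As a rough illustration of the primal-dual idea mentioned above, the sketch below shows a generic Lagrangian scheme: the policy is trained on a cost-penalized reward while the multiplier follows projected dual ascent. The function names, the learning rate, and the cost limit are assumptions for illustration, and the paper's double safety estimation is not reproduced here.

```python
def penalized_reward(reward, cost, multiplier):
    """Primal side: the policy optimizes reward minus the weighted safety cost."""
    return reward - multiplier * cost

def update_multiplier(multiplier, avg_cost, cost_limit, lr=0.1):
    """Dual side: projected gradient ascent on the Lagrange multiplier.

    The multiplier grows while the average cost exceeds the limit and
    shrinks otherwise, clipped at zero so it stays non-negative.
    """
    return max(0.0, multiplier + lr * (avg_cost - cost_limit))

# Hypothetical numbers: the constraint is violated, so the penalty increases.
lam = update_multiplier(0.0, avg_cost=2.0, cost_limit=1.0, lr=0.5)
```

A larger multiplier makes unsafe actions less attractive in the next policy update, which is how such schemes trade reward against constraint violation.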

Improving Offline Reinforcement Learning with Inaccurate Simulators

May 07, 2024

Abstract: Offline reinforcement learning (RL) provides a promising approach to avoid costly online interaction with the real environment. However, the performance of offline RL depends heavily on the quality of the datasets, which may cause extrapolation error in the learning process. In many robotic applications, an inaccurate simulator is often available. However, data collected from the inaccurate simulator cannot be used directly in offline RL due to the well-known exploration-exploitation dilemma and the dynamics gap between the inaccurate simulation and the real environment. To address these issues, we propose a novel approach to combine the offline dataset and the inaccurate simulation data more effectively. Specifically, we pre-train a generative adversarial network (GAN) model to fit the state distribution of the offline dataset. Given this, we collect data from the inaccurate simulator starting from the distribution provided by the generator and reweight the simulated data using the discriminator. Our experimental results in the D4RL benchmark and a real-world manipulation task confirm that our method can benefit more from both the inaccurate simulator and limited offline datasets to achieve better performance than state-of-the-art methods.
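The reweighting step can be sketched as follows. The abstract does not give the weighting formula, so this example assumes the common density-ratio estimate D / (1 - D), where D is the discriminator's probability that a sample comes from the offline dataset; the clipping bound `max_weight` and the function name are likewise hypothetical.

```python
def discriminator_weights(d_scores, eps=1e-6, max_weight=10.0):
    """Map discriminator outputs in [0, 1] to importance weights.

    Assumption: weight = D / (1 - D), a standard density-ratio estimate,
    clipped to max_weight to limit variance. Simulated samples that look
    like offline data (D close to 1) receive larger weights.
    """
    weights = []
    for d in d_scores:
        d = min(max(d, eps), 1.0 - eps)  # keep the ratio finite
        weights.append(min(d / (1.0 - d), max_weight))
    return weights

# Hypothetical discriminator scores for three simulated transitions.
weights = discriminator_weights([0.2, 0.5, 0.8])
```

These weights would then scale each simulated transition's contribution to the offline RL loss.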

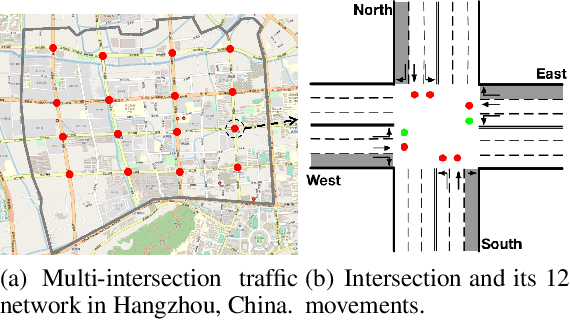

Real-Time Network-Level Traffic Signal Control: An Explicit Multiagent Coordination Method

Jun 15, 2023

Abstract: Efficient traffic signal control (TSC) has been one of the most useful ways of reducing urban road congestion. The key challenges of TSC include 1) the need for real-time signal decisions, 2) the complexity of traffic dynamics, and 3) network-level coordination. Recent efforts that apply reinforcement learning (RL) methods can query policies by mapping the traffic state to the signal decision in real time; however, they are inadequate for unexpected traffic flows. By observing real traffic information, online planning methods can compute the signal decisions in a responsive manner. We propose an explicit multiagent coordination (EMC)-based online planning method that can satisfy adaptive, real-time and network-level TSC. By multiagent, we model each intersection as an autonomous agent, and the coordination efficiency is modeled by a cost (i.e., congestion index) function between neighboring intersections. By network-level coordination, each agent exchanges messages about the cost function with its neighbors in a fully decentralized manner. By real-time, the message passing procedure can be interrupted at any time when the real-time limit is reached, and agents select the optimal signal decisions according to the current messages. Moreover, we prove that our EMC method can guarantee network stability by borrowing ideas from the transportation domain. Finally, we test our EMC method on both synthetic and real road network datasets. Experimental results are encouraging: compared to RL and conventional transportation baselines, our EMC method performs reasonably well in terms of adapting to real-time traffic dynamics, minimizing vehicle travel time, and scaling to city-scale road networks.
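The anytime, neighbor-to-neighbor coordination described above can be sketched with a min-sum style message-passing loop. This is an assumption for illustration: the abstract does not name the exact message update, and `min_sum`, `pair_cost`, and the toy cost tables below are hypothetical. An iteration budget stands in for the real-time limit so the example is deterministic.

```python
def min_sum(neighbors, pair_cost, n_actions, max_iters):
    """Anytime min-sum over pairwise costs between neighboring agents.

    msgs[(i, j)][a_j] is agent i's current estimate of the cost incurred
    on its side of the network if neighbor j picks action a_j. Stopping
    after any number of iterations still yields a decision per agent.
    """
    msgs = {(i, j): [0.0] * n_actions for i in neighbors for j in neighbors[i]}
    for _ in range(max_iters):
        new_msgs = {}
        for (i, j) in msgs:
            new_msgs[(i, j)] = [
                min(
                    pair_cost(i, j, ai, aj)
                    + sum(msgs[(k, i)][ai] for k in neighbors[i] if k != j)
                    for ai in range(n_actions)
                )
                for aj in range(n_actions)
            ]
        msgs = new_msgs
    decisions = {}
    for i in neighbors:
        totals = [sum(msgs[(k, i)][a] for k in neighbors[i]) for a in range(n_actions)]
        decisions[i] = totals.index(min(totals))  # cheapest action given current messages
    return decisions

# Toy chain of three intersections (0 - 1 - 2) with two signal phases each.
neighbors = {0: [1], 1: [0, 2], 2: [1]}
edge_cost = {(0, 1): [[2, 0], [1, 3]], (1, 2): [[1, 2], [3, 0]]}

def pair_cost(i, j, ai, aj):
    # Look up the shared edge table regardless of message direction.
    if (i, j) in edge_cost:
        return edge_cost[(i, j)][ai][aj]
    return edge_cost[(j, i)][aj][ai]

decisions = min_sum(neighbors, pair_cost, n_actions=2, max_iters=3)
```

On this toy chain the loop settles on phases 0, 1, 1 for intersections 0, 1, 2, which is the joint assignment with zero total cost in the tables above.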

Effective Multimodal Reinforcement Learning with Modality Alignment and Importance Enhancement

Feb 18, 2023

Abstract: Many real-world applications require an agent to make robust and deliberate decisions with multimodal information (e.g., robots with multi-sensory inputs). However, it is very challenging to train the agent via reinforcement learning (RL) due to the heterogeneity and dynamic importance of different modalities. Specifically, we observe that these issues make it difficult for conventional RL methods to learn a useful state representation in end-to-end training with multimodal information. To address this, we propose a novel multimodal RL approach that performs multimodal alignment and importance enhancement according to the modalities' similarity and importance, respectively, with respect to the RL task. By doing so, we are able to learn an effective state representation and consequently improve the RL training process. We test our approach on several multimodal RL domains, showing that it outperforms state-of-the-art methods in terms of learning speed and policy quality.

Feudal Multi-Agent Reinforcement Learning with Adaptive Network Partition for Traffic Signal Control

May 27, 2022

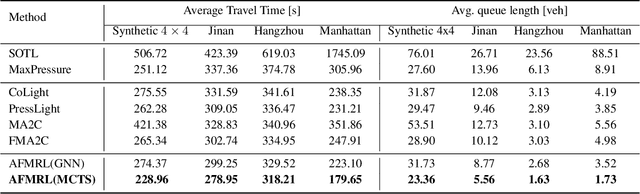

Abstract: Multi-agent reinforcement learning (MARL) has been applied to, and shown great potential in, multi-intersection traffic signal control, where multiple agents, one for each intersection, must cooperate to optimize traffic flow. To encourage global cooperation, previous work partitions the traffic network into several regions and learns policies for agents in a feudal structure. However, a static network partition fails to adapt to dynamic traffic flow, which changes frequently over time. To address this, we propose a novel feudal MARL approach with adaptive network partition. Specifically, we first partition the network into several regions according to the traffic flow. To do this, we propose two approaches: one directly uses a graph neural network (GNN) to generate the network partition, and the other uses Monte-Carlo tree search (MCTS) to find the best partition with criteria computed by the GNN. Then, we design a variant of Qmix using GNN to handle inputs of varying dimension, given by the dynamic network partition. Finally, we use a feudal hierarchy to manage agents in each partition and promote global cooperation. By doing so, agents are able to adapt to the traffic flow as required in practice. We empirically evaluate our method both in a synthetic traffic grid and in real-world traffic networks of three cities that are widely used in the literature. Our experimental results confirm that our method can achieve better performance, in terms of average travel time and queue length, than several leading methods for traffic signal control.
