Jingyun Hu

Frank

Traffic Sign Detection and Recognition for Autonomous Driving in Virtual Simulation Environment

Oct 27, 2019

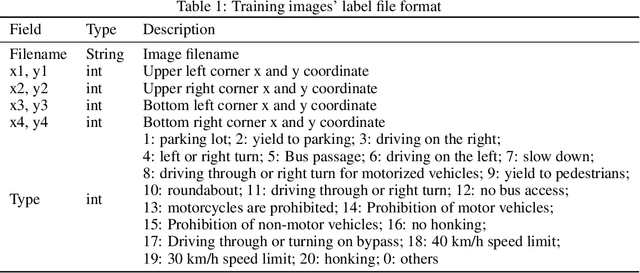

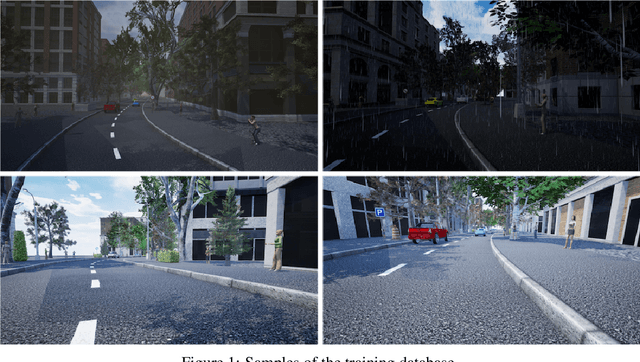

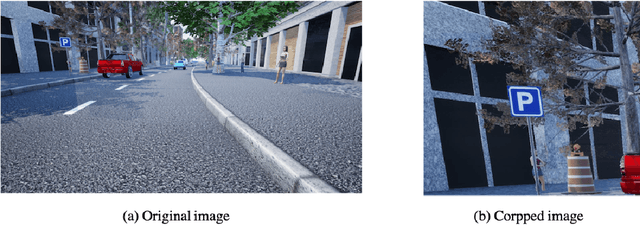

Abstract: This study developed a traffic sign detection and recognition algorithm based on RetinaNet. Two main aspects were revised to improve the detection of traffic signs: image cropping, to address the problem of small traffic signs in large images; and the use of more anchors at various scales, to detect traffic signs of different sizes and shapes. The proposed algorithm was trained and tested on a series of autonomous driving front-view images from a virtual simulation environment. Results show that the algorithm performed extremely well under good illumination and weather conditions. Its drawbacks are that it sometimes failed to detect objects under bad weather conditions such as snow, and that it failed to distinguish speed limit signs with different limit values.
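The image-cropping idea can be illustrated with a minimal sketch. The abstract does not state the crop size, the overlap, or how crops were chosen, so the 512-pixel tile, the 64-pixel overlap, and the helper names `tile_starts`, `crop_windows`, and `to_full_image` below are all assumptions made for illustration, not the study's implementation.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def tile_starts(length: int, tile: int, stride: int) -> List[int]:
    """Start offsets along one axis so that the tiles cover the full length."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile + 1, stride))
    if starts[-1] != length - tile:
        starts.append(length - tile)  # extra tile flush with the far edge
    return starts


def crop_windows(width: int, height: int, tile: int = 512, overlap: int = 64) -> List[Box]:
    """Overlapping crop windows covering a width x height image (assumed sizes)."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be non-negative and smaller than tile")
    stride = tile - overlap
    return [
        (x, y, min(x + tile, width), min(y + tile, height))
        for y in tile_starts(height, tile, stride)
        for x in tile_starts(width, tile, stride)
    ]


def to_full_image(box: Box, window: Box) -> Box:
    """Shift a box detected inside a crop back into full-image coordinates."""
    dx, dy = window[0], window[1]
    return (box[0] + dx, box[1] + dy, box[2] + dx, box[3] + dy)
```

Each crop would be passed to the detector separately, and `to_full_image` would map its detections back to the original frame. The overlap is there so that a sign cut by one tile border is likely to appear whole in a neighbouring tile.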

Personalized Context-Aware Multi-Modal Transportation Recommendation

Oct 13, 2019

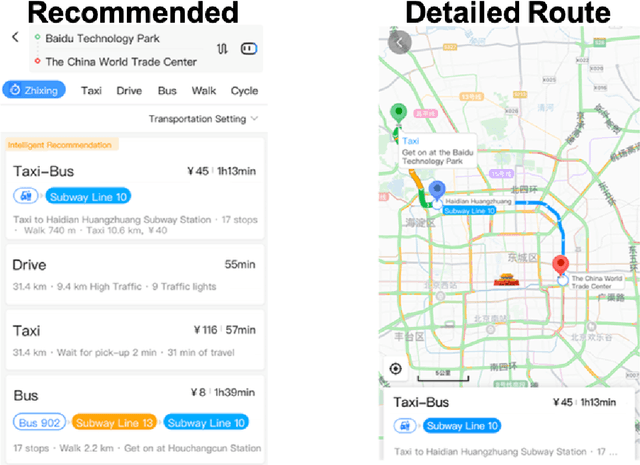

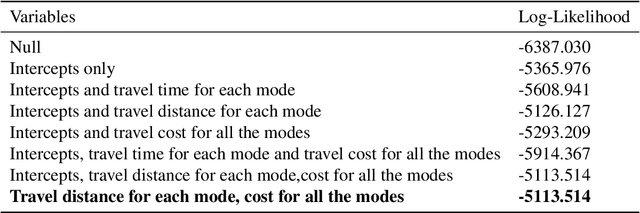

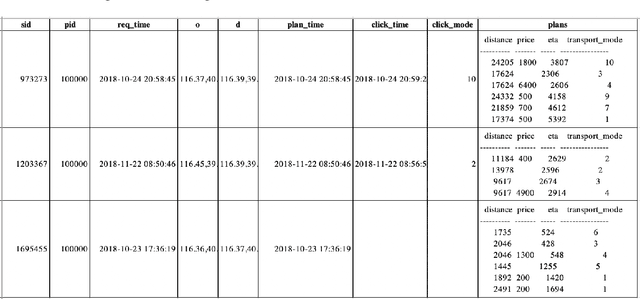

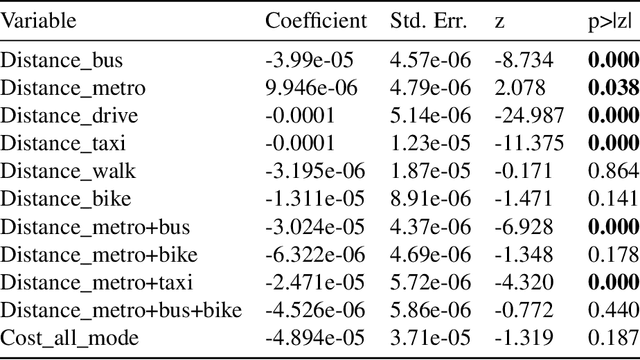

Abstract: This study proposes to find the most appropriate transport modes with awareness of user preferences (e.g., costs, times) and trip characteristics (e.g., purpose, distance). The work was based on real-life trips obtained from a map application. Several methods were tried, including gradient boosting tree, learning to rank, multinomial logit model, automated machine learning, random forest, and shallow neural network. For some methods, feature selection and over-sampling techniques were also tried. The results show that the best-performing method is a gradient boosting tree model with the synthetic minority over-sampling technique (SMOTE). In addition, results of the multinomial logit model show that (1) an increase in travel cost would decrease the utility of all the transportation modes; and (2) people are less sensitive to travel distance for the metro mode or a multi-modal option that contains metro, i.e., compared with other modes, people would be more willing to tolerate long-distance metro trips. This indicates that metro lines might be a good candidate for large cities.
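The two multinomial logit findings can be illustrated with a small sketch of how such a model turns utilities into choice probabilities. The coefficients below are invented for illustration and are not the study's estimates; the mode names and the helpers `utility` and `choice_probabilities` are likewise hypothetical. The only properties taken from the abstract are that the cost coefficient is negative for every mode and that the distance sensitivity is weakest for metro.

```python
import math
from typing import Dict

# Hypothetical coefficients, for illustration only (not estimated from data).
BETA_COST = -0.05                       # same negative cost effect for all modes
BETA_DISTANCE = {"bus": -0.10, "car": -0.08, "metro": -0.03}  # metro least sensitive


def utility(mode: str, cost: float, distance_km: float) -> float:
    """Linear utility of one mode for a trip with the given cost and distance."""
    return BETA_COST * cost + BETA_DISTANCE[mode] * distance_km


def choice_probabilities(utilities: Dict[str, float]) -> Dict[str, float]:
    """Multinomial logit: P(mode) = exp(U_mode) / sum over modes of exp(U)."""
    top = max(utilities.values())       # subtract the max for numerical stability
    weights = {m: math.exp(u - top) for m, u in utilities.items()}
    total = sum(weights.values())
    return {m: w / total for m, w in weights.items()}


short_trip = choice_probabilities({m: utility(m, 5.0, 2.0) for m in BETA_DISTANCE})
long_trip = choice_probabilities({m: utility(m, 5.0, 30.0) for m in BETA_DISTANCE})
```

Under these assumed coefficients, the metro share is higher in `long_trip` than in `short_trip`, which is the pattern the abstract describes as a greater willingness to tolerate long-distance metro trips.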

Safe, Efficient, and Comfortable Velocity Control based on Reinforcement Learning for Autonomous Driving

Jan 29, 2019

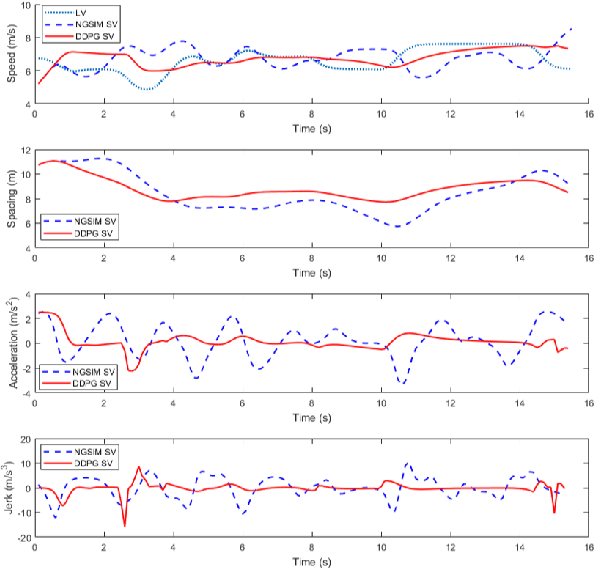

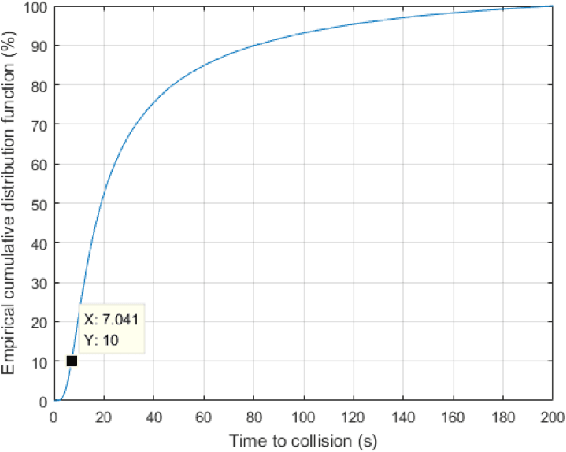

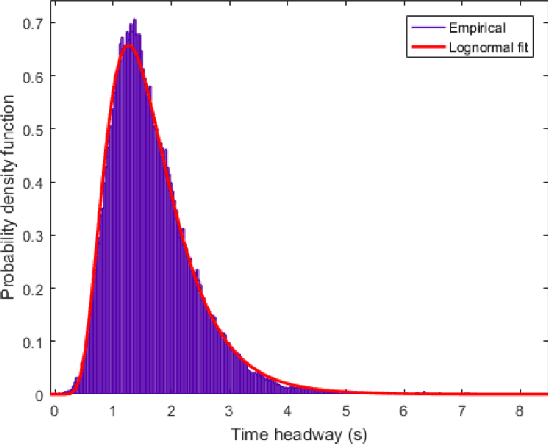

Abstract: A model for velocity control during car following was proposed based on deep reinforcement learning (RL). To fulfil the multiple objectives of car following, a reward function reflecting driving safety, efficiency, and comfort was constructed. With the reward function, the RL agent learns to control vehicle speed in a fashion that maximizes cumulative rewards, through trial and error in the simulation environment. A total of 1,341 car-following events extracted from the Next Generation Simulation (NGSIM) dataset were used to train the model. Car-following behavior produced by the model was compared with that observed in the empirical NGSIM data, to demonstrate the model's ability to follow a lead vehicle safely, efficiently, and comfortably. Results show that the model demonstrates the capability of safe, efficient, and comfortable velocity control in that it 1) has a smaller percentage (8%) of dangerous minimum time-to-collision values (< 5 s) than human drivers in the NGSIM data (35%); 2) can maintain efficient and safe headways in the range of 1 s to 2 s; and 3) can follow the lead vehicle comfortably with smooth acceleration. The results indicate that reinforcement learning methods could contribute to the development of autonomous driving systems.
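A reward that combines safety, efficiency, and comfort might look like the sketch below. The abstract does not give the reward's functional form, so the step-shaped terms, the jerk penalty, the weight, and the function names are assumptions for illustration only. The 5 s time-to-collision threshold and the 1 s to 2 s headway band are borrowed from the evaluation criteria quoted in the abstract, not from the actual reward definition.

```python
import math


def time_to_collision(gap_m: float, v_follow: float, v_lead: float) -> float:
    """Seconds until contact if both speeds stay constant; inf if not closing."""
    closing_speed = v_follow - v_lead
    return gap_m / closing_speed if closing_speed > 0 else math.inf


def time_headway(gap_m: float, v_follow: float) -> float:
    """Seconds the follower needs to cover the current gap at its own speed."""
    return gap_m / v_follow if v_follow > 0 else math.inf


def step_reward(gap_m: float, v_follow: float, v_lead: float, jerk: float,
                comfort_weight: float = 0.1) -> float:
    """Hypothetical per-step reward: safety + efficiency + comfort terms."""
    # Safety: penalize a dangerous time to collision (assumed 5 s threshold).
    safety = -1.0 if time_to_collision(gap_m, v_follow, v_lead) < 5.0 else 0.0
    # Efficiency: reward headways inside the assumed 1 s to 2 s band.
    efficiency = 1.0 if 1.0 <= time_headway(gap_m, v_follow) <= 2.0 else 0.0
    # Comfort: penalize squared jerk (rate of change of acceleration, m/s^3).
    comfort = -comfort_weight * jerk ** 2
    return safety + efficiency + comfort
```

For instance, `step_reward(30.0, 20.0, 20.0, 0.0)` describes steady following at a 1.5 s headway and scores higher than `step_reward(10.0, 20.0, 10.0, 2.0)`, where the follower is closing quickly on a short gap while changing its acceleration abruptly.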
