Jieping Xu

COCO-CN for Cross-Lingual Image Tagging, Captioning and Retrieval

May 22, 2018

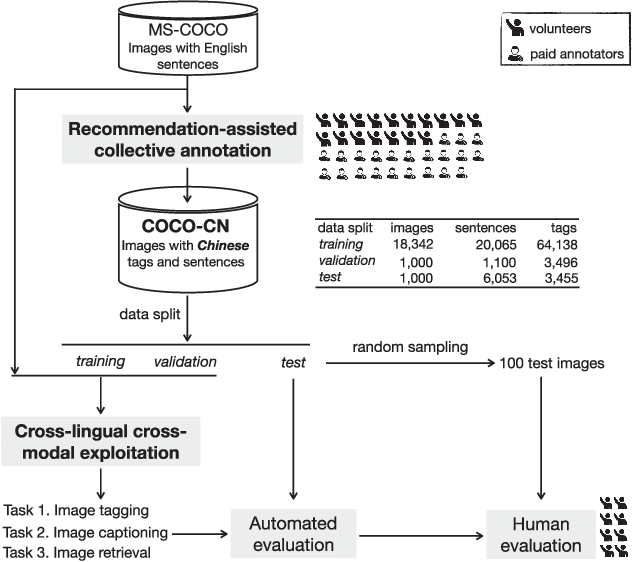

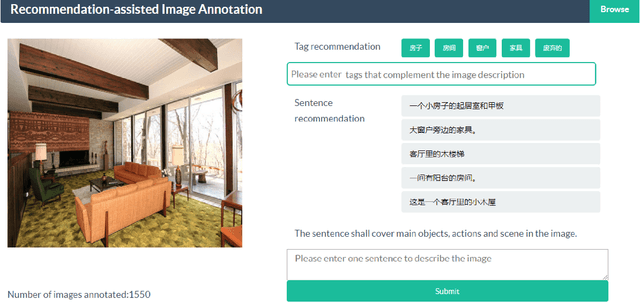

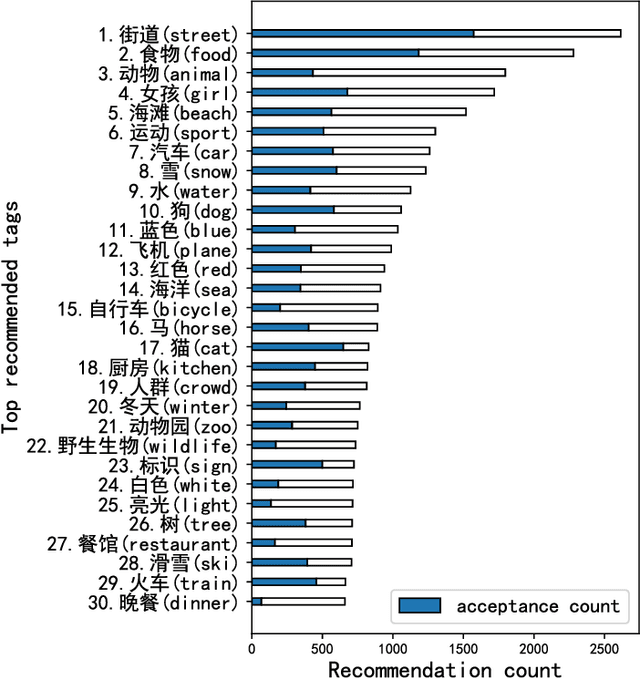

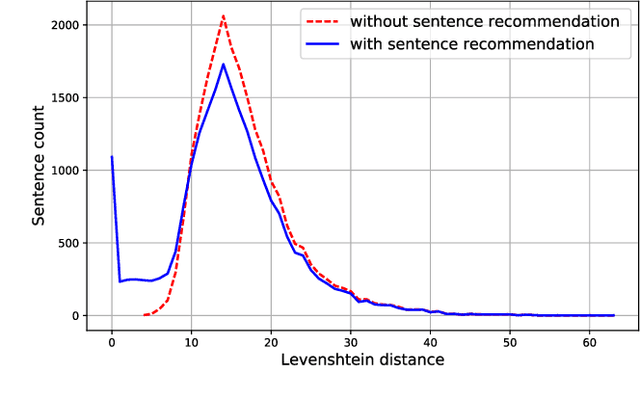

Abstract: This paper contributes to cross-lingual image annotation and retrieval in terms of data and methods. We propose COCO-CN, a novel dataset enriching MS-COCO with manually written Chinese sentences and tags. For more effective annotation acquisition, we develop a recommendation-assisted collective annotation system, which automatically provides an annotator with several tags and sentences deemed relevant to the pictorial content. With 20,342 images annotated with 27,218 Chinese sentences and 70,993 tags, COCO-CN is currently the largest Chinese-English dataset applicable to cross-lingual image tagging, captioning and retrieval. We develop methods per task for effectively learning from cross-lingual resources. Extensive experiments on the multiple tasks justify the viability of our dataset and methods.

Detecting Violence in Video using Subclasses

Apr 27, 2016

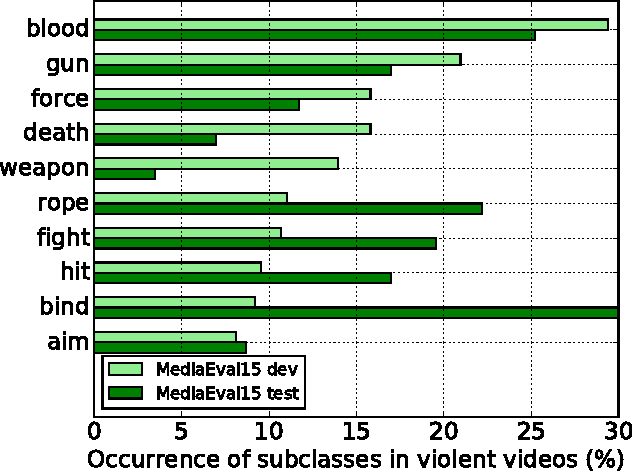

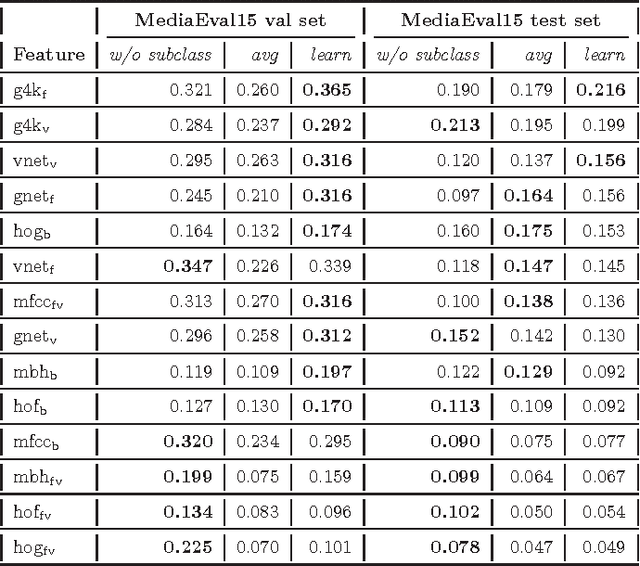

Abstract: This paper attacks the challenging problem of violence detection in videos. Different from existing works focusing on combining multi-modal features, we go one step further by adding and exploiting subclasses visually related to violence. We enrich the MediaEval 2015 violence dataset by manually labeling violence videos with respect to the subclasses. Such fine-grained annotations not only help understand what has impeded previous efforts on learning to fuse the multi-modal features, but also enhance the generalization ability of the learned fusion to novel test data. The new subclass-based solution, with AP of 0.303 and P100 of 0.55 on the MediaEval 2015 test set, outperforms several state-of-the-art alternatives. Notice that our solution does not require fine-grained annotations on the test set, so it can be directly applied to novel and fully unlabeled videos. Interestingly, our study shows that motion-related features, though an essential part of previous systems, are dispensable.
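For readers unfamiliar with the two reported metrics, the following is a minimal sketch of how they are commonly computed from a ranked list of test videos. It reads P100 as precision among the top 100 ranked videos, which is an assumption, and it uses the textbook definition of average precision (AP); the official MediaEval evaluation tool may differ in details. The function names are illustrative and do not come from the paper.

```python
from typing import Sequence


def precision_at_k(ranked_labels: Sequence[int], k: int) -> float:
    """Fraction of the top-k ranked items that are relevant (label 1)."""
    top = ranked_labels[:k]
    return sum(top) / k


def average_precision(ranked_labels: Sequence[int]) -> float:
    """Mean of the precision values at each rank holding a relevant item.

    Assumes ranked_labels covers the whole test set, so every relevant
    item appears somewhere in the ranking.
    """
    hits = 0
    total = 0.0
    for rank, label in enumerate(ranked_labels, start=1):
        if label:
            hits += 1
            total += hits / rank
    return total / hits if hits else 0.0


# Toy ranking: 1 = violent video, 0 = non-violent, sorted by detector score.
toy_ranking = [1, 0, 1, 0]
p_at_2 = precision_at_k(toy_ranking, 2)
ap = average_precision(toy_ranking)
```

On the toy ranking, one of the top two videos is relevant, and the relevant videos sit at ranks 1 and 3, so AP averages the precisions 1/1 and 2/3.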

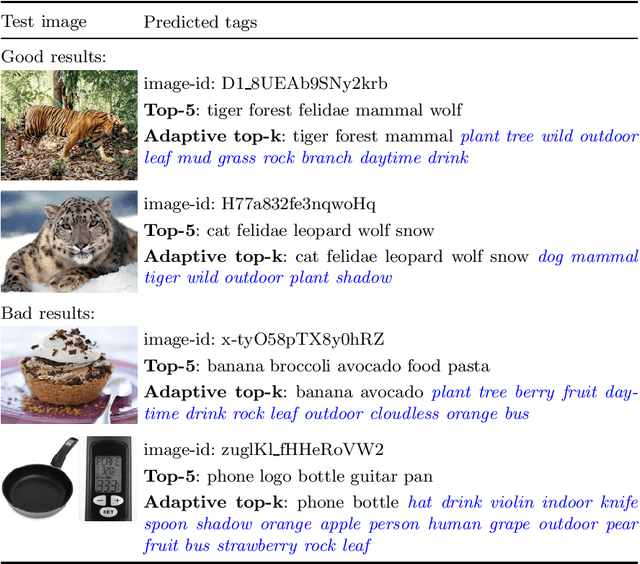

Adaptive Tag Selection for Image Annotation

Sep 17, 2014

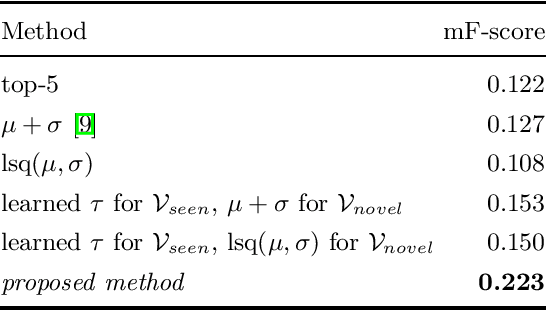

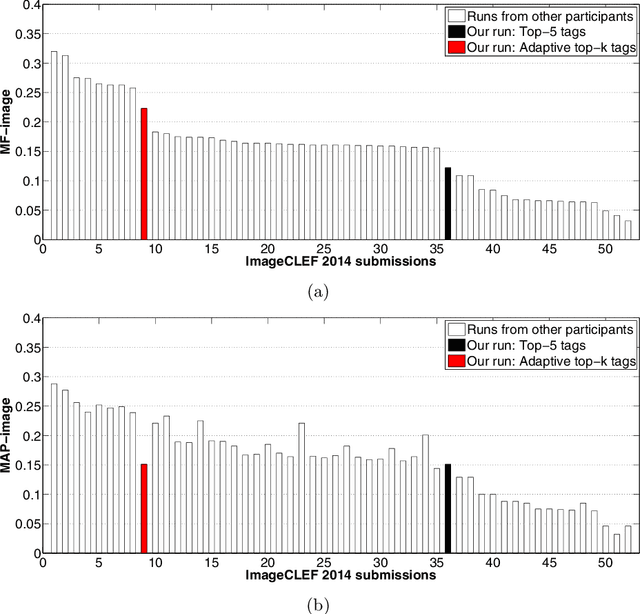

Abstract: Not all tags are relevant to an image, and the number of relevant tags is image-dependent. Although many methods have been proposed for image auto-annotation, the question of how to determine the number of tags to be selected per image remains open. The main challenge is that for a large tag vocabulary, there is often a lack of ground truth data for acquiring optimal cutoff thresholds per tag. In contrast to previous works that pre-specify the number of tags to be selected, we propose in this paper adaptive tag selection. The key insight is to divide the vocabulary into two disjoint subsets, namely a seen set consisting of tags having ground truth available for optimizing their thresholds and a novel set consisting of tags without any ground truth. Such a division allows us to estimate how many tags should be selected from the novel set according to the tags that have been selected from the seen set. The effectiveness of the proposed method is justified by our participation in the ImageCLEF 2014 image annotation task. On a set of 2,065 test images with ground truth available for 207 tags, the benchmark evaluation shows that compared to the popular top-$k$ strategy, which obtains an F-score of 0.122, adaptive tag selection achieves a higher F-score of 0.223. Moreover, by treating the underlying image annotation system as a black box, the new method can be used as an easy plug-in to boost the performance of existing systems.
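The seen/novel division can be illustrated with a small sketch. The abstract does not specify how the number of novel tags is estimated, so the rule below (select the same fraction of tags from the novel set as was selected from the seen set) is purely an assumption made for illustration, not the authors' estimator. The function name, the scores, and the thresholds are likewise hypothetical.

```python
from typing import Dict, List


def adaptive_tag_selection(
    scores: Dict[str, float],
    seen_thresholds: Dict[str, float],
) -> List[str]:
    """Select tags for one image.

    scores: relevance score per tag from any black-box annotation system.
    seen_thresholds: per-tag cutoff for tags in the seen set (hypothetical).
    Tags without an entry in seen_thresholds form the novel set.
    """
    seen = [t for t in scores if t in seen_thresholds]
    novel = [t for t in scores if t not in seen_thresholds]

    # Seen set: keep tags whose score reaches their tuned threshold.
    selected_seen = [t for t in seen if scores[t] >= seen_thresholds[t]]

    # Novel set: ASSUMPTION, select the same fraction as in the seen set,
    # taking the highest-scoring novel tags first.
    ratio = len(selected_seen) / len(seen) if seen else 0.0
    k_novel = round(ratio * len(novel))
    ranked_novel = sorted(novel, key=lambda t: scores[t], reverse=True)
    return selected_seen + ranked_novel[:k_novel]


# Toy example: two seen tags with thresholds, four novel tags without.
toy_scores = {"dog": 0.9, "cat": 0.2, "grass": 0.8,
              "sky": 0.1, "ball": 0.7, "car": 0.05}
toy_thresholds = {"dog": 0.5, "cat": 0.5}
selected = adaptive_tag_selection(toy_scores, toy_thresholds)
```

Because the function only consumes per-tag scores, it can sit on top of any existing annotation system, which matches the black-box, plug-in use described in the abstract.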