Jiayin Chen

VTAO-BiManip: Masked Visual-Tactile-Action Pre-training with Object Understanding for Bimanual Dexterous Manipulation

Jan 07, 2025

Abstract: Bimanual dexterous manipulation remains a significant challenge in robotics due to the high DoFs of each hand and the need to coordinate them. Existing single-hand manipulation techniques often leverage human demonstrations to guide RL methods but fail to generalize to complex bimanual tasks involving multiple sub-skills. In this paper, we introduce VTAO-BiManip, a novel framework that combines visual-tactile-action pretraining with object understanding to facilitate curriculum RL and enable human-like bimanual manipulation. We improve prior learning by incorporating hand motion data, which provides more effective guidance for dual-hand coordination than binary tactile feedback. Our pretraining model predicts future actions as well as object pose and size from masked multimodal inputs, facilitating cross-modal regularization. To address the multi-skill learning challenge, we introduce a two-stage curriculum RL approach that stabilizes training. We evaluate our method on a bottle-cap unscrewing task, demonstrating its effectiveness in both simulated and real-world environments. Our approach achieves a success rate that surpasses existing visual-tactile pretraining methods by over 20%.

Resolving Task Confusion in Dynamic Expansion Architectures for Class Incremental Learning

Jan 03, 2023

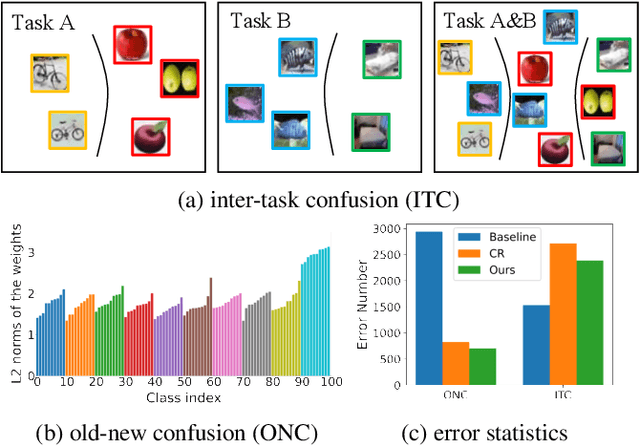

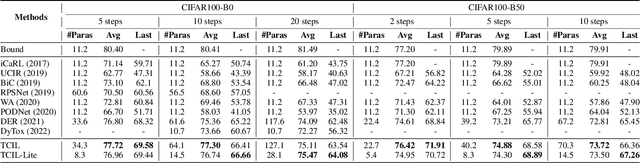

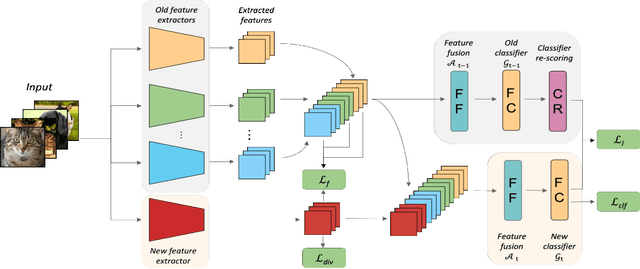

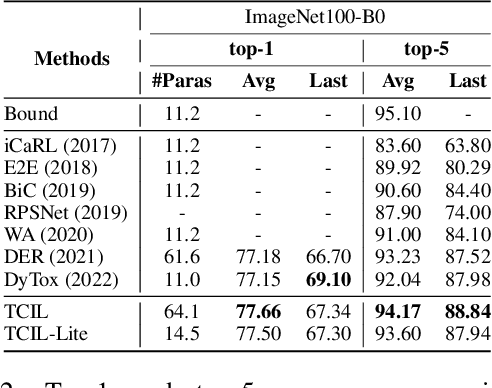

Abstract: The dynamic expansion architecture is becoming popular in class incremental learning, mainly due to its advantages in alleviating catastrophic forgetting. However, task confusion is not well assessed within this framework: the discrepancy between classes of different tasks is not well learned (inter-task confusion, ITC), and a certain priority is still given to the latest class batch (old-new confusion, ONC). We empirically validate the side effects of the two types of confusion. We also propose a novel solution called Task Correlated Incremental Learning (TCIL) to encourage discriminative and fair feature utilization across tasks. TCIL performs multi-level knowledge distillation to propagate knowledge learned from old tasks to the new one. It establishes information flow paths at both the feature and logit levels, making the learning aware of old classes. In addition, an attention mechanism and classifier re-scoring are applied to generate fairer classification scores. We conduct extensive experiments on the CIFAR100 and ImageNet100 datasets. The results demonstrate that TCIL consistently achieves state-of-the-art accuracy. It mitigates both ITC and ONC, and shows advantages in combating catastrophic forgetting even when no rehearsal memory is reserved.
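
To make the idea of logit-level distillation concrete, the following is a minimal sketch of the standard temperature-softened distillation loss between an old model's logits and the new model's logits over the old classes. It is not TCIL's multi-level scheme, which the abstract does not specify; the function names and the temperature value are assumptions chosen for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def logit_distillation_loss(old_logits, new_logits, temperature=2.0):
    """KL(p_old || p_new), restricted to the classes the old model knows.

    Assumption: the new model's first len(old_logits) outputs correspond
    to the old classes, as is common when a classifier is expanded.
    """
    n_old = len(old_logits)
    p_old = softmax(old_logits, temperature)
    p_new = softmax(new_logits[:n_old], temperature)
    return sum(p * math.log(p / q) for p, q in zip(p_old, p_new) if p > 0)


old = [2.0, 0.5, -1.0]                    # old model: 3 classes
new_same = [2.0, 0.5, -1.0, 0.3, 0.1]     # new model agrees on old classes
new_shift = [0.0, 2.0, -1.0, 0.3, 0.1]    # new model has drifted

loss_same = logit_distillation_loss(old, new_same)
loss_shift = logit_distillation_loss(old, new_shift)
```

The loss vanishes when the new model reproduces the old model's distribution over old classes and grows as its predictions drift, which is the sense in which distillation keeps learning "aware of old classes".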

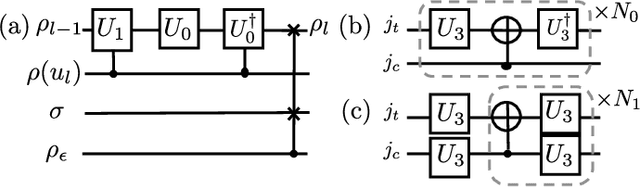

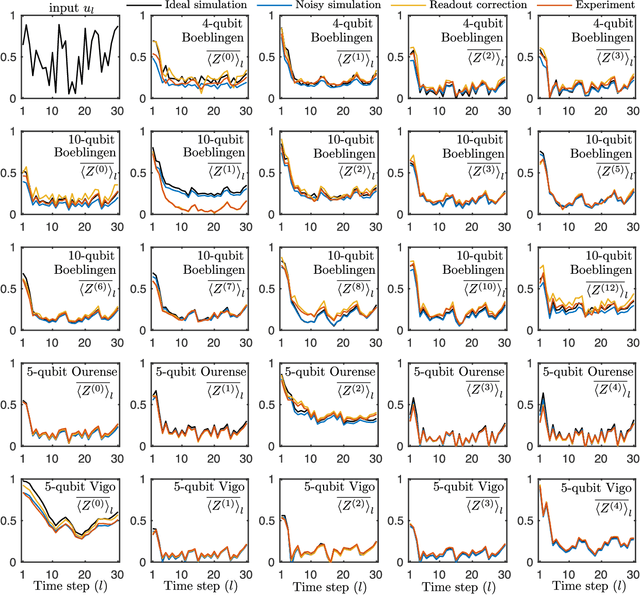

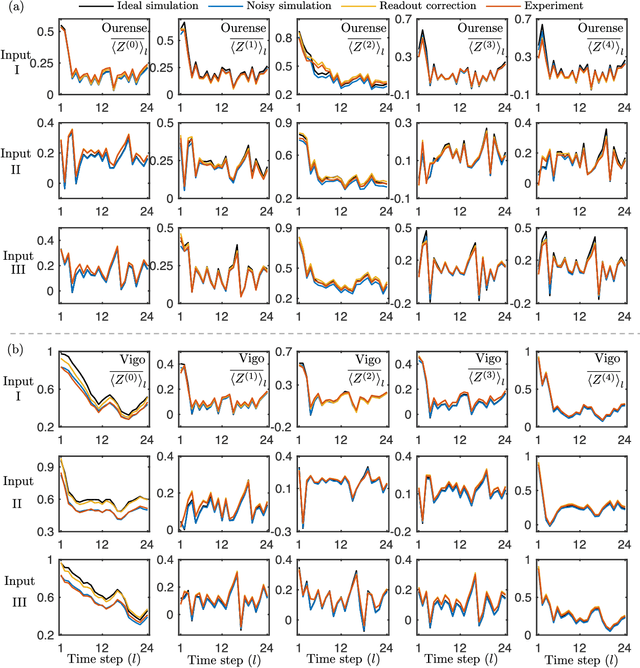

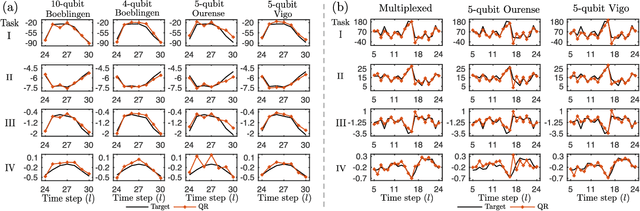

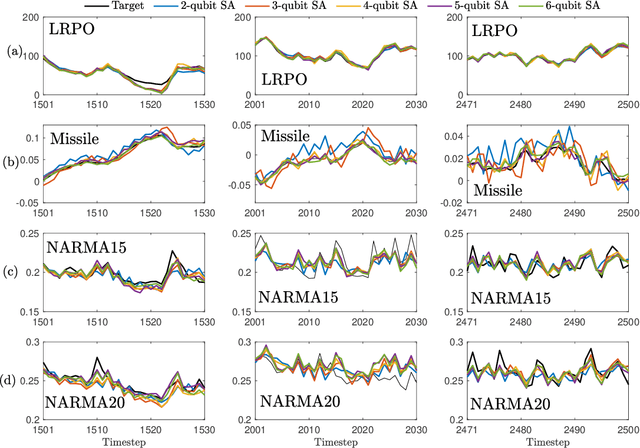

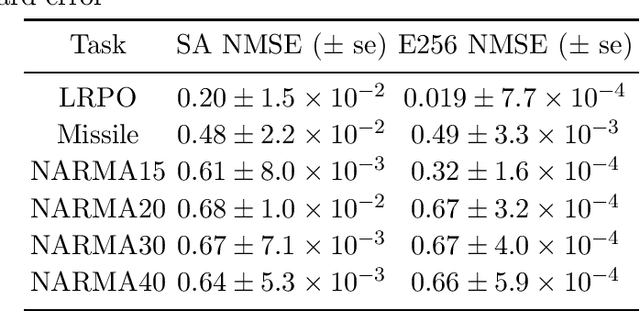

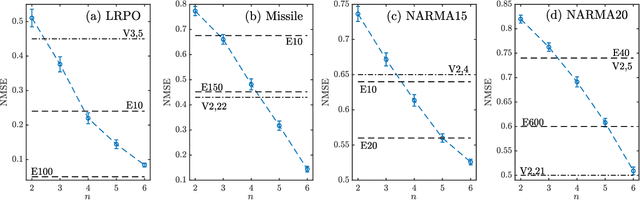

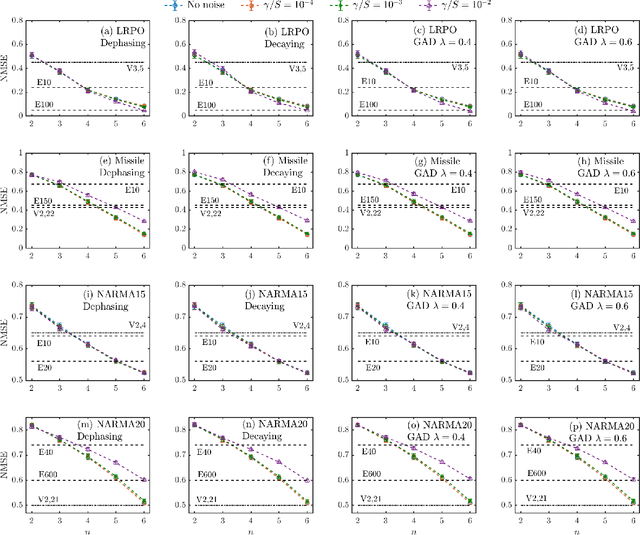

Temporal Information Processing on Noisy Quantum Computers

Jan 26, 2020

Abstract: The combination of machine learning and quantum computing has emerged as a promising approach for addressing previously untenable problems. Reservoir computing is a state-of-the-art machine learning paradigm that utilizes nonlinear dynamical systems for temporal information processing, and the state-space dimension of these systems plays a key role in performance. Here we propose a quantum reservoir system that harnesses complex dissipative quantum dynamics and the exponentially large quantum state-space. Our proposal is readily implementable on available noisy gate-model quantum processors and possesses universal computational power for approximating nonlinear short-term memory maps, which are important in applications such as neural modeling, speech recognition and natural language processing. We experimentally demonstrate on superconducting quantum computers that small and noisy quantum reservoirs can tackle high-order nonlinear temporal tasks. Our theoretical and experimental results pave the way for attractive temporal processing applications of near-term gate-model quantum computers of increasing fidelity but without quantum error correction, signifying the potential of these devices for wider applications beyond static classification and regression tasks in interdisciplinary areas.
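
For readers unfamiliar with reservoir computing, here is a minimal classical sketch of the paradigm the abstract builds on: a fixed, randomly initialized nonlinear dynamical system is driven by the input sequence, and only a linear readout is trained. This is a conventional echo-state-style reservoir in NumPy, not the quantum reservoir proposed in the paper; the node count, spectral radius, washout length and target map are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)


def run_reservoir(u, n_nodes=50, spectral_radius=0.9, input_scale=0.5):
    """Drive a fixed random tanh reservoir with the scalar sequence u."""
    W = rng.normal(size=(n_nodes, n_nodes))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius,
    # a common heuristic for giving the reservoir fading memory.
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    w_in = rng.uniform(-input_scale, input_scale, size=n_nodes)
    x = np.zeros(n_nodes)
    states = np.empty((len(u), n_nodes))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + w_in * u_t)
        states[t] = x
    return states


n_steps, washout = 500, 50
u = rng.uniform(0.0, 1.0, size=n_steps)
states = run_reservoir(u)

# Hypothetical nonlinear short-term memory task: y_t = u_{t-1} * u_{t-2}.
target = np.zeros(n_steps)
target[2:] = u[1:-1] * u[:-2]

# Train only the linear readout (plus a bias term) by least squares,
# discarding the initial transient.
X = np.hstack([states[washout:], np.ones((n_steps - washout, 1))])
y = target[washout:]
weights, *_ = np.linalg.lstsq(X, y, rcond=None)
train_mse = np.mean((X @ weights - y) ** 2)
```

The reservoir itself is never trained, which is why the approach transfers naturally to physical systems whose internal dynamics cannot be tuned directly.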

Learning Nonlinear Input-Output Maps with Dissipative Quantum Systems

Jan 07, 2019

Abstract: In this paper, we develop a theory of learning nonlinear input-output maps with fading memory by dissipative quantum systems, as a quantum counterpart of the theory of approximating such maps using classical dynamical systems. The theory identifies the properties required for a class of dissipative quantum systems to be *universal*, in that any input-output map with fading memory can be approximated arbitrarily closely by an element of this class. We then introduce an example class of dissipative quantum systems that is provably universal. Numerical experiments illustrate that with a small number of qubits, this class can achieve comparable performance to classical learning schemes with a large number of tunable parameters. Further numerical analysis suggests that the exponentially increasing Hilbert space presents a potential resource for dissipative quantum systems to surpass classical learning schemes for input-output maps.
