Jiayi Kong

Voronoi-Assisted Diffusion for Computing Unsigned Distance Fields from Unoriented Points

Oct 14, 2025

Abstract:Unsigned Distance Fields (UDFs) provide a flexible representation for 3D shapes with arbitrary topology, including open and closed surfaces, orientable and non-orientable geometries, and non-manifold structures. While recent neural approaches have shown promise in learning UDFs, they often suffer from numerical instability, high computational cost, and limited controllability. We present a lightweight, network-free method, Voronoi-Assisted Diffusion (VAD), for computing UDFs directly from unoriented point clouds. Our approach begins by assigning bi-directional normals to input points, guided by two Voronoi-based geometric criteria encoded in an energy function for optimal alignment. The aligned normals are then diffused to form an approximate UDF gradient field, which is subsequently integrated to recover the final UDF. Experiments demonstrate that VAD robustly handles watertight and open surfaces, as well as complex non-manifold and non-orientable geometries, while remaining computationally efficient and stable.

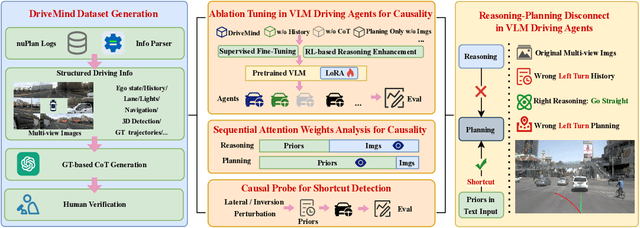

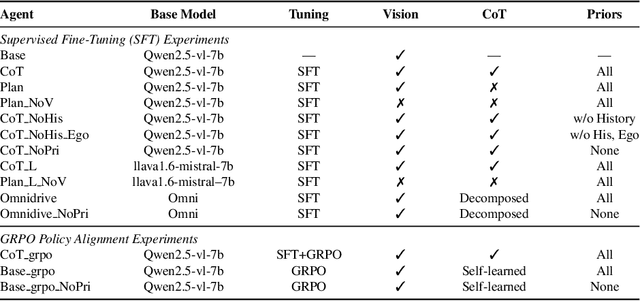

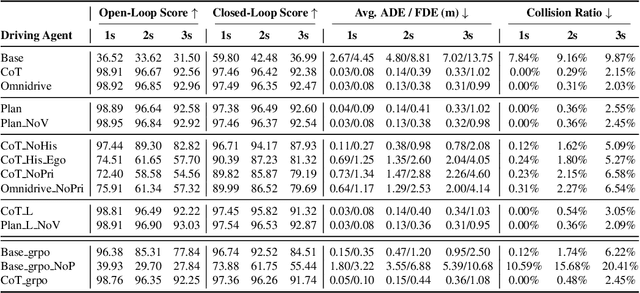

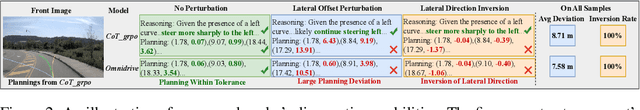

More Than Meets the Eye? Uncovering the Reasoning-Planning Disconnect in Training Vision-Language Driving Models

Oct 06, 2025

Abstract:Vision-Language Model (VLM) driving agents promise explainable end-to-end autonomy by first producing natural-language reasoning and then predicting trajectory planning. However, whether planning is causally driven by this reasoning remains a critical but unverified assumption. To investigate this, we build DriveMind, a large-scale driving Visual Question Answering (VQA) corpus with plan-aligned Chain-of-Thought (CoT), automatically generated from nuPlan. Our data generation process converts sensors and annotations into structured inputs and, crucially, separates priors from to-be-reasoned signals, enabling clean information ablations. Using DriveMind, we train representative VLM agents with Supervised Fine-Tuning (SFT) and Group Relative Policy Optimization (GRPO) and evaluate them with nuPlan's metrics. Our results, unfortunately, indicate a consistent causal disconnect in reasoning-planning: removing ego/navigation priors causes large drops in planning scores, whereas removing CoT produces only minor changes. Attention analysis further shows that planning primarily focuses on priors rather than the CoT. Based on this evidence, we propose the Reasoning-Planning Decoupling Hypothesis, positing that the training-yielded reasoning is an ancillary byproduct rather than a causal mediator. To enable efficient diagnosis, we also introduce a novel, training-free probe that measures an agent's reliance on priors by evaluating its planning robustness against minor input perturbations. In summary, we provide the community with a new dataset and a diagnostic tool to evaluate the causal fidelity of future models.

Quasi-Medial Distance Field (Q-MDF): A Robust Method for Approximating and Discretizing Neural Medial Axis

Oct 23, 2024

Abstract:The medial axis, a lower-dimensional shape descriptor, plays an important role in the field of digital geometry processing. Despite its importance, robust computation of the medial axis transform from diverse inputs, especially point clouds with defects, remains a significant challenge. In this paper, we tackle the challenge by proposing a new implicit method that diverges from mainstream explicit medial axis computation techniques. Our key technical insight is that the difference between the signed distance field (SDF) and the medial field (MF) of a solid shape is the unsigned distance field (UDF) of the shape's medial axis. This allows for formulating medial axis computation as an implicit reconstruction problem. Utilizing a modified double covering method, we extract the medial axis as the zero level-set of the UDF. Extensive experiments show that our method has enhanced accuracy and robustness in learning a compact medial axis transform from thorny meshes and point clouds compared to existing methods.
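
The relation between the SDF, the MF, and the UDF of the medial axis can be checked by hand on the simplest possible shape. The sketch below is only an illustration, not the paper's method: it assumes a 2D disk of radius `R`, an SDF taken as positive inside the shape, and an MF equal to the radius of the maximal inscribed ball associated with each point. The function names and the sign convention are assumptions made for this example. For a disk the medial axis is the center point, so MF minus SDF should equal the distance to the center.

```python
import math
import random

R = 2.0  # disk radius; an arbitrary illustrative value


def sdf_disk(x, y, radius=R):
    """Distance to the boundary circle, taken positive inside the disk (assumed convention)."""
    return radius - math.hypot(x, y)


def medial_field_disk(x, y, radius=R):
    """Radius of the maximal inscribed ball associated with (x, y).

    For a disk there is a single medial ball (the disk itself),
    so the medial field is constant.
    """
    return radius


def udf_medial_axis_disk(x, y):
    """Unsigned distance to the medial axis, which for a disk is its center."""
    return math.hypot(x, y)


def max_identity_error(n_samples=1000, seed=0):
    """Largest deviation of (MF - SDF) from the medial-axis UDF over random interior points."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(n_samples):
        # Sample a point uniformly inside the disk using polar coordinates.
        r = R * math.sqrt(rng.random())
        theta = 2.0 * math.pi * rng.random()
        x, y = r * math.cos(theta), r * math.sin(theta)
        diff = medial_field_disk(x, y) - sdf_disk(x, y)
        worst = max(worst, abs(diff - udf_medial_axis_disk(x, y)))
    return worst


if __name__ == "__main__":
    print(max_identity_error())
```

On this toy shape the identity holds up to floating-point rounding. General shapes have a non-constant medial field, and nothing here says how the paper estimates it.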

Identity-Obscured Neural Radiance Fields: Privacy-Preserving 3D Facial Reconstruction

Dec 07, 2023

Abstract:Neural radiance fields (NeRF) typically require a complete set of images taken from multiple camera perspectives to accurately reconstruct geometric details. However, this approach raises significant privacy concerns in the context of facial reconstruction. The critical need for privacy protection often leads individuals to be reluctant to share their facial images, due to fears of potential misuse or security risks. Addressing these concerns, we propose a method that leverages privacy-preserving images for reconstructing 3D head geometry within the NeRF framework. Our method stands apart from traditional facial reconstruction techniques as it does not depend on RGB information from images containing sensitive facial data. Instead, it effectively generates plausible facial geometry using a series of identity-obscured inputs, thereby protecting facial privacy.
