Jianpeng Yao

CommCP: Efficient Multi-Agent Coordination via LLM-Based Communication with Conformal Prediction

Feb 05, 2026

Abstract: To complete assignments provided by humans in natural language, robots must interpret commands, generate and answer relevant questions for scene understanding, and manipulate target objects. Real-world deployments often require multiple heterogeneous robots with different manipulation capabilities to handle different assignments cooperatively. Beyond the need for specialized manipulation skills, effective information gathering is important in completing these assignments. To address this component of the problem, we formalize the information-gathering process in a fully cooperative setting as an underexplored multi-agent multi-task Embodied Question Answering (MM-EQA) problem, which is a novel extension of canonical Embodied Question Answering (EQA), where effective communication is crucial for coordinating efforts without redundancy. To address this problem, we propose CommCP, a novel LLM-based decentralized communication framework designed for MM-EQA. Our framework employs conformal prediction to calibrate the generated messages, thereby minimizing receiver distractions and enhancing communication reliability. To evaluate our framework, we introduce an MM-EQA benchmark featuring diverse, photo-realistic household scenarios with embodied questions. Experimental results demonstrate that CommCP significantly enhances the task success rate and exploration efficiency over baselines. The experiment videos, code, and dataset are available on our project website: https://comm-cp.github.io.

Towards Generalizable Safety in Crowd Navigation via Conformal Uncertainty Handling

Aug 07, 2025

Abstract: Mobile robots navigating in crowds trained using reinforcement learning are known to suffer performance degradation when faced with out-of-distribution scenarios. We propose that by properly accounting for the uncertainties of pedestrians, a robot can learn safe navigation policies that are robust to distribution shifts. Our method augments agent observations with prediction uncertainty estimates generated by adaptive conformal inference, and it uses these estimates to guide the agent's behavior through constrained reinforcement learning. The system helps regulate the agent's actions and enables it to adapt to distribution shifts. In the in-distribution setting, our approach achieves a 96.93% success rate, which is over 8.80% higher than the previous state-of-the-art baselines with over 3.72 times fewer collisions and 2.43 times fewer intrusions into ground-truth human future trajectories. In three out-of-distribution scenarios, our method shows much stronger robustness when facing distribution shifts in velocity variations, policy changes, and transitions from individual to group dynamics. We deploy our method on a real robot, and experiments show that the robot makes safe and robust decisions when interacting with both sparse and dense crowds. Our code and videos are available on https://gen-safe-nav.github.io/.

SoNIC: Safe Social Navigation with Adaptive Conformal Inference and Constrained Reinforcement Learning

Jul 24, 2024

Abstract: Reinforcement Learning (RL) has enabled social robots to generate trajectories without human-designed rules or interventions, which makes it more effective than hard-coded systems for generalizing to complex real-world scenarios. However, social navigation is a safety-critical task that requires robots to avoid collisions with pedestrians, and previous RL-based solutions fall short in safety performance in complex environments. To enhance the safety of RL policies, we propose SoNIC, which is, to the best of our knowledge, the first algorithm that integrates adaptive conformal inference (ACI) with constrained reinforcement learning (CRL) to learn safe policies for social navigation. More specifically, our method augments RL observations with ACI-generated nonconformity scores and provides explicit guidance for agents to leverage the uncertainty metrics to avoid safety-critical areas by incorporating safety constraints with spatial relaxation. Our method outperforms state-of-the-art baselines in terms of both safety and adherence to social norms by a large margin and demonstrates much stronger robustness to out-of-distribution scenarios. Our code and video demos are available on our project website: https://sonic-social-nav.github.io/.
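For readers unfamiliar with adaptive conformal inference, the following is a minimal sketch of the standard ACI update (Gibbs and Candès), not the SoNIC implementation. It assumes scalar nonconformity scores such as a prediction error in meters; the function names, the warm-up length, and the default step size `gamma` are hypothetical choices made for illustration.

```python
import math


def aci_step(alpha_t, target_alpha, covered, gamma=0.05):
    """One ACI update: alpha_{t+1} = alpha_t + gamma * (target_alpha - err_t).

    err_t is 1 when the last prediction region missed the true outcome, else 0.
    A miss lowers alpha_t, which widens the next region.
    """
    err = 0.0 if covered else 1.0
    return alpha_t + gamma * (target_alpha - err)


def empirical_quantile(scores, level):
    """Return the ceil(level * n)-th smallest score, with level clipped to [0, 1].

    Simplification (assumption): textbook ACI uses an infinite region when the
    level exceeds 1; here the level is clipped, so the largest score is returned.
    """
    if not scores:
        raise ValueError("scores must be non-empty")
    level = min(max(level, 0.0), 1.0)
    ordered = sorted(scores)
    k = max(1, math.ceil(level * len(ordered)))
    return ordered[k - 1]


def run_aci(scores, target_alpha=0.1, gamma=0.05, warmup=10):
    """Replay a stream of nonconformity scores through ACI.

    At each step the region radius is the (1 - alpha_t) quantile of all past
    scores; the new score then tells us whether that region covered the outcome.
    Returns the list of (radius, covered) pairs and the final alpha_t.
    """
    alpha_t = target_alpha
    history = []
    for t in range(warmup, len(scores)):
        radius = empirical_quantile(scores[:t], 1.0 - alpha_t)
        covered = scores[t] <= radius
        history.append((radius, covered))
        alpha_t = aci_step(alpha_t, target_alpha, covered, gamma)
    return history, alpha_t
```

In a navigation setting, the radius computed at each step could be appended to the agent's observation as an uncertainty estimate; how the abstract's method does this in detail is not specified here.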

Adaptive actuation of magnetic soft robots using deep reinforcement learning

Apr 25, 2022

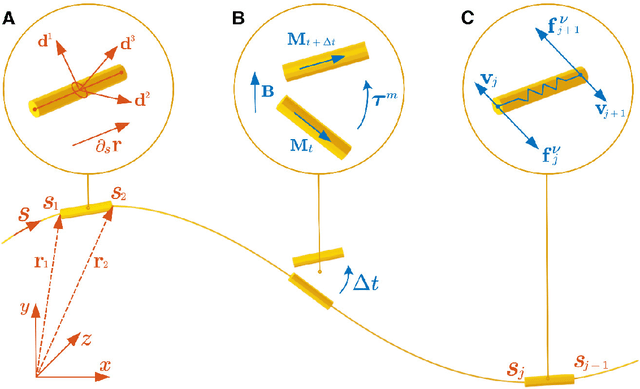

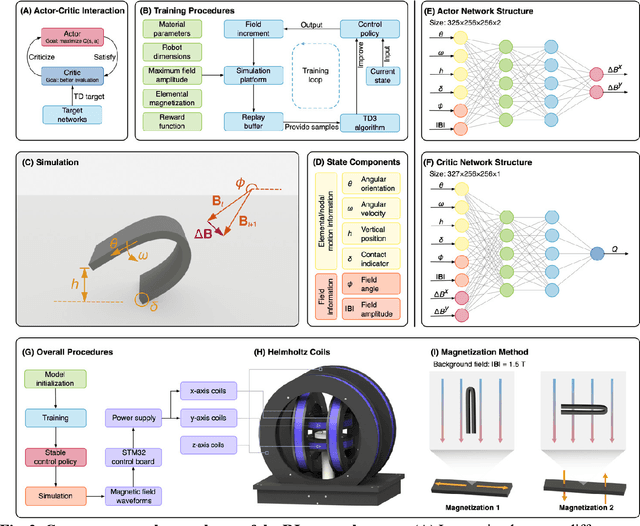

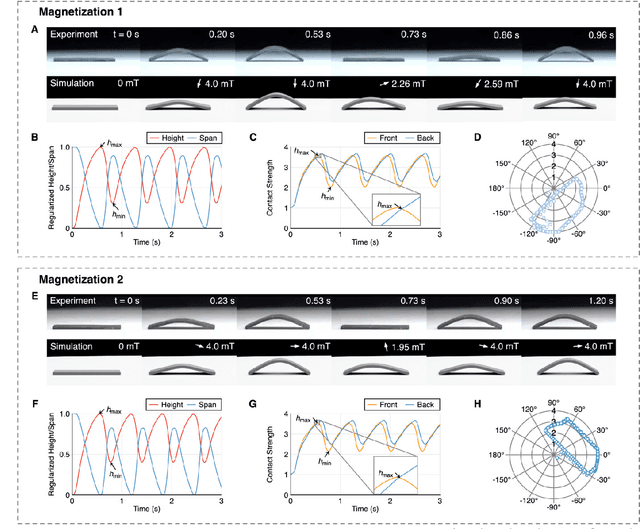

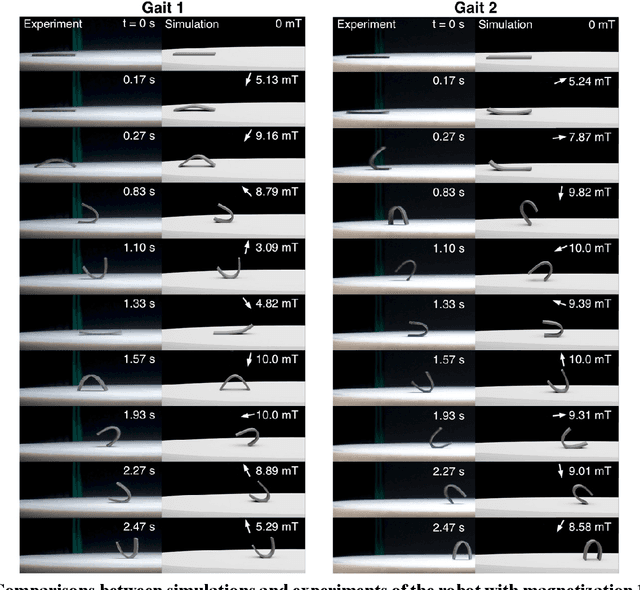

Abstract: Magnetic soft robots have attracted growing interest due to their unique advantages in terms of untethered actuation and excellent controllability. However, finding the required magnetization patterns or magnetic fields to achieve the desired functions of these robots is quite challenging in many cases. No unified framework for design has been proposed yet, and existing methods mainly rely on manual heuristics, which struggle to meet the high complexity of the desired robotic motion. Here, we develop an intelligent method to solve the related inverse-design problems, implemented by introducing a novel simulation platform for magnetic soft robots based on Cosserat rod models and a deep reinforcement learning framework based on TD3. We demonstrate that magnetic soft robots with different magnetization patterns can learn to move without human guidance in simulations, and effective magnetic fields can be autonomously generated that can then be applied directly to real magnetic soft robots in an open-loop way.