Jennifer Chu-Carroll

Bell Laboratories

LLM-ARC: Enhancing LLMs with an Automated Reasoning Critic

Jun 25, 2024

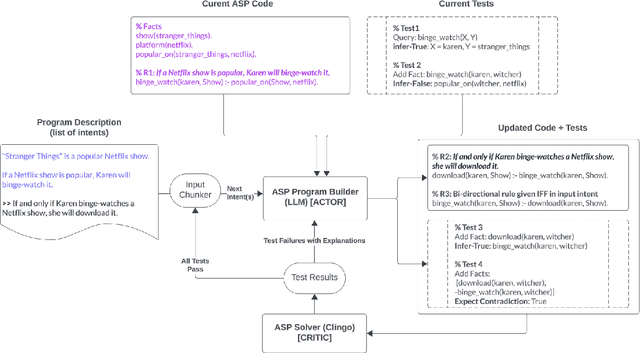

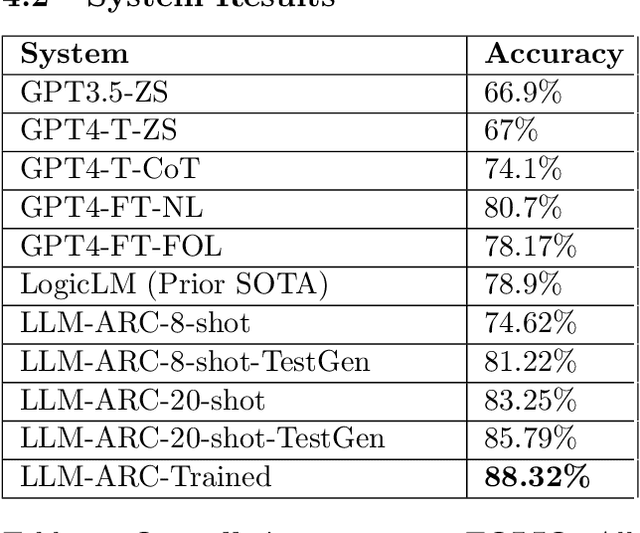

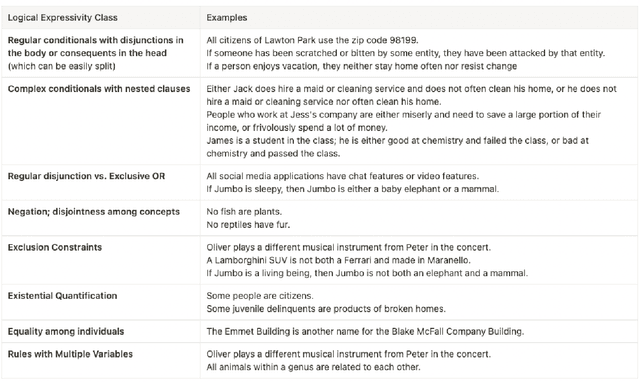

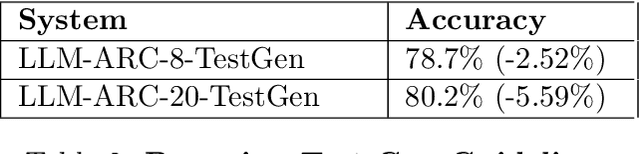

Abstract: We introduce LLM-ARC, a neuro-symbolic framework designed to enhance the logical reasoning capabilities of Large Language Models (LLMs) by combining them with an Automated Reasoning Critic (ARC). LLM-ARC employs an Actor-Critic method in which the LLM Actor generates declarative logic programs along with tests for semantic correctness, while the Automated Reasoning Critic evaluates the code, runs the tests, and provides feedback on test failures for iterative refinement. Implemented using Answer Set Programming (ASP), LLM-ARC achieves a new state-of-the-art accuracy of 88.32% on the FOLIO benchmark, which tests complex logical reasoning capabilities. Our experiments demonstrate significant improvements over LLM-only baselines, highlighting the importance of logic test generation and iterative self-refinement. We achieve our best result using a fully automated self-supervised training loop in which the Actor is trained on end-to-end dialog traces with Critic feedback. We discuss potential enhancements and provide a detailed error analysis, showcasing the robustness and efficacy of LLM-ARC for complex natural language reasoning tasks.
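
The generate, test, and refine loop described in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the names `critic`, `refine`, `toy_actor`, and `toy_tests` are hypothetical, the Actor is a hand-written stub rather than an LLM, the tests are supplied up front rather than generated by the Actor, and the Critic checks for rule strings instead of running an ASP solver.

```python
from typing import Callable, List, Tuple

# Hypothetical types for illustration: a program is a string of ASP-like
# rules, and a test is a named predicate over that string.
Program = str
Test = Tuple[str, Callable[[Program], bool]]
Actor = Callable[[List[str]], Program]


def critic(program: Program, tests: List[Test]) -> List[str]:
    """Run every test and return the names of the tests that fail."""
    return [name for name, check in tests if not check(program)]


def refine(actor: Actor, tests: List[Test],
           max_rounds: int = 3) -> Tuple[Program, int, List[str]]:
    """Alternate Actor generation and Critic feedback until all tests pass
    or the round budget is exhausted."""
    feedback: List[str] = []
    program = ""
    rounds = 0
    for rounds in range(1, max_rounds + 1):
        program = actor(feedback)           # Actor (re)generates the program
        feedback = critic(program, tests)   # Critic reports failing tests
        if not feedback:
            break
    return program, rounds, feedback


def toy_actor(feedback: List[str]) -> Program:
    """Stub Actor (assumption): adds the missing rule only when told about it."""
    rules = ["bird(tweety)."]
    if "has_flies_rule" in feedback:
        rules.append("flies(X) :- bird(X).")
    return "\n".join(rules)


toy_tests: List[Test] = [
    ("has_bird_fact", lambda p: "bird(tweety)." in p),
    ("has_flies_rule", lambda p: "flies(X) :- bird(X)." in p),
]

program, rounds, failures = refine(toy_actor, toy_tests)
print(rounds, failures)  # prints: 2 []
```

In this toy run the first draft fails one test, the failure name is passed back to the Actor, and the second draft passes. A real system would replace the string checks with solver calls.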

Beyond LLMs: Advancing the Landscape of Complex Reasoning

Feb 12, 2024

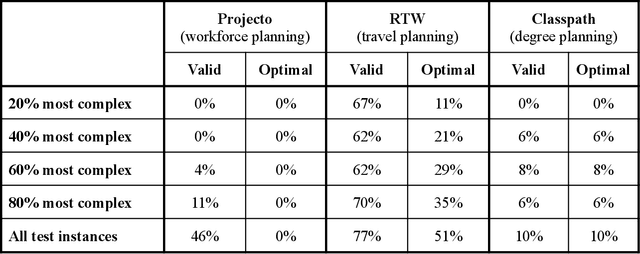

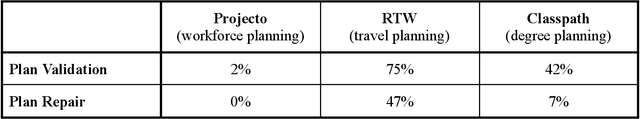

Abstract: Since the advent of Large Language Models a few years ago, they have often been considered the de facto solution for many AI problems. However, in addition to the many deficiencies of LLMs that prevent them from broad industry adoption, such as reliability, cost, and speed, there is a whole class of common real-world problems that Large Language Models perform poorly on, namely, constraint satisfaction and optimization problems. These problems are ubiquitous, and current solutions are highly specialized and expensive to implement. At Elemental Cognition, we developed our EC AI platform, which takes a neuro-symbolic approach to solving constraint satisfaction and optimization problems. The platform employs, at its core, a precise and high-performance logical reasoning engine, and leverages LLMs for knowledge acquisition and user interaction. This platform supports developers in specifying application logic in natural and concise language while generating application user interfaces to interact with users effectively. We evaluated LLMs against systems built on the EC AI platform in three domains and found the EC AI systems to significantly outperform LLMs on constructing valid and optimal solutions, on validating proposed solutions, and on repairing invalid solutions.

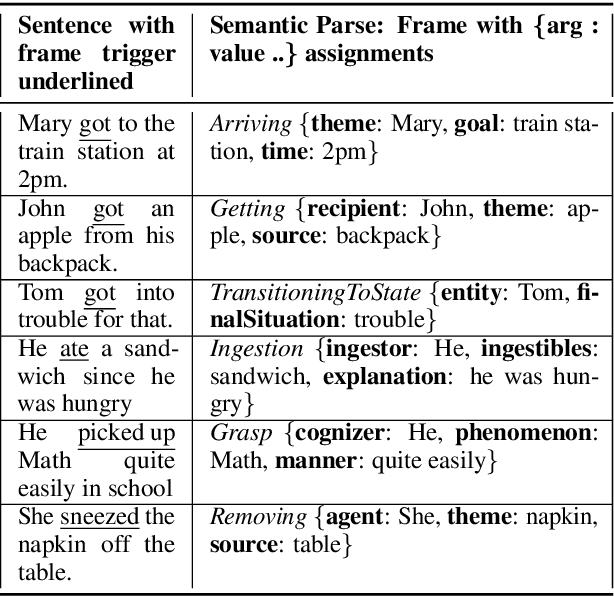

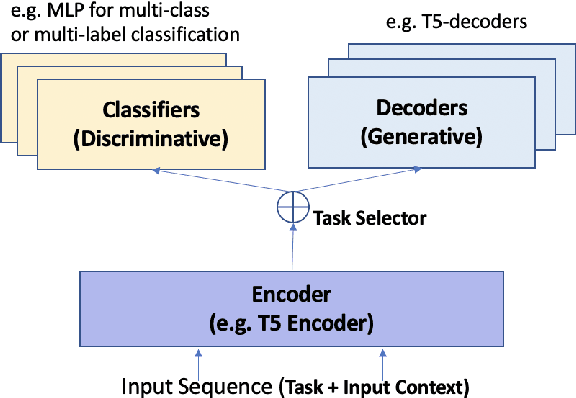

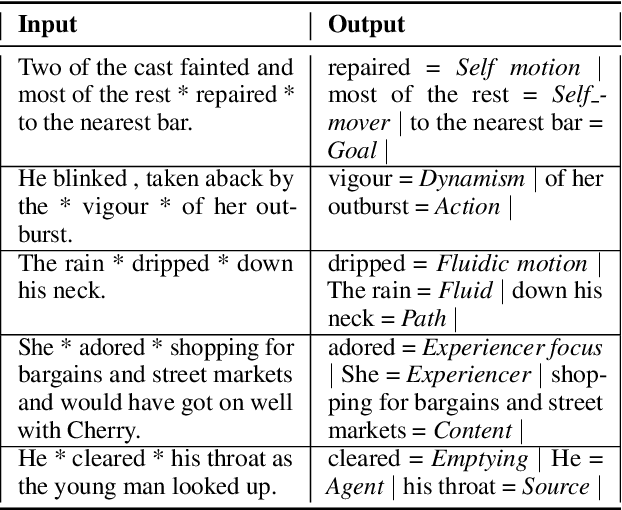

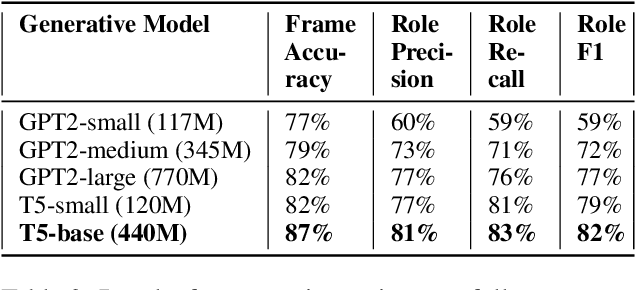

Open-Domain Frame Semantic Parsing Using Transformers

Oct 23, 2020

Abstract: Frame semantic parsing is a complex problem which includes multiple underlying subtasks. Recent approaches have employed joint learning of subtasks (such as predicate and argument detection), and multi-task learning of related tasks (such as syntactic and semantic parsing). In this paper, we explore multi-task learning of all subtasks with transformer-based models. We show that a purely generative encoder-decoder architecture handily beats the previous state of the art in FrameNet 1.7 parsing, and that a mixed decoding multi-task approach achieves even better performance. Finally, we show that the multi-task model also outperforms recent state-of-the-art systems for PropBank SRL parsing on the CoNLL 2012 benchmark.

GLUCOSE: GeneraLized and COntextualized Story Explanations

Sep 16, 2020

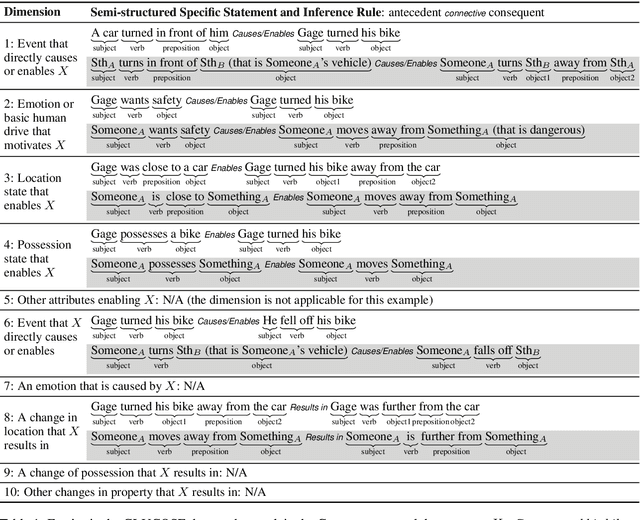

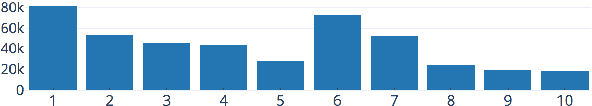

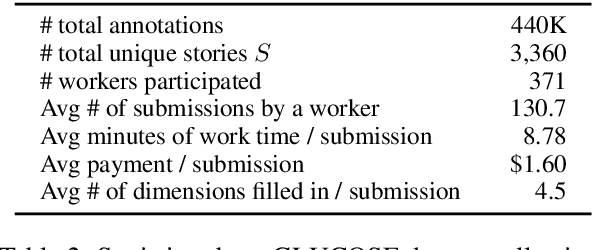

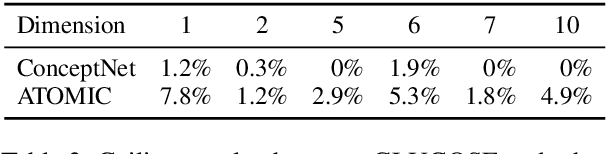

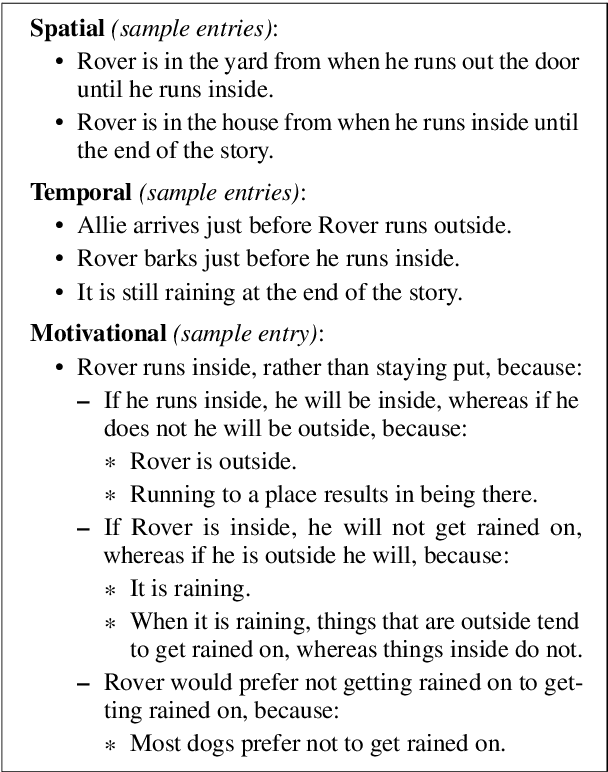

Abstract: When humans read or listen, they make implicit commonsense inferences that frame their understanding of what happened and why. As a step toward AI systems that can build similar mental models, we introduce GLUCOSE, a large-scale dataset of implicit commonsense causal knowledge, encoded as causal mini-theories about the world, each grounded in a narrative context. To construct GLUCOSE, we drew on cognitive psychology to identify ten dimensions of causal explanation, focusing on events, states, motivations, and emotions. Each GLUCOSE entry includes a story-specific causal statement paired with an inference rule generalized from the statement. This paper details two concrete contributions: First, we present our platform for effectively crowdsourcing GLUCOSE data at scale, which uses semi-structured templates to elicit causal explanations. Using this platform, we collected 440K specific statements and general rules that capture implicit commonsense knowledge about everyday situations. Second, we show that existing knowledge resources and pretrained language models do not include or readily predict GLUCOSE's rich inferential content. However, when state-of-the-art neural models are trained on this knowledge, they can start to make commonsense inferences on unseen stories that match humans' mental models.

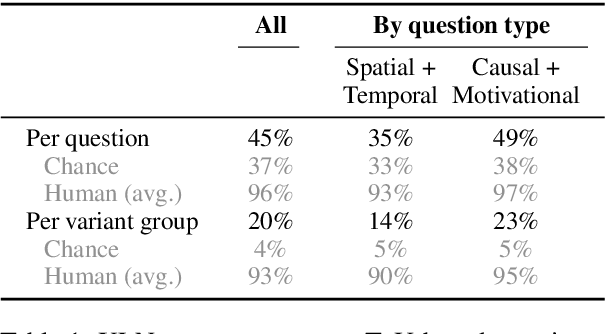

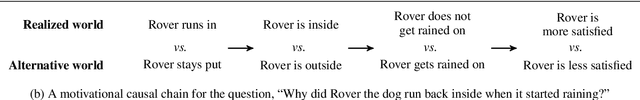

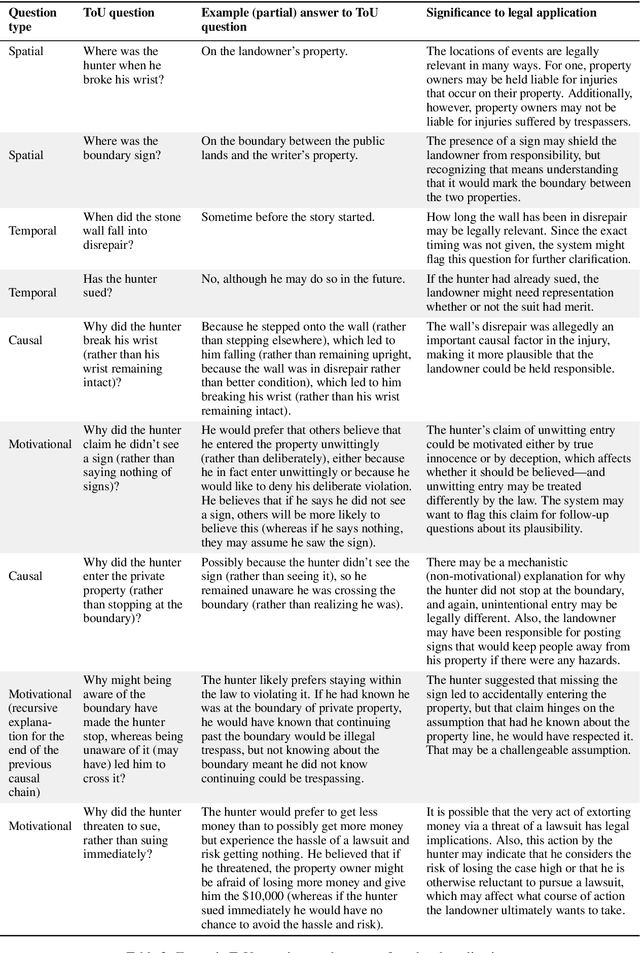

To Test Machine Comprehension, Start by Defining Comprehension

May 11, 2020

Abstract: Many tasks aim to measure machine reading comprehension (MRC), often focusing on question types presumed to be difficult. Rarely, however, do task designers start by considering what systems should in fact comprehend. In this paper we make two key contributions. First, we argue that existing approaches do not adequately define comprehension; they are too unsystematic about what content is tested. Second, we present a detailed definition of comprehension (a "Template of Understanding") for a widely useful class of texts, namely short narratives. We then conduct an experiment that strongly suggests existing systems are not up to the task of narrative understanding as we define it.

Tracking Initiative in Collaborative Dialogue Interactions

Apr 17, 1997

Abstract: In this paper, we argue for the need to distinguish between task and dialogue initiatives, and present a model for tracking shifts in both types of initiative in dialogue interactions. Our model predicts the initiative holders in the next dialogue turn based on the current initiative holders and the effect that observed cues have on changing them. Our evaluation across various corpora shows that the use of cues consistently improves the accuracy of the system's prediction of task and dialogue initiative holders by 2-4 and 8-13 percentage points, respectively, thus illustrating the generality of our model.

* 9 pages, uses psfig, and times

Generating Information-Sharing Subdialogues in Expert-User Consultation

Jan 06, 1997

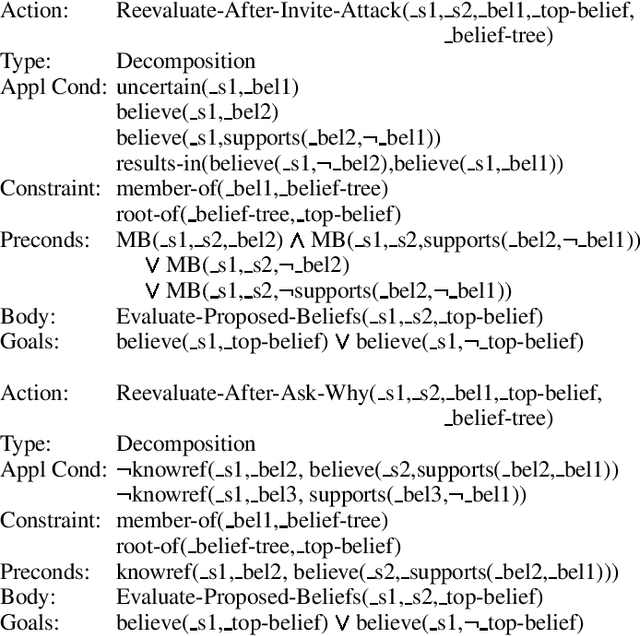

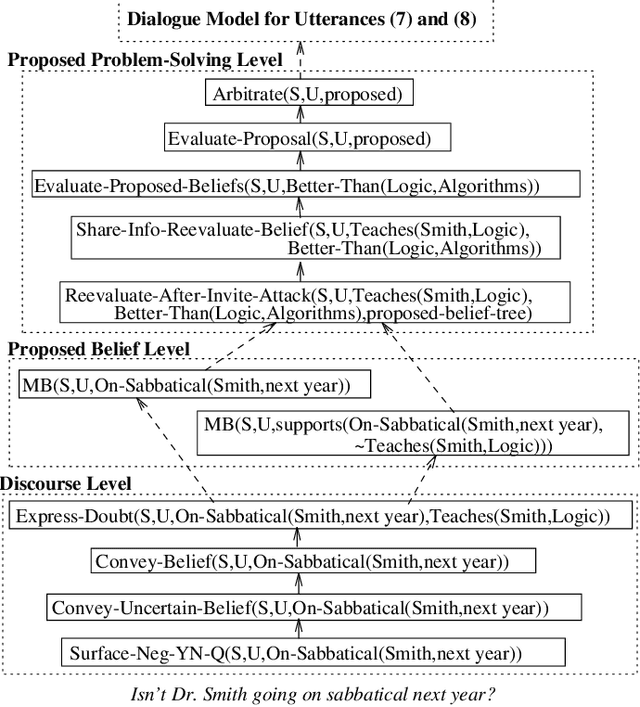

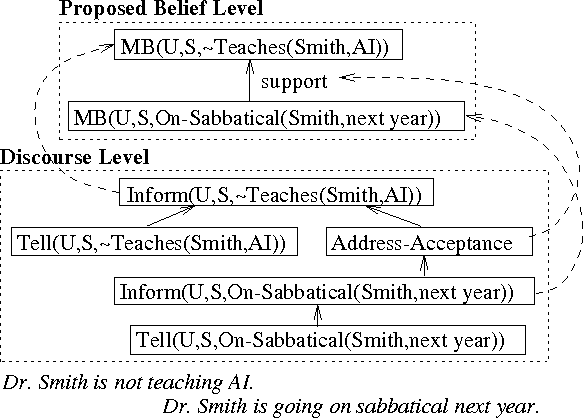

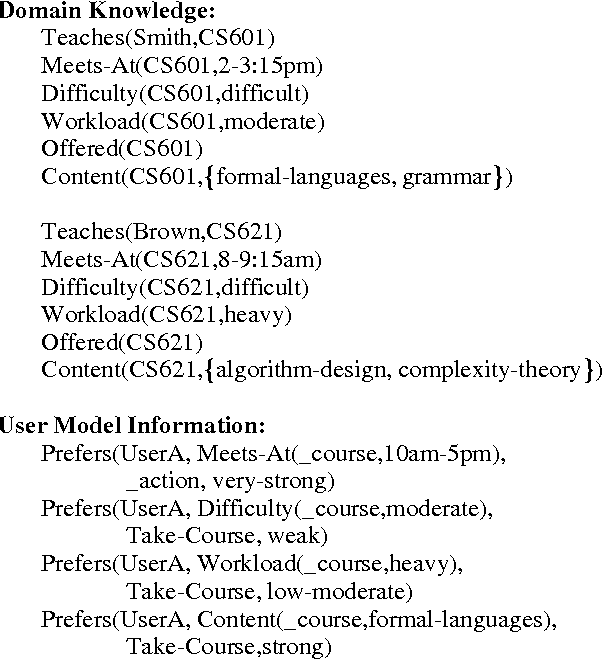

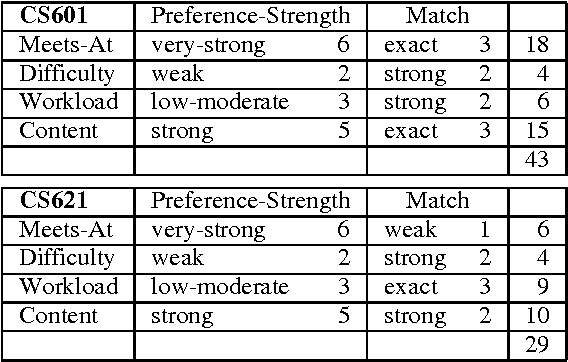

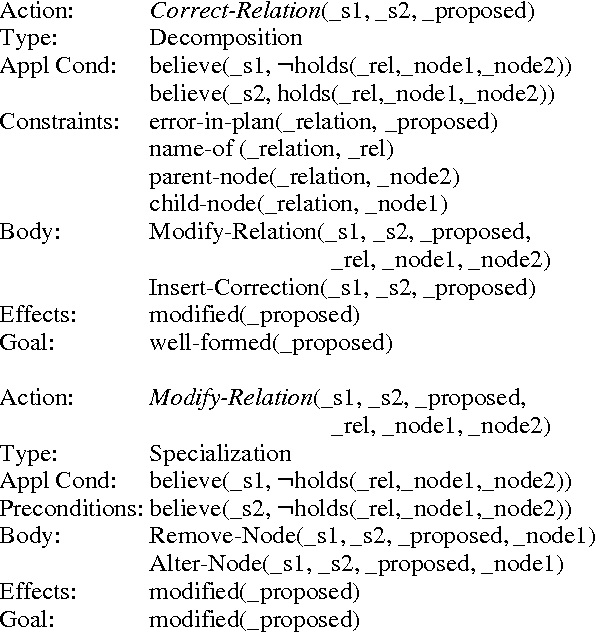

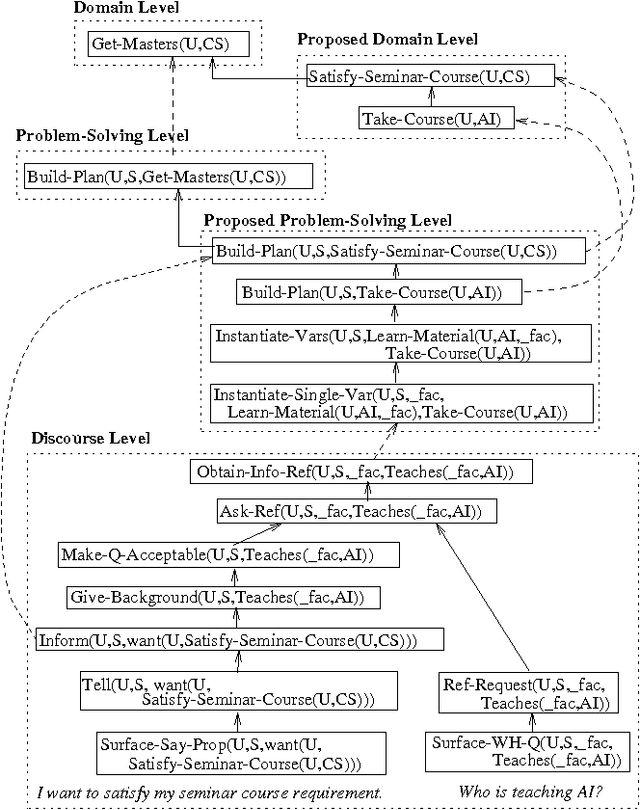

Abstract: In expert-consultation dialogues, it is inevitable that an agent will at times have insufficient information to determine whether to accept or reject a proposal by the other agent. This results in the need for the agent to initiate an information-sharing subdialogue to form a set of shared beliefs within which the agents can effectively re-evaluate the proposal. This paper presents a computational strategy for initiating such information-sharing subdialogues to resolve the system's uncertainty regarding the acceptance of a user proposal. Our model determines when information-sharing should be pursued, selects a focus of information-sharing among multiple uncertain beliefs, chooses the most effective information-sharing strategy, and utilizes the newly obtained information to re-evaluate the user proposal. Furthermore, our model is capable of handling embedded information-sharing subdialogues.

* 9 pages, 1 figure; uses epsf.sty, times.sty, and named

Response Generation in Collaborative Negotiation

May 01, 1995

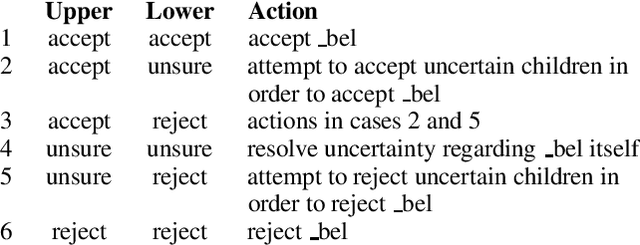

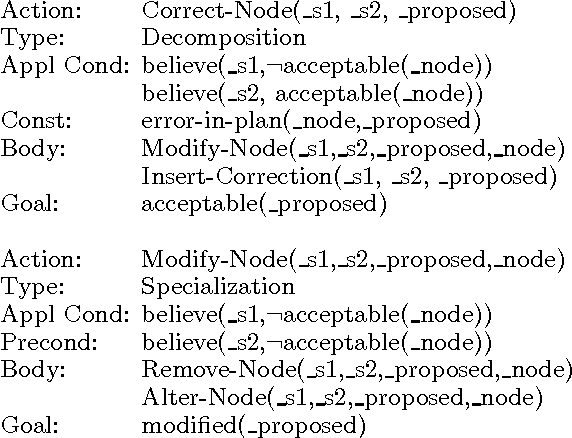

Abstract: In collaborative planning activities, since the agents are autonomous and heterogeneous, it is inevitable that conflicts arise in their beliefs during the planning process. In cases where such conflicts are relevant to the task at hand, the agents should engage in collaborative negotiation in an attempt to square away the discrepancies in their beliefs. This paper presents a computational strategy for detecting conflicts regarding proposed beliefs and for engaging in collaborative negotiation to resolve the conflicts that warrant resolution. Our model is capable of selecting the most effective aspect to address in its pursuit of conflict resolution in cases where multiple conflicts arise, and of selecting appropriate evidence to justify the need for such modification. Furthermore, by capturing the negotiation process in a recursive Propose-Evaluate-Modify cycle of actions, our model can successfully handle embedded negotiation subdialogues.

A Plan-Based Model for Response Generation in Collaborative Task-Oriented Dialogues

May 06, 1994

Abstract: This paper presents a plan-based architecture for response generation in collaborative consultation dialogues, with emphasis on cases in which the system (consultant) and user (executing agent) disagree. Our work contributes to an overall system for collaborative problem-solving by providing a plan-based framework that captures the Propose-Evaluate-Modify cycle of collaboration, and by allowing the system to initiate subdialogues to negotiate proposed additions to the shared plan and to provide support for its claims. In addition, our system handles in a unified manner the negotiation of proposed domain actions, proposed problem-solving actions, and beliefs proposed by discourse actions. Furthermore, it captures cooperative responses within the collaborative framework and accounts for why questions are sometimes never answered.
