Jason Brown

GLL: A Differentiable Graph Learning Layer for Neural Networks

Dec 11, 2024

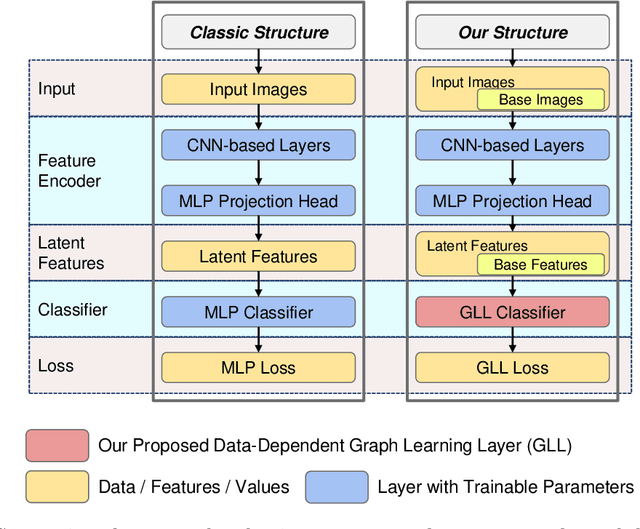

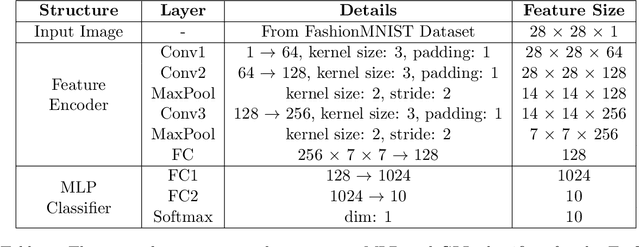

Abstract: Standard deep learning architectures used for classification generate label predictions with a projection head and softmax activation function. Although successful, these methods fail to leverage the relational information between samples in the batch for generating label predictions. In recent works, graph-based learning techniques, namely Laplace learning, have been heuristically combined with neural networks for both supervised and semi-supervised learning (SSL) tasks. However, prior works approximate the gradient of the loss function with respect to the graph learning algorithm or decouple the processes; end-to-end integration with neural networks is not achieved. In this work, we derive backpropagation equations, via the adjoint method, for inclusion of a general family of graph learning layers into a neural network. This allows us to precisely integrate graph Laplacian-based label propagation into a neural network layer, replacing a projection head and softmax activation function for classification tasks. Using this new framework, our experimental results demonstrate smooth label transitions across data, improved robustness to adversarial attacks, improved generalization, and improved training dynamics compared to the standard softmax-based approach.
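For readers unfamiliar with Laplace learning, the following is a minimal NumPy sketch of the classical forward computation only: labels on a few nodes are propagated to the rest by solving a linear system in the graph Laplacian. It is not the paper's GLL layer and does not include the adjoint-based backward pass; the function name `laplace_learning`, its arguments, and the toy path graph are all assumptions made for illustration.

```python
import numpy as np

def laplace_learning(W, labeled_idx, labels, num_classes):
    """Hypothetical helper: classical Laplace learning (harmonic label propagation).

    W           : (n, n) symmetric non-negative weight matrix of the graph
    labeled_idx : indices of labeled nodes
    labels      : integer class labels, in the same order as labeled_idx
    Returns an (n, num_classes) array of class scores for every node.
    """
    n = W.shape[0]
    labeled_idx = np.asarray(labeled_idx)
    unlabeled_idx = np.setdiff1d(np.arange(n), labeled_idx)

    # Unnormalized graph Laplacian L = D - W.
    L = np.diag(W.sum(axis=1)) - W

    # One-hot encoding of the known labels.
    Y = np.eye(num_classes)[np.asarray(labels)]

    # Harmonic extension: solve L_uu U_u = W_ul Y for the unlabeled nodes.
    L_uu = L[np.ix_(unlabeled_idx, unlabeled_idx)]
    W_ul = W[np.ix_(unlabeled_idx, labeled_idx)]

    U = np.zeros((n, num_classes))
    U[labeled_idx] = Y
    U[unlabeled_idx] = np.linalg.solve(L_uu, W_ul @ Y)
    return U

# Toy example (assumed): a path graph 0-1-2-3 with the two endpoints labeled.
W = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
U = laplace_learning(W, labeled_idx=[0, 3], labels=[0, 1], num_classes=2)
predictions = U.argmax(axis=1)
```

On this toy graph the scores of the two interior nodes interpolate between the labeled endpoints, which is the kind of smooth label transition the abstract refers to.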

Learning Surface Terrain Classifications from Ground Penetrating Radar

Apr 13, 2024

Abstract: Terrain classification is an important problem for mobile robots operating in extreme environments as it can aid downstream tasks such as autonomous navigation and planning. While RGB cameras are widely used for terrain identification, vision-based methods can suffer due to poor lighting conditions and occlusions. In this paper, we propose the novel use of Ground Penetrating Radar (GPR) for terrain characterization for mobile robot platforms. Our approach leverages machine learning for surface terrain classification from GPR data. We collect a new dataset consisting of four different terrain types, and present qualitative and quantitative results. Our results demonstrate that classification networks can learn terrain categories from GPR signals. Additionally, we integrate our GPR-based classification approach into a multimodal semantic mapping framework to demonstrate a practical use case of GPR for surface terrain classification on mobile robots. Overall, this work extends the usability of GPR sensors deployed on robots to enable terrain classification in addition to GPR's existing scientific use cases.

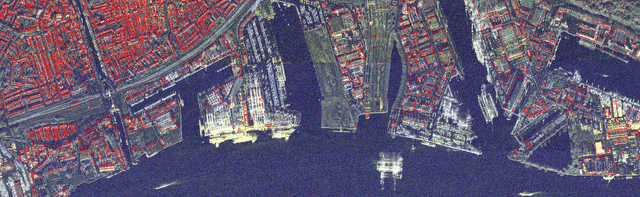

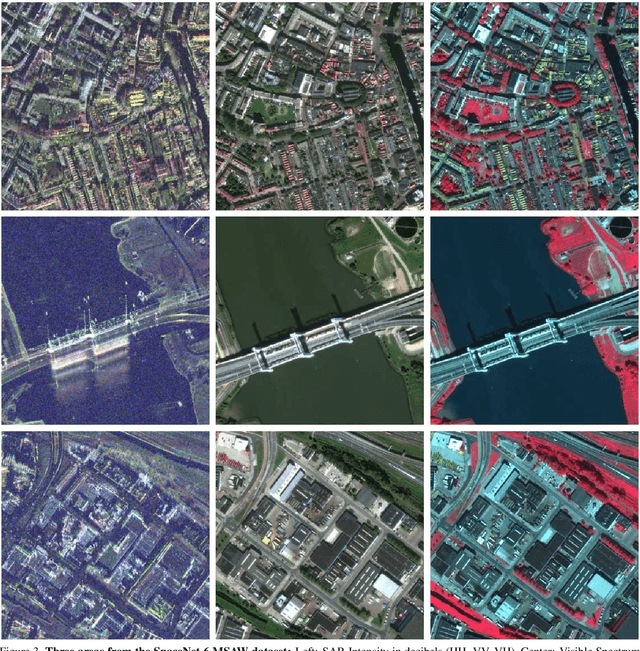

SpaceNet 6: Multi-Sensor All Weather Mapping Dataset

Apr 14, 2020

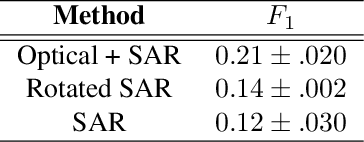

Abstract: Within the remote sensing domain, a diverse set of acquisition modalities exists, each with its own unique strengths and weaknesses. Yet, most of the current literature and open datasets only deal with electro-optical (optical) data for different detection and segmentation tasks at high spatial resolutions. Optical data is often the preferred choice for geospatial applications, but requires clear skies and little cloud cover to work well. Conversely, Synthetic Aperture Radar (SAR) sensors have the unique capability to penetrate clouds and collect in all-weather, day and night conditions. Consequently, SAR data are particularly valuable in the quest to aid disaster response, when weather and cloud cover can obstruct traditional optical sensors. Despite all of these advantages, there is little open data available to researchers to explore the effectiveness of SAR for such applications, particularly at very-high spatial resolutions, i.e., <1 m Ground Sample Distance (GSD). To address this problem, we present an open Multi-Sensor All Weather Mapping (MSAW) dataset and challenge, which features two collection modalities (both SAR and optical). The dataset and challenge focus on mapping and building footprint extraction using a combination of these data sources. MSAW covers 120 km^2 over multiple overlapping collects and is annotated with over 48,000 unique building footprint labels, enabling the creation and evaluation of mapping algorithms for multi-modal data. We present a baseline and benchmark for building footprint extraction with SAR data and find that state-of-the-art segmentation models pre-trained on optical data and then trained on SAR (F1 score of 0.21) outperform those trained on SAR data alone (F1 score of 0.135).
